This Week

Ethics

On Sunday, I met with my group to discuss the ethical considerations of our project, and on Monday we discussed them again with our classmates to get an outside perspective.

Secondary UI (Sheet Music Updates)

I downloaded the code I identified last week as a candidate for MIDI to sheet music conversion [1][2], along with Mono, the framework the author used [3]. I had to make one simple edit to the makefile for the program to run, but otherwise the code was compatible with the current version of Mono, despite being 11 years old.

From there, it was fairly straightforward to convert the MIDI file to sheet music on a button press. To finish this task, I added code that updates the image in the system on each edit by the user. For file saving and running the executable, I used the os module in Python [4].
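As a rough sketch of how the os module could drive the converter, the snippet below builds a shell command and runs it. The executable name (sheet.exe), the argument order, and both function names are assumptions made for illustration; they are not taken from the converter's documentation or from my actual code.

```python
import os
import shlex

# Hypothetical executable name; the real name and argument order may differ.
CONVERTER_EXE = "sheet.exe"


def build_convert_command(midi_path, output_prefix, exe=CONVERTER_EXE):
    """Return a shell command that runs the Mono executable on a MIDI file."""
    parts = ["mono", exe, midi_path, output_prefix]
    # Quote each part so paths containing spaces survive the shell.
    return " ".join(shlex.quote(part) for part in parts)


def convert_to_sheet_music(midi_path, output_dir):
    """Run the converter and return True if it exited with status 0."""
    os.makedirs(output_dir, exist_ok=True)
    base = os.path.splitext(os.path.basename(midi_path))[0]
    output_prefix = os.path.join(output_dir, base)
    return os.system(build_convert_command(midi_path, output_prefix)) == 0
```

Calling convert_to_sheet_music after every user edit would regenerate the image, at the cost of one process launch per edit.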

Below is an example of what the sheet music output might look like for a simple sequence of notes.

Seeing the sheet music in front of me made me realize that my program had been saving the MIDI notes in the wrong way. The note pitches appeared to be correct, but the lengths were not, and rests appeared that I had not placed.

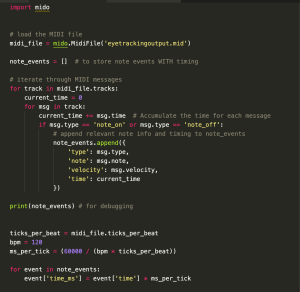

MIDI File Updates [9]

Because of this, I had to go back into my code from last week and identify the issue. I compared the Mido messages from one of the example MIDI files in BYVoid’s repository [1] against the sheet music it generated, and discovered that I had misunderstood the Mido “time” parameter. I thought it was measured from the start of the piece, but it is actually a delta time relative to the previous message (so a note starts at 0 unless it is preceded by a rest). After fixing that error in my code, both time and pitch appear to be correct.
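The timing behavior can be illustrated without Mido itself. In the sketch below, each message is a plain (type, note, time) tuple standing in for a Mido message, and the tick values are made up for the example. Summing the per-message times recovers the position of each event in the piece, which is the value I had originally been writing into the time field by mistake.

```python
def absolute_times(messages):
    """Convert (type, note, delta_ticks) tuples to (type, note, absolute_ticks)."""
    now = 0
    result = []
    for msg_type, note, delta in messages:
        now += delta  # each time value counts from the previous message
        result.append((msg_type, note, now))
    return result


# Two notes of 480 ticks each, separated by a 480-tick rest (illustrative values).
track = [
    ("note_on", 60, 0),     # starts immediately
    ("note_off", 60, 480),  # ends 480 ticks after it started
    ("note_on", 62, 480),   # nonzero time on note_on means a rest came first
    ("note_off", 62, 480),
]

for event in absolute_times(track):
    print(event)
```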

I also added the functionality to create a new MIDI file from within the UI [4], which will allow the user to create multiple compositions without closing and reopening the application. Additionally, I coded a function that allows the user to open an existing MIDI file from the UI, using the Tkinter filedialog module [5].
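A minimal sketch of an open-file helper along these lines is shown below. The function names and the accepted extensions are assumptions for illustration. The tkinter import sits inside the function so that the extension check can be exercised on a machine without a display.

```python
import os

# Extensions treated as MIDI files; an assumption made for this sketch.
MIDI_EXTENSIONS = (".mid", ".midi")


def is_midi_path(path):
    """Return True if the path ends in a recognized MIDI extension."""
    return os.path.splitext(path)[1].lower() in MIDI_EXTENSIONS


def ask_for_midi_file():
    """Show an open-file dialog and return the chosen MIDI path, or None."""
    from tkinter import filedialog  # imported lazily; needs a display to run

    path = filedialog.askopenfilename(
        title="Open MIDI file",
        filetypes=[("MIDI files", "*.mid *.midi"), ("All files", "*.*")],
    )
    # The dialog returns an empty value when the user cancels.
    if path and is_midi_path(path):
        return path
    return None
```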

Finally, I added the code to move the cursor backwards and forwards in the MIDI file, and to insert and delete notes at the cursor location. This marks the completion of the MIDI file responses task on the Gantt chart.
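One way to model this cursor behavior is a list of notes plus an index, as in the sketch below. The class and method names are hypothetical, and notes are represented as bare MIDI pitch numbers to keep the example short; the real code works on Mido messages.

```python
class NoteCursor:
    """A list of notes with an insertion point, similar to a text cursor."""

    def __init__(self, notes=None):
        self.notes = list(notes or [])
        self.position = len(self.notes)  # cursor starts after the last note

    def move(self, step):
        """Move by step notes (negative is backwards), clamped to the piece."""
        self.position = max(0, min(len(self.notes), self.position + step))

    def insert(self, note):
        """Insert a note at the cursor and advance past it."""
        self.notes.insert(self.position, note)
        self.position += 1

    def delete(self):
        """Delete and return the note just before the cursor, if any."""
        if self.position == 0:
            return None
        self.position -= 1
        return self.notes.pop(self.position)
```

For example, starting from the notes [60, 64], moving back one step and inserting 62 gives [60, 62, 64] with the cursor sitting just after the new note.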

Frontend

Next, I started working on finalizing the frontend code for the project. Previously, I had been using a UI with buttons for testing responses, but we will also need a final UI for the eye-tracking functionality, so I started writing that (see below) [6][7].

Among other changes, I added a progress bar, a widget that the Tkinter library offers [8], above each command. This will make it easier to show the user how long they have to look at a command.
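The value shown on each bar could be computed from the time the user has spent looking at a command, as in this sketch. The function name and the idea of a fixed required dwell time are assumptions; the actual threshold has not been decided.

```python
def dwell_progress(elapsed_s, required_s):
    """Return how far along a gaze dwell is, as a whole percentage from 0 to 100."""
    if required_s <= 0:
        raise ValueError("required_s must be positive")
    # Clamp so the bar never goes below empty or above full.
    fraction = max(0.0, min(1.0, elapsed_s / required_s))
    return round(fraction * 100)
```

The result can be assigned to the value option of a ttk.Progressbar whose maximum is left at its default of 100.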

Right now the UI is pretty simple; I will ask my group if they think we should incorporate any colors or other design elements into it. I would also like to test the UI on other machines to ensure that the sizing is not off on different screens.

UI Responses

In the UI, I set up some preliminary functionality for UI responses, like a variable string input to the message box, and each of the progress bars. I did not make other progress on the UI responses.

Next Week

I am still a little behind on the Gantt chart, but I am making good progress. From the previous tasks, I still need to complete:

- the integration between the MIDI to sheet music code and our system, such that the current cursor location is marked in the sheet music. This will require me to update the MIDI to sheet music code [1].

- the identification of the coordinate range of the UI commands, which can be done now that I have a final idea of the UI.

- the coordinates to commands mapping program, and loading bars. I’ve outlined some basic code already, but I will need to start integrating with the eye-tracking first to make sure I’ve got the right idea of it.

- error messages and alerts to the user.
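For the coordinates to commands mapping in the list above, the basic idea can be sketched as a lookup over rectangular screen regions. The command names and pixel ranges here are placeholders, since the real coordinate ranges still need to be identified from the final UI.

```python
from typing import NamedTuple


class Region(NamedTuple):
    """A rectangular screen area that triggers one command."""
    command: str
    left: int
    top: int
    right: int
    bottom: int


def command_at(x, y, regions):
    """Return the command whose region contains (x, y), or None if none does."""
    for region in regions:
        # Right and bottom edges are exclusive so adjacent regions do not overlap.
        if region.left <= x < region.right and region.top <= y < region.bottom:
            return region.command
    return None


# Placeholder layout: two commands side by side along the top of the screen.
REGIONS = [
    Region("add_note", 0, 0, 200, 150),
    Region("delete_note", 200, 0, 400, 150),
]
```

A gaze sample that falls outside every region returns None, which gives the eye-tracking loop a natural signal to reset a loading bar.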

In the Gantt chart, my task next week is to integrate the primary and secondary UI, so I will work on that.

Another thing I want to do next week, which is not on the Gantt chart, is to organize my current code for readability and style and upload it to a GitHub repository, since there are more and longer files now.

References

[1] BYVoid. (2013, May 9) MidiToSheetMusic. GitHub. https://github.com/BYVoid/MidiToSheetMusic

[2] Vaidyanathan, M. Convert MIDI Files to Sheet Music. Midi Sheet Music. (n.d.). http://midisheetmusic.com/

[3] Download. Mono. (2024). https://www.mono-project.com/download/stable/

[4] os – Miscellaneous operating system interfaces. python. (n.d.). https://docs.python.org/3/library/os.html

[5] Tkinter dialogs. Python documentation. (n.d.). https://docs.python.org/3.13/library/dialog.html

[6] Shipman, J.W. (2013, Dec 31). Tkinter 8.5 reference: a GUI for Python. tkdocs. https://tkdocs.com/shipman/tkinter.pdf

[7] Graphical User Interfaces with Tk. python. (n.d.). https://docs.python.org/3.13/library/tk.html

[8] tkinter.ttk – Tk themed widgets. Python documentation. (n.d.). https://docs.python.org/3.13/library/tkinter.ttk.html

[9] Overview. Mido – MIDI Objects for Python. (n.d.). https://mido.readthedocs.io/en/stable/index.html

Global Considerations, Written by Shravya
