Last Week

Interim Demo Prep

On Sunday, I worked on preparing my subsystem for the interim demo on Monday. That required some bug fixes:

- Ensured the cursor would not go below 0 after removing a note at the 0-th index in the composition.

- Fixed an issue that caused additional notes added onto a chord to be 0-length notes. However, the fix requires all notes of the same chord to be the same length, a limitation I will want to remove later.

- Fixed an issue affecting notes written directly after a chord: they were stacked onto the chord even if the user didn’t request it, and they could not be removed properly.

I also added some new functionality to the application in preparation for the demo.

- Constrained the user to chords of two notes and gave them an error message if they attempted to add more.

- Allowed users to insert rests at locations other than the end of the composition.

Then, before the second interim demo, I fixed a small bug in which the cursor location did not reset when opening a new file.

This Week

Integrating with Eye-Tracking

I downloaded Peter’s code and its dependencies [1][2][3][4] to ensure it could run on my computer. I also had to downgrade my Python version to 3.11.5 because we had been working in different versions of Python. Fortunately, I did not notice any new bugs in my own code immediately after downgrading.

In order to integrate the two, I had to adjust the eye-tracking program so that it did not display the screen capture of the user’s face and so that the coordinates would be received on demand rather than continuously. Also, I had to remove the code’s dependence on the wxPython GUI library, because it was interfering with my code’s use of the Tkinter GUI library [5][6].

The first step of integration was to draw a “mouse” on the screen indicating where the computer thinks the user is looking [7][8].

Then, I made the “mouse” actually functional, so that the commands are controlled by the eyes instead of by key presses. In order to do this and make the eye-tracking reliable, I made the commands on the screen much larger. This required me to remove the message box on the screen, but I added it back as a pop-up that exists for ten seconds before deleting itself.

Additionally, I had to change the (backend) strategy for placing the buttons on the screen so that I could identify the coordinates of each command for the eye-tracking. To identify those coordinates and (hopefully) ensure that the calculations hold up for screens of different sizes, the program computes them from the width and height of the screen at run time.
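As a rough illustration of the idea above, here is a minimal sketch of deriving command regions from the screen size and looking up which command a gaze coordinate falls on. It is not the application's actual code: the even grid, the `Region` class, and the names `layout_commands` and `command_at` are all hypothetical. In a Tkinter program, the width and height would likely come from `winfo_screenwidth()` and `winfo_screenheight()`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle in screen pixels (hypothetical helper)."""
    name: str
    x0: int
    y0: int
    x1: int
    y1: int

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def layout_commands(names, screen_w, screen_h, cols):
    """Split the screen into an even grid and give each command one cell.

    Assumption: an even grid. The real layout may differ.
    """
    rows = -(-len(names) // cols)  # ceiling division
    cell_w = screen_w // cols
    cell_h = screen_h // rows
    regions = []
    for i, name in enumerate(names):
        row, col = divmod(i, cols)
        regions.append(Region(name,
                              col * cell_w, row * cell_h,
                              (col + 1) * cell_w, (row + 1) * cell_h))
    return regions


def command_at(regions, x, y):
    """Return the name of the command under (x, y), or None if there is none."""
    for region in regions:
        if region.contains(x, y):
            return region.name
    return None
```

Because every region is computed from `screen_w` and `screen_h`, the same relative gaze position should map to the same command on a 1920x1080 screen and a 1280x720 screen.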

I am still tinkering with the number of iterations and the time between each iteration to see what is optimal for accurate and efficient eye tracking. Currently, five iterations with 150ms in between each seems to be relatively functional. It might be worthwhile to figure out a way to allow the user to set the delay themselves. Also, I currently have it implemented such that there is a longer delay (300ms) after a command is confirmed, because I noticed that it would take a while for me to register that the command had been confirmed and to look away.
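The timing described above can be sketched as a simple dwell check: a command is confirmed only after the gaze lands on it for five samples in a row, with 150 ms between samples and a 300 ms pause after confirmation. This is a sketch under assumptions, not the actual implementation: `sample_gaze` is a hypothetical callable that returns the command currently being looked at (or `None`), and the requirement that the five samples be consecutive is my reading of "iterations".

```python
import time

ITERATIONS = 5            # consecutive samples needed to confirm a command
SAMPLE_DELAY_S = 0.150    # pause between samples
CONFIRM_DELAY_S = 0.300   # longer pause after a command is confirmed


def wait_for_command(sample_gaze, sleep=time.sleep, max_samples=1000):
    """Return the first command seen in ITERATIONS consecutive samples.

    sample_gaze is a hypothetical callable returning the name of the command
    under the user's gaze, or None when the gaze is on no command.
    Returns None if nothing is confirmed within max_samples samples.
    """
    current, streak = None, 0
    for _ in range(max_samples):
        looked_at = sample_gaze()
        if looked_at is not None and looked_at == current:
            streak += 1
        else:
            # Gaze moved to a different command (or off all commands).
            current = looked_at
            streak = 1 if looked_at is not None else 0
        if streak >= ITERATIONS:
            sleep(CONFIRM_DELAY_S)  # give the user time to look away
            return current
        sleep(SAMPLE_DELAY_S)
    return None
```

Keeping the three values as named constants would make it straightforward to expose them as user settings later. Note that a blocking `sleep` would freeze a Tkinter window, so in the real UI the same logic would probably be scheduled with `after` instead.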

Bug Fixes

I fixed a bug in which the note commands were highlighted when they shouldn’t have been. I also fixed a bug that caused the most recently viewed sheet music to load on start-up instead of a blank sheet, even though that composition (MIDI file) wouldn’t actually be open.

I also fixed some edge cases where the program would exit if the user performed unexpected behavior. Instead, the UI informs the user with a (self-deleting) pop-up window with the relevant error message [9][10]. The edge cases I fixed were:

- The user attempting to write a note to, or remove a note from, the file when there was no file open.

- The user attempting to add more than the allowed number of notes to a chord (which is now three).

- The user attempting to remove a note at the 0-index.
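One way to handle edge cases like these without exiting is to have each operation return an error message that the UI can show in its pop-up. The sketch below is a hypothetical stand-in for the application's composition state, not the real code: the `Composition` class, its fields, the message strings, and the interpretation of "removing at the 0-index" as "removing when the cursor is at 0" are all assumptions.

```python
MAX_CHORD_NOTES = 3  # chord limit stated in this report


class Composition:
    """Hypothetical stand-in for the application's composition state."""

    def __init__(self, file_open=False):
        self.file_open = file_open
        self.chords = []   # each chord is a list of pitches
        self.cursor = 0    # index where the next chord will be written

    def add_note(self, pitch, stack=False):
        """Write a note; return an error message, or None on success."""
        if not self.file_open:
            return "No file is open."
        if stack and self.cursor > 0:
            chord = self.chords[self.cursor - 1]
            if len(chord) >= MAX_CHORD_NOTES:
                return f"A chord can hold at most {MAX_CHORD_NOTES} notes."
            chord.append(pitch)
            return None
        self.chords.insert(self.cursor, [pitch])
        self.cursor += 1
        return None

    def remove_note(self):
        """Remove the chord before the cursor; return an error or None."""
        if not self.file_open:
            return "No file is open."
        if self.cursor == 0:
            return "There is no note to remove."
        del self.chords[self.cursor - 1]
        self.cursor -= 1  # never drops below 0 because of the check above
        return None
```

In this sketch the number of notes is always `len(self.chords)` rather than a separately maintained counter, which is one way to keep the count from drifting out of sync when notes are removed.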

I also fixed a bug in which removing a note did not cause the number of notes in the song to decrease (internally), which could lead to various issues with the internal MIDI file generation.

New Functionality

While testing with the eye-tracking, I realized it was confusing that the piano keys would light up while the command was in progress and then also while waiting for a note length (in the case that pitch was chosen first). It was hard to tell if a note was in progress or finished. For that reason, I adjusted the program so that a note command would be highlighted grey when finished and yellow when in progress.

I also made it such that the user could add three notes to a chord (instead of the previous two) before being cut off, which was the goal we set for ourselves earlier in the semester.

I made it so that the eye-tracking calibrates on start-up of the program [11], and the user can then request calibration again with the “c” key [12]. Requiring a key press is not ideal because it is not accessible. However, since calibration happens automatically on start-up, hopefully this command will not be necessary most of the time.

Finally, I made the font sizes bigger on the UI for increased readability [13].

Demo

After the integration, bug fixes, and new functionality, here is a video demonstrating the current application while in use, mainly featuring the calibration screen and an error message: https://drive.google.com/file/d/1dMUQ976uqJo_J2QwzubH3wNez9YMPM8Y/view?usp=drive_link

(Note that I use my physical cursor to switch back and forth between the primary and secondary UI in the video. This is not the intended functionality, because the secondary UI is meant to be on a different device, but this was the only way I could video-record both UIs at once).

Integrating with Embedded System

On Tuesday, I met with Shravya to set up the STM32 environment on my computer and verified that I could run the hard-coded commands Shravya made for the interim demo last week with my computer and set-up.

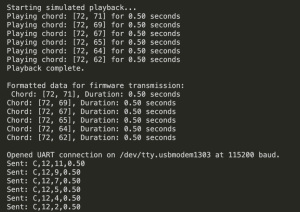

On Saturday (today), we met again because Shravya had written some Python code for the UART communication. I integrated that with my system so that the parsing and UART could happen on the demand of the user, but when we attempted to test, we ran into some problems with the UART that Shravya will continue debugging without me.

After that, I made a short README file for the entire application, since the files from each subsystem were consolidated.

Final Presentation

I made the six slides for the presentation, which included writing about 750 words for the presentation script corresponding to those slides. I also worked with Shravya on two other slides and wrote another 425 words for those slides.

Next Week

Tomorrow, I will likely have to finish working on the presentation. I wrote a lot for the script, so I will likely need to edit that for concision.

There is still some functionality I need to finish for the UI:

- Writing the cursor on the sheet music. I spent quite a while trying to figure that out this week, but had a lot of trouble with it.

- Creating an in-application way to open existing files (for accessibility).

- Adding note sounds to the piano while the user is hovering over it.

- Highlighting the “stack notes” and “rest” commands when that option is selected.

And some bug fixes:

- Handle the case in which there is more than one page of sheet music, including determining which page the cursor is on.

- Double-check that the chord and rest logic is 100% accurate. I seem to be running into some edge cases where stacking notes and adding rests do not work, so I will have to do stress tests to figure out exactly why that is happening.

However, the primary goal for next week is testing. I am still waiting on some things from both Peter (eye-tracking optimization) and Shravya (debugging of UART firmware) before the formal testing can start, but I can set up the backend for the formal testing in the meantime.

Learning Tools

Most of my learning during this semester was trial and error. I generally learn best by just testing things out and seeing what works and what doesn’t, rather than by doing extensive research first, so that was the approach I took. I started coding with Tkinter pretty early on and I think I’ve learned a lot through that trial and error, even though I did make a lot of mistakes and have to re-write a lot of code.

I think this method of learning worked especially well for me because I have programmed websites before and am aware of the general standards and methods of app design, such as event handlers and GUI libraries. Even though I had not written a UI in Python, I was familiar with the basic idea. Meanwhile, if I had been working on other tasks in the project, like eye tracking or embedded systems, I would have had to do a lot more preliminary research to be successful.

Even though I didn’t have to do a lot of preliminary research, I did spend a lot of time learning from websites while programming, as can be seen in the links I leave in each of my reports. The formal documentation for Tkinter and MIDO was helpful for getting a general idea of what I was going to write, but for more specific and tricky bugs, forums like StackOverflow and websites such as GeeksForGeeks were very useful.

References

[1] https://pypi.org/project/mediapipe-silicon/

[2] https://pypi.org/project/wxPython/

[3] https://brew.sh/

[4] https://formulae.brew.sh/formula/wget

[5] https://www.geeksforgeeks.org/getting-screens-height-and-width-using-tkinter-python/

[6] https://stackoverflow.com/questions/33731192/how-can-i-combine-tkinter-and-wxpython-without-freezing-window-python

[7] https://www.tutorialspoint.com/how-to-get-the-tkinter-widget-s-current-x-and-y-coordinates

[8] https://stackoverflow.com/questions/70355318/tkinter-how-to-continuously-update-a-label

[9] https://www.geeksforgeeks.org/python-after-method-in-tkinter/

[10] https://www.tutorialspoint.com/python/tk_place.htm

[11] https://www.tutorialspoint.com/deleting-a-label-in-python-tkinter

[12] https://tkinterexamples.com/events/keyboard/

[13] https://tkdocs.com/shipman/tkinter.pdf