Week Nov 17-23.

Using OpenCV to capture frames and pass them to a Mediapipe function that tracks the user’s irises, I created a basic gaze tracker that estimates where the user is looking on a computer screen from the location of their irises. In Figure 1, the blue dot, located to the left of the head, shows the estimated on-screen gaze location. Currently, the gaze tracking has two main configurations. Configuration 1 tracks gaze precisely but requires head movement to make up for the small movements of the eyes. Configuration 2 requires only eye movement, but the head must be kept impractically still and the estimates are jittery. To improve Configuration 2, head movement needs to be taken into account when calculating the user’s estimated gaze.
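As a rough illustration of the mapping step, the sketch below turns an iris position into a screen coordinate by measuring where the iris sits between the edges of the eye. It is not the actual implementation: the function name `gaze_to_screen`, the choice of four eye-edge points, and the linear mapping are all assumptions, and the inputs are taken to be (x, y) landmark coordinates of the kind a face-landmark tracker reports.

```python
from typing import Tuple

Point = Tuple[float, float]


def _clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Keep a ratio inside [low, high] so the dot never leaves the screen."""
    return max(low, min(high, value))


def gaze_to_screen(
    iris: Point,
    eye_left: Point,
    eye_right: Point,
    eye_top: Point,
    eye_bottom: Point,
    screen_w: int,
    screen_h: int,
) -> Tuple[int, int]:
    """Map an iris center to a pixel position (hypothetical helper).

    The iris position is expressed as a ratio between the eye edges, so the
    result depends on the eye rather than on where the face is in the frame.
    """
    width = eye_right[0] - eye_left[0]
    height = eye_bottom[1] - eye_top[1]
    if width == 0 or height == 0:
        raise ValueError("eye edge points must not coincide")

    # 0.0 means the iris is at the left/top edge, 1.0 at the right/bottom edge.
    ratio_x = _clamp((iris[0] - eye_left[0]) / width)
    ratio_y = _clamp((iris[1] - eye_top[1]) / height)

    # Scale the ratios to pixel coordinates on the screen.
    return int(round(ratio_x * (screen_w - 1))), int(round(ratio_y * (screen_h - 1)))
```

Because the eye opening is only a few pixels wide in a webcam frame, small landmark errors are magnified by this scaling, which is one plausible source of the jitter described for Configuration 2.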

Figure 1: Mediapipe gaze-tracker

Week Nov 24-30.

I tested the accuracy of Fiona’s integration of the UI with the eye tracking. To test the current gaze-tracking implementation in both Configuration 1 and Configuration 2, I looked at each command twice; if the intended command was not identified correctly, I kept trying until it was selected. With this method, Configuration 1 offered precise control and achieved 100% accuracy. Configuration 2 reached 89.6% accuracy in testing, but its gaze estimate was very jittery, making it difficult to feel confident about the cursor’s movements, and the user’s head has to be kept too still to be practical for widespread use. Ideally, the user should only need to move their eyes for their gaze to be tracked. As a result, the eye tracking will be updated next week to take head movement into account, which will hopefully make the gaze estimate smoother.
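One plausible way to turn this retry-until-correct procedure into a percentage is to count every selection attempt and divide the correct ones by the total. The sketch below assumes that definition; the function name `selection_accuracy`, the command names, and the sample log are hypothetical and are not the data behind the figures above.

```python
from typing import List, Tuple


def selection_accuracy(attempts: List[Tuple[str, str]]) -> float:
    """Fraction of attempts where the selected command matched the intended one.

    Each entry is (intended_command, selected_command). Retries after a wrong
    selection are logged as additional attempts, so they lower the score.
    """
    if not attempts:
        raise ValueError("at least one attempt is required")
    correct = sum(1 for intended, selected in attempts if intended == selected)
    return correct / len(attempts)


# Hypothetical log: the second attempt missed, so "down" was retried once.
sample_log = [
    ("up", "up"),
    ("down", "left"),
    ("down", "down"),
    ("left", "left"),
]
accuracy = selection_accuracy(sample_log)
```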

Tools for learning new knowledge

Mediapipe and OpenCV are both new to me. To get comfortable with these libraries, I read the online Mediapipe documentation and followed several online video tutorials. Through these tutorials, I discovered applications of the Mediapipe library functions that were useful for my implementation.

This Week

This week, I hope to complete the eye tracking by taking head movement into account, making what is currently referred to as Configuration 2 a more viable solution.
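Separately from head-movement compensation, jitter in a gaze estimate can often be damped by filtering the output over time. The sketch below uses an exponential moving average, which is a generic smoothing technique and not something already in the implementation; the class name `GazeSmoother` and the default `alpha` are assumptions chosen for illustration.

```python
from typing import Optional, Tuple


class GazeSmoother:
    """Exponential moving average over successive (x, y) gaze estimates."""

    def __init__(self, alpha: float = 0.3) -> None:
        # alpha near 1 follows new samples closely; near 0 smooths heavily.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Optional[Tuple[float, float]] = None

    def update(self, point: Tuple[float, float]) -> Tuple[float, float]:
        """Blend a new raw estimate into the running average and return it."""
        if self._state is None:
            # The first sample initializes the filter.
            self._state = (float(point[0]), float(point[1]))
        else:
            a = self.alpha
            self._state = (
                a * point[0] + (1.0 - a) * self._state[0],
                a * point[1] + (1.0 - a) * self._state[1],
            )
        return self._state
```

The trade-off is lag: a lower `alpha` gives a steadier dot but makes it slower to follow a deliberate change in gaze, so the value would need tuning against the selection task.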

Figure 1: Solenoid Bracket

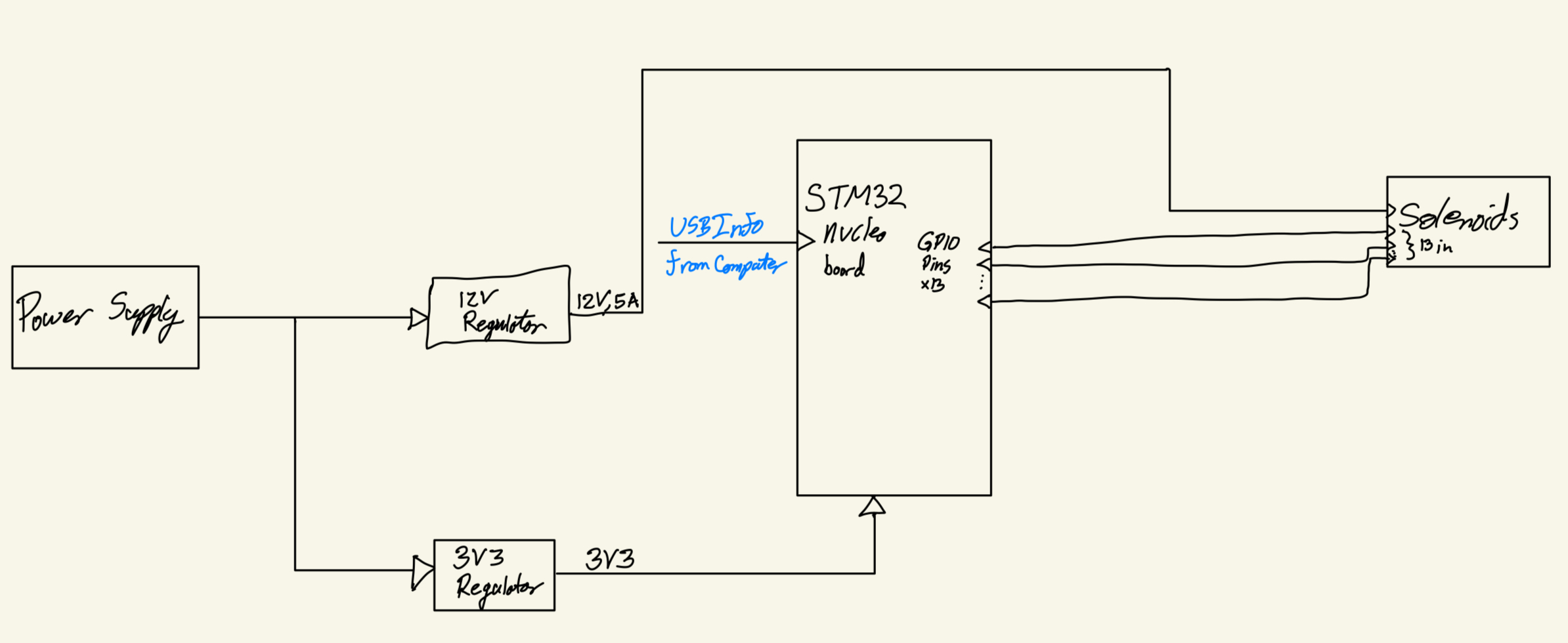

Figure 1: Hardware Block Diagram

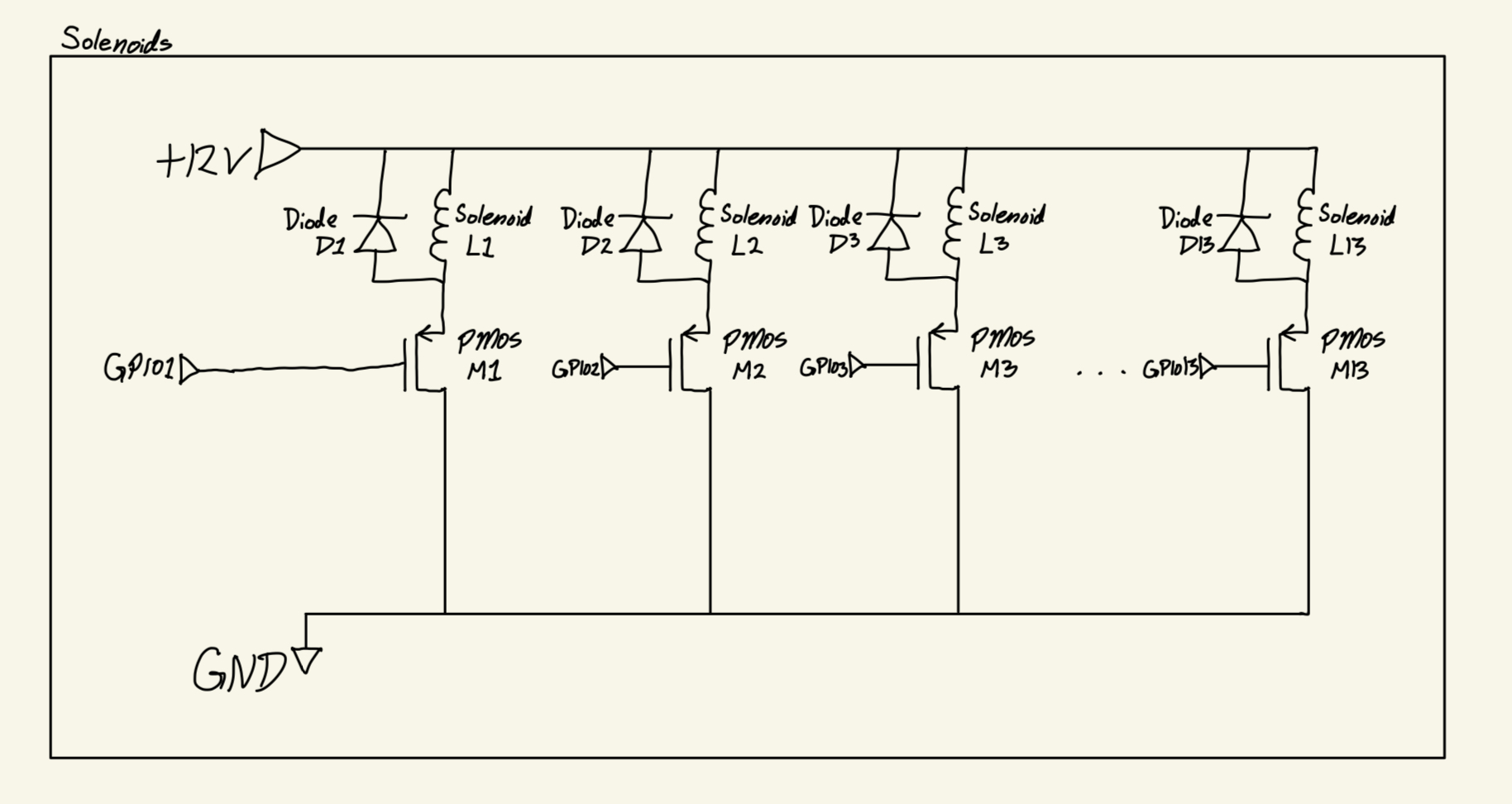

Figure 2: Solenoids Schematic