This week, significant effort was spent implementing UART communication between the STM32 microcontroller and an external system, such as a Python script or serial terminal, to control solenoids. The primary goal was to parse MIDI commands from the Python script, transmit them via UART, and actuate the corresponding solenoids based on the received commands.

- Description of Code Implementation:

- The UART receive interrupt (HAL_UART_RxCpltCallback) was set up in main.c to handle incoming data byte by byte and process commands upon receiving a newline character (\n).

- Functions for processing UART commands (process_uart_command) and actuating solenoids (activate_solenoid and activate_chord) were written and tested.

- The _write() function was implemented to redirect printf output over UART for debugging purposes.
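The byte-by-byte receive logic described above can be sketched as follows. This is a minimal, host-testable sketch rather than the actual firmware: `uart_rx_feed_byte`, `RX_LINE_MAX`, `last_command`, and `commands_seen` are hypothetical names, and the body of `process_uart_command` here is only a placeholder that records the line. On the STM32, `HAL_UART_RxCpltCallback` would presumably pass each received byte to a function like this and then call `HAL_UART_Receive_IT` again to re-arm reception of the next byte.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RX_LINE_MAX 32               /* assumed max command length, incl. '\0' */

static char   rx_line[RX_LINE_MAX];  /* command currently being assembled */
static size_t rx_len = 0;            /* number of bytes stored in rx_line */
static int    rx_overflow = 0;       /* set when the current line is too long */

/* Hypothetical bookkeeping so the sketch can be checked on a PC. */
static char last_command[RX_LINE_MAX];
static int  commands_seen = 0;

/* Placeholder: the real function would parse the line and drive solenoids. */
static void process_uart_command(const char *line)
{
    strncpy(last_command, line, RX_LINE_MAX - 1);
    last_command[RX_LINE_MAX - 1] = '\0';
    commands_seen++;
}

/* Call once for every received byte. */
void uart_rx_feed_byte(uint8_t byte)
{
    if (byte == '\r') {
        return;                      /* ignore CR so "\r\n" endings also work */
    }
    if (byte == '\n') {
        if (!rx_overflow && rx_len > 0) {
            rx_line[rx_len] = '\0';  /* terminate before handing the line off */
            process_uart_command(rx_line);
        }
        rx_len = 0;                  /* start the next line with an empty buffer */
        rx_overflow = 0;
        return;
    }
    if (rx_len < RX_LINE_MAX - 1) {
        rx_line[rx_len++] = (char)byte;
    } else {
        rx_overflow = 1;             /* drop the rest of an over-long line */
    }
}
```

Resetting `rx_len` after every newline means a stale partial command cannot leak into the next one, and the overflow flag discards an over-long line as a whole instead of processing a truncated command.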

- Testing UART Communication:

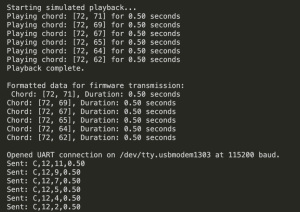

- Python script (send_uart_data) confirmed successful transmission of parsed MIDI commands (see the screenshot above). This suggests that my computer is sending the data correctly and that the problem lies on the STM32’s receiving side.

- Minicom and other terminal tools were used to TRY to verify whether UART data was received on the STM32 side. They did not work because I can’t monitor (“occupy”) the port without inhibiting the data being sent. It seems that sending data from the script and monitoring data on that same port are mutually exclusive. This makes sense, but I saw online that monitoring a port with a serial terminal is a common way that people debug communication protocols. I also don’t see any point in monitoring a port other than the one the communication is occurring on.

- Unexpected and oddly specific solenoid activation:

- Observed that solenoids 5 and 7 actuated unexpectedly at program startup. This happened multiple times when I reflashed. The movements were also somewhat intricate and oddly specific: solenoids 5 and 7 SIMULTANEOUSLY turned on, off, on (for a shorter time), off, on, and off. This makes it seem as if I had hardcoded a sequence of GPIO commands for solenoids 5 and 7, which I most definitely did not.

- Added initialization code in MX_GPIO_Init to set all solenoids to an off state (GPIO_PIN_RESET).

- Temporarily disabled HAL_UART_Receive_IT to rule out UART-related triggers but found solenoids still actuated, indicating the issue may not originate from UART interrupts.

I have been debugging this for a week. Initially, there were a few mistakes I genuinely did make (some by accident/oversight, some by genuine conceptual misunderstanding). I realised I had to explicitly flush my RX buffer. I also had to create a better mapping system: my Python code deals with an octave of notes numbered 60-72 (because this is MIDI’s convention), and I had to map them to the 0-12 numbering system I use for my solenoids in the firmware code. I also noticed one small mismatch in the way I mapped each solenoid in the firmware code to an STM32 GPIO pin. Also, the _write() function was implemented to redirect printf() over UART for debugging purposes.
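A minimal sketch of the note-to-solenoid mapping described above, assuming MIDI note 60 corresponds to solenoid 0 and note 72 to solenoid 12. The function and constant names are illustrative and not taken from the actual firmware. Rejecting out-of-range notes explicitly keeps a stray value from indexing past the solenoid table.

```c
/* Assumed note range handled by the Python script (MIDI 60 is middle C). */
#define MIDI_NOTE_LOWEST   60
#define MIDI_NOTE_HIGHEST  72
#define SOLENOID_INVALID   (-1)   /* hypothetical "no such solenoid" marker */

/* Convert a MIDI note number (60-72) to a solenoid index (0-12).
 * Returns SOLENOID_INVALID for any note outside the supported range. */
int midi_note_to_solenoid(int midi_note)
{
    if (midi_note < MIDI_NOTE_LOWEST || midi_note > MIDI_NOTE_HIGHEST) {
        return SOLENOID_INVALID;
    }
    return midi_note - MIDI_NOTE_LOWEST;
}
```

For example, `midi_note_to_solenoid(60)` gives 0 and `midi_note_to_solenoid(72)` gives 12, while a note such as 59 or 73 gives `SOLENOID_INVALID` and can be ignored by the caller.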

I now feel like I have a good conceptual understanding of UART, the small portion of autogenerated code that STM32CubeIDE generates (this is inherent to the way STM32CubeIDE does some clock configurations; it’s necessary and there is no way to stop this), and any additional functions I’ve written. I definitely knew far less when I started testing out my UART code a week ago. Yet, I am still stuck. I might be out of ideas on what component of this code to look at next. I’ve now shared my files with Peter, who may be a fresh set of eyes, as well as a friend of mine who is a CS major. I have an exam on Monday, but after it ends, I will work on this in HH1307 with my friend until it functions. I need it to work before our poster submission on Tuesday night and video submission on Wednesday night.