Nick’s Status Report for 12/7/24:

1. Accomplished This Week

- Built Web Interface: It has all the features of my simulator, plus the ability to quickly build the room with obstacles, so there is no need for mapping if you already know the layout. It also has a feature where you can select only part of your room to clean.

Musing: I’m so close to graduating I don’t quite believe it. Part of me thinks I’m going to fail something and have to stay another semester. This project maybe wasn’t what I wanted to do, but it is what we ended up doing, and there are some unrealistic goals we set for ourselves that we won’t end up achieving. I think we could have made it so much cooler as well, like a robot that can clean corners and can actually clean, but oh well. I think I’m done with college now that I know what I want to do, and I want to do only that instead of juggling so many different classes.

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- Presenting! We need to get the video and poster done. My contributions are pretty much finalized. I might take another look at the SLAM, but I think the consensus is that we’re mostly using the ROS 2 library.

Nick’s Status Report for 11/30/24:

1. Accomplished This Week

- Simulation Feature: Matthew mentioned that the robot might be lacking in precision. I built fault tolerances into the simulation: a fixed distance from corners and walls that the robot might not be able to reach. This works much faster than a sliding window (my initial approach), at least an order of magnitude faster on 15,000,000 points, so it should be good enough to run every second in real time. My concern is that with a +/- threshold, although we might guarantee that we don’t collide with walls, we might fall well under our % room cleaned metric. Even in simulation, if I add too much fault tolerance the maximum coverable area goes below 90%, more so if there are numerous obstacles.

- Simulation Feature: Map objects generated by the robot can be parsed and visualized in the simulation.

- Simulation Feature: Can add a robot following an arbitrary pathing algorithm. It shoots lidar beams and lights up the room 🙂
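The fault-tolerance idea in the first item above can be sketched as a clearance mask over an occupancy grid. This is a minimal sketch, not the simulator's actual code: the function names, the 1 = wall/obstacle and 0 = free encoding, and the choice of a breadth-first search outward from every obstacle cell are all assumptions made for illustration. Each cell is visited at most once, which is one plausible reason this kind of approach beats a sliding window.

```python
from collections import deque


def clearance_mask(grid, margin_cells):
    """Mark cells that lie more than margin_cells steps from any obstacle.

    grid: list of rows, 1 = wall/obstacle, 0 = free (assumed encoding).
    Distance is counted in 4-connected grid steps (an assumption).
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    queue = deque()
    # Seed the search with every obstacle cell at distance 0.
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                dist[r][c] = 0
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        if dist[r][c] >= margin_cells:
            continue  # cells beyond the margin are never labelled
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    # Unlabelled cells are farther than margin_cells from every obstacle.
    return [[dist[r][c] is None for c in range(cols)] for r in range(rows)]


def max_coverage(grid, margin_cells):
    """Fraction of free cells that remain reachable with the given margin."""
    mask = clearance_mask(grid, margin_cells)
    free = sum(cell == 0 for row in grid for cell in row)
    reachable = sum(cell for row in mask for cell in row)
    return reachable / free if free else 0.0
```

For a walled 7×7 grid with a 5×5 free interior, a one-cell margin leaves only the inner 3×3 cells, so `max_coverage` drops from 1.0 to 9/25. That is the same effect as the coverage concern described above, exaggerated by the tiny room.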

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- Build a web interface for the simulator

Nick’s Status Report for 11/16/24:

1. Accomplished This Week

- Finalized Simulation Software: Robust room generation software with tunable hyperparameters, high granularity (0.1cm), ability to mask reachable areas of the room. Demonstrated that a robot only needs on average 5-6 different scans to reliably map a room with dimensions of 3×5 meters that has fewer than 5 obstacles.

- Stream Parsing LIDAR Data: I wrote a ROS package to read in previously truncated LIDAR /scan data. It subscribes to the stream published by the LIDAR and reads back only the relevant (point cloud-like) data.

- Robot: Robot is driving and responds to keyboard commands

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- I need to look into LIDAR odometry, since motor encoders seem to be unreliable. Yet to see how this may conflict with objectives of identifying

- Port over room mapping to a web-based UI

Nick’s Status Report for 11/9/24:

1. Accomplished This Week

- LiDAR: We recorded LIDAR readings for different map topologies and thought about different maze configurations for testing, which we built out of cardboard.

- Deep Learning: Since one of the benefits of the Jetson is that we can run on-board inference, I explored the possibility of training a deep learning model to interpret LIDAR readings instead of using an algorithm.

- Robot: We have a functional setup and got the motors and the motor drivers working. We got an Arduino from Professor Bain to modulate PWM signals to the motors. We received an adapter for the battery that will allow us to test the LIDAR while moving with our real Roomba, and we set up ssh with our Jetson.

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- I will use the data obtained to generate point clouds to work with the visualization and simulation software I’ve written. The output format for our graph will likely be CSV, with -1 for unmapped, 0 for mapped and not blocked by an obstacle, and 1 for an obstacle, with a granularity of 0.1 cm. This granularity should be fine enough to still capture roundness and diagonal walls with sufficient accuracy for path planning.
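A minimal sketch of what the CSV map format above could look like in code. The constant and function names are hypothetical, and the sketch assumes 0 means "mapped and free of obstacles" (with -1 for unmapped and 1 for an obstacle). It also shows the cell-count arithmetic for a given room size and granularity.

```python
import csv
import io

# Assumed cell encoding for the map CSV.
UNMAPPED, FREE, OBSTACLE = -1, 0, 1


def grid_to_csv(grid):
    """Serialize a 2D list of cell codes to CSV text, one row per line."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(grid)
    return buf.getvalue()


def csv_to_grid(text):
    """Parse CSV text back into a 2D list of integer cell codes."""
    return [[int(v) for v in row] for row in csv.reader(io.StringIO(text)) if row]


def cell_count(width_mm, height_mm, cell_mm):
    """Number of grid cells for a room, using integer millimetres."""
    return (width_mm // cell_mm) * (height_mm // cell_mm)
```

At 0.1 cm (1 mm) granularity, `cell_count(3000, 5000, 1)` gives 15,000,000 cells for a 3 m by 5 m room, so it may be worth storing the grid in a more compact form than text if file size becomes an issue.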

Nick’s Status Report for 11/2/24:

1. Accomplished This Week

Jetson Orin Nano Up and Running

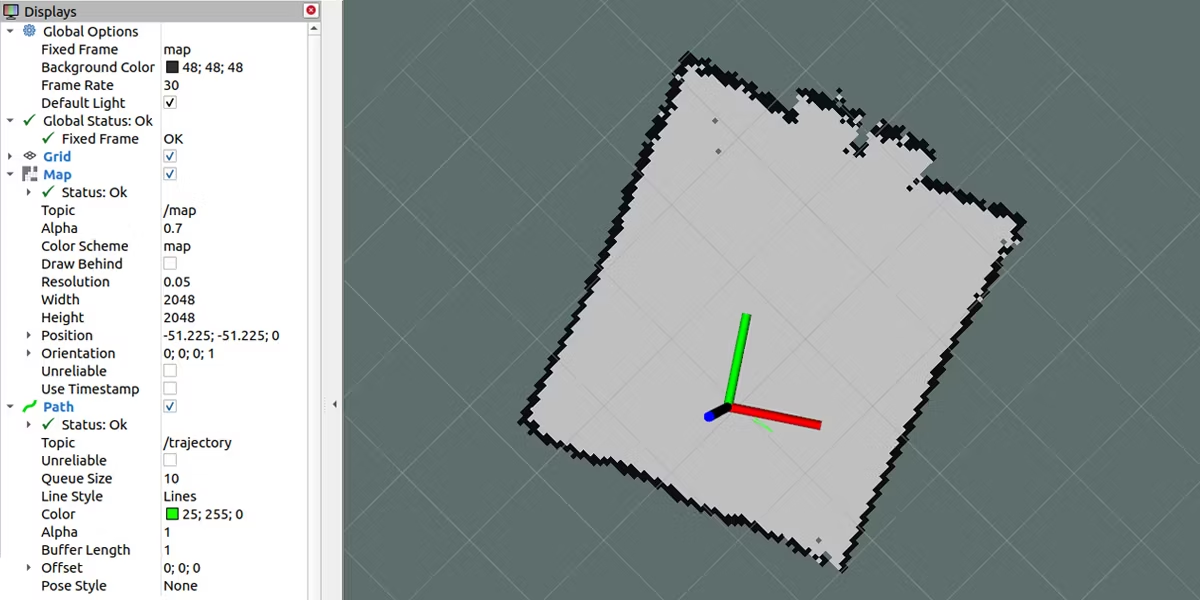

Like some other groups, we had been experiencing some difficulties in our setup of the Jetson Orin Nano. The previous week, we needed to perform a firmware update before the Jetson was compatible with our JetPack image flashed on our microSD. However, the firmware update required a different JetPack image than the one we installed, leading to display issues on the monitor. This week we successfully booted with our new flash image, downloaded ROS2 Humble onto the Jetson, and managed to get the RPLidar ROS2 package installed, which allowed us to collect and visualize data from our LIDAR.

LIDAR Data Stream

The LIDAR data stream we obtained does not arrive as a point cloud. Instead, each message is an array of ranges and intensities. The LIDAR measures a 360-degree sweep at around 10 Hz. Since the timestamps have nanosecond resolution, we can determine that the two measurements shown here are about 0.15 seconds apart. If we travel at our maximum desired straight-line speed of 0.1 m/s, our robot will have travelled 1 to 1.5 cm in this timeframe, which is something to consider for mapping purposes (this is where odometry comes into play!).

header:

stamp:

sec: 1730583058

nanosec: 349649947

frame_id: laser_frame

angle_min: -3.1241390705108643

angle_max: 3.1415927410125732

angle_increment: 0.005806980188935995

time_increment: 0.0001358969893772155

scan_time: 0.1466328650712967

range_min: 0.15000000596046448

range_max: 12.0

ranges:

- 4.815999984741211

- 4.76800012588501

- 4.76800012588501

- 4.76800012588501

- 4.760000228881836

- 4.760000228881836

- 4.760000228881836

- 4.751999855041504

- 4.74399995803833

- 4.736000061035156

- 4.71999979019165

header:

stamp:

sec: 1730583058

nanosec: 497179843

frame_id: laser_frame

angle_min: -3.1241390705108643

angle_max: 3.1415927410125732

angle_increment: 0.005806980188935995

time_increment: 0.00013578298967331648

scan_time: 0.14650984108448029

range_min: 0.15000000596046448

range_max: 12.0
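To make the numbers above concrete, here is a minimal sketch that converts one scan's `ranges` array into (x, y) points in the laser frame and computes the time between two message stamps. The field names match the message fields shown above; the function names are hypothetical, and plain Python values stand in for the ROS message so the example runs without ROS installed.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert a polar range array into (x, y) points in the laser frame."""
    points = []
    for i, r in enumerate(ranges):
        # Skip readings outside the sensor's valid window (also drops inf/nan).
        if not (range_min <= r <= range_max):
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def stamp_delta_seconds(first, second):
    """Seconds between two (sec, nanosec) stamps, using integer nanoseconds
    so the subtraction does not lose precision."""
    delta_ns = (second[0] - first[0]) * 10**9 + (second[1] - first[1])
    return delta_ns / 1e9


# Stamps copied from the two messages above.
dt = stamp_delta_seconds((1730583058, 349649947), (1730583058, 497179843))
travel_cm = 0.1 * dt * 100  # distance covered at 0.1 m/s, in centimetres
```

With these two stamps, `dt` is 0.147529896 s, so at 0.1 m/s the robot moves just under 1.5 cm between scans.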

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- I will use the data obtained to generate point clouds to work with the visualization and simulation software I’ve written. The output format for our graph will likely be CSV, with -1 for unmapped, 0 for mapped and not blocked by an obstacle, and 1 for an obstacle, with a granularity of 0.1 cm. This granularity should be fine enough to still capture roundness and diagonal walls with sufficient accuracy for path planning.

Nick’s Status Report for 10/26/24:

1. Accomplished This Week

- LiDAR: After researching how to use 2D LiDAR to map a room, I implemented a basic point cloud mapping algorithm using other people’s LiDAR data.

- Visualization Software: I started working on a user interface that allows the user to hover over a grid and select squares. The squares will represent the areas of the room that the robot will be assigned to clean. In later updates, this interface will store maps of all the rooms in the user’s house. How the user will tell the Roomba which room it is currently in is still an open problem.

2. Schedule Status

- On Schedule: Everything is on track according to Gantt Chart

3. Plans for Next Week

- LiDAR Mapping: I need to create some kind of algorithm for robot movement to ensure the robot can map an entire room. It will need to use the information recorded to evaluate which areas of the room are open. It’s ok for this step to have inefficiencies.

- Map Interpolation: I will convert the point cloud mapping into a 2d graph of 1’s and 0’s to match Kevin’s spec for path planning
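The map interpolation step could start from something like the sketch below, which marks every grid cell that contains at least one LiDAR point as 1 and leaves the rest as 0. This is only an illustration: the function name, the row/column layout, and the origin at the grid's lower corner are assumptions, and Kevin's actual spec may differ.

```python
def rasterize(points, cell_size, width_cells, height_cells):
    """Turn (x, y) points in metres into a grid of 1s (hit) and 0s (no hit).

    The grid origin is assumed to sit at (0, 0), with x mapped to columns
    and y mapped to rows.
    """
    grid = [[0] * width_cells for _ in range(height_cells)]
    for x, y in points:
        col = int(x // cell_size)
        row = int(y // cell_size)
        # Ignore points that fall outside the mapped area.
        if 0 <= row < height_cells and 0 <= col < width_cells:
            grid[row][col] = 1
    return grid
```

A real version would probably also need to fill small gaps between neighbouring hits so that walls come out as continuous lines.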

Nick’s Status Report for 10/12/24:

1. Accomplished This Week

- LiDAR: We focused on incorporating the LiDAR module with our Jetson Orin Nano. We needed a microSD to flash the Jetson image and then connect the LiDAR with the Jetson via USB. Then we worked on installing ROS on the Jetson.

2. Schedule Status

- Still Behind: Need more progress on visualization software. Once completed, it will speed up everything else, and if I can complete it early enough it will catapult us ahead of schedule

3. Plans for Next Week

- Continue Visualization Software. With edge grabbing completed, I’ll continue my work on converting point clouds into 2d maps.

- LiDAR Mapping: I will create a point cloud mapping of my room using LiDAR, first while stationary, then while moving to attempt to map the entire room. This test will involve manually moving the LiDAR around by hand, so there will be the additional obstruction of my body and my laptop.

Nick’s Status Report for 10/5/24:

1. Accomplished This Week

- LiDAR: We obtained our 2D LiDAR this week. I connected the LiDAR to my laptop to provide power and perform some rudimentary testing. I confirmed that the LiDAR data output format was compatible with the visualization software I wrote previously. Then, I wrote a script to perform basic range detection (treating it like I would an ultrasonic sensor). The basis of my testing was whether I could recognize that a paper towel roll (approximately 10 cm wide by 30 cm tall) was within a distance of 0.5 m. The rotation of the LiDAR made this nontrivial to test, since my laptop was connected to the LiDAR and was itself recognized as in range. To remedy this, I created a small stand to elevate both the LiDAR and the paper towel roll to a plane above my laptop. The distance check is still imprecise: the paper towel roll was still being detected at distances greater than 0.5 m. This is purely a software processing problem, as I expected the LiDAR to be able to detect objects >5 m away. Part of it comes from not wanting to overwork my laptop and throwing away too many data points during pruning. I will continue to work on this next week. If I am able to demonstrate mapping capabilities for a stationary LiDAR, this problem will be solved alongside it.

- Design Presentation: I rehearsed and presented our design slides on Wednesday. Still haven’t received feedback in Slack, but my general perception was positive. Most of the audience questions/gripes were about the scale of a model that we put in as a placeholder.
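The ultrasonic-style range check from the LiDAR item above could be sketched as follows. This is not the script I wrote, only an illustration under assumptions: the function name, the `sector` parameter (one way to ignore a known object such as the laptop), the 0.15 m minimum range, and the requirement of several consecutive hits to reject stray readings are all hypothetical choices.

```python
import math


def object_within(ranges, angle_min, angle_increment, threshold_m,
                  sector=(-math.pi, math.pi), range_min=0.15, min_hits=3):
    """Return True if min_hits consecutive beams inside the angular sector
    report a range between range_min and threshold_m."""
    hits = 0
    for i, r in enumerate(ranges):
        theta = angle_min + i * angle_increment
        in_sector = sector[0] <= theta <= sector[1]
        if in_sector and range_min <= r <= threshold_m:
            hits += 1
            if hits >= min_hits:
                return True
        else:
            hits = 0  # a miss breaks the run of consecutive hits
    return False
```

Requiring consecutive hits trades sensitivity for robustness: a narrow object far away may only intersect one or two beams, so `min_hits` would need tuning against the target's width and distance.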

2. Schedule Status

- Still Behind: Need more progress on visualization software. Once completed, it will speed up everything else, and if I can complete it early enough it will catapult us ahead of schedule

3. Plans for Next Week

- Continue Visualization Software. With edge grabbing completed, I’ll continue my work on converting point clouds into 2d maps.

- LiDAR Mapping: I will create a point cloud mapping of my room using LiDAR, first while stationary, then while moving to attempt to map the entire room. This test will involve manually moving the LiDAR around by hand, so there will be the additional obstruction of my body and my laptop.

Nick’s Status Report for 9/28/24:

1. Accomplished This Week

- Edge Grabbing Software: Using the library open3d, I processed my las point cloud files created by my simulation script and converted them into an o3d point cloud. Using o3d vectors, I performed edge detection in chunks, first finding normal vectors for small groups of vectors with a k-d tree, computing curvatures, then attempting to label edges beyond a certain curvature threshold.

- Ordering Supplies: We obtained our Jetson Orin Nano and placed our first order for the 2-D LiDAR, finalizing our design implementation.

- Design Presentation: I will be presenting the design review slides for our team the following week. I revamped the slides, created new visuals and diagrams including block diagrams and design trade studies. I also rehearsed my presentation.

2. Schedule Status

- Slightly Behind Schedule: I got slightly less work done than anticipated on the software side while preparing for the design presentation.

3. Plans for Next Week

- Continue Visualization Software. With edge grabbing completed, I’ll continue my work on converting point clouds into 2d maps.

- Lidar Testing. If the LIDAR arrives in the next week, I’ll work on integrating my simulation software with the LIDAR reading from a stationary position (plugged in to my laptop)

Nick’s Status Report for 9/21/24:

1. Accomplished This Week

- Simulation Software. Following Prof. Bain’s suggestions, I attempted various simulations of LIDAR point clouds to map a room. Point cloud output most frequently comes in the LAS (LASer) or LAZ (zipped LAS) format. Each data point encodes at minimum its (x, y, z) coordinates as well as the laser signal strength, which we can denote as A. Further information such as scan angle and scan direction can also be saved. This suggests that as long as we can obtain accurate odometry, we can use basic geometry to piece together separate LIDAR scans.
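The "basic geometry" for piecing scans together amounts to a 2D rigid transform per scan. A minimal sketch, assuming each scan comes with a pose (x, y, heading in radians) from odometry; the function names and the pose format are hypothetical.

```python
import math


def scan_to_world(points, pose):
    """Rotate and translate scan-frame (x, y) points into the world frame.

    pose: (x, y, heading) of the sensor in the world frame, heading in radians.
    """
    x0, y0, heading = pose
    c, s = math.cos(heading), math.sin(heading)
    return [(x0 + c * x - s * y, y0 + s * x + c * y) for x, y in points]


def merge_scans(scans):
    """Combine several (points, pose) pairs into one world-frame point list."""
    merged = []
    for points, pose in scans:
        merged.extend(scan_to_world(points, pose))
    return merged
```

Any error in the pose carries straight through to the merged map, which is why accurate odometry matters so much here.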

For my initial tests, I used Python’s numpy and laspy libraries to generate multiple iterations of an increasingly complicated room. I used CloudCompare, a free open-source program, solely for visualization. The first test randomly generated coordinates in a square. Then I created a rectangular room with approximately 300 m² of surface area, slowly adding basic obstacles, corridors, and alcoves.

The simulation took scans of radius < 7.5 m from a height of z = 0 (the surface), meaning each scan could capture at most ~50% of the surface area of the room, although it was usually less, since the center of the scan would often be close to a wall. To make the scans more realistic and simulate occlusion, I included angle binning, which sorted all the points from each scan by distance to the center, separated them into bins based on polar coordinates, and kept only the closest data points for each “angle bin”. I also threw in Gaussian noise and removed 10% of my dataset at random. With 9 separate scans, I achieved a decent match to the ground truth scan, accurately capturing the two pillars I placed and covering almost the entire room. However, each of my scans takes < 1 MB, while the ground truth scan was a 4 GB file. One reason for this is that my ground truth file filled in the geometry in three dimensions. With an actual LIDAR, we will need significant pruning of the data stream to ensure that our microcontroller does not run out of memory, assuming we will not be using the cloud for data processing due to latency issues.
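The angle binning step can be sketched roughly as below. This is a simplified illustration, not the simulation script itself: it keeps a single closest point per bin, works in 2D, and the function name, the default of 360 bins, and the handling of points exactly at the scan center are assumptions. The Gaussian noise and 10% random removal are left out.

```python
import math


def angle_bin_scan(points, center, n_bins=360, max_range=7.5):
    """Simulate occlusion: keep only the closest point in each angular bin.

    points: iterable of (x, y) ground-truth points.
    center: (x, y) position of the simulated scanner.
    """
    cx, cy = center
    closest = {}  # bin index -> (distance, point)
    for x, y in points:
        dx, dy = x - cx, y - cy
        dist = math.hypot(dx, dy)
        if dist == 0 or dist >= max_range:
            continue  # skip the scanner position and out-of-range points
        angle = math.atan2(dy, dx) % (2 * math.pi)
        b = int(angle / (2 * math.pi) * n_bins) % n_bins
        if b not in closest or dist < closest[b][0]:
            closest[b] = (dist, (x, y))
    # Return surviving points ordered by bin, i.e. by angle around the scanner.
    return [closest[b][1] for b in sorted(closest)]
```

Fewer bins give a coarser, smaller scan; more bins keep more detail but let points "behind" an obstacle slip through gaps between sparse samples.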

2. Schedule Status

- On Schedule: This week is on schedule.

3. Plans for Next Week

- Plans For Simulation and Visualization Software. In the following week I aim to further refine the simulations and increase the complexity of the geometry. I also want to write a toy program that will further reduce the file size and estimate a 2d outline for the room based on point cloud data. In the future, this would become a piece of software that would save point clouds for each room as interactive 2d maps.