Kevin’s Status Report for Dec 6. 2024

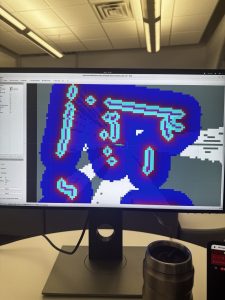

This week, Matthew and I finished the dynamic occupancy matrix from the LiDAR using Slam Toolbox. We worked on it throughout Thanksgiving break and the week back, since it refused to work for reasons we could not pin down, and we ended up having to incorporate another library, rf2o, for the LiDAR odometry. Once that was working, I got right to work on Nav2. I managed to get the costmap up this week, which was particularly difficult because we are using a custom robot rather than a TurtleBot. Here is a picture of it working!

Here are the unit tests and overall system tests we have carried out on the system so far:

- Algorithm speed – Our current A*-based algorithm is more than 30% faster than the basic DFS + Backtracking algorithm on our self-designed rooms.

- Motors going in a straight line – successful

- Controlling the motors through ROS 2 – successful

- Getting an accurate cost map that allows us to send navigation instructions to the Arduino – successful

As mentioned last week, now that the LiDAR and Nav2 are both set up, we can finally send command vectors to the Arduino and get everything working together.
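To make the "command vector" idea concrete, here is a minimal sketch of turning a velocity command (forward speed plus turn rate) into per-wheel speeds before handing them to the Arduino. It assumes a two-wheel differential-drive base, which the reports do not actually state, and the function name and `wheel_separation` parameter are hypothetical.

```python
def twist_to_wheel_speeds(linear, angular, wheel_separation):
    """Convert a body velocity command into (left, right) wheel speeds.

    Assumption: a differential-drive robot (not confirmed by the reports).
    linear           -- forward speed in m/s
    angular          -- turn rate in rad/s (positive = counter-clockwise)
    wheel_separation -- distance between the two wheels in metres
    """
    # Each wheel gains or loses half of the speed difference needed to turn.
    half_difference = angular * wheel_separation / 2.0
    left = linear - half_difference
    right = linear + half_difference
    return left, right
```

With zero turn rate both wheels get the same speed, which is the "motors going in a straight line" case; a pure rotation gives the wheels equal and opposite speeds.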

In terms of schedule, we are still behind but we are definitely catching back up. Hoping to make a comeback this weekend 🙏

Kevin’s Status Report for Nov 30. 2024

For the past two weeks, I have been struggling to get the LiDAR to correctly update the map in RViz using slam_toolbox on ROS 2 Humble. I’ve tried many different solutions, including using a Docker container to host Ubuntu 18.04 so I could use ROS 1 Melodic and Hector SLAM, but that unfortunately ran into visualization issues due to incompatible Nvidia drivers. Then I tried ROS 1 Noetic in an Ubuntu 20.04 Docker container, but that also ran into issues with Hector SLAM. Finally, I went back to slam_toolbox on ROS 2 Humble, but for some reason it doesn’t update the map even when I move the robot. I am currently looking into the issue, but I suspect that the LiDAR is not detecting enough change for it to update the map (?).

Without getting this first part done, it would be practically impossible for us to get Nav2 set up, which is what I plan on using to send velocity commands (cmd_vel) to the Arduino. This has taken us nearly a month and is severely delaying our progress. I have emailed Professor Ziad Youssfi to ask for his mentorship in solving this problem, because he teaches a class on autonomous robots that seems to use the same stack that we are using. Hopefully he can save us.

In terms of schedule, we really are just falling majorly behind on the integrations because of this LiDAR mapping thing. Everything else is already in order. If we can solve this bottleneck ASAP, things could work out.

I also made the final presentation as I will be the one presenting.

Kevin’s Status Report for Nov 17. 2024

This week, I did some intense research on how the pieces of the tech stack would communicate with each other, to further refine my path planning algorithm. Based on that, I implemented a simulated A* algorithm for the first SLAM phase, which I will load into Nav2 when we integrate SLAM with the robot. The stack that I think we would follow looks like this:

Motors <-> Arduino Uno <-> Jetson Orin Nano <-> Nav2 (generate velocity vector) <-> Nav2 Planner <-> RPLiDAR Occupancy Matrix <-> RPLiDAR Slam ToolKit

After the first pass, we could try something like the Boustrophedon Cellular Decomposition algorithm for coverage planning. However, if we want something more dynamic, we would go with D-star.

This week, I was able to simulate what SLAM would look like, using an A* algorithm for path planning and nearest-frontier search to decide which node to explore next. I managed to make a gif for what it would look like below:
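As a rough illustration of the nearest-frontier step, here is a minimal sketch, not the actual simulation code. It assumes a partially known grid where `1` is a free square, `0` is blocked, and `-1` is still unknown (the `-1` value and all function names are my own for this example). A frontier is a known free square that touches at least one unknown square, and a breadth-first search from the robot returns the closest one.

```python
from collections import deque

UNKNOWN, BLOCKED, FREE = -1, 0, 1  # cell states; UNKNOWN is an assumed extra value


def neighbors(grid, cell):
    """Yield the in-bounds 4-connected neighbours of a cell."""
    r, c = cell
    for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]):
            yield (nr, nc)


def is_frontier(grid, cell):
    """A frontier is a free cell with at least one unknown neighbour."""
    if grid[cell[0]][cell[1]] != FREE:
        return False
    return any(grid[nr][nc] == UNKNOWN for nr, nc in neighbors(grid, cell))


def nearest_frontier(grid, start):
    """Breadth-first search over known free cells; returns the closest
    frontier cell, or None when nothing is left to explore."""
    seen = {start}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if is_frontier(grid, cell):
            return cell
        for nxt in neighbors(grid, cell):
            if nxt not in seen and grid[nxt[0]][nxt[1]] == FREE:
                seen.add(nxt)
                queue.append(nxt)
    return None
```

The returned cell would then be handed to the path planner as the next goal; when the function returns `None`, the reachable part of the map has been fully explored.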

In terms of the schedule, I actually think we’re a little behind. However, if we’re able to configure Nav2 and the Arduino this week and get the robot moving by Friday using Nav2, we should be able to survive.

Kevin’s Status Report for Nov 10. 2024

This week, I worked on creating more simulations and algorithms for the path planning. I managed to get an A* algorithm to work by using the Manhattan distance to each “next square” that we’re supposed to go to. Right now, I am simulating a room that we’re slowly “discovering.” The main problem is that it is difficult to estimate how much we can discover from each square, since that will probably be highly variable. We should come up with a backup plan in case the initial mapping doesn’t work, such as manually scanning the room first.
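For reference, here is a minimal sketch of A* on a grid with a Manhattan-distance heuristic. It is not the simulation code itself: it assumes 4-connected movement with unit step cost on a grid where `1` is traversable and `0` is blocked, and the function names are chosen for this example.

```python
import heapq


def manhattan(a, b):
    """Manhattan distance between two (row, col) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar(grid, start, goal):
    """Return a shortest 4-connected path from start to goal as a list of
    cells, or None if the goal is unreachable. grid[r][c] == 1 is free."""
    rows, cols = len(grid), len(grid[0])
    # Heap entries are (estimated total cost, cost so far, cell).
    open_heap = [(manhattan(start, goal), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 1:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    priority = new_cost + manhattan(nxt, goal)
                    heapq.heappush(open_heap, (priority, new_cost, nxt))
    return None
```

Because every move costs 1 and diagonal moves are not allowed, the Manhattan distance never overestimates the remaining cost, so the returned path is a shortest one under these assumptions.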

In terms of schedule, we’re right on schedule! The robot, LiDAR, and path planning are all at decent spots. We’ll probably be able to get a nice version out by next weekend.

Kevin’s Status Report for Nov 3. 2024

This week, I worked with Nick to get ROS 2 and the LiDAR to work. We managed to get ROS 2 Humble to run on the Jetson. We had previously thought that we were going to use ROS 1 Melodic, but we ran into issues getting it to run. We then found out that Melodic was meant to be run on Ubuntu 18.04 rather than Ubuntu 22.04, which is the Linux distribution on our Jetson Orin Nano. Following that, we tried using a GitHub library to install ROS 2 Humble, but it overcomplicated things by using Docker containers, which we did not think we needed. In the end, we got ROS 2 to work by going through the ROS 2 documentation on the official website. After that, getting the RPLiDAR to work also cost us a lot of time. While trying to get the RPLiDAR to “launch,” we kept running into issues. We slowly worked through them and found a permission issue on the USB port, and later an issue with the baud rate that we were using. After this hard battle, we managed to get ROS and the LiDAR to work together and visualized the scan in RViz.
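For anyone hitting the same USB permission problem, here is a small sketch of how the port could be checked before launching the driver. The function name and return strings are hypothetical, and the device path in the usage note is only a typical example, not necessarily the one on our Jetson.

```python
import os


def diagnose_serial_port(path):
    """Report whether the current user can open a serial device.

    Returns "missing" if the device node does not exist,
    "no_permission" if it exists but is not readable and writable,
    and "ok" otherwise.
    """
    if not os.path.exists(path):
        return "missing"
    if not os.access(path, os.R_OK | os.W_OK):
        return "no_permission"
    return "ok"
```

Calling `diagnose_serial_port("/dev/ttyUSB0")` before launching would distinguish an unplugged device from a permissions problem. On Ubuntu, a "no_permission" result is commonly resolved by adding the user to the group that owns the device (often `dialout`), though the exact fix depends on the setup. This check says nothing about the baud rate, which has to match what the specific LiDAR model expects.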

Here is an image I took of Nick looking at the visualization in RViz!

One potential problem that we may run into is the minimum range of the LiDAR. We found that if an object is too close to the LiDAR, it cannot be detected. However, this might be okay, because the radius that cannot be detected seems to be about the radius of our robot body. We therefore need to mount the LiDAR in the middle of the robot rather than at one edge.

In terms of schedule, I think we have caught right back up to it and everything is looking good! I believe Nick is almost done making a map with the point cloud data, and my path planning algorithm is also pretty much done. The next step would be mainly optimization and just integration.

Kevin’s Status Report for Oct 26. 2024

This week, I personally managed to get the Jetson to work by upgrading the firmware and flashing the SD card with the right version of JetPack. Getting it to connect to the Wi-Fi was a big issue, but we managed to solve it. After that, I decided to work on a simulated path finding algorithm, as Professor Bain had suggested. I have attached a gif that I generated for a room with a table, to show how a robot would potentially go about the room. I used a DFS + Backtracking coverage algorithm, as Professor Bain had suggested, but I personally think that it is too slow and can be further optimized.

I also decided how we should go about representing the graph: using 1s and 0s, where a 1 is a traversable grid square and a 0 is a non-traversable grid square. Below is an example:
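The following is an illustrative grid in this encoding (not the original figure), together with a minimal sketch of DFS + backtracking coverage over it. The room layout, the neighbour order, and the function name are all assumptions made for this example.

```python
# 1 = traversable square, 0 = non-traversable (here, a table in the middle).
ROOM = [
    [1, 1, 1],
    [1, 0, 1],
    [1, 1, 1],
]


def dfs_coverage(grid, start):
    """Return every square the robot occupies, in order, while covering all
    reachable squares with depth-first search. Backtracking moves are
    included, since the physical robot has to drive back through them."""
    rows, cols = len(grid), len(grid[0])
    visited = {start}
    route = [start]
    stack = [start]
    while stack:
        r, c = stack[-1]
        # Try right, down, left, up; take the first unvisited free square.
        for nxt in ((r, c + 1), (r + 1, c), (r, c - 1), (r - 1, c)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 1 and nxt not in visited):
                visited.add(nxt)
                stack.append(nxt)
                route.append(nxt)
                break
        else:
            # Dead end: step back to the previous square on the stack.
            stack.pop()
            if stack:
                route.append(stack[-1])
    return route
```

Calling `dfs_coverage(ROOM, (0, 0))` covers all 8 free squares, but the route also retraces its steps on the way back, which is the kind of wasted travel that makes this approach feel slow.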

A potential issue that this simulation uncovered is that our robot cannot be too small. If the grid becomes much larger than 20×20, an inefficient algorithm could take the robot a significant amount of time to traverse the entire room. We had originally thought of a smaller form factor, but now I think we should pick a slightly bigger size.

My progress is currently on schedule. I think I just need to implement intermediary path finding between each square to find the most efficient way to visit unvisited squares, while still keeping much of the coverage algorithm the same.

I hope to develop a more efficient path coverage algorithm by next week and succeed in making a room map out of the point cloud from our LiDAR.

Kevin’s Status Report for Oct 20. 2024

This week, we got the parts needed for the Jetson (SD card + internet adapter). We finally got the Jetson to turn on after finding out that the monitor at my house was not the right dimensions (?) and deciding to try it on the ECE lab clusters. We did, however, run into an issue where the UEFI version was not what was expected: we were expecting 36.xx but it was 3.xx. As a result, I have reflashed the SD card with an older version of JetPack and am currently re-installing ROS on our Jetson.

In terms of schedule, if I am able to successfully get some path searching algorithm done, we should be on schedule. Hopefully we can get the LiDAR completely up and running.

Kevin’s Status Report for Oct 6. 2024

This week, we got the LiDAR and we are planning to meet up tomorrow to test it. I spent a lot of time looking into existing implementations that use the LiDAR in the catalog and found a couple of YouTube videos that we could reference while building our product.

In terms of schedule, we are slowly catching back up so it’s all good.

Kevin’s Status Report for Sep 29. 2024

This week, I was sick and had to make a business trip to India (currently on the flight back), and therefore was unable to make much progress.

In terms of schedule, we are falling behind our Gantt chart, but I believe we can easily make back the progress if we really focus next week. We’ve made a purchase order for a 2D LiDAR, which means I’ll be able to start playing with it next week and hopefully get a working piece of software up by next weekend.

Kevin’s Status Report for Sep 21. 2024

This week, I mainly looked more in-depth into the potential LiDAR libraries that we can use that are compatible with the LiDAR that we are looking at (UniTree L1 LiDAR).

Here is the link to the repo that I have found: https://github.com/unitreerobotics/point_lio_unilidar?tab=readme-ov-file

I’ve played around with the sample point cloud data in the repository and discussed with Matthew the idea of using IMUs on our robot, since that seems to be something that could massively enhance the efficacy of the LiDAR.

In terms of progress, I believe that we are on schedule. I need to get a working sample by tomorrow so we can submit a purchase request for the LiDAR before Tuesday so that the purchase can be made. The main deliverable that I hope to complete in the next week is to get the LiDAR and get a rudimentary mapping of a small room.