Team Status Report for 12/7

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

- Bluetooth Low Energy (BLE) Reliability: While BLE integration is functional, the reliability of the communication in different environments and during prolonged use needs further validation.

- User Feedback Stability: Noisy model output could make the bracelet buzz too frequently. To manage this, Noah implemented averaging over 3 seconds to stabilize emotional feedback, and Mason introduced a Kalman filter to reduce noise in the model output.

- Demo Room Setup: Mason has tested the Jetson with Ethernet in the demo room and confirmed functionality. As a contingency, offline operation using pre-loaded models and configurations is ready.

2. Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- Averaging for Emotion Feedback: Noah added a 3-second averaging mechanism to the model output to stabilize emotional transitions and reduce frequent buzzing in the bracelet.

- Kalman Filter Implementation: Mason integrated a Kalman filter into the system to reduce noise and unnecessary state transitions in the model’s output.

- BLE Integration via USB Dongle: Kapil and Mason discovered that the Jetson does not natively support Bluetooth, requiring the use of a USB Bluetooth adapter.

3. Provide an updated schedule if changes have occurred:

- The project is on track overall.

4. List all unit tests and overall system tests carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.

Unit Tests:

- BLE Communication Testing (Kapil): Tested the latency and stability of BLE communication between the Jetson and the Adafruit Feather. Verified that latency is within acceptable limits and that commands are transmitted accurately.

- Haptic Feedback Regulation (Noah): Added averaging for emotional feedback, stabilizing the output and reducing frequent buzzing. Verified that emotional transitions occur only when the model is confident.

- Kalman Filter Integration (Mason): Tested the Kalman filter on the model’s output to reduce noise. Found that it significantly improved stability in feedback transitions but requires further tuning for video input.

Overall System Tests:

- User Testing: Conducted user tests with three participants to assess the usability and practicality of the system. Feedback was positive, noting that the system is intuitive and functional, with suggestions for minor improvements in feedback timing and UI clarity.

Findings and Design Changes:

- Feedback Stabilization: Averaging and Kalman filtering significantly improved feedback quality, reducing noise and unnecessary transitions. These features will remain in the final design.

- BLE Integration: The USB dongle resolved the Bluetooth issue, enabling seamless communication without major redesigns or delays.

Noah’s Status Report for 12/7

Given that integration into our entire system went well, I spent the last week tidying things up and getting some user reviews. The following details some of the tasks I did this past week and over the break:


- Added averaging of the detected emotions over the past 3 seconds

- Decreases frequent buzzing in the bracelet

- Emotions are much more regulated and only change when the model is sufficiently confident.

- Coordinated time with some friends to use our system and got user feedback

- Feedback was generally positive, given that this is only a first iteration

- Started working on the poster and taking pictures of our final system.

- Waiting on the bracelet’s completion, but it’s not a blocker.

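A minimal sketch of the 3-second averaging, assuming the model emits one dictionary of class probabilities per frame and that the window is measured in seconds rather than frames (the report doesn't say which). `EmotionAverager` and its parameters are hypothetical names for illustration, not the project's actual code.

```python
import time
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class EmotionAverager:
    """Averages per-frame class probabilities over a trailing time window.

    Hypothetical helper for illustration; the window defaults to 3 seconds.
    """

    def __init__(self, window_s: float = 3.0) -> None:
        self.window_s = window_s
        self._samples: Deque[Tuple[float, Dict[str, float]]] = deque()

    def add(self, probs: Dict[str, float], t: Optional[float] = None) -> Dict[str, float]:
        """Record one frame's probabilities and return the mean over the window."""
        now = time.monotonic() if t is None else t
        self._samples.append((now, dict(probs)))
        # Evict frames older than the window; the newest frame is always kept.
        while now - self._samples[0][0] > self.window_s:
            self._samples.popleft()
        totals: Dict[str, float] = {}
        for _, frame in self._samples:
            for label, p in frame.items():
                totals[label] = totals.get(label, 0.0) + p
        n = len(self._samples)
        return {label: s / n for label, s in totals.items()}


if __name__ == "__main__":
    avg = EmotionAverager(window_s=3.0)
    avg.add({"happy": 0.9, "neutral": 0.1}, t=0.0)
    avg.add({"happy": 0.2, "neutral": 0.8}, t=1.0)  # one noisy frame
    print(avg.add({"happy": 0.9, "neutral": 0.1}, t=2.0))
```

A single outlier frame is diluted by the other frames in the window, so it no longer flips the output on its own; a longer window smooths more at the cost of reacting more slowly to real changes.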

List all unit tests and overall system tests carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.


I am done with my part of the project and will mainly be working on the reports going forward.

Goals for next week:

- Finish the poster, final report, and presentation!

Kapil’s Status Report for 12/7

1. What did you personally accomplish this week on the project?

- I coded the entire Bluetooth Low Energy (BLE) functionality on the Adafruit Feather, ensuring it can communicate effectively as part of the system.

- Mason and I began integrating BLE with the Jetson. We discovered that the Jetson does not natively support Bluetooth, but I resolved this issue by using a USB Bluetooth adapter. After configuring it, I successfully got BLE to work between the Jetson and the Adafruit Feather.

- I obtained straps for the bracelet and 3D printed a prototype of the enclosure design. This prototype will house all the components and provide the structure needed for the final version.

2. Is your progress on schedule or behind?

- My schedule is on track. BLE integration and the 3D printed prototype are significant milestones, and I am progressing as planned toward final assembly and testing.

3. What deliverables do you hope to complete in the next week?

- Integrate all bracelet components inside the 3D printed enclosure. This will involve resoldering the connections to fit the prototype and ensuring everything is securely housed.

- Glue the straps onto the 3D printed enclosure to finalize the wearable structure.

Mason’s Status Report for 12/7

I spent the majority of this week working on the Kalman filter for our model output, conducting tests, and working on our upcoming deadlines. Here is what I did this past week:


- Made and integrated Kalman filter

- I worked independently on writing Kalman filter code for the model output

- The goal of the system is to reduce noise associated with model output

- The system achieves the desired effect of reducing unnecessary state transitions and reducing noise

- Still needs to be tuned for video and final demo

- Set up and tested system in demo room

- Made sure that the Jetson can be set up on Ethernet in our demo room

- Began work on poster and video

- Added poster components related to the web app subsystem

- Conducted user tests with 3 people

- Had them use the system and give feedback on the UI and system usability/practicality

- Worked with Kapil on Jetson BLE integration. Researched a few options and ultimately found a suitable USB dongle for Bluetooth.

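One way a Kalman filter can be applied to the model output, sketched under assumptions: each emotion's probability is treated as an independent scalar state following a random walk, and the model's per-frame probabilities are noisy measurements of it. The class name and the noise values `q` and `r` are hypothetical placeholders that would need the tuning mentioned above; the report doesn't describe Mason's actual state model. It is written in plain Python for readability, though the same arithmetic vectorizes directly in numpy.

```python
from typing import List


class ProbabilityKalmanFilter:
    """Independent scalar Kalman filters, one per emotion class (illustrative sketch).

    Assumed model: x_k = x_{k-1} + w_k, Var(w_k) = q  (true probability drifts slowly)
                   z_k = x_k + v_k,     Var(v_k) = r  (model output is a noisy reading)
    """

    def __init__(self, n_classes: int, q: float = 1e-3, r: float = 5e-2) -> None:
        self.q = q
        self.r = r
        self.x = [1.0 / n_classes] * n_classes  # start from a uniform estimate
        self.p = [1.0] * n_classes              # large initial uncertainty

    def update(self, z: List[float]) -> List[float]:
        """Fuse one frame of class probabilities and return the filtered distribution."""
        if len(z) != len(self.x):
            raise ValueError("expected one probability per class")
        for i, zi in enumerate(z):
            p_pred = self.p[i] + self.q          # predict: uncertainty grows by q
            k = p_pred / (p_pred + self.r)       # Kalman gain, in [0, 1) when r > 0
            self.x[i] += k * (zi - self.x[i])    # move estimate toward the reading
            self.p[i] = (1.0 - k) * p_pred       # uncertainty shrinks after the update
        # Clip negatives and renormalise so callers always receive a distribution.
        clipped = [max(v, 0.0) for v in self.x]
        total = sum(clipped) or 1.0
        return [v / total for v in clipped]


if __name__ == "__main__":
    kf = ProbabilityKalmanFilter(n_classes=2)
    for _ in range(30):
        kf.update([0.9, 0.1])         # steady reading
    print(kf.update([0.1, 0.9]))      # a one-frame spike is damped
```

A smaller `q` relative to `r` gives a smaller gain and smoother, slower-reacting output; raising `q` lets genuine emotion changes through sooner. That trade-off is what tuning for the video and demo would adjust.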

Goals for next week:

- Tune Kalman filter

- Further prepare system for video and demo

- Finish final deadlines and objectives

Mason’s Status Report for 11/30

This week, I focused on preparation for the final presentation, testing, and output control ideation. Here’s what I accomplished:

This week’s progress:

- Testing

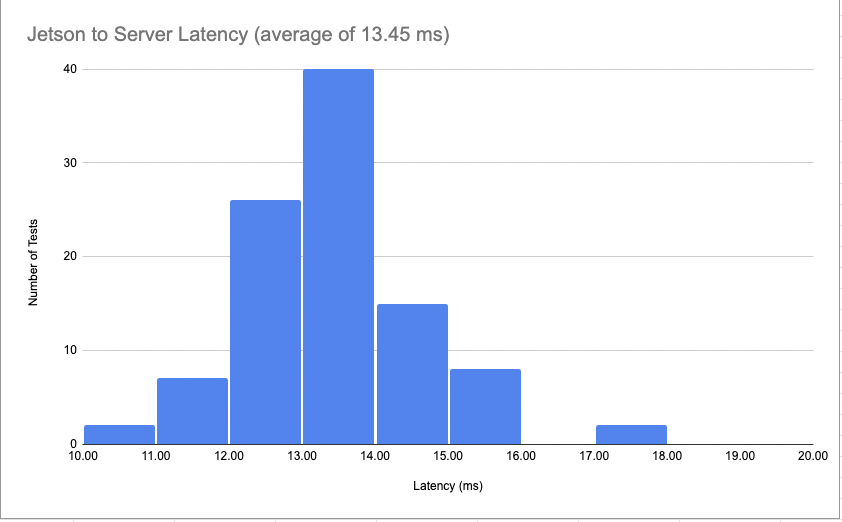

- Ran tests on API latency using Python test automation.

- Found that the API speed is well within our latency requirement (in good network conditions).

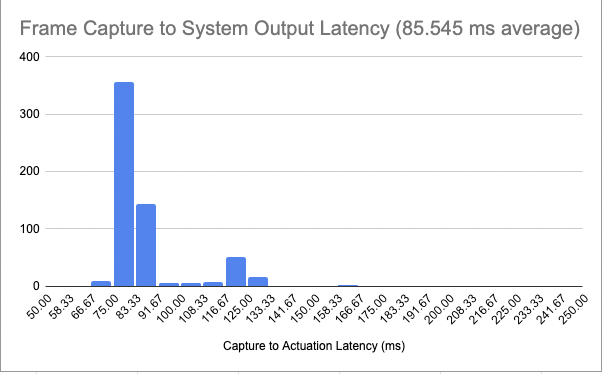

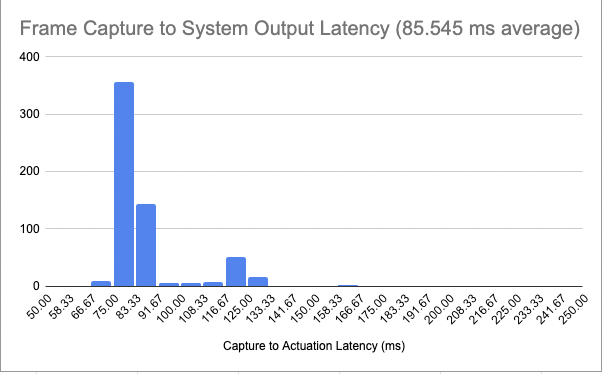

- Ran latency tests on the integrated system, capturing the time between image capture and system output.

- Found that our overall system is also well within our latency requirement of 350 ms, in fact operating consistently under 200 ms.

- Wrote out user testing feedback form for user testing next week.

- Final presentation

- Worked on our final presentation, particularly the testing, tradeoff, and system solution sections.

- Wrote notes and rehearsed the presentation in the lead-up to class.

- Control and confidence score ideation

- In an effort to improve the efficacy of our system’s confidence score, I decided to integrate a control system.

- I plan to use a Kalman filter to regulate the display of the system output in order to account for the noise present in the system output.

- Using the model’s output probabilities, I will try to analyze the output and make a noise-adjusted likelihood estimate.

- I will implement this with numpy on the Jetson side of the system and update the API accordingly.
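The plan above can be written out as a scalar Kalman filter per emotion class. This is a sketch under the assumption of a random-walk state model, where $x_k$ is the underlying probability of one emotion at frame $k$, $z_k$ is the model's output for that class, and $Q$ and $R$ are the process and measurement noise variances; the report doesn't specify the state model Mason intended.

$$
\begin{aligned}
x_k &= x_{k-1} + w_k, & w_k &\sim \mathcal{N}(0, Q) \\
z_k &= x_k + v_k, & v_k &\sim \mathcal{N}(0, R) \\[4pt]
\hat{x}_k^- &= \hat{x}_{k-1}, & P_k^- &= P_{k-1} + Q \\
K_k &= \frac{P_k^-}{P_k^- + R} \\
\hat{x}_k &= \hat{x}_k^- + K_k \left( z_k - \hat{x}_k^- \right), & P_k &= (1 - K_k)\, P_k^-
\end{aligned}
$$

Since $0 \le K_k < 1$ whenever $R > 0$, each estimate is a weighted blend of the previous estimate and the new reading; when $Q \ll R$ the gain stays small, so a single noisy frame moves $\hat{x}_k$ only slightly.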

Goals for Next Week:

- Implement the Kalman filter on the Jetson.

- Assist Kapil with BLE Jetson integration for the Adafruit bracelet.

- Continue user testing and update the UI based on the feedback.

- Work on poster and finalize system presentation for final demo.

I’d say we’re on track for final demo and final assignments.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

For the web app development, I’ve had to learn how to optimize UIs for low latency, reading the jQuery docs to figure out how best to integrate AJAX to dynamically update the system output. I had to make a lightweight interface and build an API fast enough to provide real-time feedback, for which I read the Requests (HTTP for Humans) docs. I read some forum posts on fast APIs in Python and watched a couple of development videos of people building fast APIs for their own applications in Python. For the system integration and Jetson configuration, I watched multiple videos on flashing the OS and read forum posts and docs from NVIDIA. I also consulted LLMs on how to handle bugs with our system integrations and communication protocols. The main strategies and technologies I used were forums (Stack Overflow, NVIDIA), docs (Requests, jQuery, NVIDIA), and YouTube videos (independent content creators).

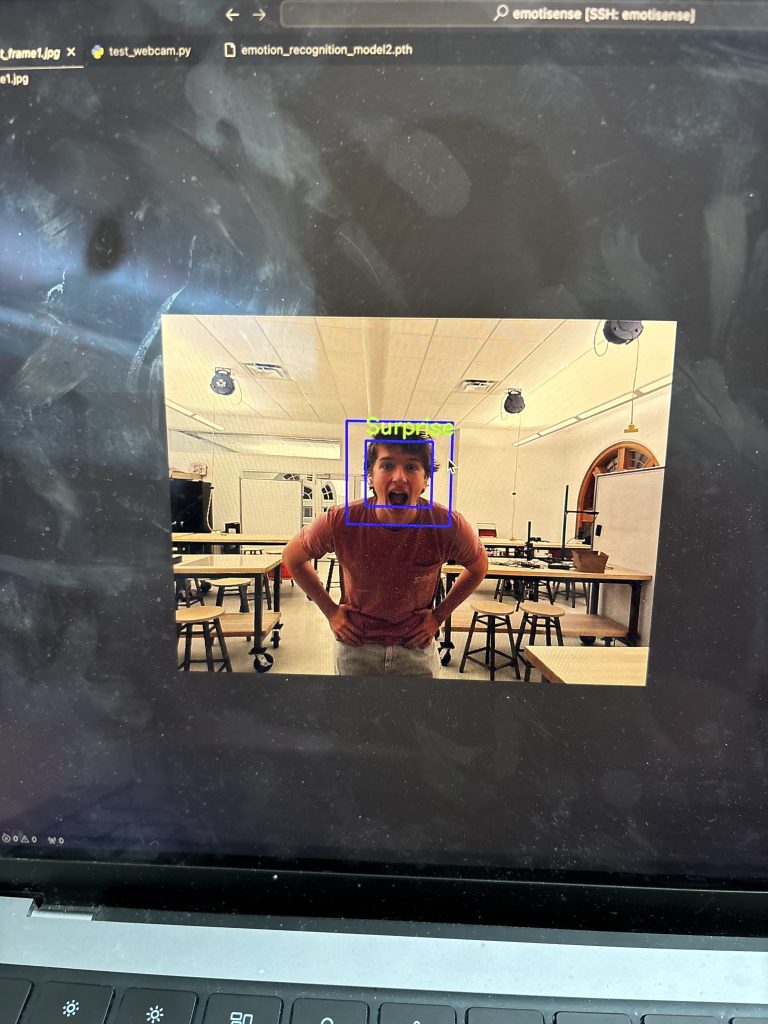

Images:

Kapil’s Status Report for 11/30

1. What did you personally accomplish this week on the project?

- For the demo, integrated the bracelet with the NVIDIA Jetson, ensuring that the entire setup functions as intended.

- Inquired at TechSpark to gather more information about the 3D printing process and requirements

- The base of the bracelet can be printed at TechSpark; however, the flexible straps require external printing.

- Downloaded the new Bluetooth Low Energy libraries onto the Adafruit Feather

- CircuitPython is not compatible with these libraries and requires an alternative method for pushing code. Exploring best approach to resolve this issue.

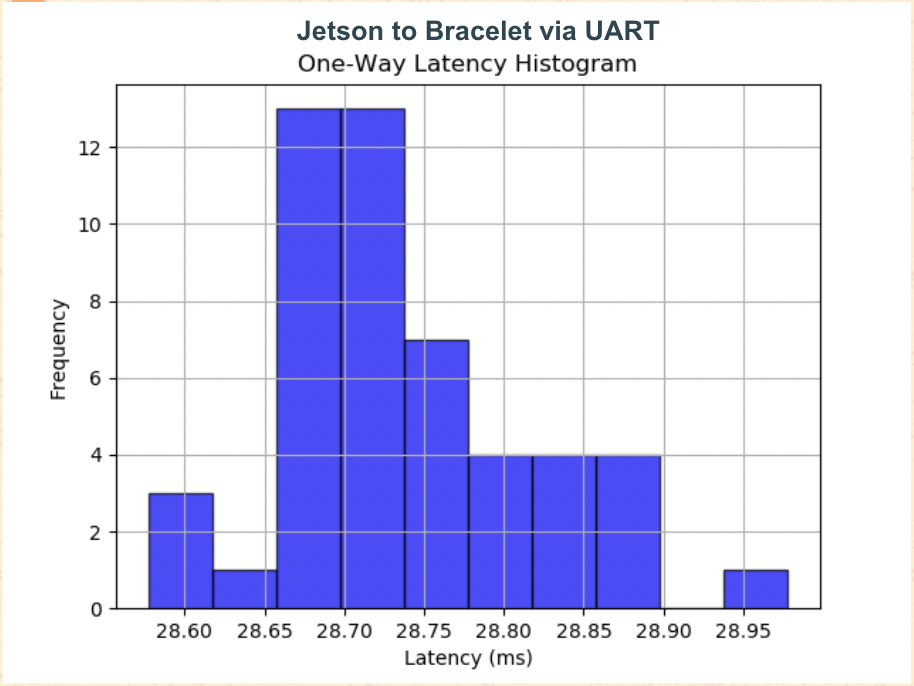

- Tested and validated UART communication latency.

- Measured latency was approximately 30 ms, which is significantly below the 150 ms requirement.

- Worked on the final presentation for the project
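The round-trip latency checks in these reports could be scripted along the lines of the sketch below. It assumes a hypothetical `send_and_wait` callable that sends one command and blocks until the reply or acknowledgment arrives; the report doesn't describe how the round trip was actually timed, and the helper names are illustrative. A simple index-based 95th percentile is reported alongside the mean so that occasional slow round trips stay visible.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(send_and_wait: Callable[[], None], trials: int = 100) -> Dict[str, float]:
    """Time `trials` blocking round trips and summarise them in milliseconds."""
    if trials < 1:
        raise ValueError("trials must be at least 1")
    samples: List[float] = []
    for _ in range(trials):
        start = time.perf_counter()
        send_and_wait()  # hypothetical: send one command, block until the reply arrives
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95 = samples[min(len(samples) - 1, int(0.95 * len(samples)))]  # simple index-based p95
    return {"mean": statistics.fmean(samples), "p95": p95, "max": samples[-1]}


def meets_requirement(stats: Dict[str, float], limit_ms: float, key: str = "p95") -> bool:
    """True if the chosen statistic is within the latency requirement."""
    return stats[key] <= limit_ms


if __name__ == "__main__":
    # Stand-in round trip using sleep; replace with the real UART or BLE exchange.
    stats = measure_latency_ms(lambda: time.sleep(0.01), trials=20)
    print(stats, meets_requirement(stats, limit_ms=150.0))
```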

2. Is your progress on schedule or behind?

- My progress is on schedule. The successful demo and latency validation ensure that the system is performing as required. I’ve also laid the groundwork for the remaining tasks, including Bluetooth integration and 3D printing.

3. What deliverables do you hope to complete in the next week?

- Implement Bluetooth Low Energy (BLE) integration to replace UART communication, ensuring seamless wireless connectivity.

- Begin printing the 3D enclosure, ensuring it securely houses all components while maintaining usability

Noah’s Status Report for 11/30

Given that integration into our entire system went well, I spent the last week tidying things up and getting some user reviews. The following details some of the tasks I did this past week and over the break:

- We decided to reduce the emotion count from 6 to 4. This was because surprise and disgust had much lower accuracy than the other emotions. Additionally, replicating and presenting a disgusted or surprised face is pretty difficult and these faces come up pretty rarely over the course of a typical conversation.

- Increased the threshold to present an emotion.

- Assume a neutral state if not confident in the emotion detected.

- Added a function to output a new state indicating if a face is even present within the bounds.

- Updates website to indicate if no face is found.

- Also helps for debugging purposes.


- Did some more research on how to move our model to ONNX and TensorFlow, which might speed up computation and allow us to load a more complex model.

- During the break, I spoke to a few of my autistic brother’s teachers from my high school about what they thought of the project and what could be better.

- Overall, they really liked and appreciated the project and liked the easy integration with the iPads already present in the school.
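The confidence threshold, neutral fallback, and no-face state described above amount to a small decision step after the model. A minimal sketch, assuming the model returns a mapping of class probabilities (or nothing when no face is detected); the threshold of 0.6 and the class and state names are hypothetical, since the report doesn't give the actual threshold or list the four retained emotions.

```python
from typing import Mapping, Optional

NO_FACE = "no_face"  # hypothetical label for the "no face in frame" state
NEUTRAL = "neutral"  # state shown when the model is not confident


def select_state(probs: Optional[Mapping[str, float]], threshold: float = 0.6) -> str:
    """Map one set of class probabilities to the state sent to the website and bracelet."""
    if not probs:  # None or empty: no face was found within the bounds
        return NO_FACE
    label, confidence = max(probs.items(), key=lambda item: item[1])
    if confidence < threshold:
        return NEUTRAL  # below threshold: assume neutral rather than guess
    return label


if __name__ == "__main__":
    print(select_state({"happy": 0.82, "sad": 0.10, "angry": 0.08}))
    print(select_state({"happy": 0.45, "sad": 0.40, "angry": 0.15}))
    print(select_state(None))
```

Raising `threshold` trades responsiveness for fewer spurious emotion changes, which matches the motivation for increasing it.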

I am ahead of schedule, and my part of the project is done for the most part. I’ve hit MVP and will continue making slight improvements where I see fit.

Goals for next week:

- Add averaging of the detected emotions over the past 3 seconds which should increase the confidence in our predictions.

- Keep looking into converting the CV model to ONNX, an open model format that can be optimized for running on the Jetson; this would lower latency and allow us to use our slightly better model.

- Help Mason conduct some more user tests and get feedback so that we can make small changes.

- Writing the final report and getting ready for the final presentation.

Additional Question: What new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

- The main tools I needed to learn for this project were PyTorch, CUDA, ONNX, and the Jetson.

- I already had a lot of experience in PyTorch; however, each new model I make teaches me new things. For emotion recognition models, I watched a few YouTube videos to get a sense of how existing models work.

- Additionally, I read a few research papers to get a sense of what top-of-the-line models are doing.

- For CUDA and Jetson, I relied mostly on the existing NVIDIA and Jetson documentation as well as ChatGPT to help me pick compatible software versions.

- Public forums are also super helpful here.

- Learning ONNX has been much more difficult, and I’ve mainly looked at the existing documentation. I imagine that ChatGPT and YouTube might be pretty helpful here going forward.


Team Status Report 11/30

- What are the most significant risks that could jeopardize the success of the project?

- BLE Integration: Transitioning from UART to BLE has been challenging. While UART meets latency requirements and works well for now, our BLE implementation has revealed issues with maintaining a stable connection. Finishing this in time for the demo is critical to reaching our fully fledged system.

- Limited User Testing: Due to time constraints, the scope of user testing has been narrower than anticipated. This limits the amount of user feedback we can incorporate before the final demo. However, we are fairly satisfied with the system given the feedback we have received so far. The bracelet will be a major component of this testing, though.

- Were any changes made to the existing design of the system?

- We planned to add a Kalman filter to smooth the emotion detection output and improve the reliability of the confidence score. This will reduce the impact of noise and fluctuations in the model’s predictions. If this does not work, we will instead use a rolling average.

- Updated the web interface to indicate when no face is detected, improving user experience and accuracy of the output.

- Updated schedule if changes have occurred:

We remain on schedule outside of bracelet delays and have addressed challenges effectively to ensure a successful demo. Final user testing and BLE integration are prioritized for the upcoming week.

Testing results:

Model output from Jetson to both Adafruit and Webserver:

Team’s Status Report 11/16


1. What are the most significant risks that could jeopardize the success of the project?

- Jetson Latency: Once the model hit our accuracy threshold of 70%, we had planned to keep improving it as time allowed. However, upon system integration, we recognized that the Jetson cannot run a larger model within our latency requirements. This is acceptable, but it does limit the potential of our project while running on this Jetson model.

- Campus network connectivity: We identified that the Adafruit Feather connects reliably on most WiFi networks but encounters issues on CMU’s network due to its security settings. We still need to tackle this issue, as we are currently relying on a wired connection between the Jetson and bracelet.

2. Were any changes made to the existing design of the system?

- We have decided to remove surprise and disgust from the emotion detection model, as replicating a disgusted or surprised face has proven too challenging. We had considered this at the beginning of the project, given that these emotions were the two least important; however, only through user testing did we recognize that they are too inaccurate.

3. Provide an updated schedule if changes have occurred:

We remain on schedule and were able to overcome any challenges allowing us to be ready for a successful demo.

Documentation:

- We don’t have pictures of our full system running; however, this can be seen in our demos this week. We will include pictures next week when we have cleaned up the system and integration.

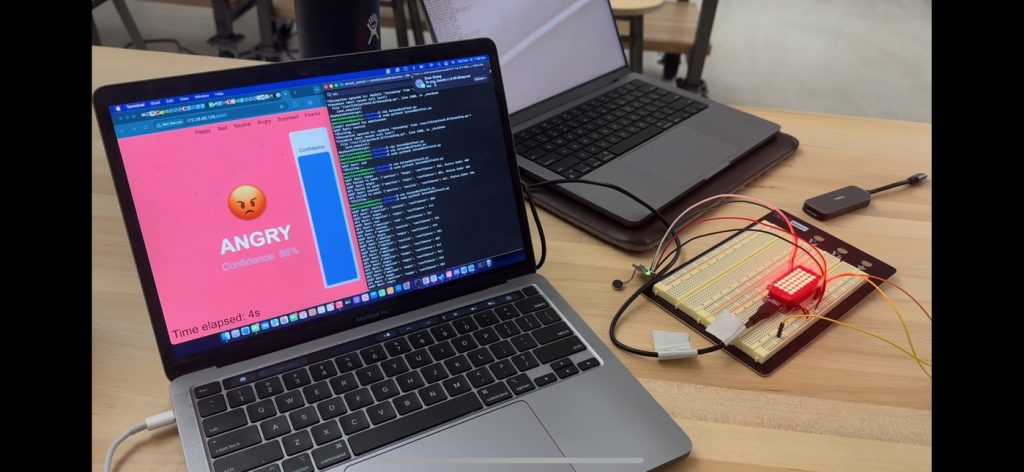

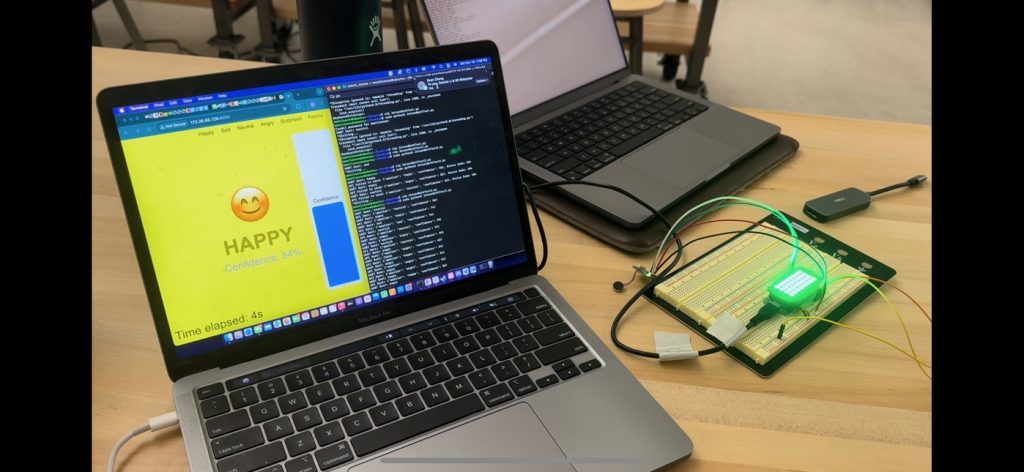

Model output coming from Jetson to both Adafruit and Webserver:

Model output running on Jetson (shown via SSH):