1. What did you personally accomplish this week on the project?

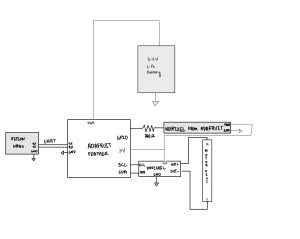

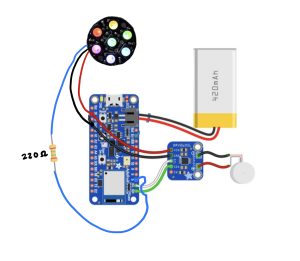

- This week, I assembled the preliminary circuit for the bracelet, bringing together the main components to start testing their functionality as a system. I successfully got the haptic feedback motor to operate with different vibration modes, confirming that the motor can provide the varied feedback required by the design.

- During testing, I identified an issue with the NeoPixel’s power supply. The NeoPixel requires 5V for both power and the data line, but the Adafruit Feather supplies only 3.3V. Although we initially thought this voltage difference wouldn’t affect performance, the NeoPixel behaved unexpectedly in testing. To address this, I ordered a part designed to handle the voltage mismatch so that the NeoPixel operates consistently.

https://www.adafruit.com/product/2945
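As a rough sanity check on why 3.3V may be marginal, the sketch below compares the Feather's 3.3V data signal against the minimum logic-high voltage of a 5V-powered pixel. It assumes the commonly cited WS2812-family threshold of 0.7 × VDD. That figure is an assumption, since the exact NeoPixel variant and its datasheet are not pinned down here, and the function names are illustrative only.

```python
# Assumption: WS2812-family datasheets commonly specify the minimum
# logic-high input voltage as 0.7 * VDD. Check the datasheet of the
# actual part before relying on this number.
VIH_FRACTION = 0.7


def min_logic_high(vdd: float) -> float:
    """Smallest data voltage the pixel should read as a logic high."""
    return VIH_FRACTION * vdd


def data_margin(vdd: float, logic_high: float) -> float:
    """Positive margin means the data signal clears the threshold."""
    return logic_high - min_logic_high(vdd)


# Pixel powered at 5V, driven by a 3.3V data line.
margin = data_margin(vdd=5.0, logic_high=3.3)
print(f"Margin at 5V supply: {margin:+.2f} V")
```

A negative margin means the 3.3V signal sits below the assumed threshold, which would be consistent with unreliable behavior, though it does not by itself prove that this was the cause.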

- While waiting for this part, I’m finalizing the 3D printed enclosure design so it will be ready for printing. I also plan to begin establishing the communication protocol between the Adafruit Feather and the Jetson, which will be crucial for our system’s data flow.
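The Feather-Jetson protocol is not designed yet, so the following is only a sketch of one possible starting point: a minimal byte frame with a start marker, a length byte, the payload, and an XOR checksum. The frame layout, the `START_BYTE` value, and the function names are all hypothetical and not a decision the team has made.

```python
START_BYTE = 0xAA  # hypothetical frame marker, chosen for illustration


def checksum(data: bytes) -> int:
    """XOR of all payload bytes (simple, not robust against all errors)."""
    result = 0
    for byte in data:
        result ^= byte
    return result


def encode_frame(payload: bytes) -> bytes:
    """Wrap a payload as: START_BYTE, length, payload, checksum."""
    if len(payload) > 255:
        raise ValueError("payload too long for a one-byte length field")
    header = bytes([START_BYTE, len(payload)])
    return header + payload + bytes([checksum(payload)])


def decode_frame(frame: bytes) -> bytes:
    """Validate a frame and return its payload, or raise ValueError."""
    if len(frame) < 3 or frame[0] != START_BYTE:
        raise ValueError("missing start byte")
    length = frame[1]
    if len(frame) != length + 3:
        raise ValueError("length mismatch")
    payload = frame[2:2 + length]
    if checksum(payload) != frame[-1]:
        raise ValueError("checksum mismatch")
    return payload


# Example: a made-up two-byte command (e.g. a mode ID and an intensity).
frame = encode_frame(bytes([0x01, 0x80]))
assert decode_frame(frame) == bytes([0x01, 0x80])
```

The same framing could run over whichever link is eventually chosen between the two boards, since it only deals with bytes. The checksum lets the receiver reject corrupted frames instead of acting on them.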

2. Is your progress on schedule or behind?

- My progress is on schedule, despite the NeoPixel power issue. The haptic motor testing was successful, and I’ve already ordered the part needed to resolve the NeoPixel issue. I’ll stay productive by focusing on the enclosure design and the Feather-Jetson communication setup while waiting for the part to arrive.

3. What deliverables do you hope to complete in the next week?

- Next week, I plan to finalize the 3D printed enclosure design and begin printing, ensuring the bracelet housing is ready for the final assembly.

- I also aim to complete the initial communication setup between the Feather and Jetson, so that data transmission and control commands can be integrated into the bracelet.

- Once the new part arrives, I’ll revisit the NeoPixel setup to confirm that the circuit operates smoothly with the correct voltage levels.