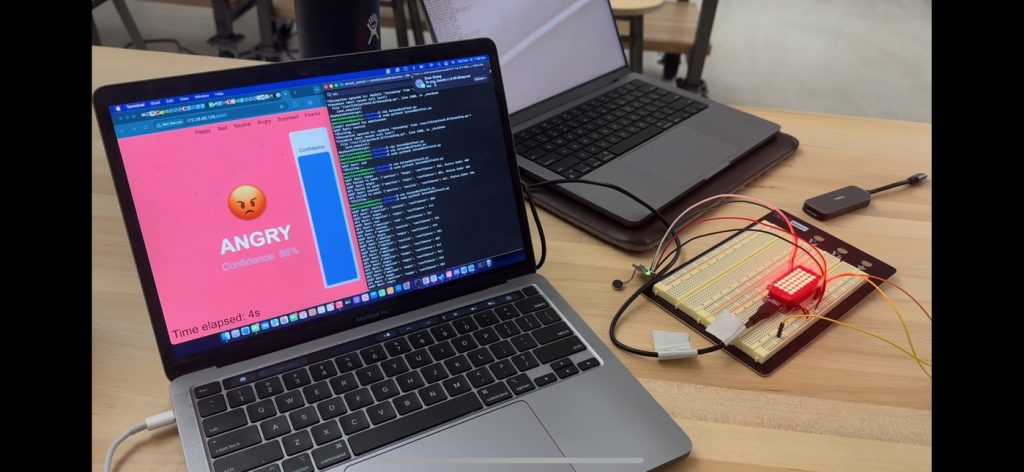

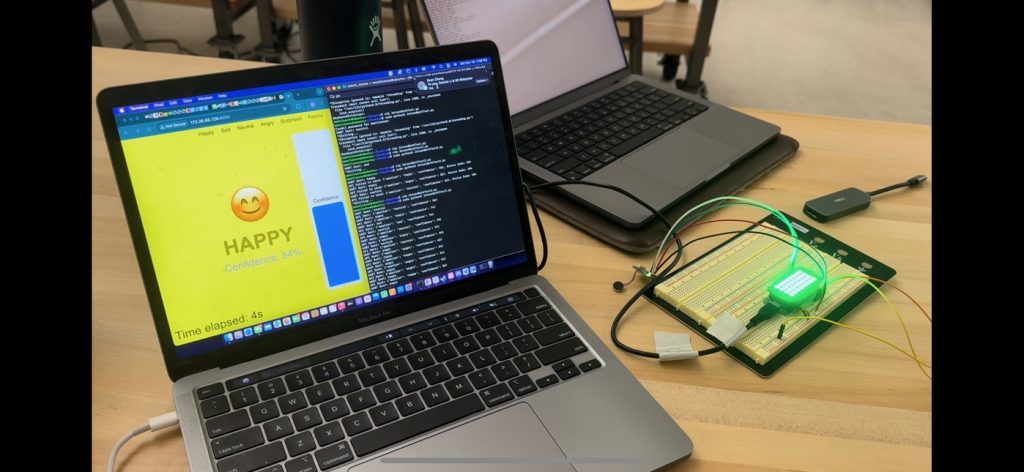

Since integration of our entire system went well, I spent the last week tidying things up and gathering user reviews. The following are some of the tasks I completed this past week and over the break:


- Added averaging of the detected emotions over the past 3 seconds

- Reduces frequent buzzing in the bracelet

- The reported emotion is much more stable and only changes when the model is sufficiently confident.

- Coordinated time with some friends to use our system and got user feedback

- Feedback tended to be pretty positive, with users recognizing that this is only a first iteration

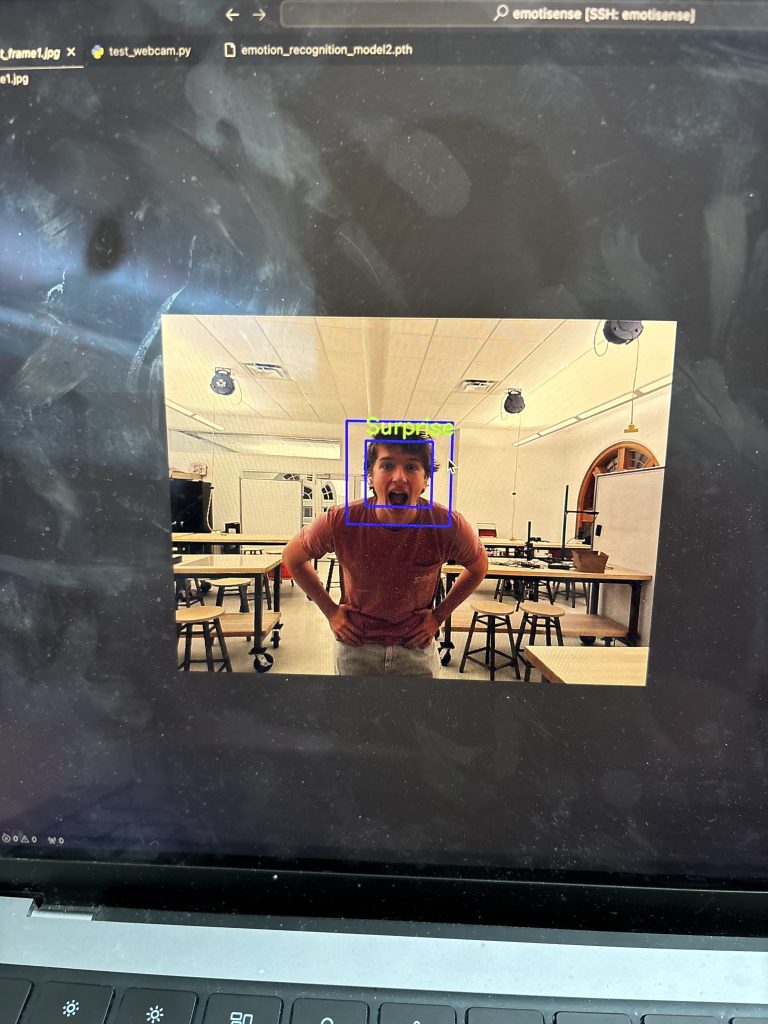

- Started working on the poster and taking pictures of our final system.

- Waiting on the bracelet’s completion, but it’s not a blocker.

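The averaging change described above can be sketched roughly as follows. This is a minimal illustration, not the actual project code: it assumes the classifier emits one dictionary of per-emotion probabilities per frame with a timestamp in seconds, and the class name `EmotionSmoother` and the `min_confidence` threshold of 0.6 are hypothetical. Only the 3-second window comes from the report.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class EmotionSmoother:
    """Hypothetical helper: averages per-frame emotion probabilities over a
    sliding time window and only switches the reported emotion when the
    averaged confidence is high enough."""

    def __init__(self, window_s: float = 3.0, min_confidence: float = 0.6) -> None:
        self.window_s = window_s              # averaging window (3 s, per the report)
        self.min_confidence = min_confidence  # assumed threshold; tune on real data
        self.samples: Deque[Tuple[float, Dict[str, float]]] = deque()
        self.current: Optional[str] = None    # emotion currently reported

    def update(self, t: float, probs: Dict[str, float]) -> Optional[str]:
        """Add one frame's probabilities at time t (seconds).
        Returns the new emotion if the reported emotion changed, else None."""
        self.samples.append((t, probs))
        # Drop samples that fall outside the averaging window.
        while self.samples and t - self.samples[0][0] > self.window_s:
            self.samples.popleft()
        # Sum each emotion's probability across the window.
        totals: Dict[str, float] = {}
        for _, p in self.samples:
            for emotion, value in p.items():
                totals[emotion] = totals.get(emotion, 0.0) + value
        if not totals:
            return None
        best, best_total = max(totals.items(), key=lambda kv: kv[1])
        avg = best_total / len(self.samples)
        # Only switch (which would trigger a bracelet buzz) when confident.
        if avg >= self.min_confidence and best != self.current:
            self.current = best
            return best
        return None


if __name__ == "__main__":
    smoother = EmotionSmoother()
    frames = [
        (0.0, {"happy": 0.9, "sad": 0.1}),
        (1.0, {"happy": 0.2, "sad": 0.8}),  # brief spike: averaged, no switch
        (5.0, {"happy": 0.1, "sad": 0.9}),  # older frames expired: switch
    ]
    for t, probs in frames:
        changed = smoother.update(t, probs)
        if changed is not None:
            print(f"t={t}: buzz for {changed}")
```

In this sketch a single noisy frame cannot flip the output on its own, and the bracelet would only be signaled when the returned value is not `None`, which is one way to get the reduced buzzing described above.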


I am done with my part of the project and will mainly be working on the reports and other deliverables going forward.

Goals for next week:

- Finish the poster, final report, and presentation!