Team Status Report for 12/7

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

- Bluetooth Low Energy (BLE) Reliability: While BLE integration is functional, the reliability of the communication in different environments and during prolonged use needs further validation.

- User Feedback Integration: Noah implemented averaging over 3 seconds to stabilize emotional feedback, and Mason introduced a Kalman filter to reduce noise in model output.

- Demo Room Setup: Mason has tested the Jetson with Ethernet in the demo room and confirmed functionality. As a contingency, offline operation using pre-loaded models and configurations is ready.

2. Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- Averaging for Emotion Feedback: Noah added a 3-second averaging mechanism to the model output to stabilize emotional transitions and reduce frequent buzzing in the bracelet.

- Kalman Filter Implementation: Mason integrated a Kalman filter into the system to reduce noise and unnecessary state transitions in the model’s output.

- BLE Integration via USB Dongle: Kapil and Mason discovered that the Jetson does not natively support Bluetooth, requiring the use of a USB Bluetooth adapter.
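The 3-second averaging described above can be pictured as a sliding time window over the model's per-emotion probabilities. The following is only an illustrative sketch, not the team's actual code: the class name `WindowedEmotionAverager`, the dictionary format of the model output, and the use of timestamps in seconds are all assumptions.

```python
from collections import deque


class WindowedEmotionAverager:
    """Average per-emotion probabilities over a sliding time window (hypothetical helper)."""

    def __init__(self, window_seconds=3.0):
        self.window_seconds = window_seconds
        self._samples = deque()  # entries are (timestamp, {emotion: probability})

    def add(self, timestamp, probabilities):
        """Record one model output and discard samples older than the window."""
        self._samples.append((timestamp, dict(probabilities)))
        while timestamp - self._samples[0][0] > self.window_seconds:
            self._samples.popleft()

    def current(self):
        """Return (label, mean probability) for the strongest emotion, or None if empty."""
        if not self._samples:
            return None
        totals = {}
        for _, probabilities in self._samples:
            for emotion, p in probabilities.items():
                totals[emotion] = totals.get(emotion, 0.0) + p
        label = max(totals, key=totals.get)
        return label, totals[label] / len(self._samples)


averager = WindowedEmotionAverager(window_seconds=3.0)
averager.add(0.0, {"happy": 0.9, "sad": 0.1})
averager.add(1.0, {"happy": 0.2, "sad": 0.8})  # a single noisy frame
averager.add(2.0, {"happy": 0.8, "sad": 0.2})
print(averager.current()[0])  # the one noisy frame does not flip the label
```

Because the bracelet would only react to the averaged label, a single misclassified frame inside the window should not trigger a new buzz.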

3. Provide an updated schedule if changes have occurred:

- The project is on track overall.

4. List all unit tests and overall system tests carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.

Unit Tests:

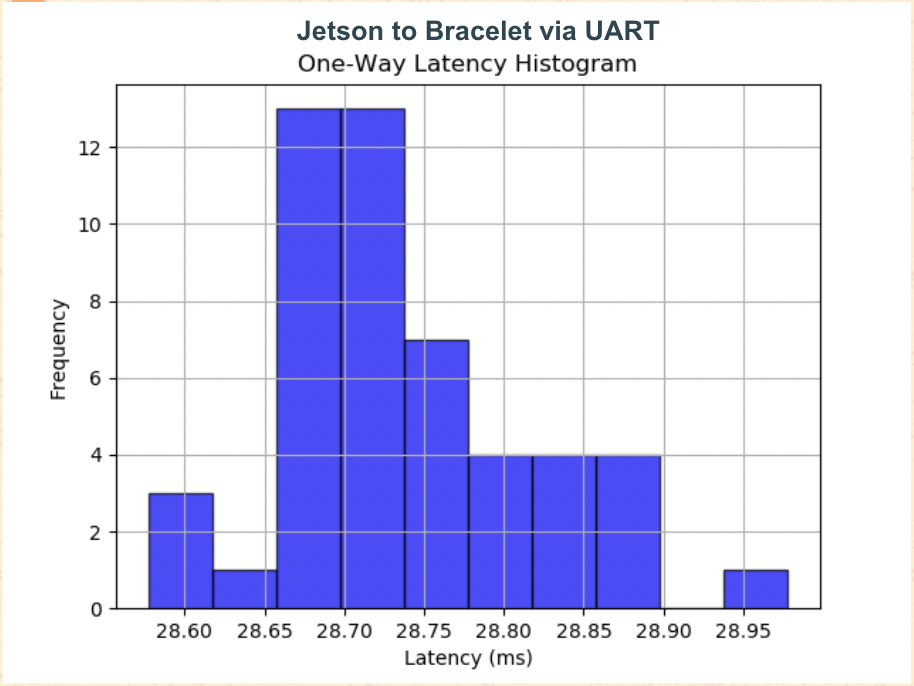

- BLE Communication Testing (Kapil): Tested the latency and stability of BLE communication between the Jetson and the Adafruit Feather. Verified that latency is within acceptable limits and that commands are transmitted accurately.

- Haptic Feedback Regulation (Noah): Added averaging for emotional feedback, stabilizing the output and reducing frequent buzzing. Verified that emotional transitions occur only when the model is confident.

- Kalman Filter Integration (Mason): Tested the Kalman filter on the model’s output to reduce noise. Found that it significantly improved stability in feedback transitions but requires further tuning for video input.
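To make the Kalman filtering test above more concrete, here is a minimal one-dimensional Kalman filter applied to a single confidence score. It is a sketch under stated assumptions rather than Mason's implementation: the constant-state model, the class name `ScalarKalmanFilter`, and the variance values are all illustrative choices that would need tuning.

```python
class ScalarKalmanFilter:
    """One-dimensional Kalman filter with a constant-state model (illustrative only)."""

    def __init__(self, process_variance=1e-3, measurement_variance=0.05,
                 initial_estimate=0.0, initial_error=1.0):
        self.q = process_variance      # how much the true value may drift per step
        self.r = measurement_variance  # how noisy each model output is assumed to be
        self.x = initial_estimate      # current state estimate
        self.p = initial_error         # variance of the current estimate

    def update(self, measurement):
        # Predict: the state is assumed constant, so only the uncertainty grows.
        self.p += self.q
        # Correct: blend the prediction and the measurement using the Kalman gain.
        gain = self.p / (self.p + self.r)
        self.x += gain * (measurement - self.x)
        self.p *= 1.0 - gain
        return self.x


kf = ScalarKalmanFilter()
for score in [0.82, 0.78, 0.81, 0.79, 0.10, 0.80]:  # 0.10 is a spurious reading
    print(round(kf.update(score), 3))
```

A larger `measurement_variance` makes the filter trust each new reading less, which smooths more but reacts more slowly. That trade-off is the kind of tuning the video input would need.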

Overall System Tests:

- User Testing: Conducted user tests with three participants to assess the usability and practicality of the system. Feedback was positive, noting that the system is intuitive and functional, with suggestions for minor improvements in feedback timing and UI clarity.

Findings and Design Changes:

- Feedback Stabilization: Averaging and Kalman filtering significantly improved feedback quality, reducing noise and unnecessary transitions. These features will remain in the final design.

- BLE Integration: The USB dongle resolved the Bluetooth issue, enabling seamless communication without major redesigns or delays.

Team Status Report 11/30

- What are the most significant risks that could jeopardize the success of the project?

- BLE Integration: Transitioning from UART to BLE has been challenging. UART meets our latency requirements and works well for now, but our BLE implementation has had trouble maintaining a stable connection. Finishing this in time for the demo is critical to delivering the complete system.

- Limited User Testing: Due to time constraints, the scope of user testing has been narrower than anticipated. This limits the amount of user feedback we can incorporate before the final demo. However, we are fairly satisfied with the system given the feedback we have received so far. The bracelet will be a major component of this though.

- Were any changes made to the existing design of the system?

- We planned to add a Kalman filter to smooth the emotion detection output and improve the reliability of the confidence score. This will reduce the impact of noise and fluctuations in the model’s predictions. If this does not work, we will instead use a rolling average.

- Updated the web interface to indicate when no face is detected, improving user experience and accuracy of the output.

- Updated schedule if changes have occurred:

We remain on schedule outside of bracelet delays and have addressed challenges effectively to ensure a successful demo. Final user testing and BLE integration are prioritized for the upcoming week.

Testing results:

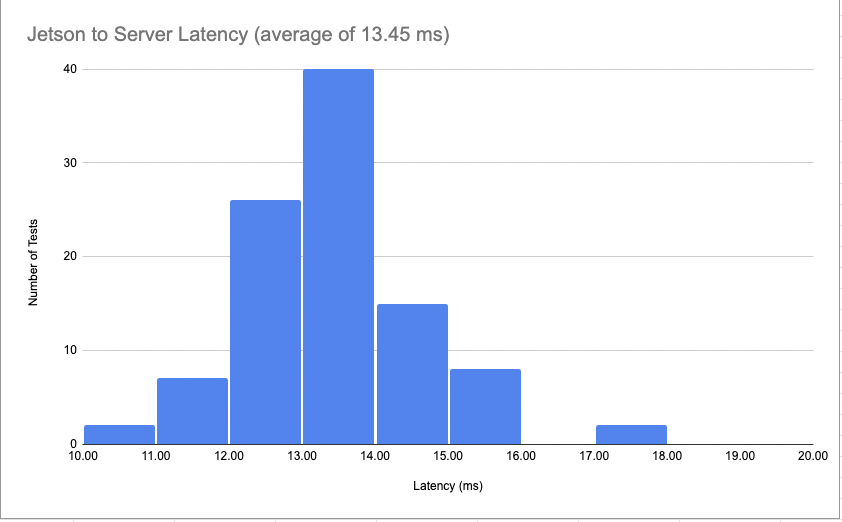

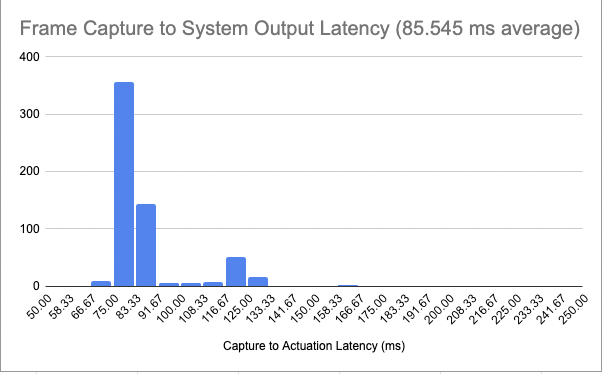

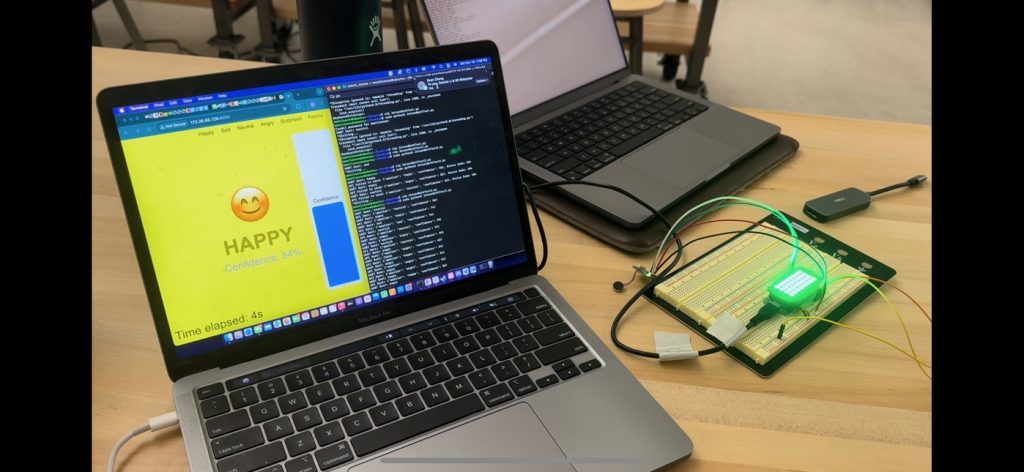

Model output from Jetson to both Adafruit and Webserver:

Team’s Status Report 11/16


1. What are the most significant risks that could jeopardize the success of the project?

- Jetson Latency: Once the model hit our accuracy threshold of 70%, we had planned to keep improving it as time allowed. However, during system integration we found that the Jetson cannot handle a larger model due to latency concerns. This is acceptable, but it does limit the potential of our project while running on this Jetson model.

- Campus network connectivity: We identified that the Adafruit Feather connects reliably on most WiFi networks but encounters issues on CMU’s network due to its security. We still need to tackle this issue, as we are currently relying on a wired connection between the Jetson and bracelet.

2. Were any changes made to the existing design of the system?

- We have decided to remove surprise and disgust from the emotion detection model, as replicating a disgusted or surprised face has proven to be too much of a challenge. We had considered this at the beginning of the project, given that these two emotions were the least important; however, it was only with user testing that we recognized that they are too inaccurate.

3. Provide an updated schedule if changes have occurred:

We remain on schedule and have overcome the challenges so far, leaving us ready for a successful demo.

Documentation:

- We don’t have pictures of our full system running; however, this can be seen in our demos this week. We will include pictures next week when we have cleaned up the system and integration.

Model output coming from Jetson to both Adafruit and Webserver:

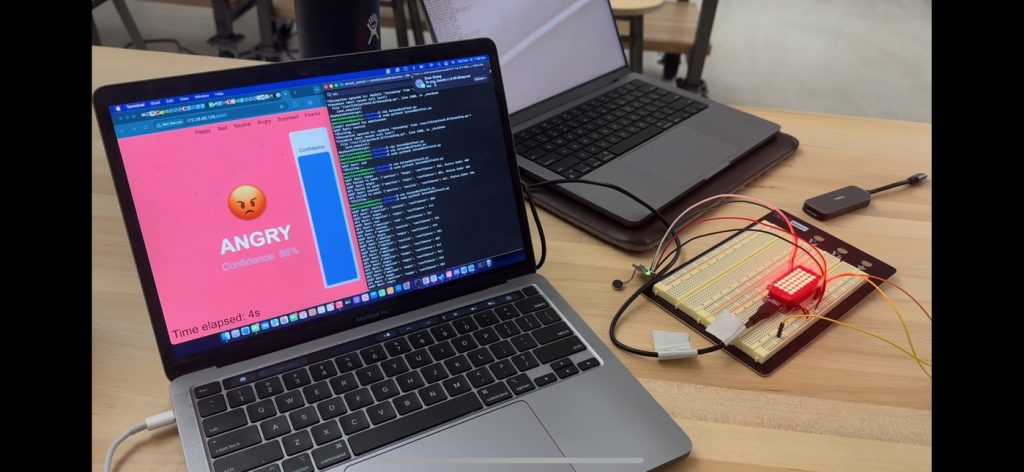

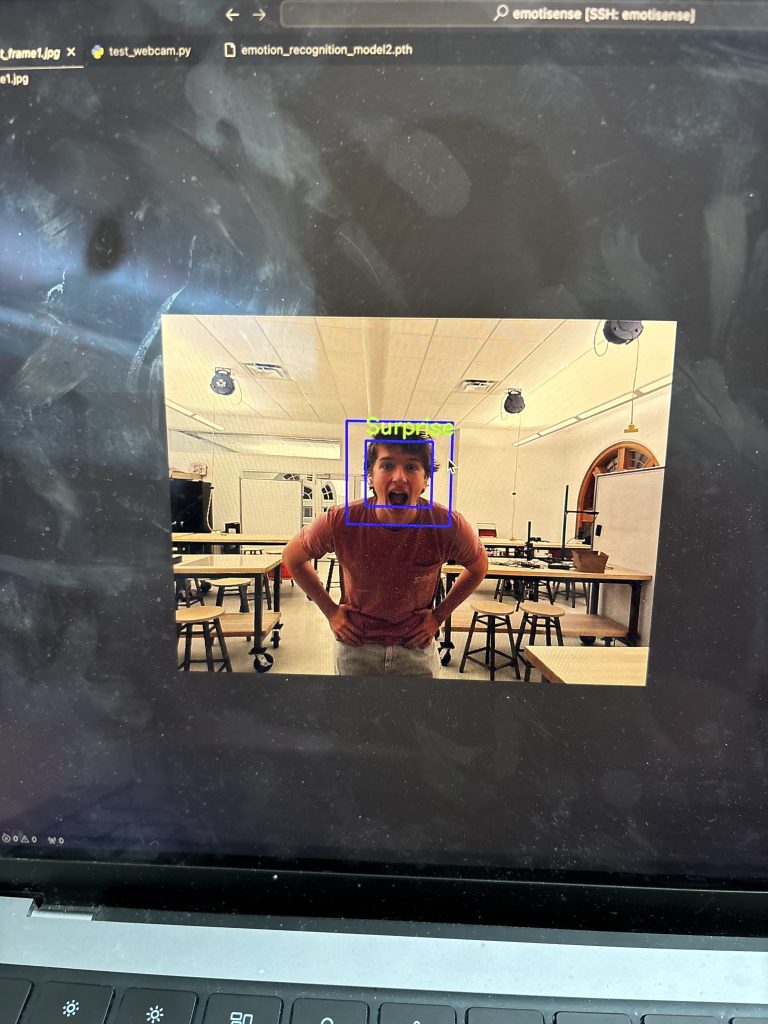

Model output running on Jetson (shown via SSH):

Team’s Status Report 11/9


1. What are the most significant risks that could jeopardize the success of the project?

- Model Accuracy: While the model achieved 70% accuracy, meeting our threshold, real-time performance with facial recognition is inconsistent. It may require additional training data to improve reliability with variable lighting conditions.

- Campus network connectivity: We identified that the Adafruit Feather connects reliably on most WiFi networks but encounters issues on CMU’s network due to its security. We will need to tackle this in order to get the Jetson and Adafruit communication working.

2. Were any changes made to the existing design of the system?

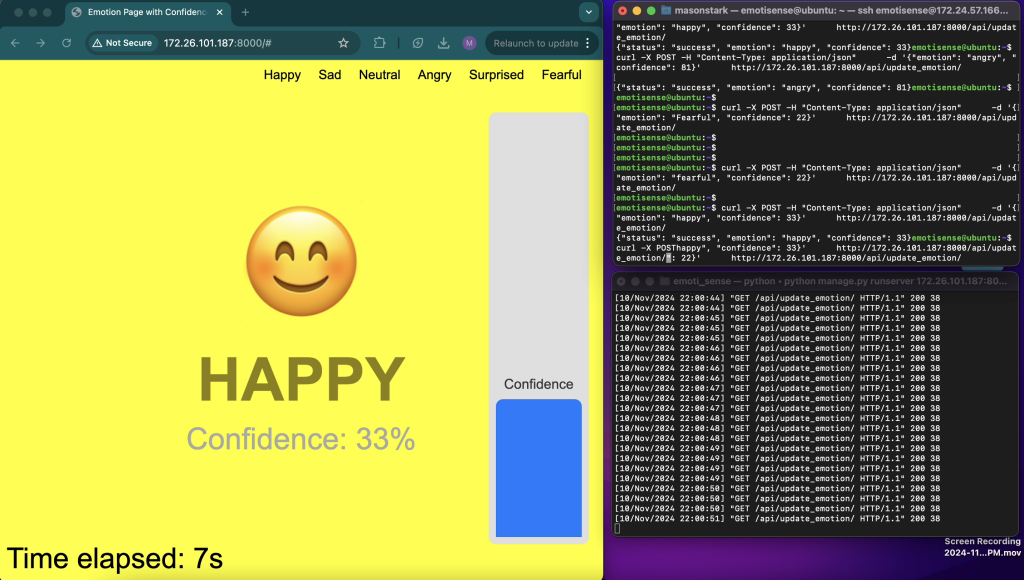

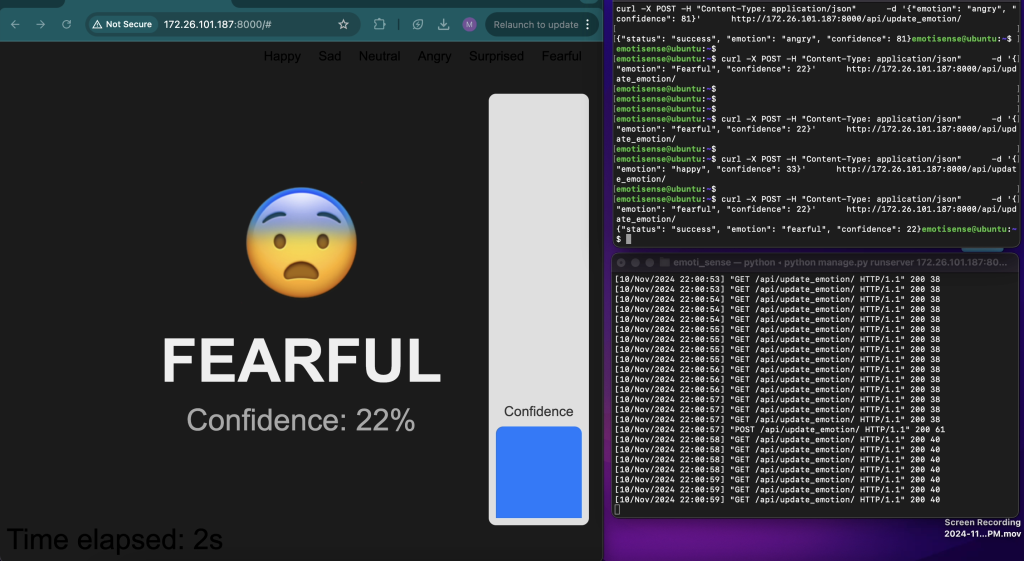

- AJAX Polling and API inclusion for Real-time Updates: We implemented AJAX polling on the website, allowing for continuous updates from the Jetson API. This feature significantly enhances user experience by dynamically displaying real-time data on the website interface.

- Jetson Ethernet: We have decided to use Ethernet to connect the Jetson to the CMU network.
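The polling pattern behind the real-time updates above can be sketched as follows. On the website this would be done in browser JavaScript; the Python version below only illustrates the idea of repeatedly fetching from the Jetson API and reacting when the payload changes. The function names, the JSON payload shape, and the polling interval are assumptions, not details of the team's API.

```python
import json
import time
import urllib.request


def fetch_json(url, timeout=2.0):
    """Fetch and decode a JSON document from the given URL."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def poll(fetch, on_update, interval_seconds=1.0, max_polls=None, sleep=time.sleep):
    """Call fetch() repeatedly and invoke on_update only when the payload changes."""
    last = None
    count = 0
    while max_polls is None or count < max_polls:
        try:
            payload = fetch()
        except OSError:
            payload = None  # network error: keep the last known state and retry
        if payload is not None and payload != last:
            on_update(payload)
            last = payload
        count += 1
        sleep(interval_seconds)


# Simulated API responses so the example runs without a network connection.
responses = iter([{"emotion": "happy"}, {"emotion": "happy"}, {"emotion": "sad"}])
poll(lambda: next(responses), print, interval_seconds=0.0, max_polls=3)
```

In a real deployment, `fetch` would wrap `fetch_json` with the Jetson endpoint's URL, which is not given in this post. Polling is simple to implement, at the cost of up to one interval of delay before the page reflects a new emotion.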

3. Provide an updated schedule if changes have occurred:

The team is close to being on schedule, though some areas require focused attention this week to stay on track for the upcoming demo. We need to get everything up and running in order to transition fully to testing and enhancement following the demo.

Photos and Documentation:

- Jetson-to-website integration showcasing successful data transmission and dynamic updates. emotisense@ubuntu is the SSH session on the Jetson. The top-right terminal is the Jetson; the left and bottom-right panes are the website UI and request history, respectively. I also have a video of a test file running on the Jetson, but embedded video doesn’t work for this post.

Team’s Status Report 11/2

1. What are the most significant risks that could jeopardize the success of the project?

- Power and Component Integration: For the embedded bracelet, we identified a power issue with the NeoPixel which caused unexpected behavior. Although we ordered a part to address this, any further power discrepancies could delay testing.

- Integration with Jetson: We have not received our NVIDIA Jetson yet and still need to complete integration between the three subsystems.

2. Were any changes made to the existing design of the system?

- Power Adjustment for NeoPixel: To resolve the NeoPixel’s voltage requirements, we ordered a new component, modifying the power design for stable operation.

- Real-time API and UI Enhancements: We designed a REST API for real-time data transmission and updates, which adds a dynamic element to the website interface. Upcoming UI enhancements, including a timer and confidence bar, will improve the usability and clarity of the feedback display.

3. Provide an updated schedule if changes have occurred:

- The team remains on schedule with component integration. Kapil will continue with the 3D printed enclosure and communication setup while awaiting the NeoPixel component. Noah will transition to Jetson-based model testing and optimization. Mason’s API testing and UI improvements will align with the next integration phase, ensuring that system components function cohesively.

Photos and Documentation:

- Initial bracelet circuit, highlighting successful haptic motor tests and NeoPixel power issues.

- Images of the real-time labeling function, which demonstrates the model’s current performance and areas needing improvement.

Team Status Report for 10/20

Part A: Global Factors by Mason

The EmotiSense bracelet is developed to help neurodivergent individuals, especially those with autism, better recognize and respond to emotional signals. Autism affects people across the globe, creating challenges in social interactions that are not bound by geographic or cultural lines. The bracelet’s real-time feedback, through simple visual and haptic signals, supports users in understanding the emotions of those they interact with. This tool is particularly valuable because it translates complex emotional cues into clear, intuitive signals, making social interactions more accessible for users.

EmotiSense is designed with global accessibility in mind. It uses components like the Adafruit Feather microcontroller and programming environments such as Python and OpenCV, which are globally available and widely supported. This ensures that the technology can be implemented and maintained anywhere in the world, including in places with limited access to specialized educational resources or psychological support. By improving emotional communication, EmotiSense aims to enhance everyday social interactions for neurodivergent individuals, fostering greater inclusion and improving life quality across diverse communities.

Part B: Cultural Factors by Kapil

Across cultures, emotions are expressed and, more importantly, interpreted in different ways. For instance, in some cultures, emotional expressions are more subdued, while in others, they are more pronounced. Despite this, we want EmotiSense to recognize emotions without introducing biases based on cultural differences. To achieve this, we are designing the machine learning model to focus on universal emotional cues, rather than specific cultural markers.

One approach we are taking to ensure that the model does not learn differences in emotions across cultures or races is by converting RGB images to grayscale. This eliminates any potential for the model to detect skin tone or other race-related features, which could introduce unintended biases in emotion recognition. By focusing purely on the structural and movement-based aspects of facial expressions, EmotiSense remains a culturally neutral tool that enhances emotional understanding while preventing the reinforcement of stereotypes or cultural biases.
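As a small illustration of the grayscale step, the sketch below converts RGB pixels to a single luminance channel using the common 0.299/0.587/0.114 weighting. It is written in plain Python for clarity; the function name and the nested-list image format are assumptions, and in practice the conversion would more likely be done with a library call such as OpenCV's `cv2.cvtColor`.

```python
def rgb_to_grayscale(image):
    """Convert rows of (r, g, b) pixels (0-255) into rows of luminance values (0-255)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


# A 2x2 test image: white, black, pure red, and pure green pixels.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 255, 0)],
]
print(rgb_to_grayscale(image))
```

After this step the model receives one intensity value per pixel instead of three color channels.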

Another way to prevent cultural or racial biases in the EmotiSense emotion recognition model is by ensuring that the training dataset is diverse and well-balanced across different cultural, ethnic, and racial groups. This way we can reduce the likelihood that the model will learn or favor emotional expressions from a specific group.

Part C: Environmental Factors by Noah

While environmental factors aren’t a primary concern in our design of EmotiSense, ensuring that we design a product that is both energy-efficient and sustainable is crucial. Since our system involves real-time emotion recognition, the bracelet and the Jetson need to run efficiently for extended periods without excessive energy consumption. We’re focusing on optimizing battery life to ensure that the bracelet can last for at least four hours of continuous use. To complete this task while maintaining a lightweight bracelet, the design naturally requires us to maintain energy efficiency.

Additionally, a primary concern is ensuring that our machine-learning model does not use excessive energy for its predictions. By keeping the model lightweight and running it on a fairly efficient machine, the Nvidia Jetson, we minimize our reliance on lengthy computation.

Team Status Report for 10/5

1. What are the most significant risks that could jeopardize the success of the project?

- Facial Recognition and Image Preprocessing: Noah is working on developing the facial recognition and image preprocessing components from scratch, which presents a risk if model performance doesn’t meet expectations or integration issues arise with Jetson or other hardware. However, Noah is mitigating this by researching existing models (RFB-320 and VGG13) and refining preprocessing techniques.

- Website Deployment and User Experience Testing: Mason’s deployment of the website is slightly delayed due to a busy week. If this stretches out, it could impact our ability to conduct timely user experience testing, but Mason plans to have it completed before the break to refocus on the UI and Jetson integration.

- Component Delivery Delays: There is a risk that delays in ordering and receiving key hardware components (such as the microcontroller and potentially a new camera for Noah’s work) could affect the project timeline. We are mitigating this by ensuring orders are placed promptly and making use of simulation tools or placeholder hardware in the meantime.

2. Were any changes made to the existing design of the system?

- Haptic Feedback System: Based on feedback from our design presentation, we decided to move away from binary on/off vibration feedback in the bracelet. Instead, the haptic feedback will now dynamically adjust its intensity. This required the addition of a microcontroller, but it improves the overall functionality of the bracelet.

- Facial Recognition Model: Noah is creating a custom facial recognition model instead of using a pre-built model, as our project goals shifted to developing this from scratch. This adjustment will give us more flexibility and control over the system’s performance, but also adds additional development time.

- Website User System and Database: Mason has made progress on the user system and basic UI elements but is slightly behind due to other commitments. No structural changes have been made to the overall website design, and deployment is still on track.
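Returning to the haptic feedback change above, one way to picture intensity that adjusts dynamically is a mapping from a score to a motor duty cycle. This post does not say what drives the intensity, so the sketch below assumes it is the model's confidence. The threshold, the minimum and maximum duty values, the 16-bit PWM range, and both function names are hypothetical.

```python
def confidence_to_duty_cycle(confidence, threshold=0.5, min_duty=0.3, max_duty=1.0):
    """Map a confidence in [0, 1] to a motor duty cycle in [0, 1].

    Below the threshold the motor stays off. Above it, intensity scales
    linearly from min_duty up to max_duty.
    """
    confidence = max(0.0, min(1.0, confidence))  # clamp out-of-range inputs
    if confidence < threshold:
        return 0.0
    span = (confidence - threshold) / (1.0 - threshold)
    return min_duty + span * (max_duty - min_duty)


def duty_cycle_to_pwm(duty, resolution_bits=16):
    """Convert a duty cycle in [0, 1] to an integer PWM value (16-bit range assumed)."""
    return round(duty * (2 ** resolution_bits - 1))


for confidence in (0.4, 0.5, 0.75, 1.0):
    duty = confidence_to_duty_cycle(confidence)
    print(confidence, round(duty, 2), duty_cycle_to_pwm(duty))
```

The non-zero `min_duty` reflects the assumption that a vibration motor needs some minimum drive before the wearer can feel it.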

3. Provide an updated schedule if changes have occurred:

- Bracelet Component and PCB Changes: The decision to remove the PCB from the bracelet and instead use a 3D printed enclosure has been made. This simplifies the next steps and focuses more on the mechanical assembly of the bracelet.

- Website Deployment: Mason’s deployment of the web app is scheduled to be completed before the October break, and the UI/Jetson configuration work will continue afterward.

Photos and Documentation:

- The team is awaiting final feedback from TA and faculty on the circuit design for the bracelet before ordering components.

- Noah’s bounding box and preprocessing work on facial recognition will need further refinement, but initial results are available for review.

Team Status Report for 9/28

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this stage is developing an emotion recognition model that is accurate enough to be useful. Top-of-the-line models currently are nearing the 80% accuracy rate which is somewhat low. Coming within reach of this metric will be crucial to ensuring our use cases are met. This has become a larger concern now that we will be making our own, custom model. This risk is being managed by ensuring that we have backup, pre-existing models that can be averaged with our model in case our base accuracy is too low.
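The fallback of averaging our model with pre-existing models could look roughly like the sketch below, which combines per-emotion probabilities from several models into one prediction. The function names, the dictionary output format, and the optional weights are assumptions for illustration; no specific backup model is implied.

```python
def average_predictions(predictions, weights=None):
    """Combine several {emotion: probability} outputs into one weighted average."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    if weights is None:
        weights = [1.0] * len(predictions)
    total_weight = sum(weights)
    labels = set().union(*predictions)  # every emotion seen by any model
    return {
        label: sum(w * p.get(label, 0.0) for w, p in zip(weights, predictions)) / total_weight
        for label in labels
    }


def top_label(probabilities):
    """Return the emotion with the highest combined probability."""
    return max(probabilities, key=probabilities.get)


custom_model = {"happy": 0.6, "sad": 0.4}   # hypothetical output of our model
backup_model = {"happy": 0.2, "sad": 0.8}   # hypothetical output of a backup model
combined = average_predictions([custom_model, backup_model])
print(top_label(combined))
```

Weights let a more trusted model count for more, for example `weights=[1.0, 2.0]` if the backup model proved more accurate in testing.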

Additionally, user testing has become a concern for us, as we do not want to trigger the need for review by the IRB. This prevents us from doing user testing targeted at autistic individuals; however, we can still conduct testing with the general population.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The main change was cited above. We will be making our own computer vision and emotion recognition model. The primary goal is to show our prowess in computer vision without leveraging too many existing components. This constrains Noah’s time somewhat; however, Mason will be picking up the camera and website integration so that Noah can fully focus on the model.

3. Provide an updated schedule if changes have occurred.

No Schedule changes at this time.

4. This is also the place to put some photos of your progress or to brag about a component you got working.

The physical updates are included in our design proposal.

Additionally, here is the dataset we will use to train the first version of the computer vision model (https://www.kaggle.com/datasets/jonathanoheix/face-expression-recognition-dataset).

Part A (Noah Champagne):

Because the intended audience of EmotiSense is a particularly marginalized group of people, it is our utmost concern that the product delivers true and accurate readings of emotions. We know that EmotiSense can greatly increase neurodivergent people’s ability to engage socially, and we want to help facilitate that benefit to the best of our abilities. But we care deeply about maintaining the safety of our users and have duly considered that a false reading of a situation could discourage them or bring them harm. As such, we have endeavored to create a system that only offers emotional readings once a very high confidence level has been reached. This will help protect the well-being of those using the product, who can then rely on our system with confidence.

Additionally, our product serves to increase the welfare of a marginalized group who suffers to a larger extent than the general population. Providing them with this product helps to close the gap in conversational understanding between those with neurodivergence and those without it. This will help to provide this group with more equity in terms of welfare.

Part B (Kapil Krishna):

EmotiSense is highly sensitive to the needs of neurodivergent communities. We want to emphasize the importance of being sensitive to the way neurodivergent communities interact and support one another. The design of EmotiSense aims to respect this and simply enhance self-awareness and social interactions, not combat neurodivergence itself. Additionally, EmotiSense aims to support those who interact with neurodivergent individuals by providing a tool and strategy that facilitates better communication without compromising autonomy. Lastly, we observe that there has been increasing advocacy for technology to empower those with disabilities. EmotiSense aims to align with this trend by reducing social barriers and creating more inclusivity and awareness. With regard to economic organizations, we notice that affordability and availability of the device are key to creating the most impact possible.

Part C (Mason Stark):

Although our primary aims fit better into the previous two categories, EmotiSense can still serve significant use cases for economic endeavors. One such use case is customer satisfaction. Many businesses try to get customer satisfaction data by polling customers online, or even through physical customer satisfaction polling systems (think of those boxes with frown and smile faces in airport bathrooms). However, these systems are oftentimes underutilized and subject to significant sampling biases. Being able to get the emotions of customers in real time could better assist businesses in uncovering accurate satisfaction data. When businesses have access to accurate customer satisfaction data, they can leverage that data to improve their business functionality and profitability. Some specific businesses where EmotiSense could be deployed include “order at the counter” restaurants, banks, and customer service desks.

Team Status Report for 9/21

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Primary risks include model accuracy, environment control, and speed/user experience. We plan to manage accuracy by experimenting with a few models and by using a composition of models if needed. Environment control is something that we will be working on when prototyping the hardware and our physical system. We will make a protocol for keeping the environment consistent. User experience and speed will be monitored as we get into the later stages of development.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes at this moment.

3. Provide an updated schedule if changes have occurred.

No Schedule changes at this time.

4. This is also the place to put some photos of your progress or to brag about a component you got working.

We don’t have many physical updates at this time but they certainly will be included in the next report. The majority of our work this week surrounded prep for and rehearsing our presentation.