Noah’s Status Report for 12/7

Given that integration into our entire system went well, I spent the last week tidying things up and getting some user reviews. The following details some of the tasks I did this past week and over the break:


- Added averaging of the detected emotions over the past 3 seconds

- This reduces frequent buzzing in the bracelet.

- The displayed emotion is much more stable and only changes when the model is sufficiently confident.

- Coordinated time with some friends to use our system and got user feedback

- Feedback tended to be pretty positive, with users recognizing that this is only a first iteration.

- Started working on the poster and taking pictures of our final system.

- Waiting on the bracelet’s completion, but it’s not a blocker.

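The 3-second averaging described above could look roughly like the sketch below. It is a minimal illustration, not the project's code. It assumes that the model emits one softmax probability per emotion for each frame and that each frame carries a timestamp in seconds. The class name `EmotionSmoother` and the four labels are hypothetical.

```python
from collections import deque


class EmotionSmoother:
    """Average per-frame emotion probabilities over a trailing time window.

    Hypothetical helper: only the 3-second window comes from the report; the
    class name, labels, and probability format are assumptions.
    """

    def __init__(self, labels, window_s=3.0):
        self.labels = list(labels)
        self.window_s = window_s
        self._history = deque()  # (timestamp, probabilities) pairs, oldest first

    def update(self, timestamp, probs):
        """Add one frame's probabilities and return the windowed average."""
        if len(probs) != len(self.labels):
            raise ValueError("expected one probability per label")
        self._history.append((timestamp, list(probs)))
        # Drop frames that are older than the trailing window.
        while self._history and timestamp - self._history[0][0] > self.window_s:
            self._history.popleft()
        n = len(self._history)
        return [sum(p[i] for _, p in self._history) / n for i in range(len(self.labels))]

    def top(self, timestamp, probs):
        """Return (label, averaged probability) for the most likely emotion."""
        avg = self.update(timestamp, probs)
        best = max(range(len(avg)), key=avg.__getitem__)
        return self.labels[best], avg[best]


smoother = EmotionSmoother(["happy", "sad", "angry", "neutral"])
smoother.update(0.0, [0.9, 0.1, 0.0, 0.0])
smoother.update(1.0, [0.1, 0.9, 0.0, 0.0])  # one noisy "sad" frame
label, confidence = smoother.top(2.0, [0.8, 0.2, 0.0, 0.0])  # "happy" wins on average
```

Averaging probabilities rather than per-frame labels lets the neighboring frames outvote a single noisy frame. This is one plausible way to stop the bracelet from buzzing on every flicker.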

List all unit tests and overall system tests carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.


I am done with my part of the project and will mainly be working on the poster, final report, and presentation going forward.

Goals for next week:

- Finish the poster, final report, and presentation!

Noah’s Status Report for 11/30

Given that integration into our entire system went well, I spent the last week tidying things up and getting some user reviews. The following details some of the tasks I did this past week and over the break:

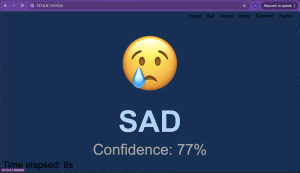

- We decided to reduce the emotion count from 6 to 4. This was because surprise and disgust had much lower accuracy than the other emotions. Additionally, replicating and presenting a disgusted or surprised face is pretty difficult and these faces come up pretty rarely over the course of a typical conversation.

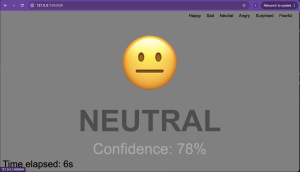

- Increased the confidence threshold required to present an emotion.

- Assume a neutral state if not confident in the emotion detected.

- Added a function that outputs a new state indicating whether a face is even present within the bounds of the frame.

- Updates the website to indicate when no face is found.

- Also helps for debugging purposes.


- Did some more research on how to move our model to ONNX & TensorFlow, which might speed up computation and allow us to load a more complex model.

- During the break, I spoke to a few of my autistic brother’s teachers from my high school about what they thought of the project and what could be better.

- Overall, they really liked and appreciated the project and liked the easy integration with the iPads already present in the school.
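The stricter threshold, the neutral fallback, and the no-face state can be combined into one decision step per frame. The sketch below makes two assumptions: the four remaining labels and a threshold of 0.6. The report states neither value, and `classify_frame` is a hypothetical name.

```python
# Hypothetical label set: the report says surprise and disgust were dropped
# but does not list the four emotions that remain.
EMOTIONS = ("happy", "sad", "angry", "neutral")
CONFIDENCE_THRESHOLD = 0.6  # assumed value; the report does not state it


def classify_frame(probs, face_found, threshold=CONFIDENCE_THRESHOLD):
    """Map the model's probabilities for one frame to the state shown to the user."""
    if not face_found:
        return "no_face"  # lets the website report that no face is in frame
    if len(probs) != len(EMOTIONS):
        raise ValueError("expected one probability per emotion")
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "neutral"  # not confident enough, so fall back to neutral
    return EMOTIONS[best]


state = classify_frame([0.4, 0.3, 0.2, 0.1], face_found=True)  # "neutral"
```

Checking for a face before looking at the probabilities keeps "no face" distinct from "unsure", which also helps with debugging. In practice, `probs` would more likely be the averaged output over recent frames than a single frame's output.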

I am ahead of schedule, and my part of the project is done for the most part. I’ve hit MVP and will continue making slight improvements where I see fit.

Goals for next week:

- Add averaging of the detected emotions over the past 3 seconds which should increase the confidence in our predictions.

- Keep looking into exporting the CV model to ONNX, a portable model format that Nvidia’s TensorRT can optimize for the Jetson, which would lower latency and allow us to use our slightly better model.

- Help Mason conduct some more user tests and get feedback so that we can make small changes.

- Writing the final report and getting ready for the final presentation.

Additional Question: What new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

- The main tools I needed to learn for this project were PyTorch, CUDA, ONNX, and the Jetson.

- I already had a lot of experience in PyTorch; however, each new model I make teaches me new things. For emotion recognition models, I watched a few YouTube videos to get a sense of how existing models work.

- Additionally, I read a few research papers to get a sense of what top-of-the-line models are doing.

- For CUDA and the Jetson, I relied mostly on the existing Nvidia and Jetson documentation, as well as ChatGPT, to help me pick compatible software.

- Public forums are also super helpful here.

- Learning ONNX has been much more difficult, and I’ve mainly looked at the existing documentation. I imagine that ChatGPT and YouTube might be pretty helpful here going forward.


Team’s Status Report 11/16


1. What are the most significant risks that could jeopardize the success of the project?

- Jetson Latency: Once the model hit our accuracy threshold of 70%, we had planned to keep improving it as time allowed. However, upon system integration, we recognized that the Jetson cannot run a larger model within our latency requirements. This is acceptable; however, it does limit the potential of our project while running on this Jetson model.

- Campus network connectivity: We identified that the Adafruit Feather connects reliably on most WiFi networks but encounters issues on CMU’s network due to its security requirements. We still need to tackle this issue, as we are currently relying on a wired connection between the Jetson and the bracelet.
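One way to put a number on the latency concern is to time the forward pass on the Jetson directly. The helper below is a generic sketch, and `measure_latency` and the stand-in `infer` callable are hypothetical. For a PyTorch model on CUDA, kernels launch asynchronously, so a real measurement would call `torch.cuda.synchronize()` inside `infer` before it returns. Without that call, the timer would stop before the GPU finishes.

```python
import statistics
import time


def measure_latency(infer, frame, warmup=5, runs=50):
    """Time repeated calls to `infer(frame)` and summarize per-frame latency.

    `infer` is a hypothetical stand-in for the model's forward pass.
    """
    for _ in range(warmup):  # let lazy initialization and caches settle first
        infer(frame)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        samples.append((time.perf_counter() - start) * 1000.0)  # milliseconds
    samples.sort()
    mean_ms = statistics.fmean(samples)
    return {
        "mean_ms": mean_ms,
        "p95_ms": samples[int(0.95 * (len(samples) - 1))],  # approximate 95th percentile
        "max_fps": 1000.0 / mean_ms,  # upper bound on frames per second for this step
    }


stats = measure_latency(lambda frame: time.sleep(0.002), frame=None, warmup=1, runs=10)
```

Comparing `max_fps` for the current model and a larger candidate would show directly whether the larger model still fits the real-time budget.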

2. Were any changes made to the existing design of the system?

- We have decided to remove surprise and disgust from the emotion detection model, as replicating a disgusted or surprised face has proven to be too much of a challenge. We had considered this at the beginning of the project, given that these were the 2 least important emotions; however, it was only through user testing that we recognized how inaccurate they are.
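Dropping two classes also means relabeling the training data so that the remaining classes have contiguous indices. The sketch below assumes a six-class label list, which is hypothetical because the report does not name the original six emotions. It also assumes that samples are `(image, label_index)` pairs.

```python
# Hypothetical original label order; the report does not list the six emotions.
ORIGINAL_CLASSES = ["angry", "disgust", "happy", "sad", "surprise", "neutral"]


def build_label_remap(class_names, dropped):
    """Return the kept class names and a map from old index to new contiguous index."""
    kept, remap = [], {}
    for old_index, name in enumerate(class_names):
        if name not in dropped:
            remap[old_index] = len(kept)
            kept.append(name)
    return kept, remap


def filter_samples(samples, remap):
    """Keep (image, label) pairs whose class survives, relabeled to the new indices."""
    return [(image, remap[label]) for image, label in samples if label in remap]


kept, remap = build_label_remap(ORIGINAL_CLASSES, {"surprise", "disgust"})
```

After relabeling, the classifier's final layer would need four outputs instead of six before retraining.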

3. Provide an updated schedule if changes have occurred:

We remain on schedule and were able to overcome our challenges, so we are ready for a successful demo.

Documentation:

- We don’t have pictures of our full system running; however, this can be seen in our demos this week. We will include pictures next week when we have cleaned up the system and integration.

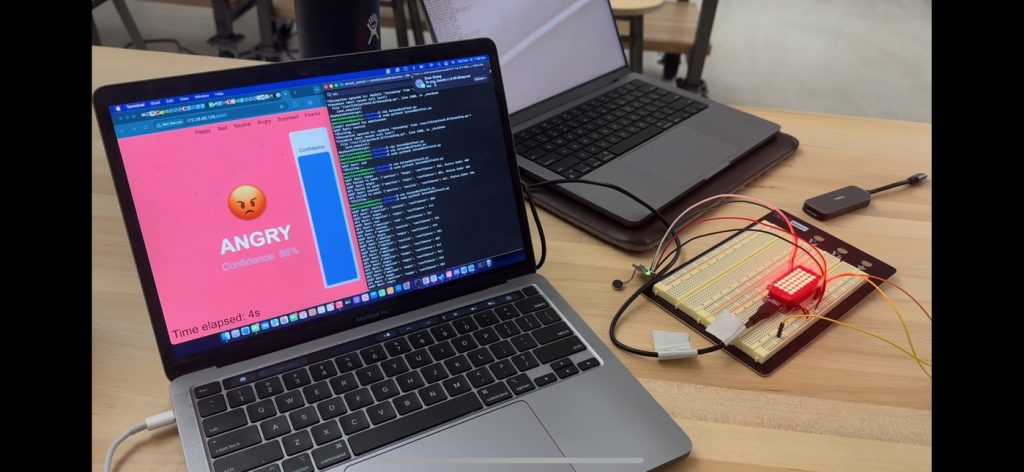

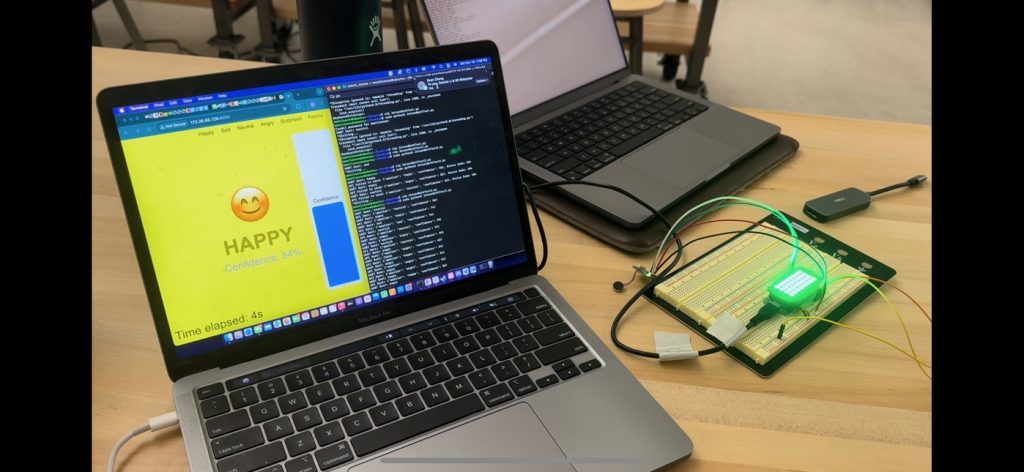

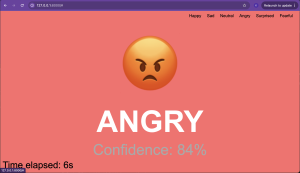

Model output coming from the Jetson to both the Adafruit and the web server:

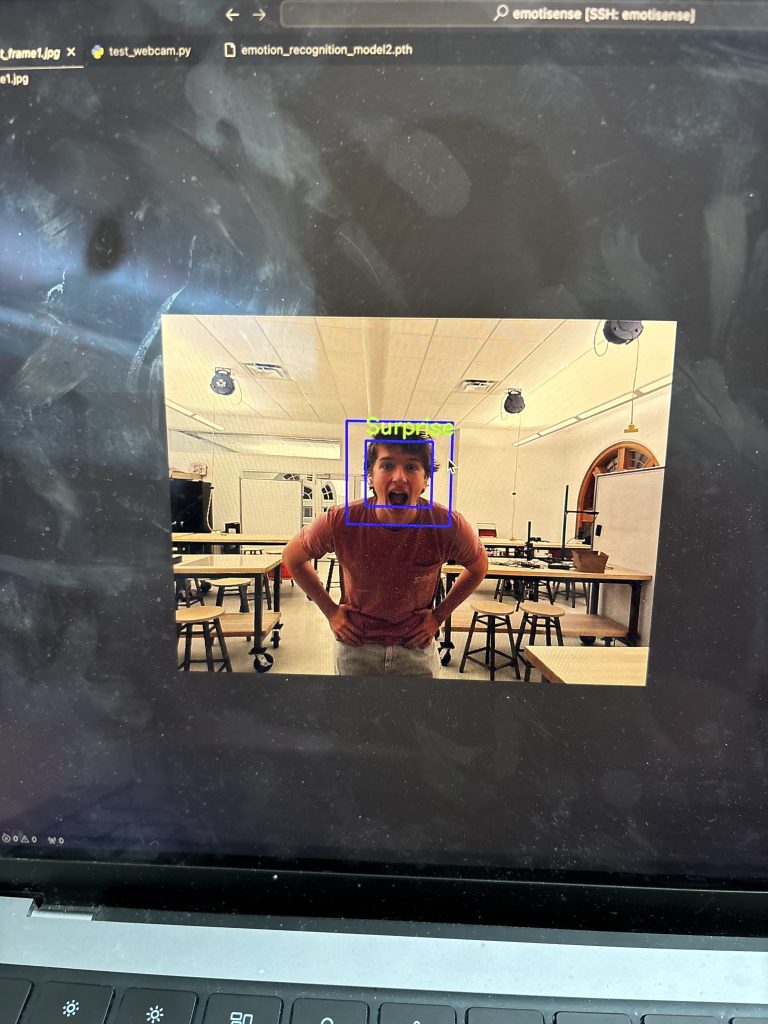

Model output running on Jetson (shown via SSH):

Noah’s Status Report for 11/16

This week, I focused on getting the computer vision model running on the Jetson and integrating the webcam instead of my local computer’s native components. It went pretty well, and here is where I am at now.

- Used SSH to load my model and the facial recognition model onto the Jetson

- Configured the Jetson with a virtual environment that would allow my model to run. This was the complicated part of integration.

- The Jetson’s native software is slightly old, so finding compatible packages is quite the challenge.

- Sourced the appropriate PyTorch, Torchvision, and OpenCV packages

- Transitioned the model to run on the Jetson’s GPU

- This requires a lot of configuration on the Jetson, including downloading the correct CUDA drivers and ensuring compatibility with our chosen version of PyTorch

- Worked on the output of the model so that it would send requests to both the web server and bracelet with proper formatting.
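Below is a minimal standard-library sketch of sending each prediction to both consumers as JSON over HTTP. The endpoint URLs and payload fields are hypothetical. So is the assumption that both the web server and the bracelet accept an HTTP POST, since the report only says that the model sends properly formatted requests to both.

```python
import json
import time
import urllib.request

# Hypothetical endpoints: the report does not give addresses or a message schema.
WEB_SERVER_URL = "http://192.168.1.10:8000/emotion"
BRACELET_URL = "http://192.168.1.20/emotion"


def build_payload(state, confidence, timestamp=None):
    """Build the JSON body for one prediction (assumed schema)."""
    return {
        "state": state,  # e.g. "happy", "neutral", or "no_face"
        "confidence": round(float(confidence), 3),
        "timestamp": time.time() if timestamp is None else timestamp,
    }


def post_json(url, payload, timeout=0.5):
    """POST `payload` as JSON and return the HTTP status code."""
    body = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


def publish(state, confidence):
    """Send the same prediction to the web server and the bracelet."""
    payload = build_payload(state, confidence)
    for url in (WEB_SERVER_URL, BRACELET_URL):
        try:
            post_json(url, payload)
        except OSError as exc:  # URLError and timeouts subclass OSError
            print(f"failed to reach {url}: {exc}")
```

The short timeout and the per-endpoint `try` mean that an unreachable bracelet does not block updates to the website, or stall the camera loop for long.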

I am ahead of schedule, and my part of the project is done for the most part. I’ve hit MVP and will be making slight improvements where I see fit.

Goals for next week:

- Calibrate the model such that it assumes a neutral state when it is not confident in the detected emotion.

- Add averaging of the detected emotions over the past 3 seconds which should increase the confidence in our predictions.

- Add an additional output to indicate if a face is even present.

- Look into exporting the CV model to ONNX, a portable model format that Nvidia’s TensorRT can optimize for the Jetson, so that there will be lower latency.

Noah’s Status Report for 11/9

This week was mostly focused on getting prepared for integration of my CV component into the larger system as a whole. Here are some of the tasks we completed this week:

- Spent some more time doing a randomized grid search to determine the best hyperparameters for our model.

- Improved the model slightly, up to 70% accuracy, which was our goal; however, this doesn’t translate super well to real-time facial recognition.

- Might need to work on some calibration or use a different dataset

- Conducted research on the capabilities of the Jetson we chose and how to load our model onto that Jetson so that it would properly utilize the GPU

- I have a pretty good idea of how this is done and will work on it this week once we are done configuring the SSH on the Jetson.
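A randomized search over a hyperparameter grid samples configurations at random instead of trying every combination. The sketch below uses a hypothetical search space and an `evaluate` callable that stands in for a full training run returning validation accuracy. Neither comes from the report.

```python
import random

# Hypothetical search space; the report does not say which hyperparameters were tuned.
SEARCH_SPACE = {
    "lr": [1e-4, 3e-4, 1e-3, 3e-3],
    "batch_size": [32, 64, 128],
    "dropout": [0.2, 0.3, 0.5],
    "weight_decay": [0.0, 1e-4, 5e-4],
}


def random_search(evaluate, space, n_trials=20, seed=0):
    """Sample `n_trials` configurations from the grid and return the best one.

    `evaluate(config)` should train/validate the model and return a score
    where higher is better (e.g. validation accuracy).
    """
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Toy objective standing in for training: prefers lr=1e-3 and low dropout.
best, score = random_search(
    lambda c: -abs(c["lr"] - 1e-3) - c["dropout"], SEARCH_SPACE, n_trials=300
)
```

Sampling is done with replacement, so the same configuration can be drawn more than once. With an expensive `evaluate`, caching scores by configuration would avoid retraining duplicates.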

I am on schedule and ready to continue working on system integration this upcoming week!

Goals for next week:

- Start testing my model using a different dataset that more closely mimics the resolution we can get from our webcam.

- If this isn’t promising, we might add some calibration to each individual person’s face.

- Download the model to the Jetson and use the webcam / Jetson to run the model instead of my computer

- This will fulfill my portion of the system integration

- I would like to transmit the output from my model to Mason’s website in order to ensure that we are getting reasonable metrics.

Noah’s Status Report for 11/2

I wanted to fully integrate all the computer vision components this week, which was mostly a success! Here are some of the tasks I completed:

- Continued to create and test my new facial recognition model

- This has been kind of a side project to increase the accuracy of the emotion detector in the long run.

- It shows significant benefits, but I’ve decided to hold off for now until we can start with system integration

- Trained a new model with over 200 epochs and 5 convolution layers

- This model reaches an accuracy of 68.8% which is so close to the threshold we set out in the design report.

- I believe we can make it to 70%, but I’ll likely need to dedicate an instance to training for over 6 hours.

- Also need to reduce overfitting again

- Integrated the model to produce labels in real-time

- I’ve included some pictures below to show that it is working!

- It has a noticeable bias to say that you are sad which is a bit of an issue

- I’m still working to discover why this is.

Being mostly done with the model, I am on schedule and ready to work on system integration this upcoming week!

Goals for next week:

- Continue to update the hyperparameters of the model to obtain the best possible accuracy.

- Download the model to the Jetson and use the webcam / Jetson to run the model instead of my computer

- This will fulfill my portion of the system integration

- I would like to transmit the output from my model to Mason’s website in order to ensure that we are getting reasonable metrics.

Team Status Report for 10/26

Project Risks and Management

The most significant risk currently is achieving real-time emotion recognition accuracy on the Nvidia Jetson without overloading the hardware or draining battery life excessively. To manage this, Noah is testing different facial recognition models to strike a balance between speed/complexity and accuracy. Noah has begun working on a custom model based on ResNet with a few custom feature extraction layers, which aims to optimize performance.

Another risk involves ensuring reliable integration between our hardware components, particularly for haptic feedback on the bracelet. Kapil is managing this by running initial tests on a breadboard setup to ensure all components communicate smoothly with the microcontroller.

Design Changes

We’ve moved away from using the Haar-Cascade facial recognition model, opting instead for a custom ResNet-based model. This change was necessary as Haar-Cascade, while lightweight, wasn’t providing the reliability needed for consistent emotion detection. The cost here involves additional training time, but Noah has addressed this by setting up an AWS instance for faster model training.

For hardware, Kapil is experimenting with two NeoPixel configurations to optimize power consumption for the bracelet’s display. Testing both options allows us to select the most efficient display with minimal impact on battery life.

Updated Schedule

Our schedule is on track with components like the website and computer vision model being ahead of schedule.

Progress Highlights

- Model Development: Noah has enhanced image preprocessing, improving our model’s resistance to overfitting. Preliminary testing of the ResNet-based model shows promising results for accuracy and efficiency.

- Website Interface: Mason has made significant strides in developing an intuitive layout with interactive features.

- Hardware Setup: Kapil received all necessary hardware components and is now running integration tests on the breadboard. He’s also drafting a 3D enclosure design, ensuring the secure placement of components for final assembly.

Photos and Updates

Adafruit code for individual components

Training of the new facial recognition model based on ResNet:

Website Initial Designs:

Noah’s Status Report for 10/26

Realistically, this was a very busy week for me, which meant that I didn’t make much progress on the ML component of our project. Knowing that I wouldn’t have much time this past week, I front-loaded a lot of work during the fall break, so I am still ahead of schedule. These are some of the minor things I did:

- Significantly improved image preprocessing with more transformations which have kept our model from overfitting.

- Testing a transition away from the Haar-Cascade facial recognition model.

- I realized that, while lightweight, this model is not very good or reliable.

- I have been working on creating our own model using ResNet as well as multiple components that I have built on top.

- Set up AWS instances to train our model in a much more efficient and faster way.

I am still ahead of schedule given that we have a usable emotion recognition model much before the deadline.

Goals for next week:

- Continue to update the hyperparameters of the model to obtain the best possible accuracy.

- Download the model to my local machine which should allow me to integrate with OpenCV and test the recognition capabilities in real-time.

- Then, download our model onto the Nvidia Jetson to ensure that it runs in real time as quickly as we want.

- After this, I want to experiment with some other facial recognition models and bounding boxes that might make our system more versatile.

Noah’s Status Report for 10/20

These past 2 weeks, I focused heavily on advancing the facial recognition and emotion detection components of the project. Given that we decided to create our own emotion detection model, I wanted to get a head start on this task to ensure that we could reach accuracy numbers that are high enough for our project to be successful. My primary accomplishments these couple weeks included:

- Leveraged the Haar-Cascade facial recognition model to act as a lightweight solution to detect faces in real-time

- Integrated with OpenCV to allow for real-time processing and emotion recognition in the near future

- Created the first iterations of the emotion detection model

- Started testing with the FER-2013 dataset to classify emotions based on facial features.

- Created a training loop using PyTorch. Learned about and implemented features like cyclic learning rates and gradient clipping to stabilize training and prevent overfitting.

- The model is starting to show improvements reaching a test accuracy of over 65%. This is already at the acceptable range for our project; however, I think we have enough time to improve the model up to 70%.

- The model is still pretty lightweight using 5 convolution layers; however, I am considering simplifying a little bit to keep it very lightweight.

- Significantly improved image preprocessing with various transformations which have kept our model from overfitting.
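The two training techniques mentioned above fit in a few lines each. This is a framework-free sketch for illustration, not the project's training loop. `triangular_clr` follows the triangular policy from Leslie Smith's cyclical learning rate paper, and `clip_by_global_norm` rescales gradients the way global-norm clipping does. PyTorch provides built-in equivalents as `torch.optim.lr_scheduler.CyclicLR` and `torch.nn.utils.clip_grad_norm_`.

```python
import math


def triangular_clr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate: rises linearly from base_lr to max_lr
    over step_size iterations, then falls back, repeating every 2 * step_size."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)  # 1 at cycle edges, 0 at the peak
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale gradient vectors so their combined L2 norm is at most max_norm.

    Returns the (possibly) rescaled gradients and the norm measured before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    scale = min(1.0, max_norm / (total_norm + eps))  # never scales gradients up
    return [[g * scale for g in vec] for vec in grads], total_norm


lr_at_peak = triangular_clr(100, base_lr=1e-4, max_lr=1e-3, step_size=100)
clipped, norm_before = clip_by_global_norm([[3.0], [4.0]], max_norm=1.0)
```

Cycling the learning rate periodically pushes the optimizer out of sharp regions. Clipping caps the size of any single update, which is why both are commonly described as stabilizing training.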

I am now ahead of schedule given that we have a usable emotion recognition model much before the deadline.

Goals for next week:

- Continue to update the hyperparameters of the model to obtain the best possible accuracy.

- Download the model to my local machine which should allow me to integrate with OpenCV and test the recognition capabilities in real-time.

- Then, download our model onto the Nvidia Jetson to ensure that it runs in real time as quickly as we want.

- After this, I want to experiment with some other facial recognition models and bounding boxes that might make our system more versatile.