This week, I focused on preparation for the final presentation, testing, and output control ideation. Here’s what I accomplished:

This week’s progress:

- Testing

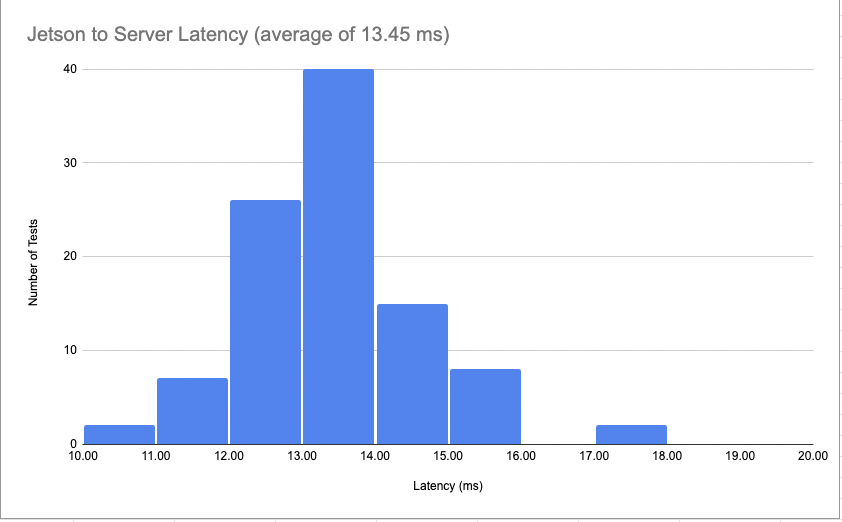

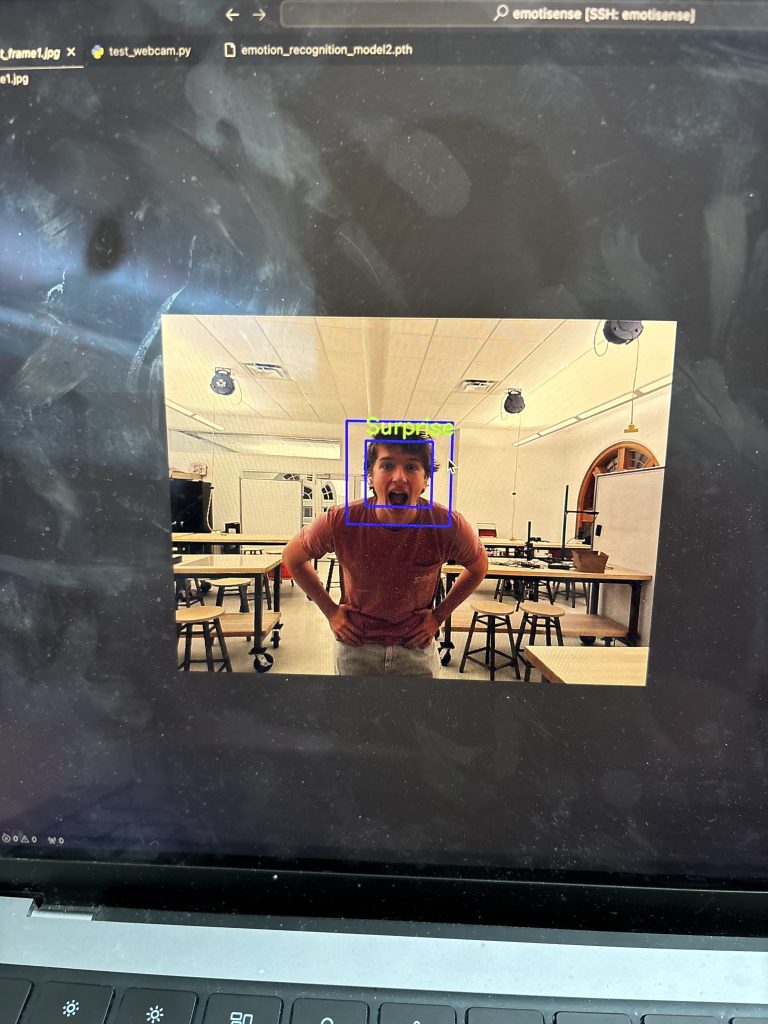

- Ran tests on API latency using Python test automation.

- Found that the API speed is well within our latency requirement (in good network conditions).

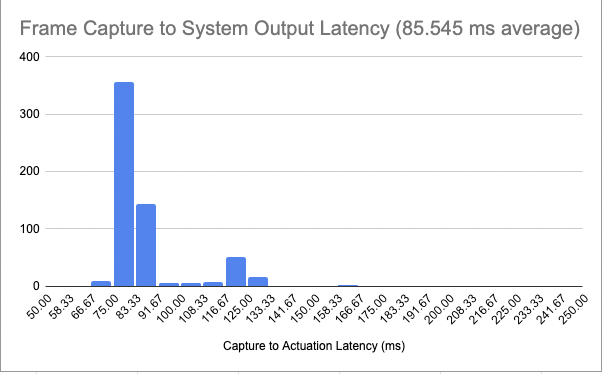

- Ran latency tests on the integrated system, capturing the time between image capture and system output.

- Found that the overall system is also well within our 350 ms latency requirement, consistently operating under 200 ms.

- Wrote a feedback form for next week's user testing.
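The latency measurements above can be sketched roughly as follows. This is a minimal illustration, not the actual test script: `measure_latency_ms` and `summarize` are hypothetical helper names, and the timed workload is a stand-in that would be swapped for the real API request or the capture-to-output path. Only the 350 ms budget comes from our requirements.

```python
import statistics
import time

LATENCY_BUDGET_MS = 350  # latency requirement stated in this post


def measure_latency_ms(call, runs=50):
    """Time `call()` `runs` times and return the per-call latencies in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples):
    """Return the mean, median, and worst-case latency in ms."""
    return {
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "max": max(samples),
    }


if __name__ == "__main__":
    # Stand-in workload (assumption): replace with the real request
    # or the image-capture-to-output path being tested.
    stats = summarize(measure_latency_ms(lambda: time.sleep(0.01), runs=20))
    print(stats)
    print("within budget:", stats["max"] < LATENCY_BUDGET_MS)
```

Checking the worst case rather than only the mean is a deliberate choice here, since a real-time display is judged by its slowest updates.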

- Final presentation

- Worked on our final presentation, particularly the testing, tradeoff, and system solution sections.

- Wrote notes and rehearsed the presentation in the lead-up to class.

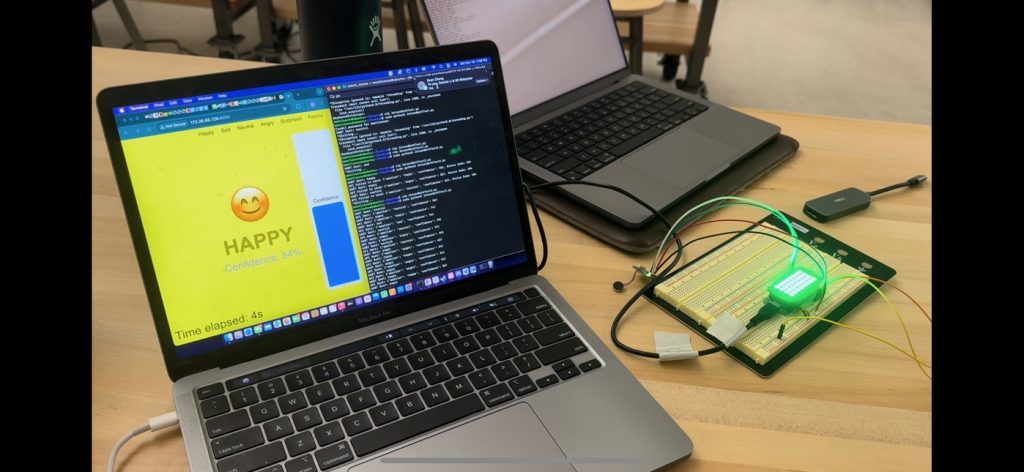

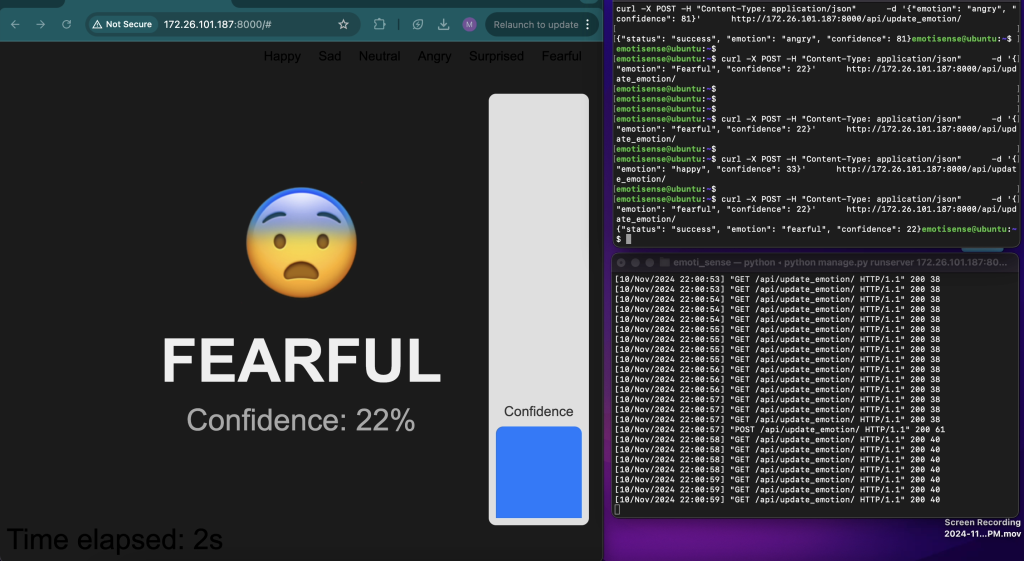

- Control and confidence score ideation

- To improve the efficacy of our system's confidence score, I decided to integrate a control system.

- I plan to use a Kalman filter to regulate what the system displays, in order to account for the noise present in the system output.

- Using the probabilistic weights in the model output, I will try to analyze the output and make a noise-adjusted likelihood estimate.

- I will implement this with NumPy on the Jetson side of the system and update the API in conjunction with it.
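As a rough sketch of the Kalman filter idea above, the class below runs one independent scalar Kalman filter per class over the model's output probabilities, using a simple random-walk model. Nothing here is implemented yet: the class name `ConfidenceKalmanFilter`, the noise variances, and the choice of a random-walk model are all assumptions for illustration, and the real values would need tuning on the Jetson.

```python
import numpy as np


class ConfidenceKalmanFilter:
    """Independent 1-D Kalman filters, one per class (random-walk model)."""

    def __init__(self, num_classes, process_var=1e-3, measurement_var=5e-2):
        # Start from a uniform belief with high uncertainty.
        self.x = np.full(num_classes, 1.0 / num_classes)  # state estimate
        self.p = np.ones(num_classes)                      # estimate variance
        self.q = process_var       # assumed process noise variance
        self.r = measurement_var   # assumed measurement noise variance

    def update(self, probs):
        """Fold one frame of model probabilities into the estimate."""
        z = np.asarray(probs, dtype=float)
        # Predict: the state is assumed constant, so only uncertainty grows.
        p_pred = self.p + self.q
        # Update: blend the prediction and the measurement by the Kalman gain.
        gain = p_pred / (p_pred + self.r)
        self.x = self.x + gain * (z - self.x)
        self.p = (1.0 - gain) * p_pred
        return self.x


if __name__ == "__main__":
    kf = ConfidenceKalmanFilter(num_classes=3)
    rng = np.random.default_rng(0)
    for _ in range(30):
        # Synthetic noisy frame (assumption): true confidences plus noise.
        noisy = np.array([0.7, 0.2, 0.1]) + rng.normal(0, 0.1, size=3)
        estimate = kf.update(noisy)
    print("filtered estimate:", estimate)
    print("displayed class:", int(np.argmax(estimate)))
```

The displayed label would then come from the filtered estimate instead of the raw per-frame output, so a single noisy frame is less likely to flip what the user sees. A larger `measurement_var` smooths more at the cost of slower response to real changes.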

Goals for Next Week:

- Implement Kalman filter on Jetson.

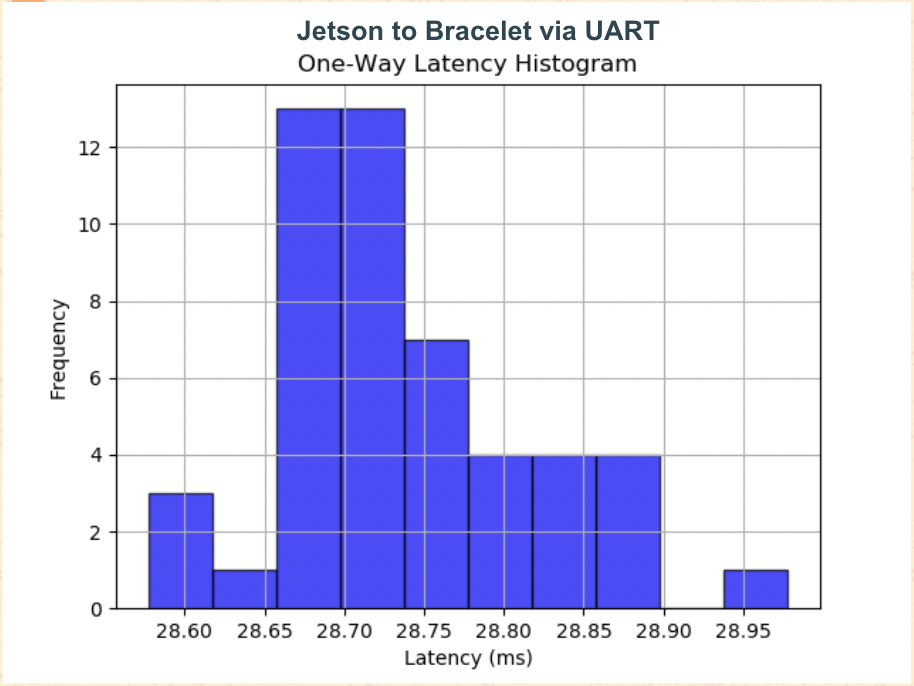

- Assist Kapil with BLE Jetson integration for the Adafruit bracelet.

- Continue user testing and integrate changes into the UI based on the feedback.

- Work on poster and finalize system presentation for final demo.

I’d say we’re on track for the final demo and final assignments.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

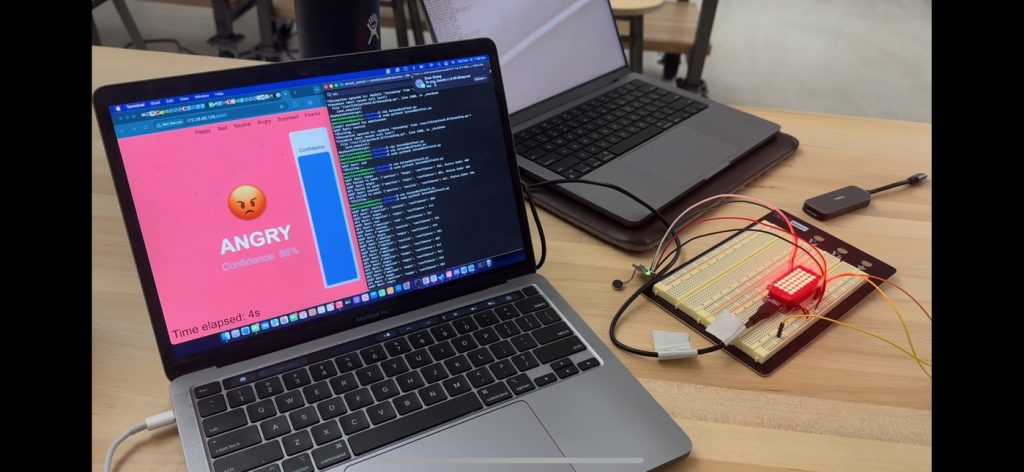

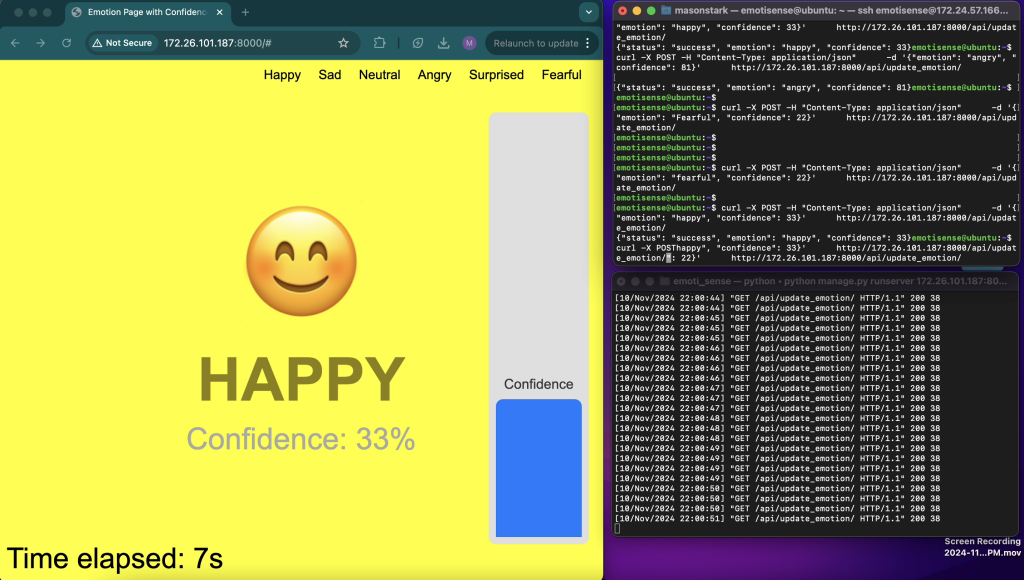

For the web app development, I’ve had to learn how to optimize UIs for low latency, reading the jQuery docs to figure out how best to integrate AJAX to dynamically update the system output. I had to make a lightweight interface and build an API fast enough to give real-time feedback, for which I read the Requests ("HTTP for Humans") docs. I also read forum posts on fast APIs in Python and watched a couple of development videos of people building fast APIs for their own Python applications. For the system integration and Jetson configuration, I watched multiple videos on flashing the OS and read forum posts and docs from NVIDIA. I also consulted LLMs on how to handle bugs in our system integrations and communication protocols. The main strategies and resources I used were forums (Stack Overflow, NVIDIA), docs (Requests, jQuery, NVIDIA), and YouTube videos (independent content creators).
