Project Risks and Management

The most significant risk at present is achieving accurate, real-time emotion recognition on the NVIDIA Jetson without overloading the hardware or draining the battery excessively. To manage this, Noah is testing several facial recognition models to find the right balance between speed and complexity on one side and accuracy on the other. He has also begun building a custom model that combines a ResNet backbone with a few custom feature-extraction layers, with the aim of improving performance.
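One way to make the speed/accuracy comparison concrete is to time each candidate model against a per-frame budget. The following Python sketch is illustrative and not our actual benchmarking code. It assumes each candidate is wrapped in a `predict(frame)` callable. The names `mean_latency_ms` and `meets_realtime_budget` and the 30 fps target are hypothetical. Accuracy would still have to be measured separately on a labeled validation set.

```python
import statistics
import time
from typing import Callable, Sequence


def mean_latency_ms(predict: Callable[[object], object],
                    frames: Sequence[object],
                    warmup: int = 5) -> float:
    """Mean per-frame latency of `predict`, in milliseconds.

    The first `warmup` frames are processed but not timed, because early
    inferences are often slower (lazy initialization, caches warming up).
    `predict` must block until its result is ready; if inference runs
    asynchronously on the GPU, timing only the launch would be too optimistic.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warmup iterations")
    for frame in frames[:warmup]:
        predict(frame)
    timings = []
    for frame in frames[warmup:]:
        start = time.perf_counter()
        predict(frame)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)


def meets_realtime_budget(latency_ms: float, target_fps: float = 30.0) -> bool:
    """True if one inference fits within a single frame period at target_fps."""
    return latency_ms <= 1000.0 / target_fps


if __name__ == "__main__":
    # Stand-in "models" so the sketch runs anywhere; on the Jetson these
    # would wrap the real inference calls for each candidate network.
    frames = [[0.5] * 1000 for _ in range(50)]
    candidates = {
        "light": lambda f: sum(f),
        "heavy": lambda f: sorted(f * 20),
    }
    for name, model in candidates.items():
        ms = mean_latency_ms(model, frames)
        ok = meets_realtime_budget(ms)
        print(f"{name}: {ms:.3f} ms/frame, fits 30 fps budget: {ok}")
```

A model that misses the latency budget on the Jetson could be set aside before its accuracy is compared. This keeps the more expensive accuracy evaluation focused on models that are actually deployable.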

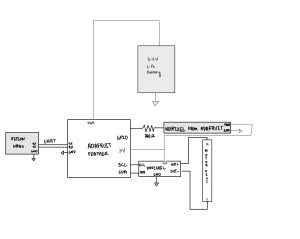

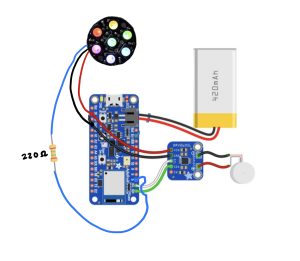

Another risk is reliable integration of our hardware components, particularly the haptic feedback on the bracelet. Kapil is mitigating this by running initial tests on a breadboard to confirm that every component communicates reliably with the microcontroller.

Design Changes

We have moved away from the Haar cascade classifier in favor of a custom ResNet-based model. Although Haar cascades are lightweight, they did not provide the reliability needed for consistent emotion detection. The trade-off is additional training time, which Noah has offset by setting up an AWS instance for faster model training.

On the hardware side, Kapil is experimenting with two NeoPixel configurations for the bracelet’s display to reduce power consumption. Testing both will let us choose the more power-efficient option, minimizing the display’s impact on battery life.
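While the two configurations are being evaluated, a rough power budget can help frame the comparison. The Python sketch below is only a back-of-the-envelope estimate. It uses Adafruit's commonly cited rule of thumb of up to about 60 mA per NeoPixel at full-brightness white, which works out to roughly 20 mA per color channel. The pixel counts, brightness levels, and 150 mAh battery are hypothetical placeholders, not Kapil's actual configurations. Current measured on the breadboard remains the real basis for the decision.

```python
from dataclasses import dataclass

# Rule of thumb from Adafruit's NeoPixel guide: a pixel draws up to about
# 60 mA at full-brightness white, i.e. roughly 20 mA per lit color channel.
MAX_MA_PER_CHANNEL = 20.0


@dataclass
class PixelConfig:
    name: str
    num_pixels: int
    brightness: float   # global brightness scale, 0.0 to 1.0
    channels_lit: int   # how many of R, G, B are driven (1 to 3)

    def __post_init__(self) -> None:
        if not 0.0 <= self.brightness <= 1.0:
            raise ValueError("brightness must be between 0.0 and 1.0")
        if not 1 <= self.channels_lit <= 3:
            raise ValueError("channels_lit must be 1, 2, or 3")


def estimated_current_ma(cfg: PixelConfig) -> float:
    """Estimated LED current, assuming it scales linearly with brightness.

    Ignores each pixel's small idle draw and everything else on the board
    (microcontroller, haptic motor), so the real load will be higher.
    """
    return cfg.num_pixels * cfg.channels_lit * MAX_MA_PER_CHANNEL * cfg.brightness


def battery_hours(capacity_mah: float, load_ma: float) -> float:
    """Idealized runtime: capacity divided by load, with no converter losses."""
    return capacity_mah / load_ma


if __name__ == "__main__":
    battery_mah = 150.0  # hypothetical bracelet battery capacity
    configs = [
        PixelConfig("config A (hypothetical)", num_pixels=12, brightness=0.2, channels_lit=1),
        PixelConfig("config B (hypothetical)", num_pixels=8, brightness=0.25, channels_lit=1),
    ]
    for cfg in configs:
        load = estimated_current_ma(cfg)
        print(f"{cfg.name}: ~{load:.0f} mA LED load, ~{battery_hours(battery_mah, load):.1f} h")
```

Under this model, lowering the global brightness or lighting a single color channel reduces the estimated load roughly in proportion. That is why both settings are worth varying alongside the pixel count.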

Updated Schedule

We are on schedule overall, and some components, such as the website and the computer vision model, are ahead of schedule.

Progress Highlights

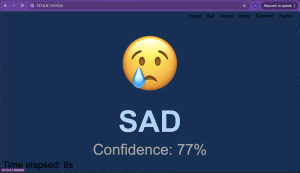

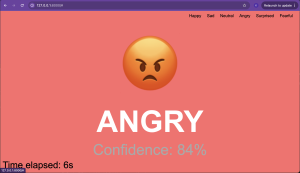

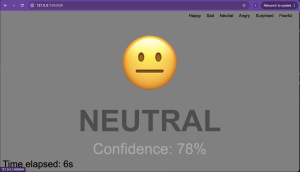

- Model Development: Noah has improved the image preprocessing pipeline, which makes our model less prone to overfitting. Preliminary tests of the ResNet-based model show promising accuracy and efficiency.

- Website Interface: Mason has made significant progress on an intuitive layout with interactive features.

- Hardware Setup: Kapil has received all the necessary hardware components and is now running integration tests on the breadboard. He is also drafting a 3D enclosure design that holds the components securely for final assembly.

Photos and Updates

Adafruit code for individual components:

Training of the new ResNet-based facial recognition model:

Initial website designs: