Sept 21

This week, I researched existing boxing pose databases for use with MediaPipe. After some exploration, I found very few publicly available datasets designed specifically for boxing pose recognition. Given this, I began exploring the possibility of training my own boxing pose classifier.

To address the lack of existing data, I considered a systematic approach, starting with the creation of a custom dataset using MediaPipe’s Pose solution. This involves capturing webcam videos of different boxing actions (e.g., jabs, hooks, uppercuts), extracting key pose landmarks, and annotating them. I wrote some preliminary code to collect data and extract landmarks. Next, I plan to split the data into training, validation, and test sets, and possibly train machine learning models such as SVMs or neural networks to classify boxing poses in real time.
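The planned split could look like the minimal sketch below. It assumes each annotated frame has already been stored as a flat list of landmark coordinates plus a label; the name `stratified_split` and the 70/15/15 ratios are illustrative choices, not part of the original code.

```python
import random
from collections import defaultdict

# One sample = (feature_vector, label). The feature vector is assumed to be the
# flattened (x, y, z) coordinates of MediaPipe's 33 pose landmarks for one frame.
Sample = tuple[list[float], str]


def stratified_split(samples: list[Sample], train: float = 0.7, val: float = 0.15,
                     seed: int = 0) -> dict[str, list[Sample]]:
    """Split samples into train/val/test while keeping each label's share similar."""
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        n_train = int(len(group) * train)
        n_val = int(len(group) * val)
        splits["train"].extend(group[:n_train])
        splits["val"].extend(group[n_train:n_train + n_val])
        splits["test"].extend(group[n_train + n_val:])  # remainder goes to test
    return splits
```

Splitting per label keeps less frequent moves (for example, uppercuts) represented in every split, which matters with a small home-made dataset.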

Additionally, I studied the MediaPipe Unity Plugin. I downloaded the plugin package and reviewed its documentation, which will help with integrating pose tracking into the game design.

My progress is on schedule. Next week, I aim to start annotating poses and to present some experimental results obtained with the current method.

Sept 28

This week, I initially intended to train a model to classify various boxing poses using machine learning techniques. However, after exploring the available data and attempting to build a dataset, I encountered a significant roadblock: the lack of sufficient and diverse pose data for training. Without such data, accurately classifying dynamic and nuanced boxing poses was not feasible, which led me to reevaluate the approach.

Given the limited dataset, I shifted my focus from training a machine learning model to directly mapping the landmarks detected by MediaPipe’s pose detection model to control the game character’s movements. This approach allowed me to bypass the need for complex classification models and instead leverage the pose landmarks, such as hand, elbow, and shoulder positions, to drive the avatar’s movements in real time.
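To make the direct-mapping idea concrete, here is a small sketch that turns three landmarks into a joint angle. Using the elbow angle from the shoulder, elbow, and wrist landmarks to drive the avatar's arm is an assumed example of such a mapping, and `joint_angle` is a hypothetical helper, not the project's code.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by points a-b-c (e.g. shoulder-elbow-wrist)."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(ba[i] * bc[i] for i in range(3))
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("degenerate joint: two landmarks coincide")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))
```

A fully extended arm at the end of a straight punch gives an elbow angle near 180°, while a guard position gives a much smaller angle, so the value can drive an arm bend directly without any classifier.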

To implement this, I wrote Python code that captures real-time body pose landmarks using MediaPipe. The code processes video input from a webcam and extracts the x, y, and z coordinates of key body landmarks, such as the wrists and shoulders. These landmarks are then formatted into strings that can be easily transmitted. I set up UDP communication to send these pose data packets from Python to Unity: the landmark string is encoded and sent with Python’s socket library via UDP to the IP address and port on which Unity listens.
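A minimal sketch of the formatting and sending step, using only the standard `socket` module. The comma-separated format, the four-decimal precision, and the address `127.0.0.1:5052` are placeholders, since the project's actual string layout, IP, and port are not stated.

```python
import socket

# Placeholder endpoint: Unity assumed to listen on the same machine.
UNITY_ADDRESS = ("127.0.0.1", 5052)


def format_landmarks(landmarks):
    """Flatten [(x, y, z), ...] into a string such as '0.5000,0.2500,-0.1000,...'."""
    return ",".join(f"{v:.4f}" for point in landmarks for v in point)


def send_landmarks(sock, landmarks, address=UNITY_ADDRESS):
    """Encode the formatted landmarks as UTF-8 and send them as one UDP datagram."""
    sock.sendto(format_landmarks(landmarks).encode("utf-8"), address)
```

Usage: create the socket once with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_landmarks` once per processed frame. All 33 landmarks at this precision fit comfortably in a single datagram, so no framing logic is needed.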

On the Unity side, I wrote C# code to receive the landmark data sent over UDP. A UDP listener runs in a background thread and continuously receives data from Python. The data is then decoded and parsed to extract the landmark positions, which are used to control the avatar’s movements in real time. I tested the communication, and it worked as expected.
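The Unity listener itself is C#, so the following is only a Python analogue of the same pattern (background receive thread, parse, keep the latest pose), which can be handy for testing the sender without launching Unity. `LandmarkListener` and `parse_landmarks` are hypothetical names, and the comma-separated wire format is an assumption.

```python
import socket
import threading


def parse_landmarks(message):
    """Turn 'x0,y0,z0,x1,y1,z1,...' back into a list of (x, y, z) tuples."""
    values = [float(v) for v in message.split(",") if v]
    if len(values) % 3 != 0:
        raise ValueError(f"expected a multiple of 3 values, got {len(values)}")
    return [tuple(values[i:i + 3]) for i in range(0, len(values), 3)]


class LandmarkListener:
    """Receives UDP packets on a daemon thread and keeps only the latest pose."""

    def __init__(self, host="127.0.0.1", port=0):
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.bind((host, port))  # port=0 lets the OS pick a free port
        self.sock.settimeout(0.5)     # lets the loop notice stop() promptly
        self.latest = None
        self._lock = threading.Lock()
        self._running = True
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()

    @property
    def address(self):
        return self.sock.getsockname()

    def _loop(self):
        while self._running:
            try:
                data, _ = self.sock.recvfrom(65535)
            except socket.timeout:
                continue
            try:
                pose = parse_landmarks(data.decode("utf-8"))
            except ValueError:  # also covers UnicodeDecodeError
                continue        # skip malformed packets instead of killing the thread
            with self._lock:
                self.latest = pose

    def get_latest(self):
        with self._lock:
            return self.latest

    def stop(self):
        self._running = False
        self._thread.join()
        self.sock.close()
```

Keeping only the newest pose, rather than queueing every packet, means the render loop never falls behind the camera; a dropped UDP packet simply costs one frame of freshness.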

My progress is generally on schedule. For the next step, I aim to build game objects (33 spheres corresponding to the 33 landmarks) and a C# motion script to implement the mapping.

OCT 5

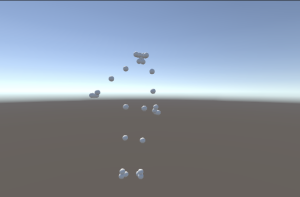

This week, I focused on implementing the action code in Unity to enable real-time body tracking using UDP data from MediaPipe. The action code sets up spheres in Unity to represent body keypoints, whose coordinates are received over UDP from the Python tracking script. Each keypoint’s position is parsed from the UDP stream and assigned to the corresponding sphere’s transform, so the spheres follow the tracked body and form a real-time 3D visualization of its movement.
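Before a landmark can be assigned to a sphere's transform, its coordinates have to be converted into world units. MediaPipe Pose reports x and y normalized to [0, 1] by the image width and height (with y pointing down) and z on roughly the same scale as x. The sketch below assumes a Unity camera looking along +z at the spheres and an arbitrary `scale`; both are assumptions, and `to_unity_position` is a hypothetical helper.

```python
def to_unity_position(landmark, image_width, image_height, scale=5.0):
    """Map one MediaPipe landmark (normalized x, y; y pointing down) to a
    Unity-style position (y up), centered on the image center."""
    x, y, z = landmark
    aspect = image_width / image_height
    # x is normalized by width and y by height, so multiply x by the aspect
    # ratio to keep horizontal and vertical distances in the same units.
    ux = (x - 0.5) * aspect * scale
    uy = (0.5 - y) * scale           # flip: image y grows downward, Unity y grows upward
    # z shares x's scale; smaller z means closer to the camera, which stays
    # closer to a Unity camera looking along +z (assumed scene setup).
    uz = z * aspect * scale
    return (ux, uy, uz)
```

Skipping the aspect-ratio correction is a common cause of bodies that look horizontally squashed or stretched on a 16:9 or 4:3 webcam feed.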

Additionally, I researched applying filters to improve tracking accuracy. I explored using a Kalman filter to smooth out jitter caused by noisy pose data. The filter should reduce abrupt changes in the positions of the body points, improving the visual quality of the motion representation.

I am currently on schedule. Next week, I plan to further test and fine-tune these filters so that the system responds smoothly and accurately during rapid body movements.

OCT 11

This week, I focused on implementing a Kalman filter to improve the stability of landmark data in our computer vision system. The Kalman filter was integrated into the Python code to predict and correct landmark positions by continuously estimating the state of each joint. It uses a recursive algorithm that combines a prediction from the previous state with each new measurement, weighted by the measurement noise, which significantly reduces jitter and ensures smoother real-time tracking of fast-moving gestures.
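For reference, a per-coordinate (scalar) Kalman filter with a constant-position model follows the recursion below. The constant-position model is an assumption, since the state model used in the project is not stated. Here $z_k$ is the measured landmark coordinate at frame $k$, $\hat{x}$ the estimate, $P$ its variance, $Q$ the process-noise variance, and $R$ the measurement-noise variance:

$$
\begin{aligned}
\hat{x}_{k|k-1} &= \hat{x}_{k-1|k-1}, & P_{k|k-1} &= P_{k-1|k-1} + Q,\\
K_k &= \frac{P_{k|k-1}}{P_{k|k-1} + R}, & \hat{x}_{k|k} &= \hat{x}_{k|k-1} + K_k\left(z_k - \hat{x}_{k|k-1}\right),\\
P_{k|k} &= (1 - K_k)\,P_{k|k-1}.
\end{aligned}
$$

A larger $R$ relative to $Q$ yields a smaller gain $K_k$, meaning heavier smoothing but more lag behind fast punches.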

While the video capture is running:

- Read each frame from the video.
- If the frame is unavailable, reset the video capture.
- Use PoseDetector to find and extract landmarks from the frame.
- If landmarks are detected:
  - Initialize a string to store landmark data.
  - Apply a Kalman filter to smooth landmark positions. For each joint:
    - Update the Kalman gain.
    - Compute the corrected position.
  - Update landmark states with the filtered data.
  - Apply a low-pass filter to further refine the data.
  - Format and prepare the filtered landmark data for transmission.
  - Transmit the processed landmark data over the UDP socket.
- Display the processed frame in a window.
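The filtering steps of the loop above can be sketched in plain Python as follows. Frame capture, PoseDetector, UDP, and display are left out so the smoothing logic runs on its own. The constant-position state model, the noise values `q` and `r`, and the low-pass weight `alpha` are assumptions for illustration, not the project's tuned settings; `ScalarKalman` and `LandmarkSmoother` are hypothetical names.

```python
class ScalarKalman:
    """Constant-position Kalman filter for one coordinate (assumed model)."""

    def __init__(self, q=1e-4, r=1e-2):
        self.q, self.r = q, r   # process / measurement noise variances (guesses)
        self.x = None           # current state estimate
        self.p = r              # estimate variance

    def update(self, z):
        if self.x is None:      # initialize from the first measurement
            self.x, self.p = z, self.r
            return self.x
        self.p += self.q                    # predict: position unchanged, uncertainty grows
        k = self.p / (self.p + self.r)      # update the Kalman gain
        self.x += k * (z - self.x)          # compute the corrected position
        self.p *= 1 - k
        return self.x


class LandmarkSmoother:
    """Kalman filter per joint coordinate, followed by an exponential low-pass filter."""

    def __init__(self, num_joints=33, alpha=0.5, q=1e-4, r=1e-2):
        self.filters = [[ScalarKalman(q, r) for _ in range(3)] for _ in range(num_joints)]
        self.alpha = alpha      # weight given to the newest Kalman output
        self.previous = None

    def process(self, landmarks):
        """landmarks: list of (x, y, z) tuples, one per joint, for a single frame."""
        kalman_out = [
            tuple(f.update(v) for f, v in zip(joint_filters, point))
            for joint_filters, point in zip(self.filters, landmarks)
        ]
        if self.previous is None:
            smoothed = kalman_out
        else:
            smoothed = [
                tuple(self.alpha * n + (1 - self.alpha) * o for n, o in zip(new, old))
                for new, old in zip(kalman_out, self.previous)
            ]
        self.previous = smoothed  # update landmark states with the filtered data
        return smoothed
```

Each frame, the detected (x, y, z) tuples go through `process`, and the returned list is what gets formatted and sent. Raising `r` or lowering `alpha` smooths more at the cost of latency, which is the key trade-off for fast boxing motions.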

I am on schedule this week. Next week, I aim to focus on integrating this refined pose data with the gaming environment in Unity, working closely with Shithe. This step will involve mapping the filtered landmark data to the avatar’s movements in Unity, ensuring precise synchronization between real-world gestures and in-game actions. Our goal is to create a seamless interaction experience that takes full advantage of the stability gained from the Kalman filter.

OCT 26

This week, I focused on integrating the computer vision component with the game model, collaborating closely with Shithe to streamline the process. The UDP communication was reliable; however, I encountered challenges with scaling the landmarks accurately. Although the data was transmitted correctly, the avatar’s movements did not match the expected physical proportions, which made its poses appear distorted. Despite several attempts to adjust for the model’s coordinate system, I could not achieve the correct scaling.

Despite the setbacks, I am not far behind schedule. Next week, I plan to focus on refining the scaling approach by experimenting with different coordinate transformations. Shithe and I will schedule more sync sessions to align our adjustments and verify results incrementally, ensuring we stay on the right track.
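One common way to attack this kind of distortion, offered here as an assumed technique rather than the one eventually used, is to stop copying landmark positions directly. Instead, keep only each bone's direction from MediaPipe and take its length from the avatar's own skeleton. In this minimal sketch, the joint names, the arm chain, and the bone lengths are hypothetical.

```python
import math

# Hypothetical bone chain from the shoulder down to the wrist.
ARM_CHAIN = [("shoulder", "elbow"), ("elbow", "wrist")]


def retarget_chain(tracked, avatar_lengths, root_position, chain=ARM_CHAIN):
    """Rebuild joint positions using tracked bone directions and avatar bone
    lengths, so differing body proportions do not distort the pose."""
    result = {chain[0][0]: tuple(root_position)}
    for parent, child in chain:
        d = [tracked[child][i] - tracked[parent][i] for i in range(3)]
        length = math.hypot(*d)
        if length == 0:
            raise ValueError(f"zero-length bone {parent}->{child}")
        unit = [c / length for c in d]                 # direction from MediaPipe
        bone = avatar_lengths[(parent, child)]         # length from the avatar rig
        result[child] = tuple(result[parent][i] + unit[i] * bone for i in range(3))
    return result
```

Because only directions cross over from the camera, the avatar's limbs keep their modeled lengths no matter how far the player stands from the webcam or how their proportions differ from the rig's.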

NOV 2

This week, the main focus was mapping MediaPipe coordinates to the avatar’s skeletal structure in Unity. One of the most significant difficulties encountered was ensuring that each joint accurately aligns with the avatar’s bones and maintains realistic, fluid movement. Handling the complex transformations and orientation requirements for each bone, relative to others, proved more challenging than expected, necessitating further adjustments and testing.

To solve these issues, I studied the VNectModel class. Its PositionIndex enum defines specific body points, such as the shoulders, arms, and legs, providing an organized way to manage each joint. JointPoint, another critical class, represents each skeletal point: it holds the point’s 2D and 3D coordinates and handles the bone transformations needed for accurate movement. It also includes properties for visibility and inverse rotations, which are essential for ensuring that bones move naturally relative to one another.

Additionally, the Skeleton class uses LineRenderer components to visualize the connections between joints, bringing the skeletal structure to life. The PoseUpdate method calculates distances between bones to derive realistic z-axis movement, updating the avatar’s position based on the latest joint positions provided by MediaPipe. This function only applies transformations when joints are visible, which helps reduce flickering or abrupt changes in the avatar’s movement.
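The two ideas in PoseUpdate described above (updating only visible joints, and inferring forward/backward movement from bone distances) can be illustrated with a loose Python analogue. This is not VNectModel's actual code. `Joint`, `pose_update`, `depth_offset`, the 0.5 visibility threshold, and `z_scale` are hypothetical; the visibility value is assumed to come from MediaPipe's per-landmark `visibility` score.

```python
import math
from dataclasses import dataclass


@dataclass
class Joint:
    """Loose analogue of a JointPoint: a tracked position plus a visibility flag."""
    position: tuple = (0.0, 0.0, 0.0)
    visible: bool = False


def pose_update(joints, detections, threshold=0.5):
    """detections maps joint name -> ((x, y, z), visibility). Low-confidence
    joints keep their last position instead of jumping to a noisy estimate."""
    for name, (position, visibility) in detections.items():
        joint = joints.setdefault(name, Joint())
        joint.visible = visibility >= threshold
        if joint.visible:
            joint.position = position
    return joints


def depth_offset(segments, reference_height, z_scale=1.0):
    """Estimate movement toward/away from the camera from apparent body size:
    if the summed bone lengths shrink relative to a calibrated reference,
    the player has stepped back (positive offset)."""
    height = sum(math.dist(a, b) for a, b in segments)
    return (reference_height - height) / reference_height * z_scale
```

Holding the last good position for an occluded joint trades a brief freeze for the absence of flicker, which usually looks far less jarring on an avatar.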

Looking ahead, the priority is to finalize the joint mapping and ensure fluid, realistic movements. Improving the IK settings and refining joint visibility rules will be essential steps in reducing any jitter or unnatural positioning. I aim to continue working on the mapping process in the coming week to help get the project back on track.

NOV 9

This week, I reached a milestone by implementing the algorithm that maps MediaPipe landmarks to the Unity game avatar. The core logic maps key joint landmarks captured via MediaPipe to the corresponding parts of the Unity avatar. A key component is a dictionary that pairs each MediaPipe joint with its Unity counterpart, so that each body part’s real-world movement drives the virtual avatar’s positioning. The code also adjusts joint rotations dynamically using quaternions: quaternion inversions and transformations produce realistic, smooth rotations in Unity based on the calculated 3D orientation of each joint. Finally, the code applies linear interpolation (lerp) to vectors to smooth transitions between the avatar’s movements, enhancing visual fluidity and reducing jitter.
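The rotation and smoothing ideas can be illustrated with a dependency-free sketch; in Unity, `Quaternion.FromToRotation`, `Quaternion.Inverse`, and `Vector3.Lerp` cover the same ground. Quaternions here are (w, x, y, z) tuples, and the helper names are hypothetical rather than taken from the project.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def quat_multiply(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_inverse(q):
    """For a unit quaternion the inverse equals the conjugate."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def rotate(q, v):
    """Rotate vector v by unit quaternion q via q * (0, v) * q^-1."""
    _, x, y, z = quat_multiply(quat_multiply(q, (0.0, *v)), quat_inverse(q))
    return (x, y, z)


def from_to_rotation(u, v):
    """Shortest-arc unit quaternion that turns direction u onto direction v."""
    u, v = normalize(u), normalize(v)
    d = sum(a * b for a, b in zip(u, v))
    if d < -1.0 + 1e-9:
        # Opposite directions: any axis perpendicular to u works for a 180° turn.
        helper = (1.0, 0.0, 0.0) if abs(u[0]) < 0.9 else (0.0, 1.0, 0.0)
        return (0.0, *normalize(cross(u, helper)))
    return normalize((1.0 + d, *cross(u, v)))


def lerp(a, b, t):
    """Move a fraction t of the way from a to b (t in [0, 1])."""
    return tuple(p + (q - p) * t for p, q in zip(a, b))
```

One possible use: a bone's target rotation is `quat_multiply(from_to_rotation(rest_dir, tracked_dir), rest_rotation)`, where `rest_dir` and `rest_rotation` are the bone's direction and rotation in the avatar's bind pose. Each frame, the displayed joint target is `lerp(previous, target, t)` with a smoothing factor `t` below 1.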

Next week, I plan to focus on tuning the scaling factor and improving the lerp smoothing factor further. These adjustments aim to optimize the avatar’s responsiveness and provide a more accurate and smoother user experience during gameplay.

Check out the link below for a short demo.

https://drive.google.com/file/d/12WlJTbA2-HPqiBN8q2q5dFiIJFpqSk_l/view?usp=drive_link

NOV 16

NOV 30

Over the past two weeks, I have concentrated on the integration and testing phase of the computer vision boxing project, working closely with my teammates. A key focus has been ensuring that the mapping of ground truth body positions to the game avatar achieves high accuracy. To test this, I implemented a methodology that compares the arm rotation angles from three different perspectives—top, side, and front views. By analyzing these views, we were able to cover all degrees of freedom for the arm, which provided a comprehensive assessment of the mapping accuracy. This approach allowed us to identify and address inconsistencies in how the game avatar mirrors real-world movements.
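The three-view comparison can be sketched by projecting an arm direction (for example, the shoulder-to-elbow vector) onto each view plane and comparing 2D angles. The axis convention (x right, y up, z forward), the plane assignments, and the helper names are assumptions for illustration.

```python
import math

# Assumed axes: x right, y up, z forward. Each view keeps two axes (horizontal, vertical).
VIEWS = {
    "front": (0, 1),  # looking along z: the x-y plane
    "side": (2, 1),   # looking along x: the z-y plane
    "top": (0, 2),    # looking along y: the x-z plane
}


def view_angles(direction):
    """Angle (degrees) of a 3D bone direction as it appears in each 2D view."""
    return {
        view: math.degrees(math.atan2(direction[j], direction[i]))
        for view, (i, j) in VIEWS.items()
    }


def angle_errors(ground_truth, avatar):
    """Absolute per-view angle difference, wrapped into [0, 180] degrees."""
    gt, av = view_angles(ground_truth), view_angles(avatar)
    return {v: abs((gt[v] - av[v] + 180.0) % 360.0 - 180.0) for v in VIEWS}
```

When the arm points straight along a view's line of sight, its projection collapses to a point and that view's angle is meaningless, so such frames are best excluded for that view.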

As I progressed through the testing stages, I realized I needed a deeper understanding of quaternion transformations and their projection onto the X, Y, and Z planes. This knowledge was essential for extracting the game avatar’s arm rotation values. To acquire it, I read some papers reviewing the mathematical principles of quaternion transformations and their relevance to 3D applications. In parallel, I watched YouTube tutorials to observe practical implementations in Unity.

The project is currently on schedule, and my plan for the upcoming week involves further integration and debugging.

DEC 7

This past week, I successfully practiced and delivered the final presentation. One of the major technical accomplishments this week was implementing a new version of the Kalman filter. This update improved tracking accuracy and stability by providing smoother transitions and better handling of noise in the input data. The Kalman filter showed clear advantages over the previous linear interpolation (lerping) approach, which, while faster, was less resilient under fluctuating conditions. A detailed analysis of the trade-offs between the two methods was documented to support the system’s final optimization.

The project remains on schedule. For the coming week, I will work with the team to perform final testing under various real-world conditions, such as different lighting and movement scenarios. Any remaining bugs or issues will be addressed to ensure the system is reliable.