This week, we advanced both the hardware and software sides of the smart charging table project while staying on schedule. A key milestone was finalizing our design review report, to which each team member contributed different sections. For example, in the related work section, we examined a previous team’s similar project, identified the challenges they faced, and integrated improvements into our design. This reflection on prior work led to several modifications to our original design, particularly in how we will combine the gantry system with the robotic control ideas.

On the hardware side, we received and began working with the Nvidia Jetson Orin Nano, which will serve as the central control unit for our project. This week we focused on familiarizing ourselves with its interface, setting up the initial programming environment, and conducting basic circuit tests in Techspark. We set up the Jetson Orin Nano with a keyboard and a monitor connected over DisplayPort, and found the GPIO header pinout in online resources. We used this pinout to test basic functionality, such as driving an LED and a motor driver through breadboard circuits. We also explored communication protocols such as UART, SPI, and I2C, which will be critical for integrating sensors and other components going forward.

In parallel, we continued testing the camera system, despite not yet receiving the stereo camera we ordered. Using the available camera, we implemented basic image filtering tests, simulating real-world conditions by positioning the camera to capture electronics on a table. We are still refining these tests, taking into account environmental factors like lighting and object positioning. Once the stereo camera arrives, we plan to compare its performance with the Nvidia Jetson Nano’s built-in camera to determine which setup is more efficient for our application.

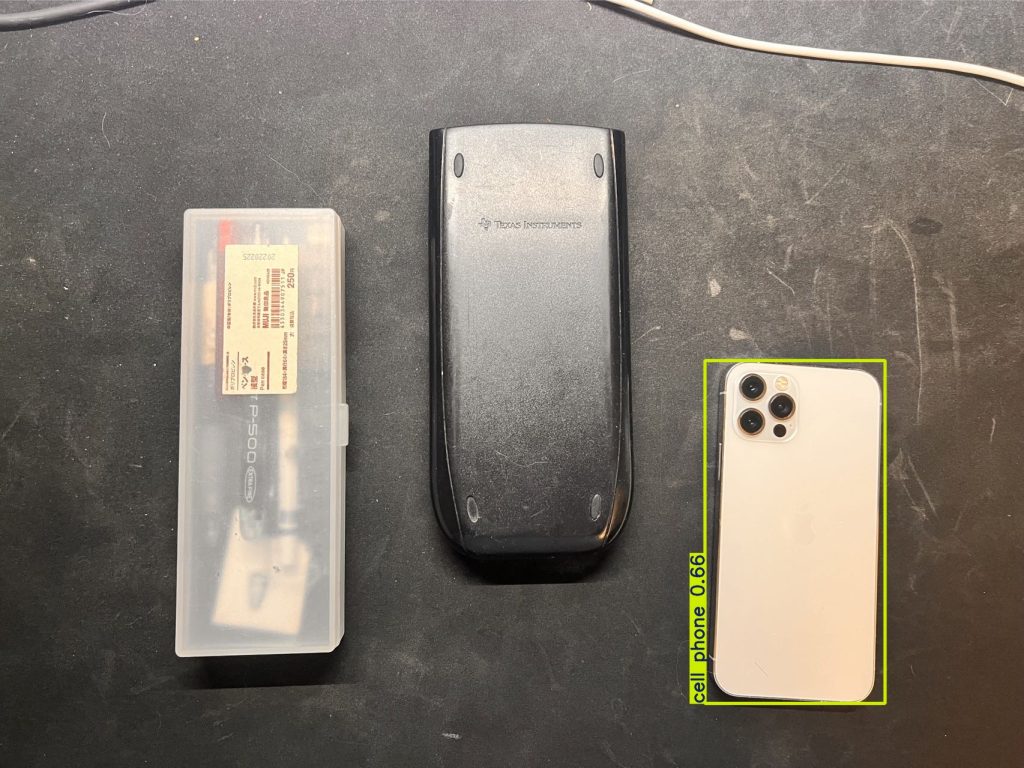

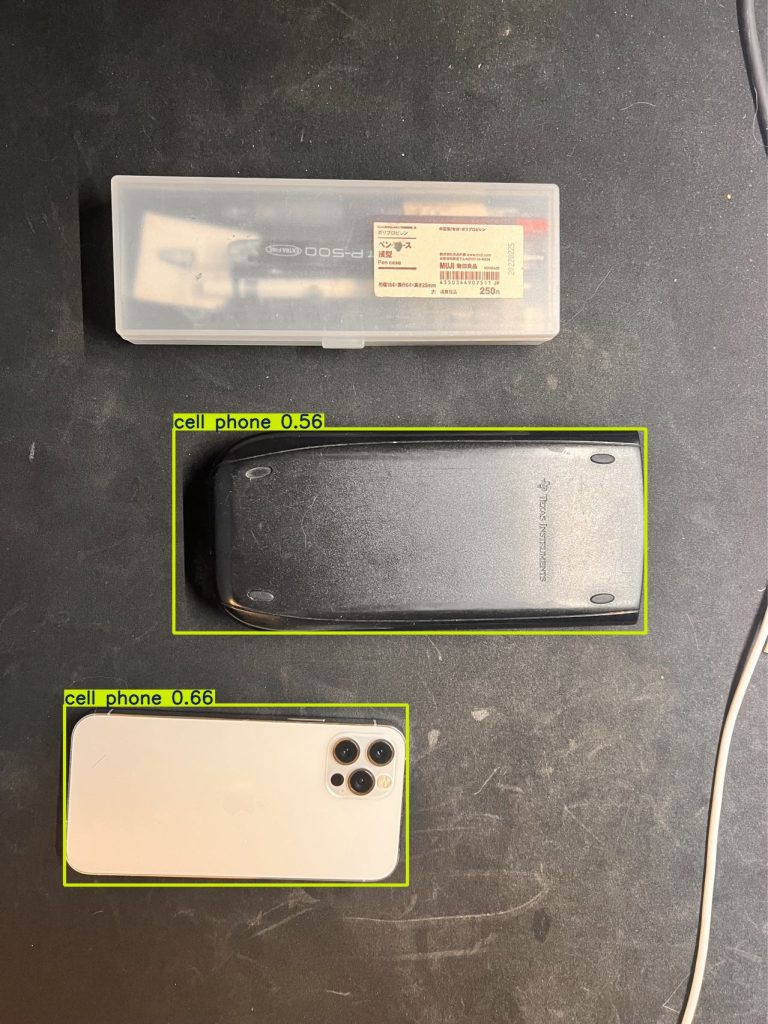

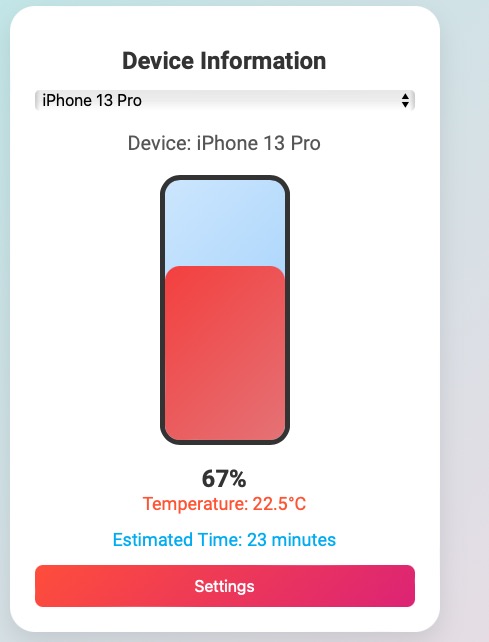

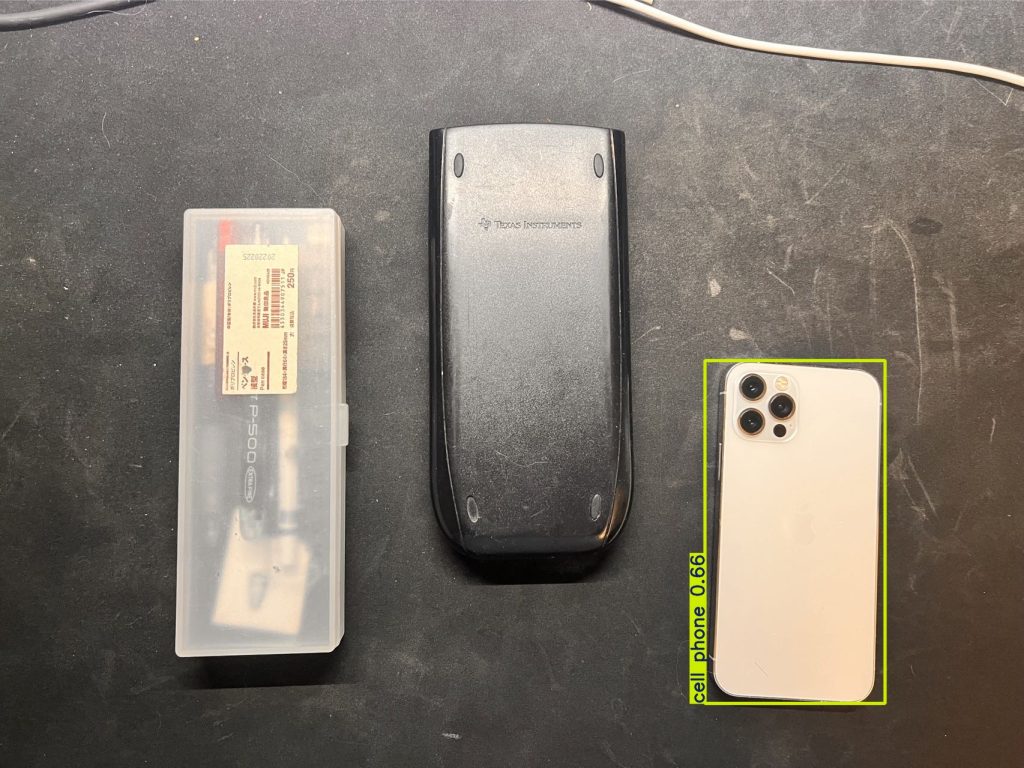

Regarding the vision system, we made significant progress by implementing the YOLO object detection algorithm. The first version of our system can now detect phones on the table with over 90% accuracy, even when the phone is face down, which simulates the real charging scenario. The model was trained on a custom dataset that reflects the conditions we anticipate in real use, such as varied lighting and phone orientations. While this initial implementation is promising, we are working to further optimize the system by reducing latency and improving performance in edge cases, such as low-light conditions or a partially obscured phone.
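To illustrate how a detection could feed the rest of the system, here is a minimal sketch of the post-processing step: pick the most confident phone detection and convert the center of its bounding box into table coordinates. This is not our actual pipeline. The `Detection` class, the `"phone"` label, the confidence threshold, and the assumption that a top-down camera frame exactly covers the table are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detector output: pixel box corners, confidence score, class label."""
    x1: float
    y1: float
    x2: float
    y2: float
    confidence: float
    label: str


def best_phone(detections: List[Detection], min_conf: float = 0.5) -> Optional[Detection]:
    """Return the highest-confidence 'phone' detection at or above min_conf, or None."""
    phones = [d for d in detections if d.label == "phone" and d.confidence >= min_conf]
    return max(phones, key=lambda d: d.confidence) if phones else None


def box_center_mm(det: Detection,
                  image_size: Tuple[int, int],
                  table_size_mm: Tuple[float, float]) -> Tuple[float, float]:
    """Map the box center from pixels to millimetres on the table.

    Assumes (hypothetically) an undistorted top-down view in which the image
    edges coincide with the table edges, so a linear scale is enough.
    """
    img_w, img_h = image_size
    table_w, table_h = table_size_mm
    cx = (det.x1 + det.x2) / 2
    cy = (det.y1 + det.y2) / 2
    return (cx * table_w / img_w, cy * table_h / img_h)


if __name__ == "__main__":
    # Made-up detections, for illustration only.
    frame = [
        Detection(100, 200, 300, 600, 0.93, "phone"),
        Detection(400, 100, 500, 180, 0.40, "phone"),
        Detection(50, 50, 90, 90, 0.88, "cup"),
    ]
    target = best_phone(frame)
    if target is not None:
        print(box_center_mm(target, (640, 480), (800.0, 600.0)))
```

A real setup would likely need camera calibration and a perspective correction in place of the linear scaling used here.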

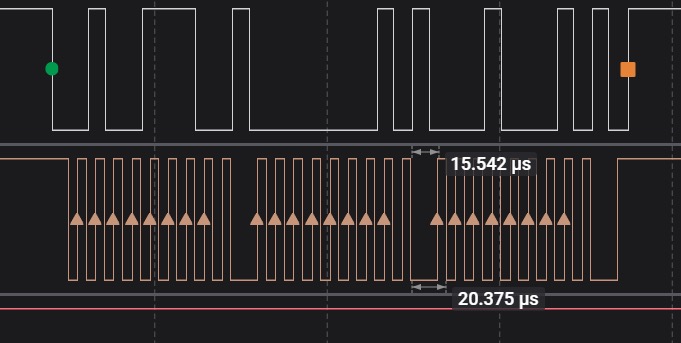

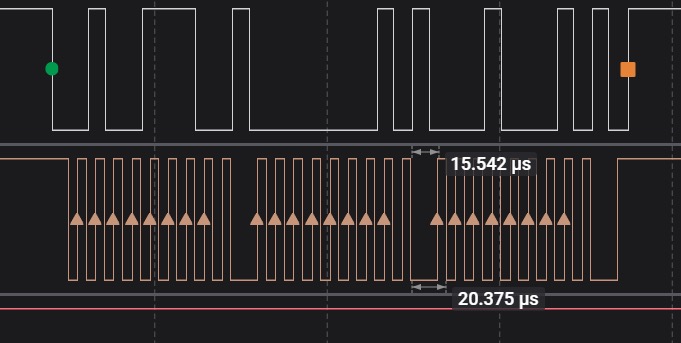

For the gantry system, we are developing the motor control system using TB6600 motor controllers. This week, we wrote the initial control code using the Jetson.GPIO library and successfully tested it on the Jetson Orin Nano. The next step is to integrate the motor controllers with the actual motors, once they arrive, and begin full power load testing with a second controller for more complex gantry operations.
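As a rough illustration of this kind of control code, the sketch below generates step and direction signals for a step/direction driver such as the TB6600. It is not our actual code. The pin numbers, pulse timing, and the `move_steps` helper are hypothetical, and the pin writer is passed in as a function so the logic can be dry-run without hardware.

```python
import time
from typing import Callable

# Hypothetical BOARD-numbered header pins wired to the driver's pulse and direction inputs.
STEP_PIN = 33
DIR_PIN = 31


def move_steps(steps: int,
               write_pin: Callable[[int, int], None],
               step_pin: int = STEP_PIN,
               dir_pin: int = DIR_PIN,
               half_period_s: float = 0.0005,
               sleep: Callable[[float], None] = time.sleep) -> None:
    """Emit abs(steps) pulses on step_pin; the sign of steps selects the direction level."""
    write_pin(dir_pin, 1 if steps >= 0 else 0)
    for _ in range(abs(steps)):
        write_pin(step_pin, 1)   # rising edge of the step pulse
        sleep(half_period_s)
        write_pin(step_pin, 0)   # falling edge of the step pulse
        sleep(half_period_s)


# On the Jetson, the writer could be Jetson.GPIO's output function, for example:
#   import Jetson.GPIO as GPIO
#   GPIO.setmode(GPIO.BOARD)
#   GPIO.setup([STEP_PIN, DIR_PIN], GPIO.OUT, initial=GPIO.LOW)
#   move_steps(200, GPIO.output)
#   GPIO.cleanup()

if __name__ == "__main__":
    # Dry run: record pin writes in a list and skip the delays.
    log = []
    move_steps(-2, lambda pin, value: log.append((pin, value)), sleep=lambda _s: None)
    print(log)
```

Software-timed pulses like these are only approximate on a non-real-time OS, so the half-period would need tuning against the real motors and driver settings.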

Consideration of Global Factors

The smart charging table we are designing addresses a global need for efficient, multi-device wireless charging in a variety of settings, from personal homes to commercial spaces. With the growing number of mobile devices worldwide, especially in fast-growing markets, there is a clear need for versatile and reliable charging solutions that can cater to different phone models and device types. Our system, leveraging the Nvidia Jetson Orin Nano’s processing power and the precision of our gantry system, is capable of identifying and charging multiple devices simultaneously and automatically, making it applicable to global consumers who demand flexibility and efficiency in their device management. By designing a system that can adapt to varying environmental conditions and device types, we are catering to a broad audience beyond just technologically advanced or academic environments. What’s more, our product is fully automatic, which ensures that the system can be deployed in any region, helping users efficiently manage their devices without requiring advanced technical knowledge.

Consideration of Cultural Factors

Our product design takes into account the diverse ways in which people across different cultures use and interact with technology. For example, in regions where multiple device ownership is common, our system’s ability to detect and charge several devices at once offers a significant advantage. We have also considered user interface design, ensuring that the system is intuitive and easy to use for people with varying levels of technological expertise. The seamless operation of our gantry and vision systems ensures that the system can be used by anyone, regardless of their background or experience with similar products. Additionally, we have taken care to ensure that the design is adaptable to different environments and cultural contexts, whether it is being used in a high-tech office or a traditional household. Lastly, our software will support multiple languages, so that people who do not read English can use the product easily.

Consideration of Environmental Factors

Our design incorporates environmental sustainability by focusing on energy efficiency and reducing material waste. The gantry system has been optimized to minimize power consumption without sacrificing performance, and the use of 3D-printed components allows us to limit material waste during production. Moreover, by encouraging users to centralize their device charging in one place, we are promoting more efficient energy usage compared to having multiple chargers in various locations. The smart charging table’s longevity and adaptability also reduce electronic waste, as the system is designed for durability and can accommodate future technology updates, extending its usable life. These considerations ensure that our system is not only practical and efficient but also environmentally responsible.

(A was written by Steven Zhang, B was written by Bruce Cheng, C was written by Harry Huang)

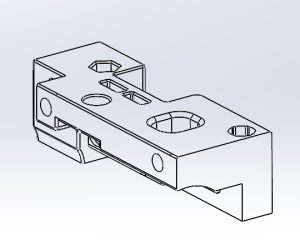

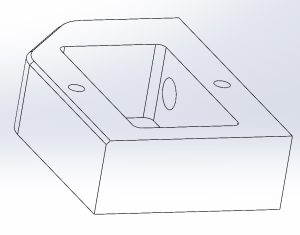

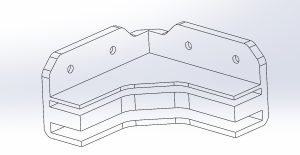

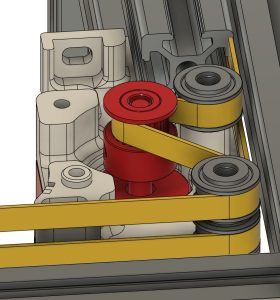

My first priority this week was to complete the mechanical modeling, simulation, and part selection for the gantry system. For the power configuration, I chose common NEMA motors: a set of two motors drives the horizontal and vertical movement of the entire system, and a separate motor raises and lowers the arm that grabs and moves the charging pads. Since I had not designed or worked with a gantry system before, I researched and borrowed some designs from lower-cost 3D printers. The drive uses a combination of two sets of moving and fixed pulleys with 6mm belts, and a set of MGN9 rails constrains the motion. I finished selecting the drivetrain parts and adding them to the purchase list, which we have submitted to the TA for review. Since most of the parts are being purchased from Amazon, we hope to complete the development of the motor controller drivers and the basic gantry build over fall break and the following week.
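As a rough sizing check for a belt drive like the one described above, the sketch below converts a travel distance into motor steps. Every number in it is an assumption made for illustration, not a value from the parts list: a 200 step/rev motor, 16x microstepping on the driver, a 2 mm belt pitch, and a 20-tooth pulley driving the belt directly. A pulley-block arrangement would change the ratio.

```python
def steps_per_mm(full_steps_per_rev: int,
                 microsteps: int,
                 belt_pitch_mm: float,
                 pulley_teeth: int) -> float:
    """Steps needed to move the belt by 1 mm on a direct belt drive."""
    mm_per_rev = belt_pitch_mm * pulley_teeth  # belt travel per motor revolution
    return full_steps_per_rev * microsteps / mm_per_rev


def mm_to_steps(distance_mm: float, spm: float) -> int:
    """Round a travel distance to the nearest whole number of steps."""
    return round(distance_mm * spm)


if __name__ == "__main__":
    # Assumed values: 200 steps/rev, 16 microsteps, 2 mm pitch, 20 teeth.
    spm = steps_per_mm(200, 16, 2.0, 20)
    print(spm)                      # 80.0 steps per mm
    print(mm_to_steps(150.0, spm))  # 12000 steps for 150 mm of travel
```

With these assumed values, one step corresponds to 0.0125 mm of belt travel, which gives a feel for the positioning resolution before the parts arrive.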
