Team Status Report for 4/26

This past week we finished up testing.

This week we will be working on the poster, report, and demo.

We performed unit tests on the 4 different models we had selected: llama3-8b, falcon-7b, qwen2.5-7b, and vicuna-7b. Testing and results can be found here: https://github.com/jankrom/Voice-Vault/tree/main/server/model-testing. This involved setting up the tests for each model, making a python script, and saving the results in a png for each model. I found that llama3 had the best accuracy at 100%, while qwen and vicuna both did around 90%. Falcon actually had a score of 0% accuracy which was very surprising. I looked into it more and it could be because it is optimized for code, as I saw a lot of the responses the model was giving was in javascript and such, despite the model saying it is optimized for conversations. These results cause me to remove falcon from our options and now we will only offer the other 3 models to pick from. This resulted in me having to modify our website to only include the other 3.

We performed unit tests on the system prompts to test out multiple different system prompt and find the best one. The best one we found gave us 100% accuracy in selecting if it is an alarm request, music request, or LLM request.

We performed many e2e tests just by interacting with the system and we did not find any errors when doing so.

David’s Status Report for 4/26

This week I mainly just did some user testing with Kemdi and Justin where we say how long it took for them to set up the product along with asking some validation questions like if they like it or not and what would be better if it was changed. I also had to deal with some hiccups in the 3d printing process as two weeks ago I had given a usb to the fbs center as their website was down. However early this week I checked on the progress and apparently it was never started, so unfortunately I had to resubmit. I believe it should be done by the demo, however I am not sure. There is nothing left to be done anymore for the project itself, only the report, prep for demo, and poster.

Final Presentation

Team Status Report for 4/19

There are currently no risks to our project as we are essentially done with design. We are currently working on the testing that we outlined in the design report. This week we did the user testing together with 10 users.

No changes were made to the design as we are just testing, except for a little bit of the 3d model which was outlined in David’s status report. This change is really minute and just requires a little bit of tape.

We are still on schedule, and just have to test the product.

David’s Status Report for 4/19

This week I mainly was designing the final 3d print. It took me some time to figure out how to put a sliding lid on the bottom. I also decided to not put a cover on the top as it could potentially block out the sound or the mic. Instead I am thinking of maybe using tape to hold it in. If it prints how I want, which it might not due to the sliding door needing very specific resolution which can vary from printer to printer, then we should be good for the model. If not I will have to submit another design. The rest of the week I spent with my group helping to user test our project. We met with 10 friends who we gave the product, and let them set it up, and afterwards we asked them questions which the specifics will be covered more in the report. I am definitely on schedule, and next week I will finalize all the testing like the latencies for both end to end and component wise.

For this week’s question, I definitely learned a ton working on this project as I kind of bounced between tasks. I initially learned how to set up the raspberry pi and configure its wifi, data/time, and other settings. To create the server, I learned flask and how endpoints work along with the ollama app for the model. I also learned how to create a VM using google cloud platform. I have never worked with docker before, so I also learned how to containerize a server. Lastly, I learned how to 3d design using fusion 360 to create the physical casing. To learn all these topics I did a lot of research online as well as talking to my group (if one of them was familiar with it).

Team Status Report for 4/12

This week we have been working on streaming for both server and board models. We have been able to stream responses end to end however they are pretty choppy. We will try to fix it next week by increasing the amount of words in each send, so that the speech to text model has a little more to work with.

We currently do not have any big risks as our project is mostly integrated. We have not changed any part of the design and are on schedule. We plan to do the testing and verification soon as we have finally finished the parts that deal with latency as we obviously couldn’t have done any useful testing until that was finished.

David’s Status Report for 4/12

This week I worked on two main things: server side streaming and 3d printing. For server side streaming, we were worried about end to end latency as current testing seemed to be around 8 seconds. To eliminate this, we decided to implement streaming for both the server/model and the on board speech/text models. I did the server side and modified the code to allow the server to return words as they are generated which massively increases the speed. This makes the speech a little choppy due to it receiving one word at a time, so next week I might try to change the granularity to maybe 5-10 words. I also have done some work on improving the 3d model. The first iteration came out pretty well, but I want to add some more components to make it complete like a sliding bottom and a sliding top to prevent the speaker from falling out if moved upside down. I am hoping this is the final iteration, but if it doesn’t come out according to plan then I might have to do one more print. I am on schedule, and along with the aforementioned things I will also try to do some testing/verification for next week.

David’s Status Report for 3/29

This week I worked on Docker and some VM stuff. Throughout the week I tried to fix the docker container to run Ollama inside, but to no avail. Me and Justin also tried working on it together, but we weren’t able to fully finish it. Justin was able to fix it later, and I was also able to make a working version as well, although we are going to use Justin’s version as the final. The main issue for my version was that my gpu wasn’t running with the model in the container. I fixed this by not scripting ollama serve in the Dockerfile initially, and just downloading Ollama first. Then I would be able to Docker run with all gpus to start my container. After that I could pull the models and also run Ollama serve to have a fully functional local docker container working. If we were to have used this version I could script the pulling and running of Ollama serve to occur after running Docker. Earlier in the week I also tried to get a vm with a T4 GPU on gcp. However, after multiple tries across servers, I was not able to successfully acquire one. Me, Kemdi, and Justin also met together at the end of the week to flesh out the demo which is basically fully working. I am on schedule, and my main goal for next week is to get a 3d printed container for the board and speaker/mic through FBS.

David’s Status Report for 3/22

This week I worked on the model/server code. From last week, I was initially trying to get the downloaded model from hugging face running locally. However there were many issues, dependencies, and OS problems. After trying many things, I did some more research into hosting models. I came across a software named Ollama, which allowed for easy use of a local model. I coded some python to create a server which took in requests to run through the model and then return to the endpoint.

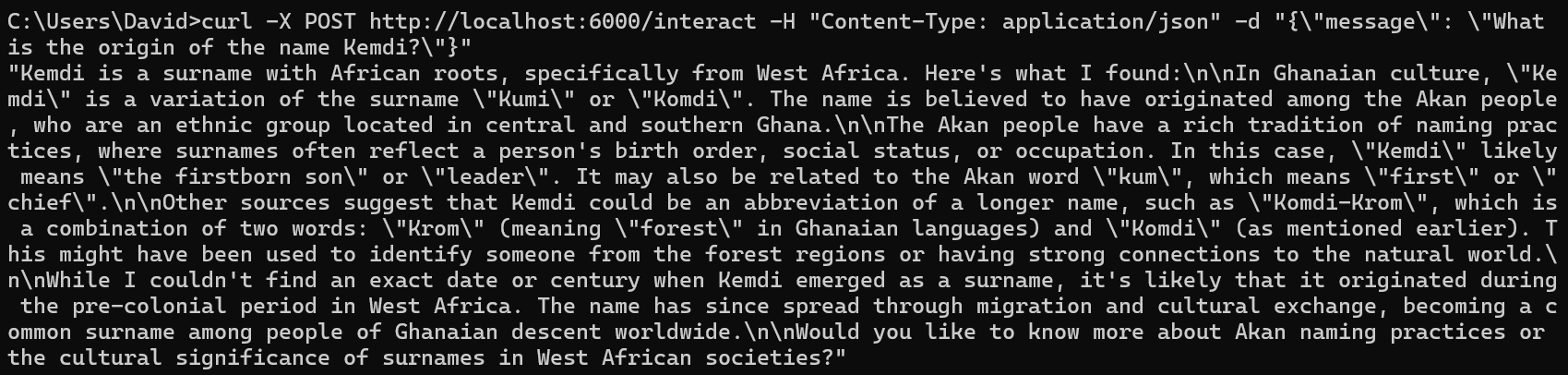

As seen here, we can simply curl a request in which will then be parsed into the model and returned. I then tried to look into dockerization of this code. I was able to build a container and curl into its exposed port, yet the trouble I come across is that the Ollama code inside does not seem to be running. I think it stems from the fact that to run Ollama in python, you need two things (more like three things): Ollama package, Ollama App and related files, and an Ollama model. Currently, the Ollama model and App are on my pc somewhere, so when I initially tried to containerize the code the model and app were not included, only the Ollama package (which is useless by itself). I then tried pasting those folders into the folder before building the Docker image, and they were still not running. I have played around with mounting images, and other suggested solutions online, but they do not work. I am still researching into fixing it, but there are few resources that pertain to my exact situation as well as my OS(windows). We are currently on schedule.

As seen here, we can simply curl a request in which will then be parsed into the model and returned. I then tried to look into dockerization of this code. I was able to build a container and curl into its exposed port, yet the trouble I come across is that the Ollama code inside does not seem to be running. I think it stems from the fact that to run Ollama in python, you need two things (more like three things): Ollama package, Ollama App and related files, and an Ollama model. Currently, the Ollama model and App are on my pc somewhere, so when I initially tried to containerize the code the model and app were not included, only the Ollama package (which is useless by itself). I then tried pasting those folders into the folder before building the Docker image, and they were still not running. I have played around with mounting images, and other suggested solutions online, but they do not work. I am still researching into fixing it, but there are few resources that pertain to my exact situation as well as my OS(windows). We are currently on schedule.