The most significant risk is that the electroglottograph breaks down and we cannot use it in our live demo. Fortunately, we already have plenty of recordings of ourselves using it, so our final demo will not be affected either way.

One calibration test we ran on the electroglottograph (EGG) used a larynx simulator to confirm that we could read CQ data correctly, so that once the electrodes were placed on a user we could be confident the EGG was working properly. Because our project metrics are mostly qualitative rather than quantitative, we also surveyed several users on whether the EGG sensors were comfortable, whether our application was easy to use, whether our tutorial page was effective, and which parts were difficult to use.

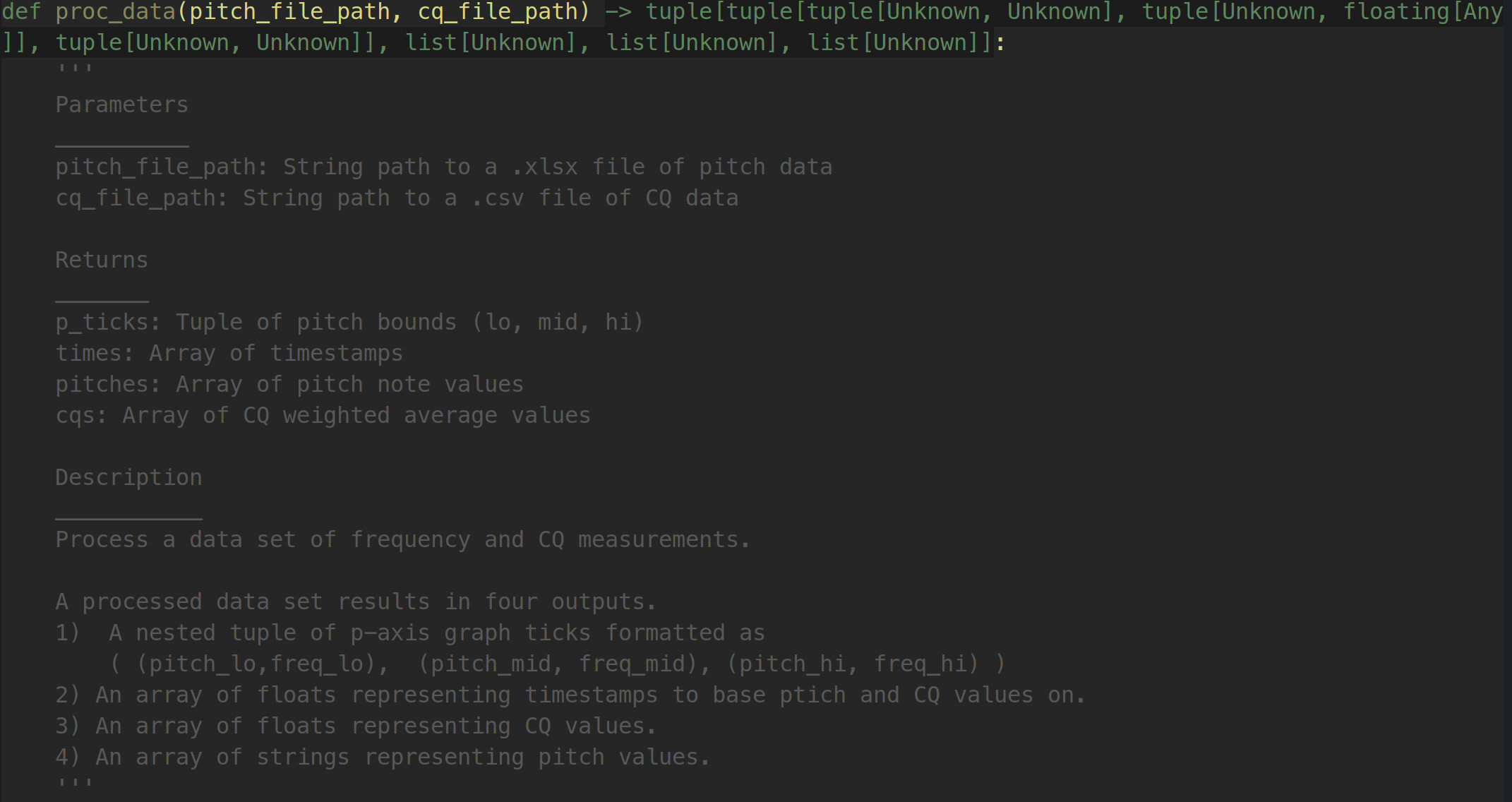

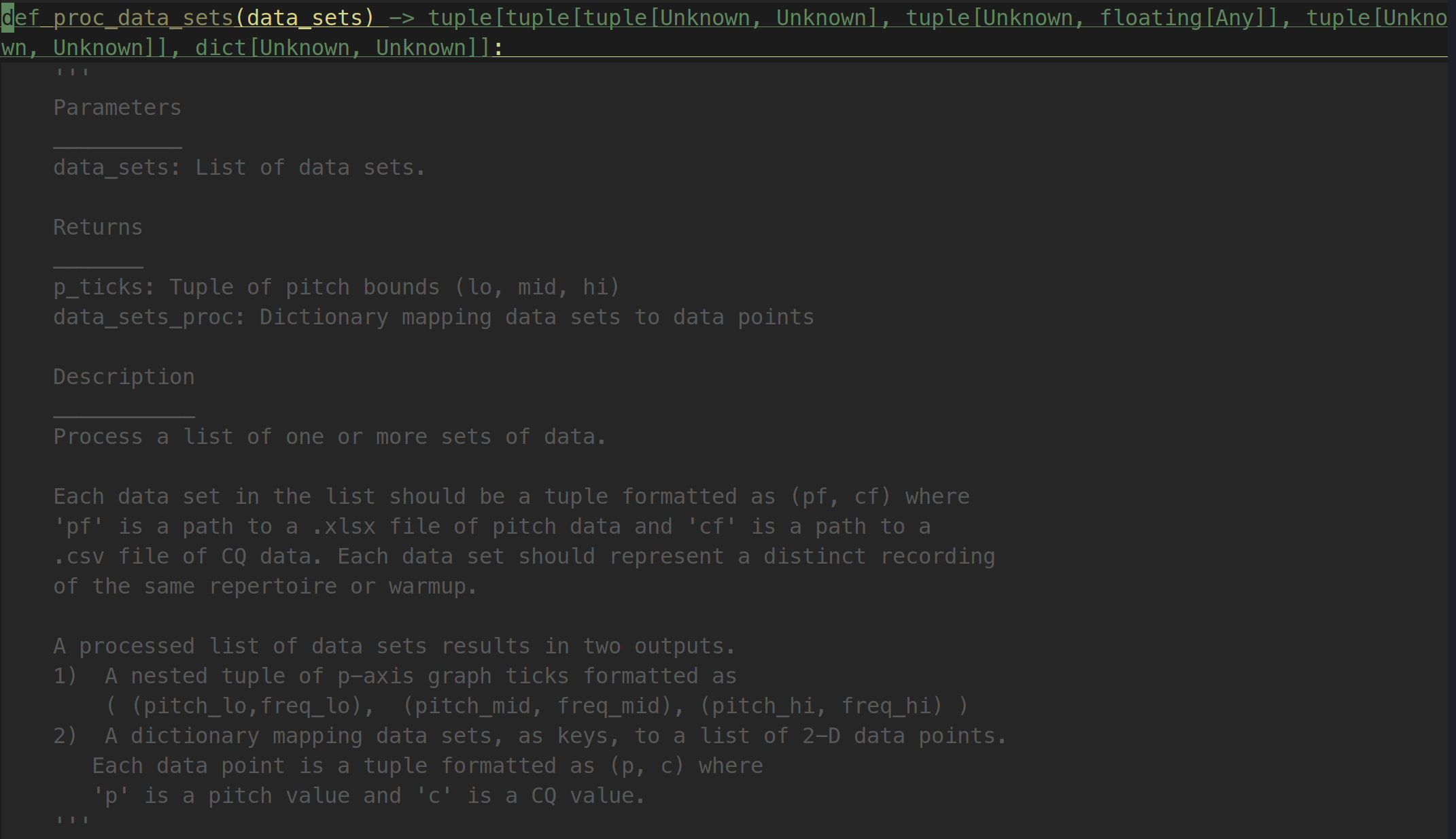

The backend has two main unit tests:

- Pitch Analysis Unit Test

  - Tests the pitch detection algorithm for 8 distinct recordings of octave scales

    - Includes keys: C4 Major, G4 Major, A2 Major

  - Tests the pitch detection algorithm for 4 distinct recordings of “Happy Birthday”

    - Includes keys: B4 Major, C4 Major, C4 Major (harmony), F2 Major

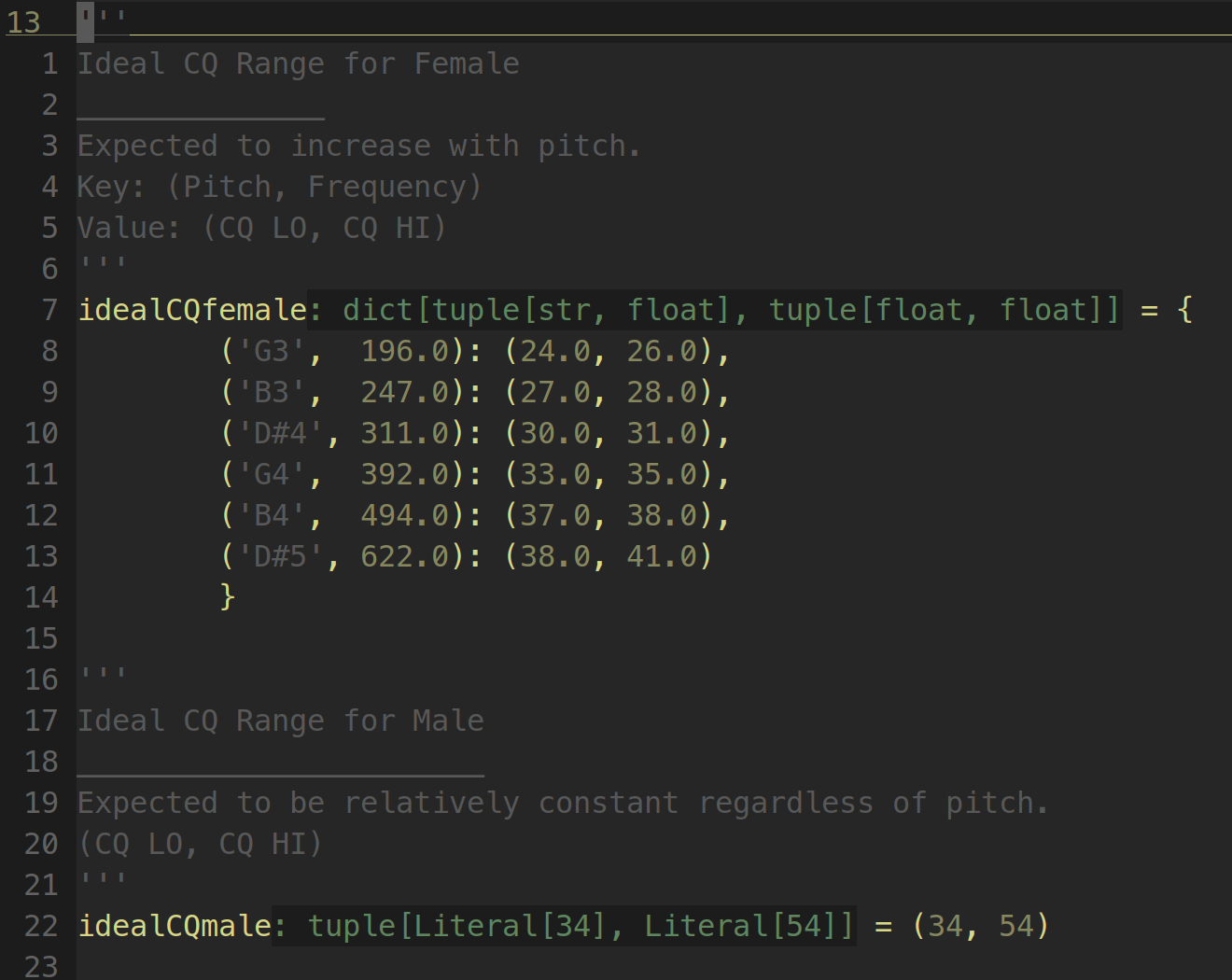
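As a rough illustration of how a pitch test like the ones above could be checked, here is a minimal sketch. It is not our actual test code: the helper names (`note_to_hz`, `cents_error`, `scale_matches`), the A4 = 440 Hz reference, and the 50-cent tolerance are all assumptions made for this example. It also takes the detected frequencies as a plain list, so the pitch detector itself is out of scope here.

```python
import math

A4_HZ = 440.0  # assumed tuning reference, not taken from our backend

# Semitone offsets of the natural notes relative to A in the same octave.
NOTE_OFFSETS = {"C": -9, "D": -7, "E": -5, "F": -4, "G": -2, "A": 0, "B": 2}


def note_to_hz(name: str) -> float:
    """Convert a natural note name such as 'C4' to Hz (equal temperament)."""
    letter, octave = name[0], int(name[1:])
    semitones_from_a4 = NOTE_OFFSETS[letter] + 12 * (octave - 4)
    return A4_HZ * 2 ** (semitones_from_a4 / 12)


def cents_error(detected_hz: float, expected_hz: float) -> float:
    """Signed distance from expected to detected pitch, in cents."""
    return 1200 * math.log2(detected_hz / expected_hz)


def scale_matches(detected_hz, expected_notes, tolerance_cents=50.0):
    """True if every detected pitch is within tolerance of its expected note."""
    if len(detected_hz) != len(expected_notes):
        return False
    return all(
        abs(cents_error(d, note_to_hz(n))) <= tolerance_cents
        for d, n in zip(detected_hz, expected_notes)
    )


# Hypothetical expected output for a one-octave C4 major scale recording.
C4_MAJOR = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]
```

Comparing in cents rather than raw Hz keeps the tolerance musically uniform across registers, which matters when the same check is applied to both an A2 and a C4 scale.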

- CQ Analysis

  - Verify that the CQ graph output from an octave scale recording of both opera singers falls reasonably within the ideal CQ range. This is largely an informal test, since the intent is to check that the graph displays as intended rather than to verify that a CQ recording is “accurate”. We may still want to include this in the testing section of the final report. If so, a “passing” test could be that at least 50% of the measurements fall within the ideal CQ range, since we expect trained opera singers to have CQs “near” the data for trained singers reported in David Howard’s research paper.
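If we do formalize the CQ check, the proposed 50% criterion could look something like the sketch below. The function names are hypothetical, and the range bounds are left as parameters because this report does not state the ideal CQ range. Any numbers passed in would need to come from the reference data.

```python
def fraction_in_range(cq_values, low, high):
    """Fraction of CQ measurements that lie within [low, high]."""
    if not cq_values:
        raise ValueError("no CQ measurements to evaluate")
    inside = sum(1 for cq in cq_values if low <= cq <= high)
    return inside / len(cq_values)


def cq_test_passes(cq_values, low, high, min_fraction=0.5):
    """Pass if at least min_fraction of measurements are in the ideal range."""
    return fraction_in_range(cq_values, low, high) >= min_fraction
```

For example, `cq_test_passes(measurements, low, high)` would be called once per singer's octave scale recording, with `low` and `high` set to whatever ideal range we settle on.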

No changes were made to the design. No changes were made to the schedule.