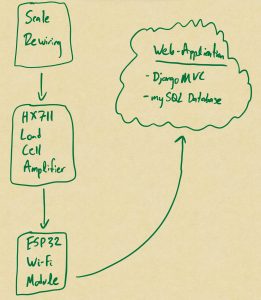

This week, our efforts focused on fully integrating one main subsystem for the interim demo next week. Since our main goal is to integrate accurate ML-based food classification into the website, I focused on our shift to TensorFlow for OCR. It takes a different approach from traditional OCR libraries and from the ChatGPT API we were previously using. This approach came with considerably more configuration issues, which Steven and Surya worked through, but it should ultimately be more beneficial for classification and detection in our MVP. My role connects to their work: I experimented with how the TensorFlow model could be integrated into our web application as a proof of concept.

After the model was selected, I set up a Python environment with the libraries best suited to our web server. I created the user interface for uploading camera images to the server. More work remains on image compatibility, resizing, and resolution handling to improve the user experience on the globals page. A key advantage of the TensorFlow model in Python is that it can extract text from a user's scanned image and convert it into a readable format. This text is saved in the format we want so that it can be displayed under posts once that feature works, and it should also be saved to the database for the food inventory. The OCR process needs further testing to improve performance and scalability and to make sure it behaves as expected under a variety of conditions, including errors and edge cases.
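The upload-side steps described above could look roughly like the following stdlib-only Python sketch. They are checking that an uploaded file is an image we can handle, shrinking large camera photos, and tidying the extracted text before it goes into the food inventory. The sketch assumes PNG and JPEG are the only accepted formats, uses a hypothetical `max_side` limit for resizing, and writes to a hypothetical `food_inventory` table in SQLite. The TensorFlow OCR call itself is not shown, and none of these names come from our actual codebase.

```python
import sqlite3
from typing import Optional, Tuple

# Leading "magic" bytes of the two formats phone cameras typically produce.
# Assumption: these are the only formats the upload page accepts.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def detect_image_format(data: bytes) -> Optional[str]:
    """Return 'png' or 'jpeg' based on the file's leading bytes, else None.

    This only inspects the signature; it does not validate the whole file.
    """
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    return None


def fit_within(width: int, height: int, max_side: int) -> Tuple[int, int]:
    """Scale (width, height) so the longer side is at most max_side.

    Aspect ratio is preserved; images that are already small enough
    are returned unchanged rather than upscaled.
    """
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def normalize_ocr_text(raw: str) -> str:
    """Collapse runs of whitespace within each line and drop blank lines."""
    lines = (" ".join(line.split()) for line in raw.splitlines())
    return "\n".join(line for line in lines if line)


def save_scan(conn: sqlite3.Connection, user_id: int, text: str) -> int:
    """Store normalized OCR text in a hypothetical food_inventory table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS food_inventory ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "user_id INTEGER NOT NULL, "
        "ocr_text TEXT NOT NULL)"
    )
    cur = conn.execute(
        "INSERT INTO food_inventory (user_id, ocr_text) VALUES (?, ?)",
        (user_id, normalize_ocr_text(text)),
    )
    conn.commit()
    return cur.lastrowid
```

For example, a 4032 x 3024 phone photo with `max_side=1024` would be scaled to 1024 x 768 before being handed to the model. Keeping the aspect ratio avoids distorting printed text, which tends to matter for OCR accuracy.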

In addition to integrating TensorFlow into our web application, I tackled some challenges with GitHub version control. Merge conflicts that arose when pulling and pushing branches temporarily slowed our progress. After using Git's version control tools to go through each of our branch changes, we resolved these conflicts efficiently.

Looking ahead, despite these challenges, I will focus on refining our TensorFlow integration into the web application and on developing the other features we planned to prioritize in light of the recent ethics discussion in class. Integrating TensorFlow should enhance our functionality and enable more advanced, higher-performance image analysis.