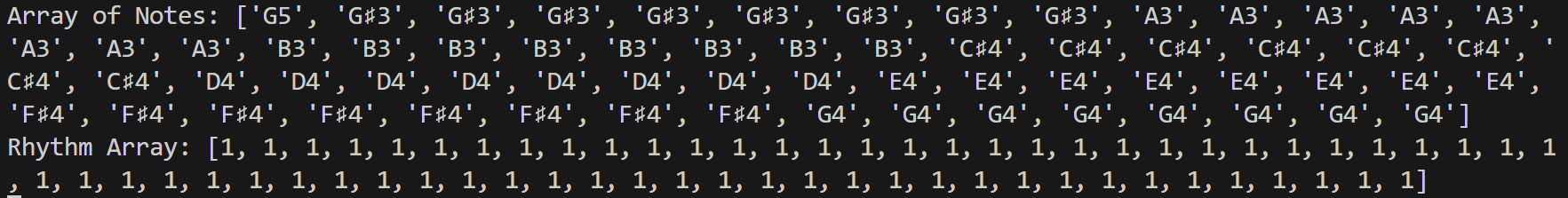

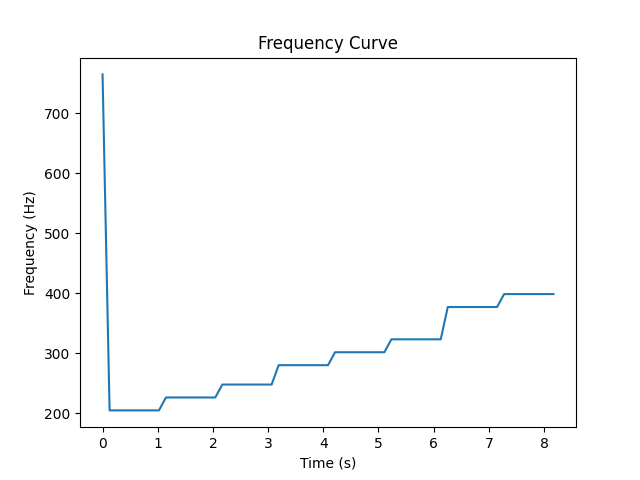

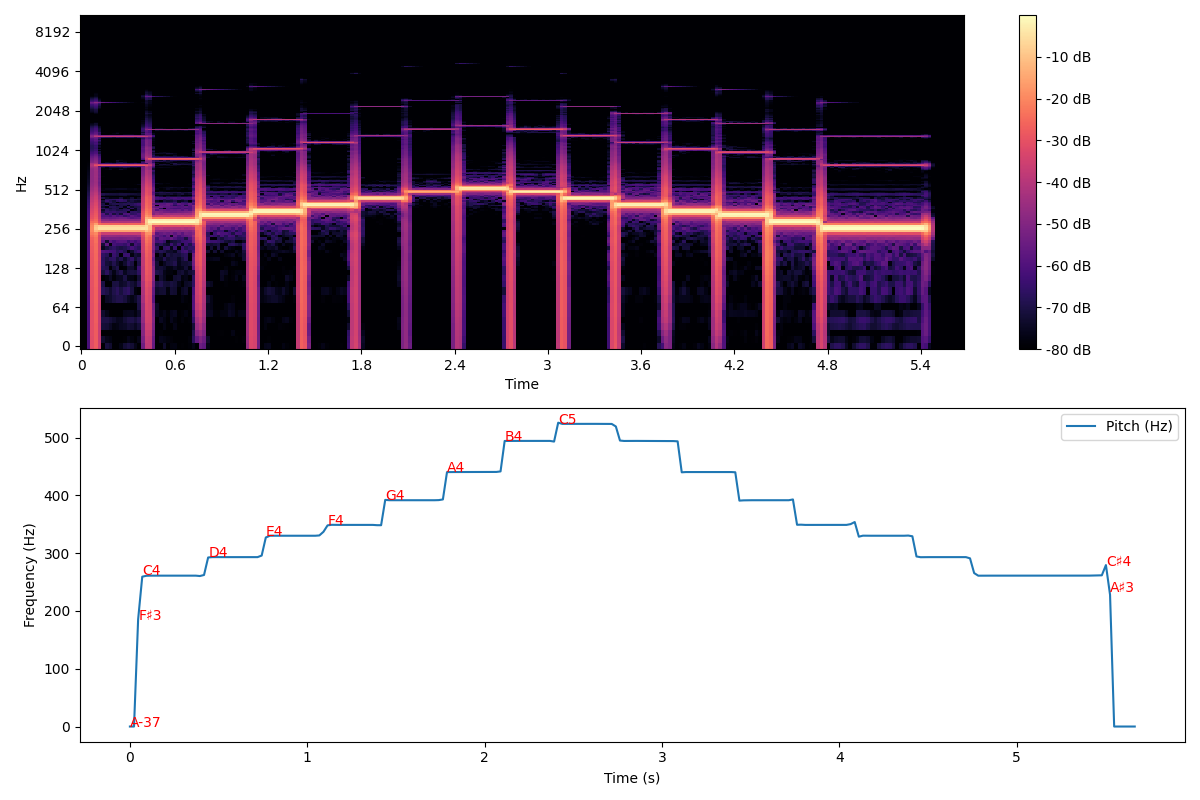

This week I worked on two main goals: improving the accuracy of the audio detection and finalizing the integration of the whole system. For the audio detection, I modified the pitch detection algorithm so that it now outputs rest notes (‘R’), which should help align the audio output with the fingering output. I tested a recording of “Mary Had a Little Lamb” played at a slower tempo, and the results improved. However, the system still has some unresolved issues. For example, if the reference note is [A3: 2s] and the audio processor outputs [A3: 1s, R: 0.2s, A3: 1s], it is hard for the integration system to decide whether the note was played correctly. As for the integration of the whole system, Junrui and I redesigned the synchronization logic between the fingering and audio components.
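One possible way for the integration side to make that decision is to measure how much of the reference note's duration is covered by the correct pitch, while tolerating short rests and brief wrong-pitch glitches. The sketch below is only an illustration of that idea, not our current implementation: the `Note` type, the function name `is_played_correctly`, and all threshold values (`min_coverage`, `max_rest`, `max_wrong`) are hypothetical and would need tuning on real recordings.

```python
from dataclasses import dataclass

REST = "R"  # label used for rest segments (assumed to match the processor's output)


@dataclass
class Note:
    pitch: str       # e.g. "A3", or REST for silence
    duration: float  # seconds


def is_played_correctly(reference: Note, detected: list,
                        min_coverage: float = 0.8,
                        max_rest: float = 0.3,
                        max_wrong: float = 0.1) -> bool:
    """Hypothetical check: do the detected segments aligned to one pitched
    reference note count as a correct performance of that note?

    Assumes `reference` is a pitched note (not a rest) and that `detected`
    already contains only the segments aligned to that reference note.
    """
    # Total time the correct pitch was heard.
    correct = sum(n.duration for n in detected if n.pitch == reference.pitch)
    # Individual rest lengths; a long rest suggests the note was interrupted.
    rests = [n.duration for n in detected if n.pitch == REST]
    # Total time spent on any other pitch.
    wrong = sum(n.duration for n in detected
                if n.pitch not in (reference.pitch, REST))

    coverage = correct / reference.duration
    return (coverage >= min_coverage
            and all(r <= max_rest for r in rests)
            and wrong <= max_wrong)
```

With these example thresholds, the [A3: 1s, R: 0.2s, A3: 1s] case above would be accepted as a correct 2s A3, while [A3: 1s, R: 1s] would be rejected because only half the note was heard.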

Next week, I’ll keep working on this issue in the audio processor. I think the current pitch detection algorithm works fine, but I need to add a post-processing function that cleans up the detected notes and their durations. I’m mostly on track, but I hope to find a way to further improve the accuracy of pitch detection and raise the system’s error tolerance.
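As a starting point for that post-processing step, one simple approach would be to fold a short rest that sits between two segments of the same pitch back into that note. This is a minimal sketch under assumptions: the function name `merge_short_rests`, the `(pitch, duration)` tuple format, the `"R"` rest label, and the 0.3 s `max_rest` threshold are all hypothetical, not part of the existing audio processor.

```python
REST = "R"  # assumed label for rest segments


def merge_short_rests(notes, max_rest=0.3):
    """Hypothetical post-processing for detected notes.

    `notes` is a time-ordered list of (pitch, duration_in_seconds) tuples.
    A rest no longer than `max_rest` that sits between two segments of the
    same pitch is absorbed into that note, and consecutive segments with the
    same label are merged into one.
    """
    merged = []
    for i, (pitch, dur) in enumerate(notes):
        is_short_gap = (
            pitch == REST and dur <= max_rest
            and merged and merged[-1][0] != REST
            and i + 1 < len(notes)
            and notes[i + 1][0] == merged[-1][0]
        )
        if is_short_gap:
            # Short dropout inside one sustained note: add its time to that note.
            merged[-1] = (merged[-1][0], merged[-1][1] + dur)
        elif merged and merged[-1][0] == pitch:
            # Same label as the previous segment: extend it.
            merged[-1] = (pitch, merged[-1][1] + dur)
        else:
            merged.append((pitch, dur))
    return merged


print(merge_short_rests([("A3", 1.0), ("R", 0.2), ("A3", 1.0)]))
```

One caveat with this design: a melody like “Mary Had a Little Lamb” contains intentionally repeated notes, and a blind merge like this would also join those. In practice the step would probably need to consult the reference sequence before deciding whether a short rest is a dropout or a real re-articulation.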