Our biggest risks right now are the accuracy of the audio processor and the integration of the whole system. The audio processor has not yet reached the accuracy metrics we set earlier, which could in turn lower the accuracy of the whole system. Lin is currently working on improving the audio detection logic. If she cannot improve its accuracy, we will instead modify the integration logic to increase the mismatch tolerance rate. As for the integration, we are still writing the code because the implementation logic changed. We plan to finish the implementation by Sunday, which leaves us two days to test the system before the poster is due.

There are no changes to the existing schedule. The remaining work is to improve the current system as much as we can and finalize the implementation.

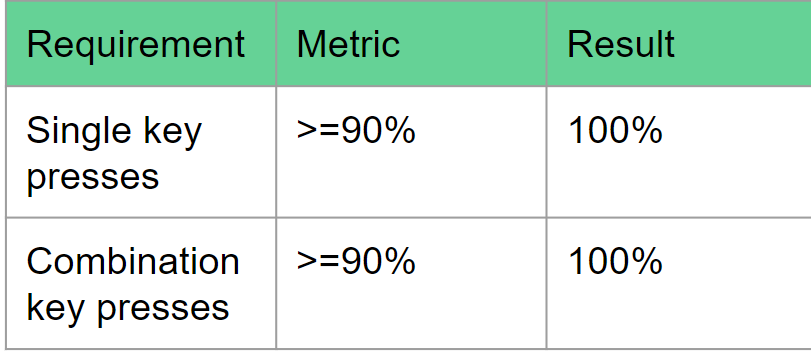

Test result for fingering detection:

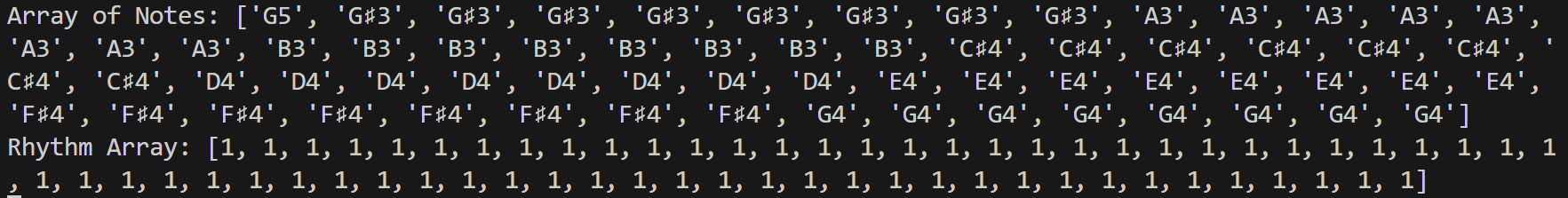

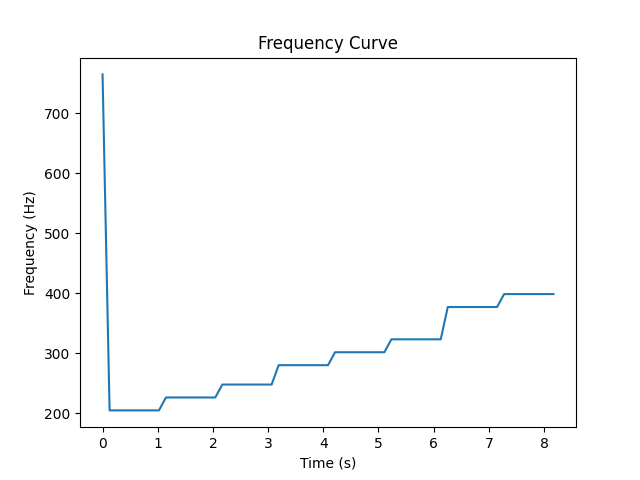

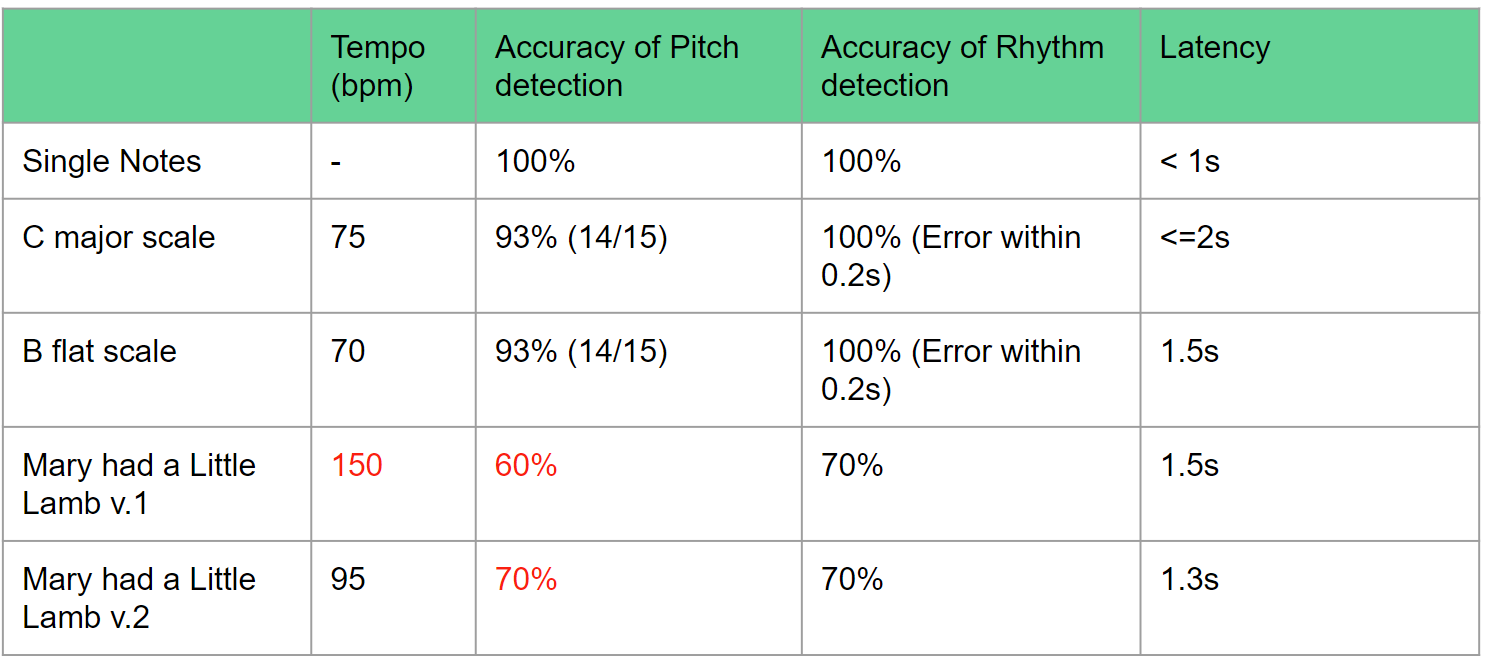

Test result for audio detection. Based on these results, we found that tempo affects the accuracy of pitch detection: the slower and more clearly the user plays the notes, the more accurate the result. We will keep working to improve the accuracy before the final.

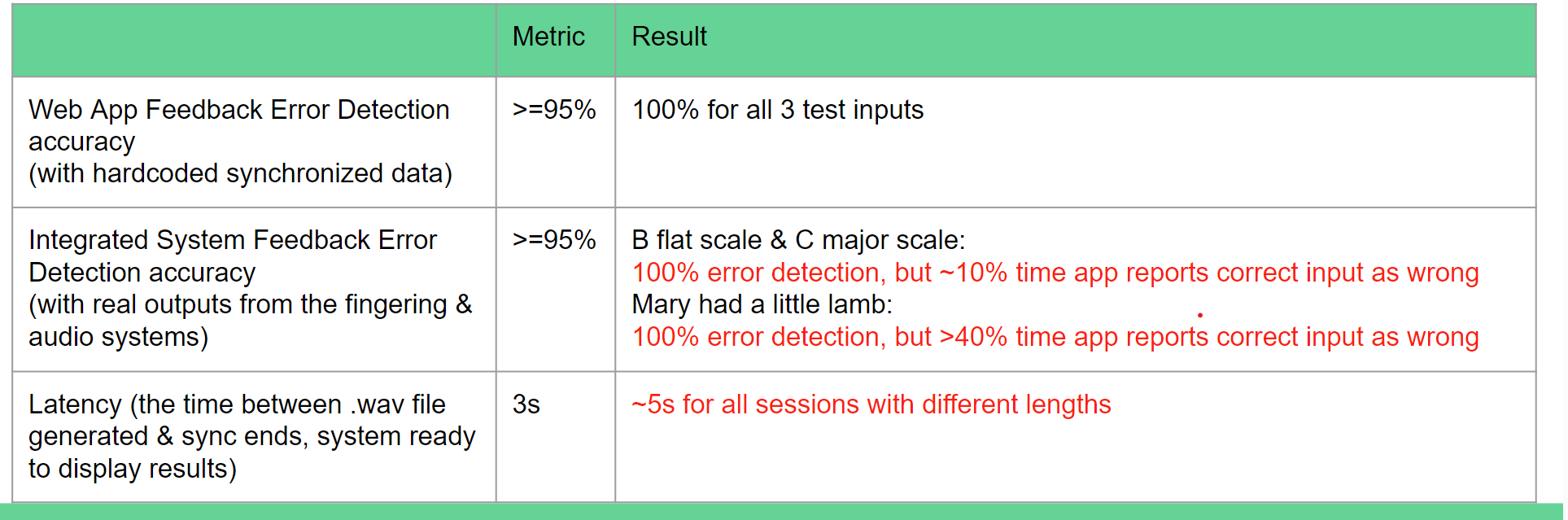

Test result for the web app (not yet finalized). We will continue testing the web app before the final.