Since I am the presenter for our team’s final presentation, I spent the first three days of this week preparing for it. I then discussed our system’s integration logic with Lin and modified it. Initially, when the system looped through the fingering data, it took ‘slices’ of multiple lines and calculated the most common line in each slice, which made the code inefficient. We have removed this step and decided on new mistake-tracking logic with a mismatch tolerance, which should reduce latency. However, because I had some final homework deadlines this week, I didn’t have time to test the new implementation. I will continue working on that with Lin on Sunday.
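To make the change concrete, here is a rough sketch of the two ideas in Python. It is not our actual code: the function names, the representation of each fingering line as a string, and the rule "flag a mistake only after more than `tolerance` consecutive mismatches" are all assumptions chosen for illustration.

```python
from collections import Counter


def most_common_per_slice(lines, slice_size):
    """Old idea (as described above): majority vote over fixed-size slices.

    `lines` and `slice_size` are hypothetical names for this sketch.
    """
    result = []
    for start in range(0, len(lines), slice_size):
        window = lines[start:start + slice_size]
        # Counting every slice is the extra work that was removed.
        result.append(Counter(window).most_common(1)[0][0])
    return result


def track_mistakes(detected, expected, tolerance):
    """Assumed replacement: compare line by line and tolerate short mismatch runs.

    A mistake is recorded only when more than `tolerance` consecutive
    lines disagree with the expected fingering. Returns the index at
    which each flagged run started.
    """
    mistakes = []
    run = 0  # length of the current run of consecutive mismatches
    for i, (got, want) in enumerate(zip(detected, expected)):
        if got == want:
            run = 0
        else:
            run += 1
            if run == tolerance + 1:
                mistakes.append(i - tolerance)
    return mistakes
```

In this sketch the second function makes a single pass with a counter instead of building and counting a slice at every step, which is the kind of saving we expect to help latency. Whether it does in practice still has to be measured.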

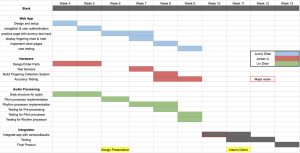

I am almost on schedule for the integration phase, but the timeline is tight because the final report, demo, and video deadlines are approaching. Next week I plan to finish the integration, conduct more tests with real user input, and start working on the report and video.