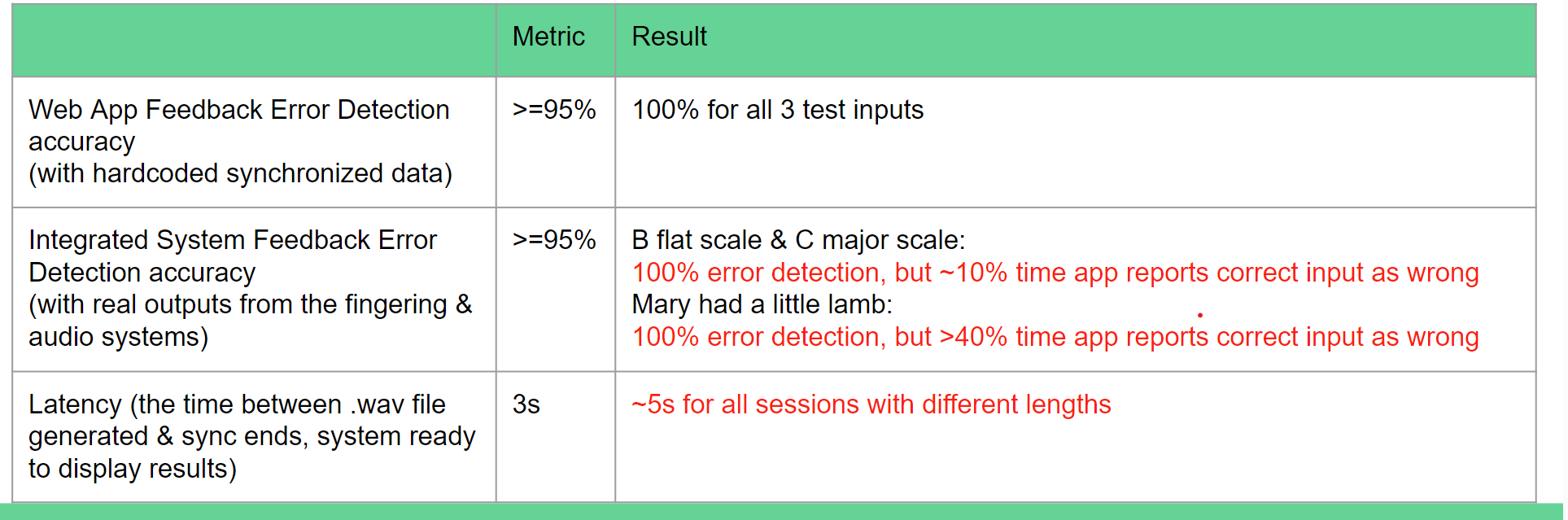

During the past two weeks, I worked on integrating the web app with the other two systems. For the fingering detection system, the web app now writes to the serial port to signal the start of a session and reads from it to receive the real-time fingering info. The backend stores the fingering info in a buffer and generates a relative timestamp for each line according to its position in the buffer. For the audio detection system, the web app sends a request to a new API endpoint that triggers the audio detection script in the backend. In addition, I modified the logic of the practice page and added start, end, and replay buttons, so that a user can see their performance only after the entire practice session has been recorded, since the audio detection process is not real-time.
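As a rough illustration of the position-based timestamping described above, here is a minimal Python sketch. It assumes the fingering system emits lines at a fixed interval; the names `FingeringSample`, `assign_relative_timestamps`, and `sample_interval_s`, as well as the 50 ms default and the sample line format, are hypothetical and not taken from the actual backend.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FingeringSample:
    timestamp_s: float  # seconds since the start signal (relative, not wall-clock)
    fingering: str      # raw fingering line as read from the serial port


def assign_relative_timestamps(
    buffer: List[str],
    sample_interval_s: float = 0.05,  # assumed fixed spacing between lines
) -> List[FingeringSample]:
    """Derive a relative timestamp for each buffered line from its position."""
    if sample_interval_s <= 0:
        raise ValueError("sample_interval_s must be positive")

    samples = []
    for index, line in enumerate(buffer):
        text = line.strip()
        if not text:
            # Skip empty lines, but keep counting positions so later
            # lines are still timestamped by where they sit in the buffer.
            continue
        samples.append(FingeringSample(round(index * sample_interval_s, 6), text))
    return samples


if __name__ == "__main__":
    # Hypothetical fingering lines; the real serial format may differ.
    demo_buffer = ["1 0 1 1\n", "1 1 1 1\n", "\n", "0 0 1 1\n"]
    for sample in assign_relative_timestamps(demo_buffer):
        print(f"{sample.timestamp_s:.2f}s  {sample.fingering}")
```

A scheme like this is only as accurate as the fixed-interval assumption: if lines arrive at an uneven rate, or some are dropped, the computed timestamps drift away from the real playing time.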

I am currently on schedule. However, since the timestamps generated for the fingering data are not very accurate, the synchronized result is not ideal most of the time. This makes it hard for me to conduct integration tests and get results with even moderate accuracy at this point. Next week, I plan to look for ways to improve the situation, better synchronize all three parts, and try to get better test results.

Extra question:

To successfully implement and debug my project, I had to learn JavaScript for dynamic client-side interactions in HTML. Since most of what Django can do produces static pages, the JavaScript sections in my HTML helped me a lot in constructing the web app. I also learned the Web Serial API for integrating the web app with the fingering detection system, and SVG for dynamic diagram coloring. I used interactive tutorials, official documentation, online discussion forums like Stack Overflow, and hands-on experiments to acquire this new knowledge and deepen my understanding.