This past week I have been working on the final steps of integration for the dirt detection subsystem. This included both shortening the interval between BLE messages and fixing the way the data is represented in the AR application once the dirt detection data has been transmitted from the Jetson to the iPhone.

The BLE transmission was initially incredibly slow, but last week I managed to increase the transmission rate significantly. By this time last week, we had messages sending roughly every 5.5 seconds, and I had conducted a series of tests to determine the statistical average for this delay. Our group, however, knew that the capability of BLE transmission far exceeded the results that we were getting. This week, I timed the different components within the BLE script. The script which runs on the Jetson can be broken down into two parts: 1) the dirt detection component, and 2) the actual message serialization and transmission. The dirt detection component was tricky to integrate into the BLE script because each script relies on a different Python version. Since the dependencies for these scripts did not match (and I was unable to resolve these dependency issues after two weeks of research and testing), I had resorted to running one script as a subprocess within the other. After timing the subcomponents within the overall script, I found that the dirt detection was the component causing the longest delay. I also discovered that sending the data over BLE to the iPhone took just over a millisecond. I continued by timing each separate component within the dirt detection script. At first glance, there was no issue, as the script ran pretty quickly when started from the command line, but the delay in opening the camera on every invocation was what made the script run so slowly. I tried to mitigate this issue by returning an object from the script to the outer process which was calling it, but this did not make sense, as the data could only be read as serial data, and the dependencies would not have matched to be able to handle an object of that type. Harshul actually came up with an incredibly clever solution: he proposed using the command line to pipe in an argument instead.
Since the subprocess function from Python effectively takes in command line arguments and executes them, we would pipe in a newline character each time we wanted to query another image from the script. This took very little refactoring on my end, and we have now sped up the script to be able to send images as fast as we would need. Now, the bottleneck is the frame rate of the CSI camera, which is only 30FPS, but our script can now (in theory) handle sending around 250 messages per second.
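The newline-piping idea can be sketched roughly as follows. This is a minimal illustration rather than our actual script: `WORKER_SOURCE`, `start_worker`, `query`, and `stop_worker` are hypothetical names, the worker's "classifier" is a placeholder, and `sys.executable` stands in for the second Python interpreter that the real setup would launch.

```python
import subprocess
import sys

# Hypothetical stand-in for the dirt detection script. It would do its slow
# setup (opening the camera) once, then answer one query per newline on stdin.
WORKER_SOURCE = r"""
import sys
import time

frame_count = 0
while True:
    line = sys.stdin.readline()
    if not line:                      # stdin closed: stop the worker
        break
    frame_count += 1
    is_dirty = frame_count % 2        # placeholder for the real classifier
    print(f"{time.time()},{is_dirty}", flush=True)
"""


def start_worker():
    """Launch the worker once and keep its stdin/stdout pipes open."""
    return subprocess.Popen(
        # Assumption: the real system would point at the other Python version.
        [sys.executable, "-c", WORKER_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
        bufsize=1,  # line buffered
    )


def query(worker):
    """Send a newline to request one result, then read back one line."""
    worker.stdin.write("\n")
    worker.stdin.flush()
    timestamp, is_dirty = worker.stdout.readline().strip().split(",")
    return float(timestamp), bool(int(is_dirty))


def stop_worker(worker):
    """Closing stdin ends the worker's read loop so it can exit cleanly."""
    worker.stdin.close()
    worker.wait(timeout=5)
    worker.stdout.close()
```

The point of the design is that the expensive setup happens once per process instead of once per image, so each later query only costs a pipe write and a pipe read.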

Something else I worked on in this past week was allowing the dirt detection data to be successfully rendered on the user’s side. Nathalie created a basic list data structure which stored timestamps along with some world coordinate. I added logic which sequentially iterated through this list, checking whether the Jetson’s timestamps matched the timestamp from within the script, and then displaying the respective colors on the screen depending on what the Jetson communicated to the iPhone. This iterative search was also destructive (elements were being popped off the list from the front, as in a queue data structure). This is because both the timestamps in the queue and the timestamps received from the Jetson are monotonically increasing, so we never have to worry about matching a timestamp with something that was in the past. In the state that I left the system in, we were able to draw small segments depending on the Jetson’s data, but Harshul and I are still working together to make sure that the area which is displayed on the AR application correctly reflects the camera’s view. As a group, we have conducted experiments to find out what the correct transformation matrix would be for this situation, and it now needs to be integrated. Harshul has already written some logic for this, and I simply need to tie his logic to the algorithm that I have been using. I do not expect this to take very long.
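The destructive search described above can be sketched like this. The AR side is written in Swift, so this Python version only illustrates the logic; `match_message`, the `(timestamp, coordinate)` tuple layout, and the `tolerance` window are assumptions made for the example.

```python
from collections import deque


def match_message(position_queue, message_time, tolerance=0.05):
    """Pop entries from the front of the queue until one lies within
    `tolerance` seconds of `message_time`.

    Returns the matched coordinate, or None if nothing matches. Because both
    timestamp streams only increase, anything older than the current message
    can be discarded for good.
    """
    while position_queue:
        entry_time, coordinate = position_queue[0]
        if entry_time < message_time - tolerance:
            position_queue.popleft()   # too old, can never match again
        elif entry_time <= message_time + tolerance:
            position_queue.popleft()   # consume the match
            return coordinate
        else:
            return None                # head is still in the future; keep it
    return None
```

Each queue entry is examined at most once across all messages, so the total matching cost stays linear in the number of stored positions.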

I have also updated the timestamps on the Jetson’s side and the iPhone side to be interpreted as the data type Double. This is because we can now achieve a much finer time granularity and send a higher volume of data. I have brought the rate up to 10 messages per second, which is an incredible improvement over the one message every 5.5 seconds from before. If we wish to make the granularity finer still, the refactoring process would be very short. Again, the bottleneck is now the camera’s frame rate, rather than any of the software/scripts we are running.

Earlier in the week, I spent some time with Nathalie mounting the camera and the Jetson. I mounted the camera to the vacuum at the angle which I tested for, and Nathalie helped secure the rest of the system. Harshul designed and cut out the actual hardware components, and Nathalie and I worked together to mount the Jetson’s system to the vacuum. Harshul handled the image tracking side of things. We now only need to mount the active illumination in the following week.

One consideration I had thought of in order to reduce network traffic was to simply not send any Bluetooth message from the Jetson to the iPhone when a location was clean. This at first seems like a good idea, but it was something I ended up scrapping. Consider the case where a location was initially flagged as dirty. If a user runs the vacuum back over this same location and cleans it, they should be able to see that the floor is now clean. Skipping clean messages would cause the user to never know whether their floor had been cleaned properly.

As for next steps, we have just a little more to do for mounting, and the bulk of the next week will presumably be documentation and testing. I do not think we have any large blockers left, and feel like our group is in much better shape for the final demo.

Erin’s Status Report for 4/20

Over this past week, I have been working on the Bluetooth transmission system between the Jetson and the iPhone, as well as mounting the entire dirt detection system. I have also been working on tuning the dirt detection algorithm, as the algorithm I previously selected operated solely on static images. When working with the entire end-to-end system, the inputs to the Jetson camera are different from the inputs I used to test my algorithms. This is because the Jetson camera is limited in terms of photo quality, and it is not able to capture certain nuances in the images when the vacuum is in motion, whereas these details may have been picked up when the system was at rest. Moreover, the Bluetooth transmission system is now fully functional. I obtained roughly an 8x speedup; I was previously working with a 45 second average delay between messages. After calibrating the Jetson’s peripheral script, I was able to reduce the delay between messages to just 5.5 seconds (on average). This is still not as fast as we had initially hoped, but I recognize that running the dirt detection script will incur a delay, and that it may not be possible to narrow this window any further. I am still looking to see if there are any remaining ways to speed up this part of the system, but this is no longer my main area of concern.

Additionally, prior to this past week, the BLE system would often cause Segmentation Faults on the Jetson’s side when terminating the script. This was a rather pressing issue, as recurring Segmentation Faults would cause the Bluetooth system to require reboots at a regular rate. This is not maintainable, nor is it desired behavior for our system. I was able to mitigate this issue by refactoring the iPhone’s bluetooth connection script. Prior to this week, I had not worked too extensively with the Swift side of things; I was focused more on the Python scripting and the Jetson. This week, I helped Nathalie with her portion of the project, as she had heavy involvement with the data which the Jetson was transmitting to the iPhone. After a short onboarding session with my group, I was able to make the cleanliness data visible from the AR mapping functions, rather than only within scope for the BLE connection. Nathalie now has access to all the data which the Jetson sends to the user, and will be able to fully test and tune the parameters for the mapping. We no longer have any necessity for backup Bluetooth options.

Beyond the software, I worked closely with my group to mount the hardware onto the vacuum system. Since I was the one who performed the tests which determined the optimal angle at which to mount the camera, I was the one who ultimately put the system together. Currently, I have fully mounted the Jetson camera to the vacuum. We still need to affix the active illumination system alongside the Jetson camera, which I am not too worried about. The new battery pack we purchased for the Jetson has arrived, and once we finish installing WiFi capabilities onto the Jetson, we will be able to attach both the battery system and the Jetson itself to the vacuum system.

I have since started running some tests on the end-to-end system; I am currently trying to figure out the bounding boxes of the Jetson camera. Since the camera lends itself to a bit of a “fisheye” shape, the image that we retrieve from the CSI camera may need to be slightly cropped. This is easily configurable, and will simply require more testing.

Over the next week, I intend to wrap up some testing with the dirt detection system. The computer vision algorithm may need a bit more tuning, as the context in which we are running the system can significantly change the performance of the algorithm. Moreover, I hope to get WiFi installed onto the Jetson by tomorrow. The lack of WiFi is causing issues with the BLE system, as we are relying on UNIX timestamps to match location data from the AR app with the Jetson’s output feed. Without a stable WiFi connection, the clock on the Jetson is constantly out of sync. Installing WiFi is not a hard task, and I do not foresee this causing any issues in the upcoming days. I also plan to aid my group members in any aspect of their tasks which they may be struggling with.

Erin’s Status Report for 4/6

This past week, I have been working on further integration of BLE (Bluetooth Low Energy) into our existing system. Last Monday, I had produced a fully functional system after resolving a few Python dependency issues. The Jetson was able to establish an ongoing BLE connection with my iPhone, and I had integrated the dirt detection Python script into the Bluetooth pipeline as well. There were a couple issues that I had encountered when trying to perform this integration:

- Dependency issues: The Python version which is required to run the dirt detection algorithm is different from the version which supports the necessary packages for BLE. In particular, the package I have been using (and the package which most Nvidia forums suggest) has a dependency on Python3.6. I was unable to get the same package working with Python3.8 as the active Python version. Unfortunately, the bless package which spearheads the BLE connection from the Jetson to the iPhone is not compatible with Python3.6; I had resolved this issue by running Python3.8 instead. Since we need one end-to-end script running which queries results from the dirt detection module and sends data via BLE using the bless Bluetooth script, I needed to keep two conflicting Python versions active at the same time, and run one script which is able to utilize both of them.

- Speed constraints: While I was able to get the entire dirt detection system running, the rate at which the iPhone is able to receive data is incredibly low. Currently, I have only been able to get the Jetson to send one message about every 25-30 seconds, which is nowhere near what our use-case requirements call for. This issue is further exacerbated by the constraints of BLE. The system is incredibly limited in the amount of data it is able to send at once. In other words, I am unable to batch multiple runs of the dirt detection algorithm and send them to the iPhone as a single message, as that message length would exceed the capacity that the BLE connection is able to uphold. One thought that I had was to send a single timestamp, and then send over a number of binary values, denoting whether the floor was clean or dirty within the next couple of seconds (or milliseconds) following the original timestamp. This implementation has not yet been tested, but I plan to create and run this script within the week.

- Jetson compatibility: On Monday, the script was fully functional, as shown by the images I have attached below. However, after refactoring the BLE script and running tests for about three hours, the systemd-networkd system daemon on the Jetson errored out, and the bluetooth connection began failing consistently upon reruns of the BLE script. After spending an entire day trying to patch this system problem, I had no choice but to completely reflash the Jetson’s operating system. This resulted in a number of blockers which have taken me multiple days to fix, and some are still currently being patched. The Jetson does not come with built in WiFi support, and lacks the necessary drivers for native support of our WiFi dongle. Upon reflashing the Jetson’s OS, I was unable to install, patch, download, or upgrade anything on the Jetson, as I was unable to connect the machine to the internet. Eventually, I was able to get ahold of an Ethernet dongle, and I have since fixed the WiFi issue. The Jetson now no longer relies on a WiFi dongle to operate, although now I am able to easily set that up, if necessary. In addition, while trying to restore the Jetson to its previous state (before the system daemon crashed), I ran into many issues while trying to install the correct OpenCV version. It turns out that the built-in Linux package for OpenCV does not contain the necessary packages for the Jetson’s CSI camera. As such, the dirt detection script that I had was rendered useless due to a version compatibility issue. I was unable to install the gstreamer package; I had tried everything from Linux command line installation to a manual build of OpenCV with the necessary build flags. After being blocked for a day, I ended up reflashing the OS, yet again.
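The untested batching idea from the speed constraints item above (one timestamp followed by a run of clean/dirty bits) could look something like the sketch below. The wire layout, the names `pack_batch` and `unpack_batch`, and the 20-byte `MAX_PAYLOAD` limit are all assumptions for illustration, not measured values from our BLE connection.

```python
import struct

MAX_PAYLOAD = 20                     # bytes; assumed per-message BLE limit
HEADER = struct.Struct("<dB")        # float64 start time + uint8 flag count
MAX_FLAGS = (MAX_PAYLOAD - HEADER.size) * 8


def pack_batch(start_time, flags):
    """Pack one timestamp and a list of clean/dirty booleans into bytes."""
    if len(flags) > MAX_FLAGS:
        raise ValueError("batch does not fit in one message")
    bits = bytearray((len(flags) + 7) // 8)
    for i, dirty in enumerate(flags):
        if dirty:
            bits[i // 8] |= 1 << (i % 8)   # one bit per detection result
    return HEADER.pack(start_time, len(flags)) + bytes(bits)


def unpack_batch(payload):
    """Inverse of pack_batch: recover the timestamp and the flag list."""
    start_time, count = HEADER.unpack_from(payload)
    bits = payload[HEADER.size:]
    flags = [bool((bits[i // 8] >> (i % 8)) & 1) for i in range(count)]
    return start_time, flags
```

Under these assumptions, ten detection results cost 11 bytes in total, compared with ten separate timestamped messages.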

This week has honestly felt rather unproductive even though I have put in many hours; I have not dealt with so many dependency conflicts before. Moving forward, my main goal is to restore the dirt detection and BLE system to its previous state. I have the old, fully functional code committed to Github, and the only thing left to do is to set up the environment on the Jetson, which has proven to be anything but trivial. Once I get this set up, I am hoping to significantly speed up the BLE transmission. If this is not possible, I will look into further ways to ensure that our AR system is not missing data from the Jetson.

Furthermore, I have devised tests for the BLE and dirt detection subsystems. They are as follows:

- BLE Connection: Try to send dirty/clean messages thirty times. Find delay between each test. Consider the summary statistics of this data which we collect. Assuming the standard deviation is not large, we can focus more on the mean value. We hope for the mean value to be around 0.1s, which gives us fps=10. This may not be achievable given the constraints of BLE, but with these tests, we will be able to determine what our system can achieve.

- Dirt Detection

  - Take ten photos each of:

    - Clean floor

    - Unclean floor, sparse dirt

    - Unclean floor, heavy dirt

  - Assert that the dirt detection module classifies all ten images of the clean floor as “clean”, and all twenty images of the unclean floor as “dirty”. If this is not met, we will need to further tune the thresholds of the dirt detection algorithm.

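For the BLE connection test above, the summary statistics could be computed with a helper along these lines. This is only a sketch: `summarize_delays` is a hypothetical name, and it assumes the arrival time of each message has been logged in seconds.

```python
import statistics


def summarize_delays(arrival_times):
    """Summarize the gaps between consecutive message arrival times."""
    delays = [later - earlier
              for earlier, later in zip(arrival_times, arrival_times[1:])]
    mean = statistics.mean(delays)
    return {
        "count": len(delays),
        "mean_s": mean,
        "stdev_s": statistics.stdev(delays),   # sample standard deviation
        "messages_per_second": 1.0 / mean,
    }
```

A mean near 0.1 s with a small standard deviation would correspond to the target of about 10 messages per second.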

Erin’s Status Report for 3/30

This week, I worked primarily on trying to get our Jetson hardware components configured so that they could run the software that we have been developing. Per our design, the Jetson is meant to run a Python script with a continuous video feed coming from the camera which is attached to the device. The Python script’s input is the aforementioned video feed from the Jetson camera, and the output is a timestamp along with a single boolean, detailing whether dirt has been detected on the image. The defined inputs and outputs of this file have changed since last week; originally I had planned to output a list of coordinates where dirt has been detected, but upon additional thought, mapping the coordinates from the Jetson camera may be too difficult a task, let alone the specific pixels highlighted by the dirt detection script. Rather, we have opted to simply send a single boolean, and narrow the window of the image where the dirt detection script is concerned. This decision was made for two reasons: 1) mapping specific pixels from the Jetson to the AR application in Swift may not be feasible with the limited resources we have, and 2) there is a range for which the active illumination works best, and where the camera is able to collect the cleanest images. I have decided to focus on that region of the camera’s input when processing the images through the dirt detection script.

Getting the Jetson camera fully set up was not as seamless as it had originally seemed. I did not have any prior trouble with the camera until recently, when I started seeing errors in the Jetson’s terminal claiming that there was no camera connected. In addition, the device failed to show up when I listed the camera sources, and if I tried to run any command (or scripts) which queried from the camera’s input, the output would declare the image’s width and height dimensions were both zero. This confused me, as this issue had not previously presented itself in any earlier testing I had done. After some digging, I found out that this problem typically arises when the Jetson is booted up without the camera inserted. In other words, in order for the CSI camera to be properly read as a camera input, it must be connected before the Jetson has been turned on. If the Jetson is unable to detect the camera, a restart may be necessary.

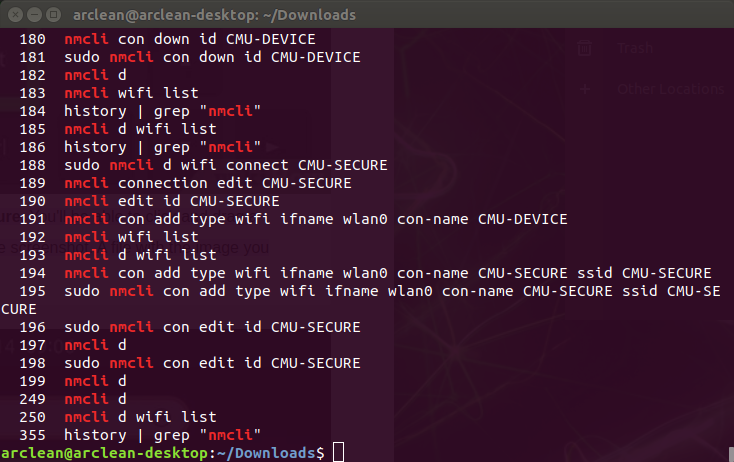

Another roadblock I ran into this week was trying to develop on the Jetson without an external monitor. I have not been developing directly on the Jetson device, since it has not been strictly necessary for the dirt detection algorithms to work. However, I am currently trying to get the data serialization to work, which requires extensive interaction with the Jetson. Since I have been moving around, carrying a monitor with me is simply impractical. As such, I have been working with the Jetson connected to my laptop, rather than an external monitor with a USB mouse and keyboard. I used the `sudo screen` command in order to see the terminal of the Jetson, which is technically enough to get our project working, but I encountered many setbacks. For one, I was unable to view image outputs via the command line. When I was on campus, the process of getting the WiFi system set up on the Jetson was also incredibly long and annoying, since I only had access to the command line. I ended up using the `nmcli` command line tool to connect to CMU-SECURE, and only then was I able to fetch the necessary files from Github. Since getting back home, I have been able to get a lot more done, since I have access to the GUI.

I am currently working on trying to get the Jetson to connect to an iPhone via a Bluetooth connection. To start, getting Bluetooth set up on the Jetson was honestly a bit of a pain. We are using a Kinivo BT-400 dongle, which is compatible with Linux. However, the device was not locatable by the Jetson when I first tried plugging it in, and there were continued issues with Bluetooth setup. Eventually, I found out that there were issues with the drivers, and I had to completely wipe and restore the hardware configuration files on the Jetson. The Bluetooth dongle seems to have started working after the driver update and a restart. I have also found a command (`bluetooth-sendto --device=[MAC_ADDRESS] file_path`) which can be used to send files from the Jetson to another device. I have already written a bash script which can run this command, but sadly, this may not be usable. Unfortunately, Apple seems to have placed certain security measures on their devices, and I am not sure that I will find a way to circumvent those within the remaining time we have in the semester (if at all). An alternative option which Apple does allow is BLE, which stands for Bluetooth Low Energy. This is a technology which is used by CoreBluetooth, a framework which can be used in Swift. The next steps for me are to create a dummy app which uses the CoreBluetooth framework, and show that I am able to receive data from the Jetson. Note that this communication does not have to be two-way; the Jetson does not need to be able to receive any data from the iPhone; the iPhone simply needs to be able to read the serialized data from the Python script. If I am unable to get the Bluetooth connection established, in the worst case, I am planning to have the Jetson continuously push data to either some website, or even a Github repository, which can then be read by the AR application. Obviously, doing this would incur higher delays and is not scalable, but this is a last resort.
Regardless, the Bluetooth connection process is something I am still working on, and this is my priority for the foreseeable future.

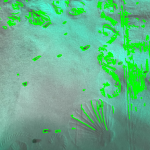

Erin’s Status Report for 3/23

This week, I continued to try and improve the existing dirt detection model that we have. I also tried to start designing the mounting units for the Jetson camera and computer, but I realized I was only able to draw basic sketches of what I imagined the components to look like, as I have no experience with CAD and 3D modeling. I have voiced my concerns about this with my group, and we have planned to sync on this topic. Regarding the dirt detection, I had a long discussion with a friend who specializes in machine learning. We discussed the tradeoffs of the current approach that I am using. The old script, which produced the results shown in last week’s status report, relies heavily on Canny edge detection when classifying pixels to be either clean or dirty. The alternative approach my friend suggested was to use color to my advantage, which is something our use-case constraints make possible and which I hadn’t realized earlier. Since our use case confines the flooring to be white and patternless, I am able to assume that anything “white” is clean, assuming that the camera is able to capture dirt which is visible to the human eye from a five foot distance. Moreover, we are using active illumination. In theory, since our LED is green, I can simply threshold all values between certain shades of green to be “clean”, and any darker colors, or colors with grayer tones, to be dirt. This is because (in theory) the dirt that we encounter will have a height component. As such, when the light from the LED shines onto the particle, the particle will cast a shadow behind it, which will be picked up by the camera. With this approach, I would only need to look for shadows, rather than look for the actual dirt in the image frame.
Unfortunately, my first few attempts at working with this approach did not produce satisfactory results, but it would be a lot less computationally expensive than the current script that I am running for dirt detection, which relies heavily on the CPU-intensive OpenCV package.
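To make the shadow idea concrete, here is a rough per-pixel sketch using only the standard library. The hue band and the saturation and brightness thresholds are made-up placeholder values, not tuned numbers from my experiments, and `is_shadow` and `shadow_fraction` are hypothetical names; a practical version would operate on whole image arrays instead of looping over pixels.

```python
import colorsys


def is_shadow(rgb, hue_band=(0.22, 0.45), min_saturation=0.25, min_value=0.45):
    """Return True if a pixel does NOT look like floor lit by the green LED.

    A pixel counts as clean only if its hue falls inside the assumed green
    band and it is both saturated and bright enough. Darker or grayer pixels
    are treated as shadow, i.e. possible dirt.
    """
    r, g, b = (channel / 255.0 for channel in rgb)
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    lit_green = (hue_band[0] <= hue <= hue_band[1]
                 and saturation >= min_saturation
                 and value >= min_value)
    return not lit_green


def shadow_fraction(pixels):
    """Fraction of pixels flagged as shadow in an iterable of RGB tuples."""
    pixels = list(pixels)
    return sum(is_shadow(p) for p in pixels) / len(pixels)
```

A frame could then be called dirty when `shadow_fraction` exceeds some cutoff, which is one more parameter that would need tuning.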

I also intend to get the AR development set up fully on my end soon. Since Nathalie and Harshul have been busy, they have not had the time to sync with me and get my development environment fully set up, although I have been caught up to speed regarding the capabilities and restrictions of the current working model.

My next steps as of this moment are to figure out the serialization of the data from the Jetson to the iPhone. While the dirt detection script is still imperfect, this is a nonblocking issue. I currently am in possession of most of our hardware, and I intend on getting the data serialization via Bluetooth done within the next week. This also will allow me to start benchmarking the delay that it will take for data to get from the Jetson to the iPhone, which is one of the components of delay we are worried about with regard to our use case requirements. We have shuffled around the tasks slightly, and so I will not be integrating the dirt detection right now; I will simply be working on data serialization. The underlying idea here is that even if I am serializing garbage data, that is fine; we simply need to be able to gauge how well the Bluetooth data transmission performs. If we need to figure out a more efficient method, I can look into removing image metadata, which would reduce the size of the packets during the data transfer.

Erin’s Status Report for 3/16

The focus of my work this week was calibrating the height and angle at which we would mount the Jetson camera. In the past two weeks, I spent a sizable amount of my time creating a procedure any member of my group could easily follow to determine the best configuration for the Jetson camera. This week, I performed the tests, and have produced a preliminary result: I believe that mounting the camera at four inches above floor level and a forty-five degree angle will produce the highest quality results for our dirt detection component. Note that these results are subject to change, as our group could potentially conduct further testing at a higher granularity. In addition, I still have to sync with the rest of my group members in person to discuss matters further.

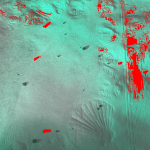

Aside from running the actual experiments to determine the optimal height and angle for the Jetson camera, I also included our active illumination into the dirt detection module. We previously received our LED component, but we had run the dirt detection algorithms without it. Incorporating this element into the dirt detection system gives us a more holistic understanding of how well our computer vision algorithm performs with respect to our use case. As shown by the images I used for the testing inputs, my setup wasn’t perfect—the “background”, or “flooring” is not an untextured, patternless white surface. I was unable to perfectly mimic our use case scenario, as we have not yet purchased the boards that we intend to use to demonstrate the dirt detection component of our product. Instead, I used paper napkins to simulate the white flooring that we required in our use case constraints. While imperfect, this setup configuration suffices for our testing.

Prior to running the Jetson camera mount experiment, I had been operating under the assumption that the output of this experiment would depend heavily on the outputs that the computer vision script generated. However, I realized that for certain input images, running the computer vision script was wholly unnecessary; the input image itself did not meet our standards, and that camera configuration should not have been considered, regardless of the performance of the computer vision script. For example, at a height of two inches and an angle of zero degrees, the camera was barely able to capture an image of any worthy substance. This is shown by Figure 1 (below). There is far too little workable data within the frame; it does not capture the flooring over which the vacuum had just passed. As such, this input image alone rules out this height and angle as a candidate for our Jetson camera mount.

I also spent a considerable amount of time refactoring and rewriting the computer vision script that I was using for dirt detection. I have produced a second algorithm which relies more heavily on OpenCV’s built-in functions, rather than preprocessing the inputs myself. While the output of this new algorithm on my test image (the chosen image from the Jetson camera mount experiment) does appear to be slightly noisier than we would like, I did not consider this an incredibly substantial issue. This is because the input image itself was noisy; our use case encompasses patternless, white flooring, but the napkins in the image were highly textured. In this scenario, I believe that the fact that the algorithm detected the napkin patterning is actually beneficial to our testing, which is a factor that I failed to consider the last couple of times I had tried to re-tune the computer vision script.

I am slightly behind schedule with regard to the plan described by our Gantt chart. However, this issue can (and will) be mitigated by syncing with the rest of my group. In order to perform the ARKit Dirt Integration step of our scheduled plan, Nathalie and Harshul will need to have the AR component working in terms of real-time updates and localization.

Within the next week, I hope to help Nathalie and Harshul with any areas of concern in the AR component. In addition, I plan to start designing the camera mount, and place an order for the Jetson camera extension cord, as we have decided that the Jetson will not be mounted very close to the camera.

Erin’s Status Report for 3/9

I spent the majority of my time this week writing the design report. I worked primarily on the testing and verification section of the design document. Our group had thought about the testing protocols for multiple use-case requirements, but there were scenarios I found we had failed to consider. For example, I added the section regarding the positioning of the camera. In addition, much of the content that we had before for testing and verification had to be refined; the granularity at which we had specified our tests was not fine enough. I redesigned several of the testing methods, and added a couple more which we had not previously covered in depth in our presentations.

The Jetson Camera Mount test was a new component of our Testing and Verification section that we had not considered before. I designed the entirety of this section this week, and have started to execute the plan itself. We had briefly discussed how to mount the camera, but our group had never gotten into the nitty-gritty details of the design. While creating the testing plan, I realized that there would be additional costs associated with the camera component as well, and realized that mounting the camera could introduce many other complications, which caused me to brainstorm additional contingency plans. For example, the camera would be mounted separately from the Jetson computer itself. If we were to mount the computer higher, with respect to the camera, we would need a longer wire. The reasoning behind this is to combat overheating, as well as to separate the device from any external sources of interference. Moreover, I designed the actual testing plan for mounting the camera from scratch. We need to find the optimal angle for the camera so it can capture the entire span of space that is covered by the vacuum, and we also need to tune the height of the camera such that there is not an excessive amount of interference from dirt particles or vibrations from the vacuum itself. To account for all of these factors, I created a testing plan which accommodated eight different camera angles, as well as three different height configurations. The number of configurations was determined by a couple of simple placement tests I conducted with my group using the hardware that we already had. The next step, which I hope to have completed by the end of the week, would be to execute this test and to begin to integrate the camera hardware into the existing vacuum system. If a gimbal or specialized 3D printed mount is needed, I plan to reach out to Harshul to design it, as he has more experience in this field than the rest of us.

Our group is on pace with our schedule, although the ordering of some components of our project has been switched around. Additionally, after accounting for the slack time, we are in good shape to produce a demonstrable product by the demo deadline, which is coming up in about a month. I would like to have some of the hardware components pieced together sooner rather than later, though, as I can foresee the Jetson mounts causing some issues in the future. I hope to get these details sorted out while we still have the time to experiment. I also plan to get the AR system set up to test on my device. One thing that has changed from before is that Nathalie and Harshul discovered that LiDAR is not strictly necessary to run the AR scripts that we plan to integrate into our project; the LiDAR scanner simply makes the technology work better in terms of speed and accuracy. We would still not use my phone to demonstrate our project, but with this knowledge, I can help them more with the development process. I hope to get this fully set up within the week, although I do not think this is going to be a blocker for anyone in our group. I recently created a private GitHub repository, and I have pushed all our existing dirt detection code to the remote so that everyone is able to access it. When Harshul has refined some of his code for the AR component of our project, I will be able to pull it and try to run it on my own device. Our group has also discussed our GitHub etiquette: once software development ramps up, we plan on pushing from our own individual branches and using pull requests before merging into the main branch. For now, we plan to keep the process lightweight, as we are working on non-intersecting codebases.

Erin’s Status Report for 2/24

This week I worked primarily on implementing the software component of our dirt detection. We had ordered the hardware in the previous week, but since we designed our workflow so that tasks could proceed in parallel, I was able to get started on developing a computer vision algorithm for our dirt detection even though Harshul was still working with the Jetson. Initially, I had thought that I would be using one of Apple’s native machine learning models to solve this detection problem, and I had planned on testing multiple different models (as mentioned in last week’s status report) against a number of toy inputs. However, I had overlooked the hardware that we were using to solve this specific problem: the camera that we bought was meant to be compatible with the Jetson. As such, I ended up opting for a different particle detection algorithm. The algorithm that I used is written in Python, and I drew a lot of inspiration from a particle detection algorithm I found online. I have worked with the NumPy and OpenCV packages extensively, so I was able to tune the existing code to our use case. I tested the script on a couple of sample images of dirt and fuzz against a white napkin. Although this did not perfectly simulate the use case that we have decided to go with, it was sufficient for determining whether this algorithm was a good enough fit. I realized that the algorithm could only be tuned so much with its existing parameters, so I experimented with a number of options for preprocessing the image. I ended up tuning the contrast and the brightness of the input images, and I found a general threshold that allowed for a low false negative rate while still filtering out a significant amount of noise. Here are the results that my algorithm produced:

Beyond the parameter tuning and the image preprocessing, I tried numerous other algorithms for our dirt detection. I ran separate scripts and tried tuning methods for each of them. Most of them did not fit our use case at all, as they either picked up far too much noise or were unable to terminate within a reasonable amount of time, indicating that their computational cost was likely far too high to be compatible with our project.
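The contrast and brightness preprocessing followed by thresholding could look roughly like the sketch below. This is not the script I actually ran: it uses NumPy only, a tiny synthetic image in place of a real photo, and the `alpha`, `beta`, and `threshold` values are assumed for illustration rather than the tuned values.

```python
import numpy as np


def adjust_contrast_brightness(gray, alpha, beta):
    """Apply out = alpha * pixel + beta, clipped to the valid 8-bit range."""
    scaled = alpha * gray.astype(np.float32) + beta
    return np.clip(scaled, 0, 255).astype(np.uint8)


def dirt_mask(gray, alpha=1.5, beta=-40.0, threshold=100):
    """Flag pixels that are still darker than `threshold` after preprocessing."""
    adjusted = adjust_contrast_brightness(gray, alpha, beta)
    return adjusted < threshold


# Synthetic stand-in for a photo of dirt on a white napkin (assumed pixel values).
image = np.full((6, 6), 230, dtype=np.uint8)  # bright background
image[2:4, 2:4] = 60                          # a dark 2x2 "particle"
image[0, 5] = 200                             # mild shading that should be ignored

mask = dirt_mask(image)
print(int(mask.sum()))  # number of pixels flagged as dirt
```

Raising the contrast pushes the bright background toward saturation while dark particles stay dark, which is why a single global threshold can separate the two in this toy case.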

I have also started working on the edge detection, although I have not made as much progress as I would have liked at this point.

I am currently a little behind schedule, as I have not entirely figured out a way to run an edge detection algorithm for our room mapping. This delay was in part due to our faulty Jetson, which Harshul has since replaced. I plan to work a little extra this week, and maybe put in some extra time over the upcoming break, to make up for the action items I am missing.

Within the next week, I hope to get a large part of the edge detection working. My hope is that I will be able to run a small test application from either Harshul’s or Nathalie’s phone (or iPad), as my phone does not have the hardware required to test this module. I will have to find some extra time when we are all available to sync on this topic.

Erin’s Status Report for 2/17

This week I mainly worked on getting started with the dirt detection and figuring out what materials to order. Initially, our group had wanted to use an LED for active illumination and then run our dirt detection module on the resulting illuminated imagery, but I found an existing product, which we ended up purchasing, that takes care of this for us. The issue with the original design was that we had to be careful about whether the LEDs that we were using were vision safe, which raised ethical questions as well as design questions that my group and I do not know enough about. Moreover, I have started working a little with Swift and Xcode. I watched a few Swift tutorials over the week, and I experimented with some of the syntax in my local development environment. I have also started doing research on model selection for our dirt detection problem. This is a crucial component of our end-to-end system, as it plays a large part in how easily we will be able to achieve our use case goals. I have looked into the Apple Live Capture feature, as this is one route that I am exploring for dirt detection. The benefit of this is that it is native to Apple Vision, so I should have no issue integrating it into our existing system. However, the downside is that this model is typically used for object detection rather than dirt detection, and dirt particles might be too small for the model to pick up. Another option I am currently considering is the DeepLabV3 model. This model specializes in segmenting the pixels within an image into different objects. For our use case, we just need to differentiate between the floor and essentially anything which is not the floor. If we are able to detect small particles as “different objects” from the floor, we could move forward with some simple case analysis on the size of such objects for our dirt detection.
The aim is to experiment with all these models over the next couple of days, and settle on a model of choice by the end of the week.
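The size-based casing idea could be sketched as follows. This assumes a hypothetical label map in which a segmentation step has already assigned the floor the id 0 and each non-floor region its own integer id; the `MAX_DIRT_AREA_PX` cutoff and the `classify_regions` helper are made up for illustration and are not part of any model's API.

```python
import numpy as np

FLOOR_LABEL = 0       # assumed id for floor pixels
MAX_DIRT_AREA_PX = 4  # assumed upper bound on a dirt particle's area, in pixels


def classify_regions(label_map, max_dirt_area=MAX_DIRT_AREA_PX):
    """Map each non-floor region id to 'dirt' or 'other' based on its pixel area."""
    ids, areas = np.unique(label_map, return_counts=True)
    result = {}
    for region_id, area in zip(ids.tolist(), areas.tolist()):
        if region_id == FLOOR_LABEL:
            continue  # the floor itself is never a dirt candidate
        result[region_id] = "dirt" if area <= max_dirt_area else "other"
    return result


# Hypothetical label map: 0 = floor, 1 = a one-pixel speck, 2 = a larger 3x3 object.
label_map = np.zeros((6, 6), dtype=np.int32)
label_map[1, 1] = 1
label_map[3:6, 2:5] = 2

print(classify_regions(label_map))
```

In practice the area cutoff would depend on the camera's height and resolution, so it would need to be tuned once the mount is finalized.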

We are mostly on schedule, but we ran into some technical difficulties with the Jetson. We did not initially plan on using a Jetson; rather, this was a quick change of plans when we were choosing hardware, as the department was low in stock on Raspberry Pis and high in stock on Jetsons. The Jetson also supports CUDA acceleration, which suits our use case, since our project is processing-intensive. Our issue with the Jetson may cause delays in end-to-end integration and is stopping Harshul from performing certain initial tests he wanted to run, but I am able to experiment with my modules independently of the Jetson, and I am currently not blocked. In addition, since we have replaced the active illumination with an existing product, we are ahead of schedule on that front!

In the next week, I (again) hope to have a model selected for dirt detection. I also plan to help my groupmates write the design document, which I foresee being a fairly time-consuming part of my tasks for next week.

Erin’s Status Report for 2/10

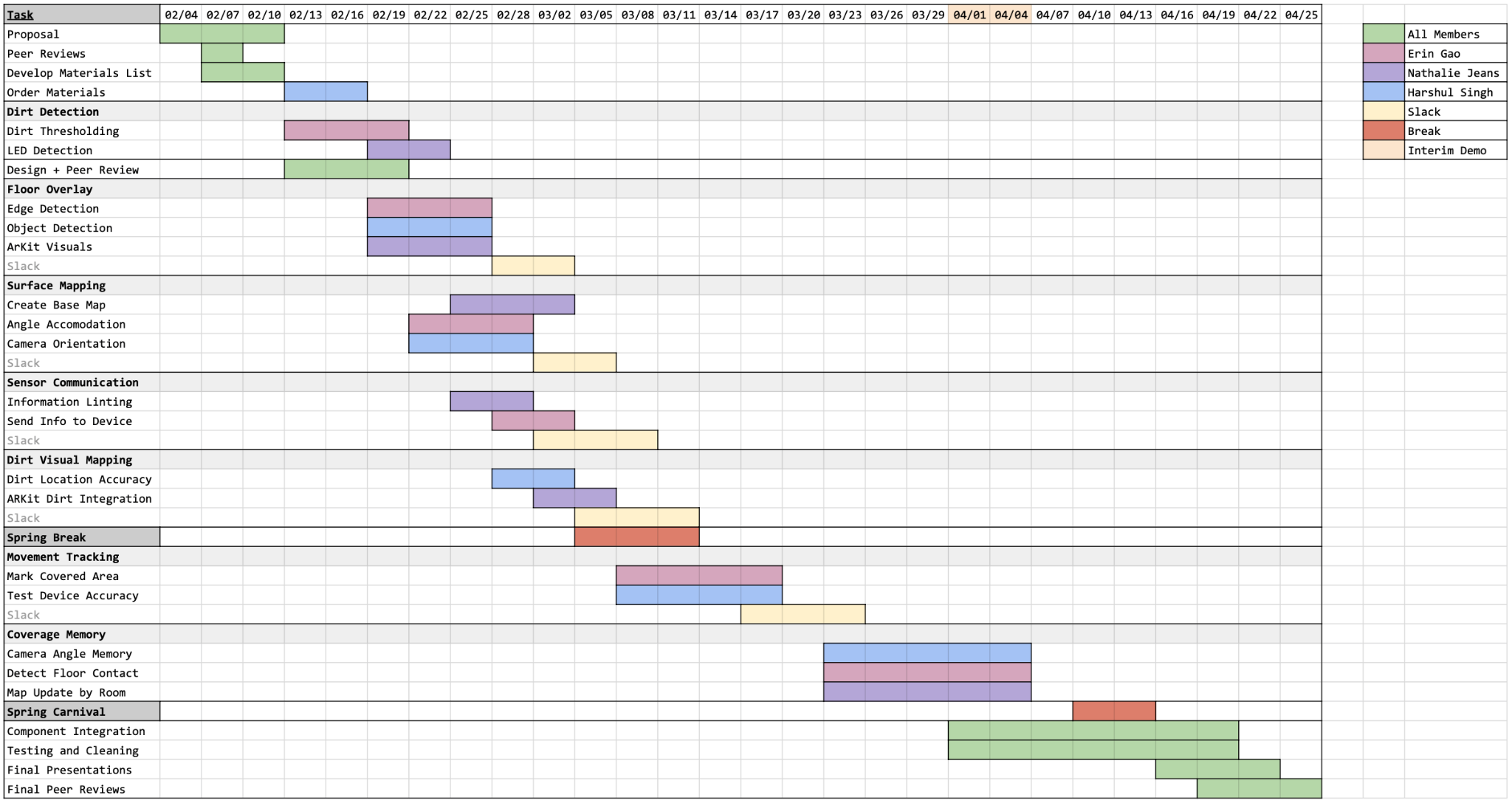

This week I worked primarily on the proposal presentation that I gave in class. I spent time practicing and thinking about how to structure the presentation to make it more palatable. Additionally, prior to my Monday presentation, I spent a good amount of time trying to justify the error values that we were considering for our use case requirements. I also worked on narrowing the scope of our project; I tried to calibrate the amount of work our group would have to do to something I believe is more achievable during the semester. Initially, we had wanted our project to work on all types of flooring, but I realized that the amount of noise that could get picked up on surfaces such as carpet or wood may make our project too difficult. I also spent some time looking at existing vacuum products so that our use case requirements would make sense given the products currently on the market. I worked on devising a Gantt chart (shown below) for our group as well. The Gantt chart is aligned with the schedule of tasks that Nathalie created, and it also shows the planned progression of our project over the course of the fourteen-week semester. Finally, I looked into some of the existing computer vision libraries and read up on ARKit and RealityKit to familiarize myself with the technologies that our group will be working with in the near future.

Our group is on track with respect to our Gantt chart, although I would like us to stay slightly ahead of schedule. We plan on syncing soon and meeting up to figure out exactly what hardware we need to order.

Within the next week, I hope to have a better response to all the questions that were raised in response to our initial presentation. Furthermore, I hope to make headway on dirt detection, as that is the next planned task that I am running point on. This would start with getting the materials ordered, figuring out how our component may fit on a vacuum, and brainstorming a backup plan in case our initial plan for the dirt detection LED falls through.