Challenges and Risk Mitigation:

- Scheduling

- Our schedules conflict, which makes it difficult to find times when we are all free. To mitigate this, we plan to set up an additional meeting outside of class at a time when all of us are available, so that we have dedicated time to sync and collaborate across our individual areas.

- Technical Expertise with Swift

- None of us has direct programming experience with Swift, so there is a concern that this may slow our development velocity. To mitigate this, we plan to start working on the software immediately so that we build familiarity with the Swift language and Apple APIs early.

- Estimation of technical complexity

- Few existing projects or products occupy this space, which makes it challenging to estimate the technical complexity of some of our core features. To mitigate this, we have defined a testing environment that minimizes variability: a monochrome floor with a smooth surface reduces problems with noise and tracking. We also leverage existing tooling such as ARKit to offload technical complexity that is beyond the scope of our 14-week project.

- Hardware Tooling

- Active illumination is something we haven't worked with before. To mitigate this, we plan to draw on Dyson's existing approach of projecting an "eye-safe green laser" onto the floor. As fallbacks, we can scale back to a basic LED light or buy an off-the-shelf device that illuminates the floor. We have also framed our use-case requirements around particles visible to the human eye, which reduces our dependence on active illumination to identify dirt.

- Professor Kim raised the concern that our rear-facing camera may get dirty. Until we prototype and test the actual module, we cannot tell how likely we are to encounter this issue or how much it would affect our project.
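The monochrome, smooth test floor described under "Estimation of technical complexity" matters because it turns dirt detection into a simple "does this pixel differ from the floor?" question. The report does not specify how the cleanliness module detects dirt. The following is a hypothetical sketch of that reasoning, written in Python for brevity, and it makes several assumptions. A camera frame is assumed to arrive as a 2D list of 0-255 grayscale intensities. The function name `detect_dirt`, the sensitivity factor `k`, and the median / median-absolute-deviation thresholding are illustrative choices, not the team's design.

```python
from statistics import median


def detect_dirt(gray, k=6.0):
    """Hypothetical sketch: flag pixels that deviate strongly from a uniform floor.

    gray: 2D list of grayscale intensities (0-255) from a frame of the floor
          (assumed input format).
    k:    hypothetical sensitivity, i.e. how many robust deviations from the
          floor's typical intensity count as a suspected particle.
    Returns a list of (row, col) coordinates of suspected dirt pixels.
    """
    pixels = [p for row in gray for p in row]
    # On a monochrome floor the floor dominates the frame, so the median
    # intensity approximates the floor color even when some dirt is present.
    baseline = median(pixels)
    # Median absolute deviation: a spread estimate that small debris barely moves.
    mad = median(abs(p - baseline) for p in pixels)
    # Guard against a zero threshold on a perfectly flat image.
    spread = max(mad, 1.0)
    return [
        (r, c)
        for r, row in enumerate(gray)
        for c, p in enumerate(row)
        if abs(p - baseline) > k * spread
    ]
```

On a uniform floor, the baseline tracks the floor color and the spread stays small, so even a single dark particle exceeds the threshold. On a patterned or textured floor, the pattern would inflate the spread estimate and could mask small particles. This is the kind of variability the controlled test environment is meant to remove.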

Changes to the existing system:

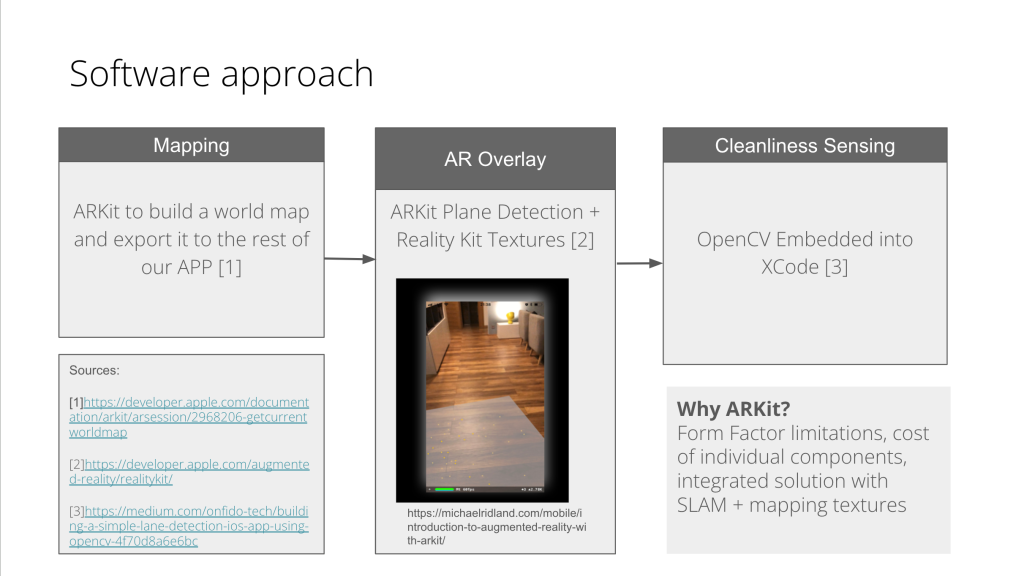

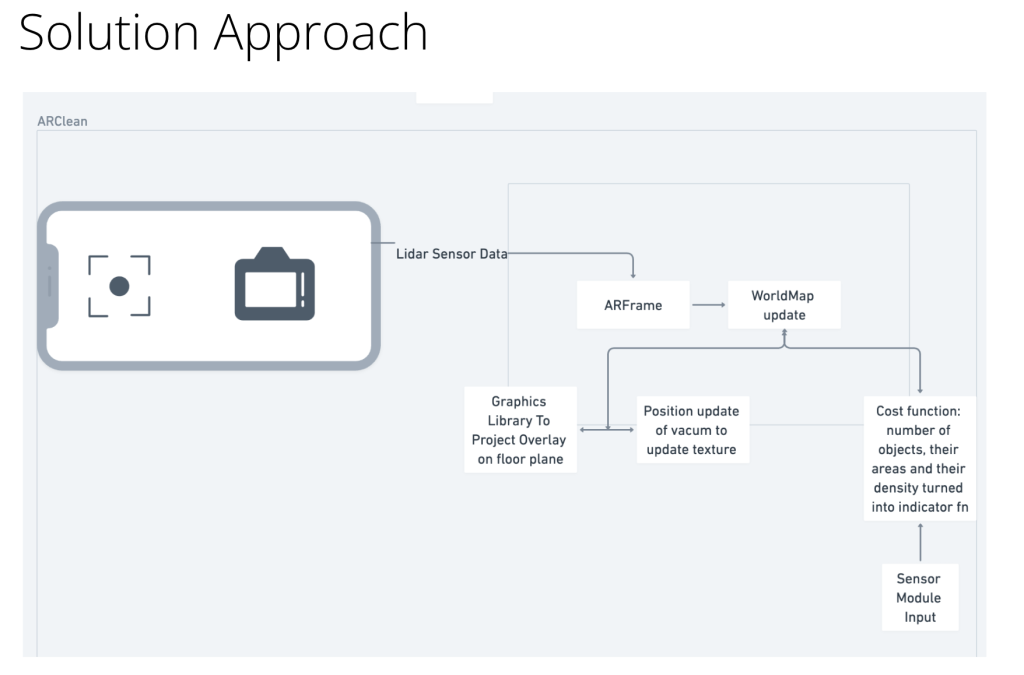

After iterating on our initial idea, we decided to decouple the computer vision and cleanliness detection component into a separate module to improve the separation of concerns in our system. This incurs the additional cost of purchasing hardware, plus some nominal compute to transmit this data to the phone. However, it makes cleanliness detection more feasible by separating this system from the phone's LiDAR and camera, which are mounted much higher off the floor. With this module, we can place it directly behind the vacuum head and get a more consistent observation point.

Scheduling:

Our schedule is currently unchanged. However, once we finalize our bill of materials and the ordering timelines for those parts, we are prepared to reorder tasks on our Gantt chart to minimize downtime while waiting for hardware components.