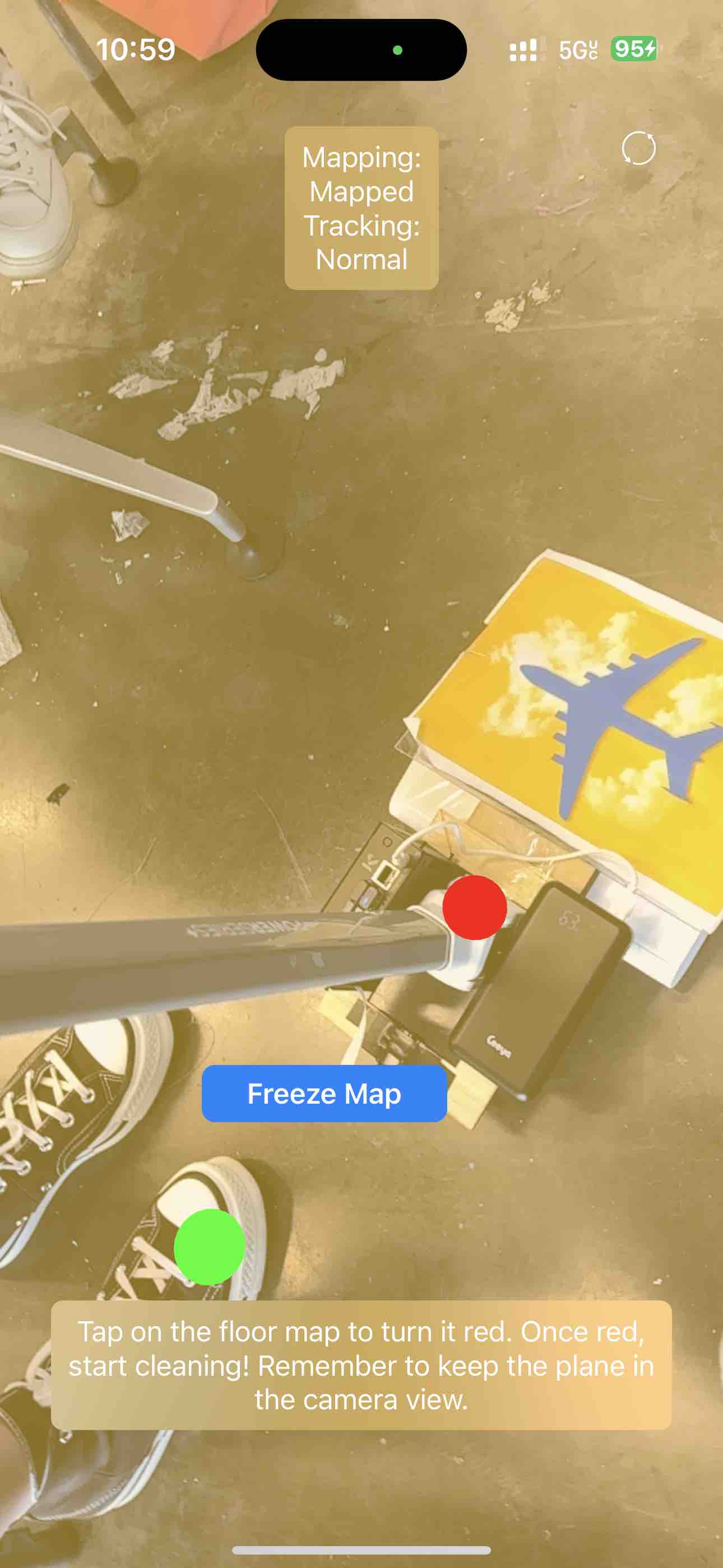

We held a long meeting this week to integrate all of the components of our system and test the end-to-end path of sensor messages being transmitted to the iPhone. Using the offsets that Nathalie measured from the constructed bounding box, I created an offset node. This node serves as a coordinate reference point for drawing a segment marked as dirty, based on the location of the Jetson camera view at a specific timestep.

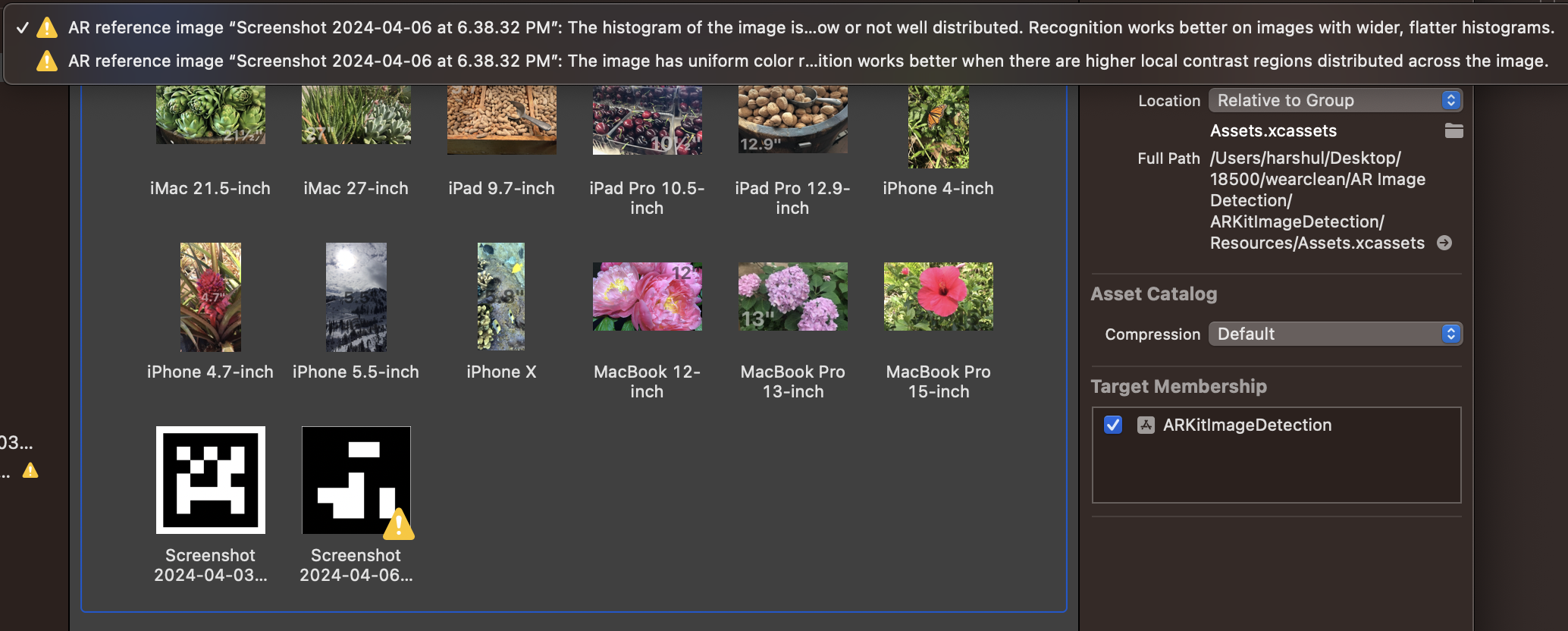

I worked on mounting the acrylic back plate for the tracking image onto the vacuum with hot glue, which gives the tracking image a consistent orientation and makes it easy to replace. We considered laminating the image, but the team raised concerns that glare could compromise the reliability of the tracking. Plates for the active illumination mounts are also ready to be mounted.

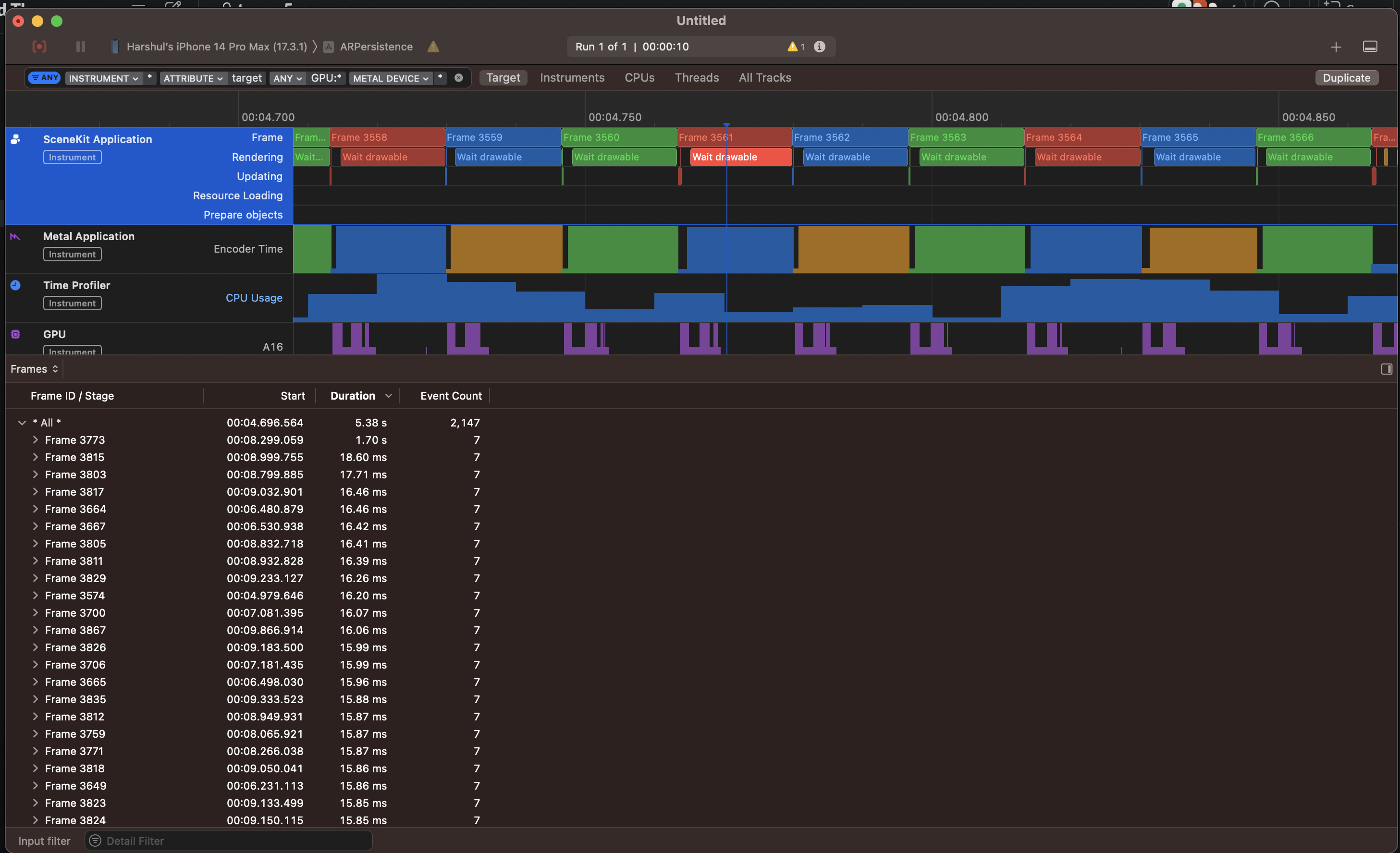

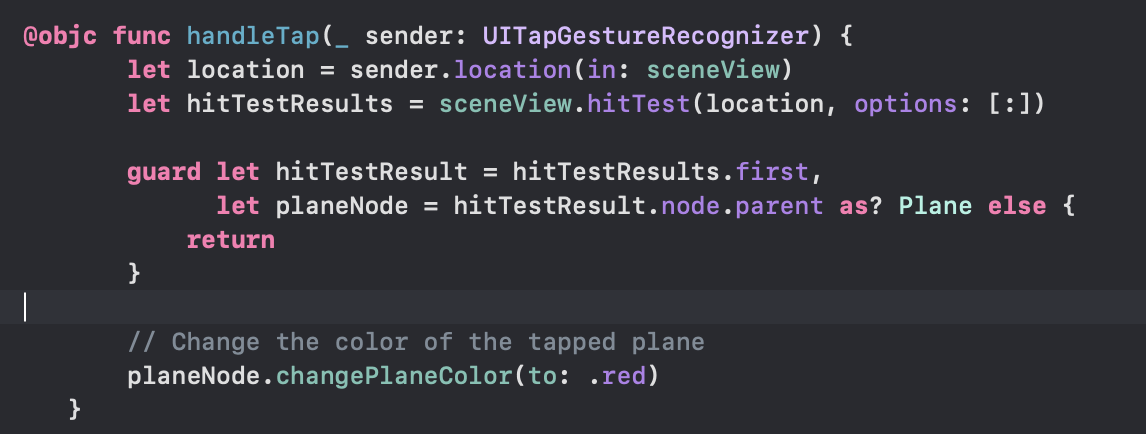

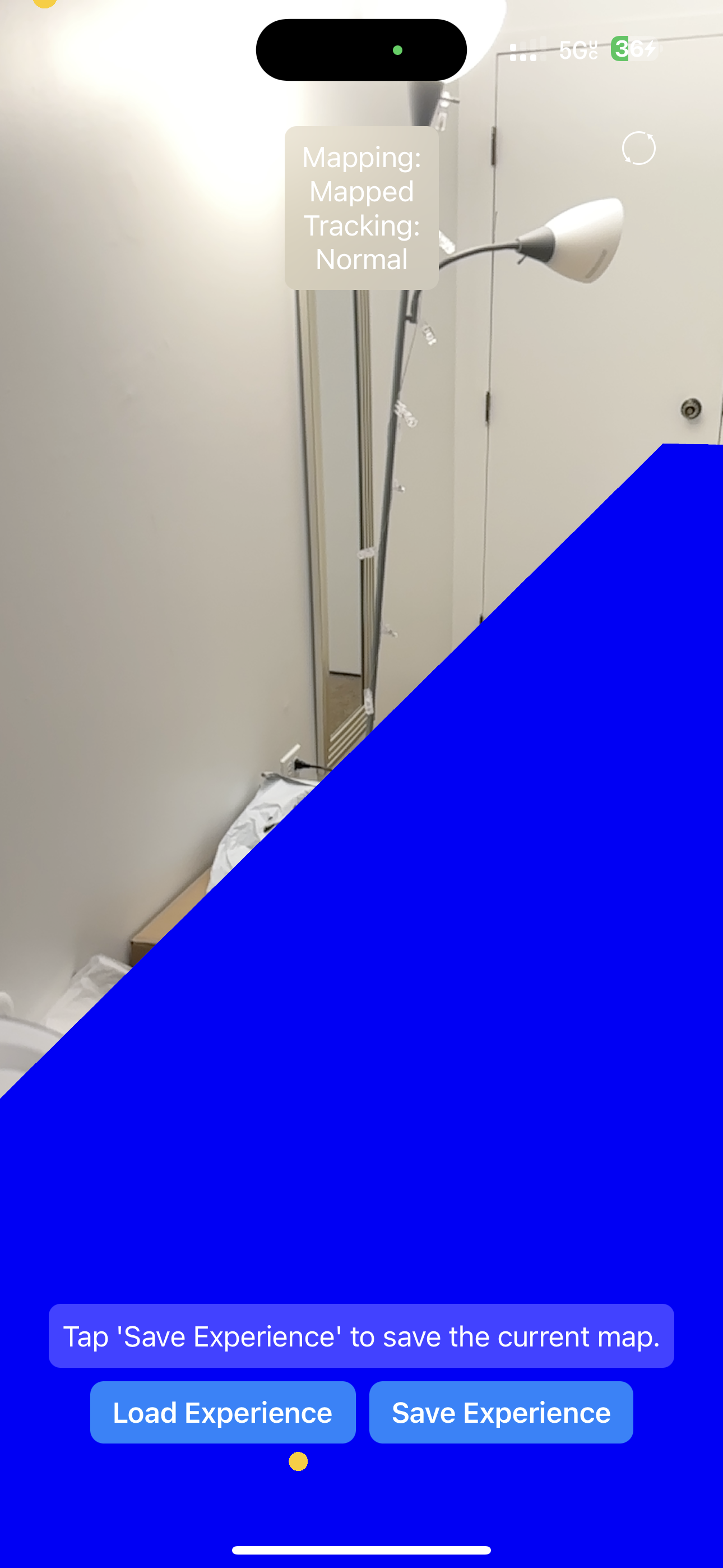

One issue with the existing design for coloring in dirty segments was a temporal reliance on a previous point in the queue, which gave segments inconsistent sizes depending on the motion of the vacuum over time. This resulted in both very narrow and very large slices, which aren't ideal. We also cannot offset these points arbitrarily, as the positions of the offset and sphere nodes in the world change over time. To fix this, I've been redesigning the queue structure to store a segment instead of a single position: each queue node now contains a start and an end coordinate encompassing the camera bounding box that Nathalie measured, which allows for more consistent recoloring. This redesign was made possible by the performance improvements we unlocked.
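As a rough illustration of the segment-based queue, here is a minimal Python sketch. It is not our app code: the names `Segment`, `segment_from_offset`, and `BOX_LENGTH_M`, the 2D floor-plane coordinates, and the 0.20 m box length are all assumptions made for the example. The point it shows is that each queue entry carries its own start and end, derived from a fixed box length, so its size no longer depends on the previous entry.

```python
import math
from collections import deque
from typing import NamedTuple, Tuple

Point = Tuple[float, float]  # (x, y) on the floor plane; assumed for this sketch

BOX_LENGTH_M = 0.20  # hypothetical camera bounding-box length, not a measured value


class Segment(NamedTuple):
    start: Point
    end: Point
    dirty: bool


def segment_from_offset(offset: Point, forward: Point, dirty: bool,
                        box_length: float = BOX_LENGTH_M) -> Segment:
    """Build a fixed-length segment centered on the offset node position."""
    norm = math.hypot(forward[0], forward[1])
    if norm == 0:
        raise ValueError("forward direction must be non-zero")
    ux, uy = forward[0] / norm, forward[1] / norm  # unit heading
    half = box_length / 2
    start = (offset[0] - ux * half, offset[1] - uy * half)
    end = (offset[0] + ux * half, offset[1] + uy * half)
    return Segment(start, end, dirty)


def segment_length(seg: Segment) -> float:
    return math.hypot(seg.end[0] - seg.start[0], seg.end[1] - seg.start[1])


# Each entry is self-contained, so slow and fast vacuum motion give equal sizes.
queue = deque(maxlen=64)
queue.append(segment_from_offset((0.0, 0.0), (1.0, 0.0), dirty=True))
queue.append(segment_from_offset((0.01, 0.0), (1.0, 0.0), dirty=False))  # slow
queue.append(segment_from_offset((1.50, 0.4), (3.0, 4.0), dirty=True))   # fast
```

Because the start and end are captured at the moment the entry is created, they do not need to be re-derived later from nodes whose world positions have since moved.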

In our integration meeting we sat down to profile the Python code on the Jetson and discovered a result contrary to our working hypothesis about the latency of the BLE messages. Bluetooth transmission was taking on the order of milliseconds, but the dirt detection step was taking a lot of time. This was because the camera library takes multiple seconds to open and close the camera connection, and that was happening every time we tried to send a message: the subprocess script was executing the Python file on every iteration, which added process startup overhead as well. We needed this subprocess separation because the camera had to run under Python 3.6, unlike the BLE server, which depended on asyncio in Python 3.8. I proposed having the camera script block on stdin with an input command until it receives input, so that the camera is instantiated only once instead of every time we transmit a message. We worked together to implement this change, and it led to a considerable speedup, from 5 seconds per message down to ~0.1-0.2s per message.
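The stdin-blocking pattern can be sketched roughly as follows. This is a simplified stand-in rather than our actual script: `FakeCamera`, `detect_dirt`, `serve`, and the `capture`/`quit` command strings are hypothetical names for the example, and the real camera library calls are not shown. The worker opens the camera once, then blocks on `readline()` until the parent process writes a command.

```python
class FakeCamera:
    """Hypothetical stand-in for the real camera handle (slow to open/close)."""
    opened = 0

    def __init__(self):
        FakeCamera.opened += 1  # count how many times the camera is opened

    def capture(self):
        return [0, 0, 0]  # placeholder frame

    def close(self):
        pass


def detect_dirt(frame):
    # Placeholder for the dirt detection step; always reports "clean" here.
    return "clean"


def serve(inp, out, open_camera=FakeCamera, detect=detect_dirt):
    """Open the camera once, then answer one result line per 'capture' line."""
    camera = open_camera()  # expensive setup happens a single time
    handled = 0
    try:
        # readline() blocks until the parent writes a line; "" means EOF.
        for line in iter(inp.readline, ""):
            command = line.strip()
            if command == "quit":
                break
            if command != "capture":
                continue  # ignore anything unrecognized
            out.write(detect(camera.capture()) + "\n")
            out.flush()  # flush so the parent sees the result immediately
            handled += 1
    finally:
        camera.close()  # expensive teardown also happens a single time
    return handled
```

In a real worker the entry point would call `serve(sys.stdin, sys.stdout)` under the older interpreter, and the BLE server would start it once with `subprocess.Popen(..., stdin=PIPE, stdout=PIPE)` and write `capture` followed by a newline whenever it needs a reading. Flushing after each write matters on both sides, since otherwise pipe buffering can make the worker look stalled.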

Next steps involve collaborating with Nathalie and Erin on testing the new queue and fine-tuning the camera parameters. Looking at the bigger picture, structuring our poster, collecting footage, and testing on our poster board will be high priorities.