I spent most of my time this week writing the design report, primarily the testing and verification section. Our group had thought through testing protocols for multiple use-case requirements, but I found scenarios we had failed to consider; for example, I added the section on camera positioning. In addition, much of our existing testing and verification content had to be refined, because our tests were not specified at a fine enough granularity. I redesigned several of the testing methods and added a couple that we had not covered in depth in our presentations.

The Jetson camera mount test is a new component of our Testing and Verification section that we had not considered before. I designed this entire section this week and have started executing the plan. We had briefly discussed how to mount the camera, but had never worked through the details of the design. While creating the testing plan, I realized that the camera component would carry additional costs and that mounting it could introduce other complications, so I brainstormed contingency plans. For example, the camera would be mounted separately from the Jetson computer itself, both to reduce the risk of overheating and to keep the computer away from external sources of interference. If we mount the computer higher than the camera, we will need a longer cable.

I also designed the camera-mounting test plan from scratch. We need to find the optimal camera angle so that the camera captures the entire area covered by the vacuum, and we need to tune the camera's height so that dirt particles and vibrations from the vacuum itself do not cause excessive interference. To account for these factors, I created a testing plan covering eight camera angles and three height configurations. I chose these numbers based on a few simple placement tests I ran with my group using the hardware we already had. The next step, which I hope to complete by the end of the week, is to carry out this test and begin integrating the camera hardware into the existing vacuum system. If we need a gimbal or a specialized 3D-printed mount, I plan to ask Harshul to design it, as he has more experience in this area than the rest of us.

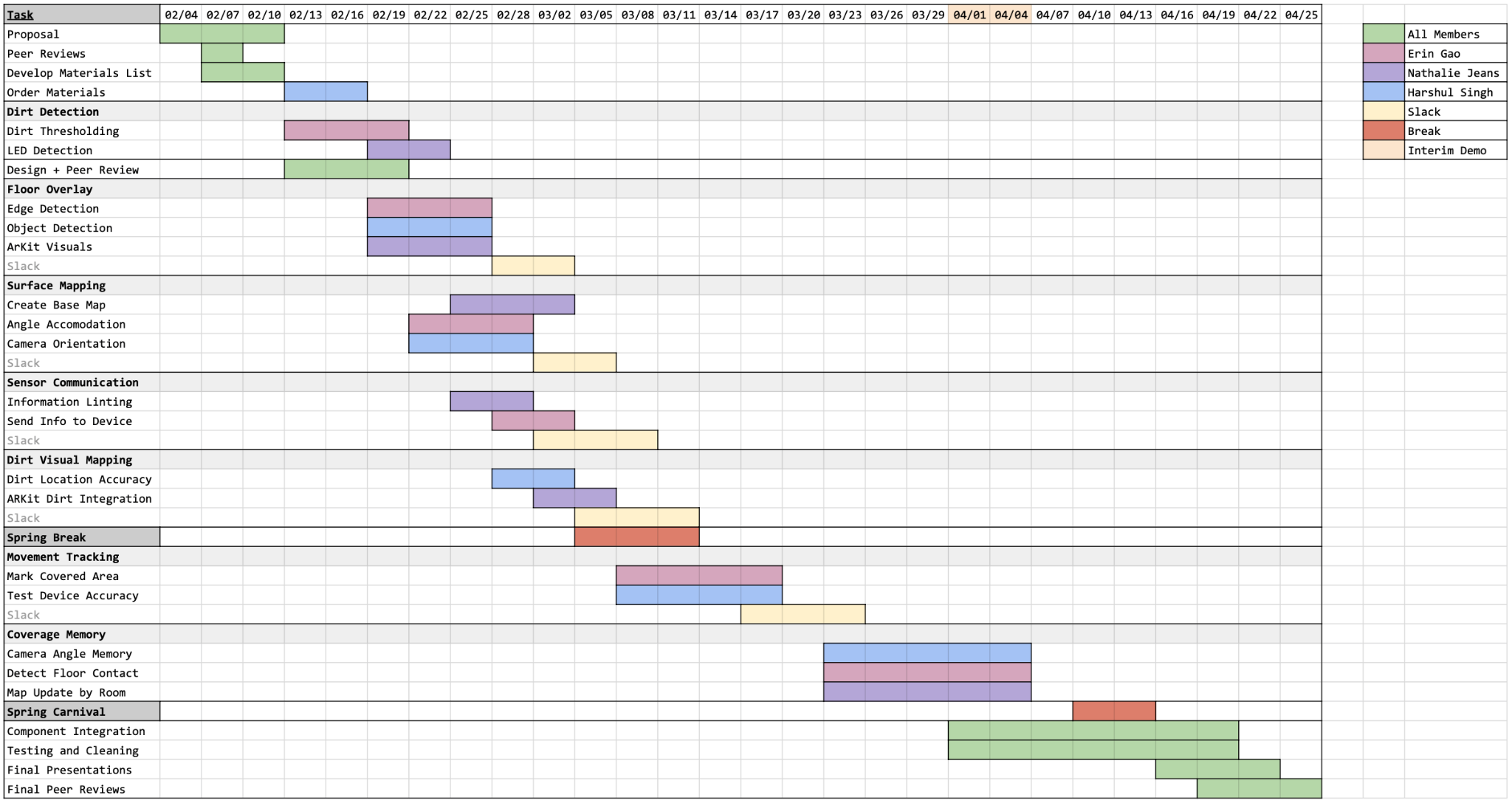
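As a rough illustration of how the camera-mount test plan could be organized, the Python sketch below enumerates the trial configurations. It assumes every one of the eight angles is tried at each of the three heights (a full grid, which the plan does not state explicitly), and the specific angle and height values are hypothetical placeholders, since the plan fixes only the counts.

```python
from itertools import product

# Hypothetical placeholder values: the plan specifies only the counts
# (eight angles, three heights), not the actual settings.
ANGLES_DEG = [0, 10, 20, 30, 40, 50, 60, 70]
HEIGHTS_CM = [10, 20, 30]


def build_test_matrix(angles, heights):
    """Return one trial configuration per (angle, height) pair.

    Assumes a full grid: every angle is tested at every height.
    """
    return [{"angle_deg": a, "height_cm": h} for a, h in product(angles, heights)]


configs = build_test_matrix(ANGLES_DEG, HEIGHTS_CM)
print(len(configs))  # 24 trials under the full-grid assumption
```

Listing the trials up front makes it easy to record coverage and interference observations per configuration and to see which combinations remain untested.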

Our group is on schedule, although the order of some components of our project has been switched around. After accounting for slack time, we are in good shape to produce a demonstrable product by the demo deadline, which is about a month away. That said, I would like to assemble some of the hardware components sooner rather than later, as I foresee the Jetson mounts causing issues, and I hope to sort out these details while we still have time to experiment.

I also plan to set up the AR system so I can test it on my own device. Nathalie and Harshul recently discovered that LiDAR is not strictly necessary to run the AR scripts we plan to integrate into our project; the LiDAR scanner only improves speed and accuracy. We would still not use my phone to demonstrate the project, but this means I can contribute more to the AR development. I hope to have this fully set up within the week, although I do not expect it to block anyone in our group.

I recently created a private GitHub repository and pushed all of our existing dirt detection code to it so everyone can access it. Once Harshul has refined his code for the AR component, I will be able to pull it and try running it on my own device. Our group has also agreed on GitHub etiquette: once software development ramps up, each of us will push to our own branch and open pull requests to merge changes into the main branch. For now, we are keeping the process informal, since we are working on non-overlapping parts of the codebase.