Schedule

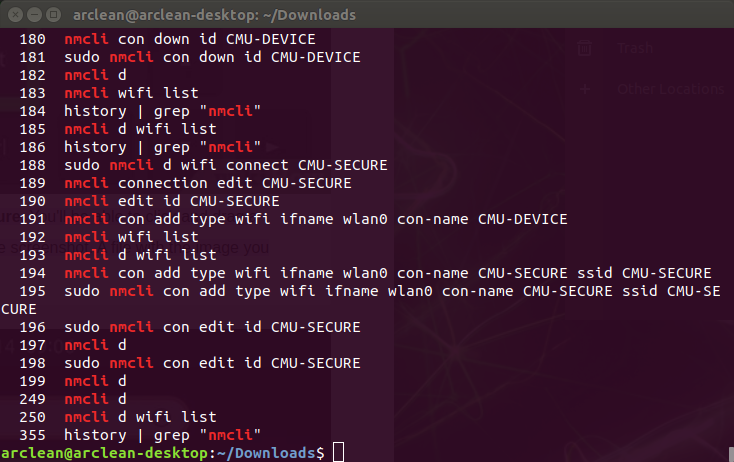

Now that we are in the final stretch, we need to test the full system and continue working on our remaining tasks: drawing accurately placed rectangular red segments, and fine-tuning how often we send and receive data now that this is no longer a limiting factor. We are also improving the UI so that it is instructive and intuitive on demo day. Beyond these technical improvements, we need to start on our presentation materials: the final video, the poster, and the written report.

Risks & Challenges

We still have a little work left to accurately reflect the dirty and clean space on the AR side. The bounding boxes do not align perfectly yet, so we are still tuning values in our AR app so that the camera view matches the response in the iPhone app. Erin and Harshul are tuning these parameters, and Nathalie is putting the final touches on the UI. We still have a couple of final tests to run, and we need to mount the active illumination on the system. However, we don’t expect any of these tasks to be blocking.

Validation:

- Line Tracking Test

Using masking tape, create a one-meter straight section and a zig-zag section of four 90° bends, each half a meter long. Using four 8.5″x11″ US Letter sheets, print a curved line section of the same width as the masking tape, and assemble the sheets into a contiguous line. Then drive the vacuum over the line and verify that the drawn line tracks the reference lines with smooth connectivity and never deviates from them by more than 5 cm in perpendicular distance.
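
One way to automate the 5 cm check is to compute, for each tracked point, its perpendicular distance to the nearest segment of the reference path and take the worst case. This is a minimal sketch assuming both the drawn line and the reference path are available as 2D floor coordinates in meters; the function names and sample coordinates are hypothetical and not taken from our app.

```python
import math

Point = tuple[float, float]

TOLERANCE_M = 0.05  # 5 cm perpendicular-deviation limit from the test spec


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b (meters)."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: fall back to point-to-point distance.
        return math.hypot(px - ax, py - ay)
    # Project p onto the infinite line through a and b, then clamp to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def max_deviation(tracked: list[Point], reference: list[Point]) -> float:
    """Largest distance from any tracked point to the reference polyline."""
    segments = list(zip(reference, reference[1:]))
    return max(
        min(point_segment_distance(p, a, b) for a, b in segments)
        for p in tracked
    )


# Hypothetical reference: 1 m straight, then four 90-degree bends with 0.5 m legs.
reference = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.5), (1.5, 0.5), (1.5, 1.0), (2.0, 1.0)]
# Hypothetical points sampled from the line drawn in the AR app.
tracked = [(0.2, 0.01), (0.9, -0.03), (1.02, 0.3), (1.3, 0.52), (1.48, 0.8), (1.9, 1.04)]

deviation = max_deviation(tracked, reference)
print(f"max deviation: {deviation * 100:.1f} cm, pass: {deviation <= TOLERANCE_M}")
```

Using the worst case rather than an average matches the wording of the requirement, which forbids any single deviation above 5 cm.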

Results: Verified that we are able to track a range of motions, from straight lines to sharp curves. Instead of mocking this up on paper, we used TechSpark’s robot-tracking tape path, which has a geometry similar to the one outlined in the test.

- Technical Requirements Tracking Test

In our design report, we specified a 5 cm radius for the vacuum head’s suction. With that radius set on the SCNLine, the drawn lines should cover the full length of the vacuum head. We also specified a 0.5 s latency requirement for tracking. To test this, we move the vacuum in a straight line for two meters and verify that, once we stop, the entire motion appears in the AR application within 0.5 seconds and accurately reflects the movement.
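
A minimal sketch of how the 0.5 s check could be scored, assuming each two-meter run logs the time the vacuum stops and the time the final line segment is rendered in the AR app. The `check_latency` helper and the timestamps are hypothetical.

```python
LATENCY_LIMIT_S = 0.5  # tracking latency requirement from the design report


def check_latency(trials: list[tuple[float, float]]) -> tuple[float, bool]:
    """Each trial is (time motion stopped, time final segment rendered), in seconds.

    Returns the worst-case lag and whether every trial met the limit.
    """
    lags = [rendered - stopped for stopped, rendered in trials]
    worst = max(lags)
    return worst, worst <= LATENCY_LIMIT_S


# Hypothetical timestamps from three straight-line runs.
trials = [(10.00, 10.12), (25.40, 25.61), (41.75, 41.90)]
worst, passed = check_latency(trials)
print(f"worst lag: {worst:.2f} s, pass: {passed}")
```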

Results: Tracking is in real time, and the drawn line is continuous with no stuttering. This test informed our decision to switch to SCNLine from our previous naive implementation using segmented cylinders.

- Plane Projection Verification

We will draw a test plane offset from the floor plane by 5 cm plus the radius of the SCNLine. We then place the phone so that the camera touches the floor and is perpendicular to it. On visual inspection, no part of the drawn shape should touch or extend past this test plane.
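
The projection being verified can be illustrated with the standard orthogonal point-to-plane projection: a point p is mapped onto the plane through p₀ with unit normal n as p − ((p − p₀)·n)n. The sketch below uses plain tuples instead of ARKit/SceneKit types; the y-up world with the floor at y = 0, the helper names, and the sample points are assumptions. It also checks that the top of the drawn tube (height above the floor plus the line radius) stays below the test plane.

```python
Vec3 = tuple[float, float, float]

FLOOR_POINT: Vec3 = (0.0, 0.0, 0.0)
FLOOR_NORMAL: Vec3 = (0.0, 1.0, 0.0)  # assumed y-up world, floor at y = 0
LINE_RADIUS_M = 0.05                   # SCNLine radius set to the 5 cm suction radius
TEST_PLANE_OFFSET_M = 0.05 + LINE_RADIUS_M


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def signed_height(p: Vec3) -> float:
    """Signed distance from p to the floor plane along its unit normal."""
    rel = (p[0] - FLOOR_POINT[0], p[1] - FLOOR_POINT[1], p[2] - FLOOR_POINT[2])
    return dot(rel, FLOOR_NORMAL)


def project_onto_floor(p: Vec3) -> Vec3:
    """Orthogonally project p onto the floor plane."""
    d = signed_height(p)
    n = FLOOR_NORMAL
    return (p[0] - d * n[0], p[1] - d * n[1], p[2] - d * n[2])


# Hypothetical tracked positions at varying heights above the floor.
tracked = [(0.0, 0.31, 0.0), (0.4, 0.29, 0.1), (0.8, 0.33, 0.2)]
projected = [project_onto_floor(p) for p in tracked]

# Every drawn point, padded by the line radius, must stay below the test plane.
within = all(signed_height(q) + LINE_RADIUS_M < TEST_PLANE_OFFSET_M for q in projected)
print(projected, within)
```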

Results: Verified that this projection method works, and that it remains reliable after switching from a hit-test to a plane projection.

- BLE Connection

Send dirty/clean messages thirty times and record the delay for each one. We then compute summary statistics over the collected data; assuming the standard deviation is small, we can focus on the mean. We hope for a mean of around 0.1 s, which corresponds to 10 updates per second (fps = 10). This may not be achievable given the constraints of BLE, but these tests will tell us what our system can achieve.
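
The summary statistics described above can be computed with Python’s standard `statistics` module. This is a sketch under the assumption that each recorded delay is stored in seconds; the `LatencySummary` type and the sample values are hypothetical. The coefficient of variation (standard deviation divided by mean) gives a quick way to judge whether the spread is small enough to rely on the mean.

```python
import statistics
from dataclasses import dataclass


@dataclass
class LatencySummary:
    n: int
    mean_s: float
    stdev_s: float

    @property
    def updates_per_s(self) -> float:
        # A mean delay of 0.1 s corresponds to 10 updates per second.
        return 1.0 / self.mean_s

    @property
    def cv(self) -> float:
        # Coefficient of variation: spread relative to the mean.
        return self.stdev_s / self.mean_s


def summarize(latencies_s: list[float]) -> LatencySummary:
    return LatencySummary(
        n=len(latencies_s),
        mean_s=statistics.mean(latencies_s),
        stdev_s=statistics.stdev(latencies_s),  # sample standard deviation, needs n >= 2
    )


# Hypothetical per-message delays in seconds.
samples = [0.09, 0.11, 0.10, 0.12, 0.08, 0.10]
summary = summarize(samples)
print(f"n={summary.n} mean={summary.mean_s:.3f}s stdev={summary.stdev_s:.3f}s "
      f"rate={summary.updates_per_s:.1f}/s cv={summary.cv:.2f}")
```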

Results: We ran the tests and collected over two hundred latency samples. We achieved the desired 0.1 s latency. The bottleneck is now the camera’s frame rate, which is 30 fps.

- Dirt Detection

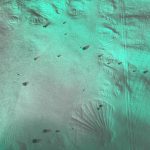

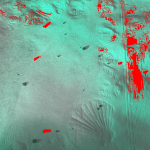

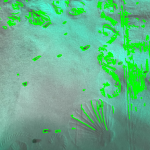

Take ten photos each of (1) a clean floor, (2) an unclean floor with sparse dirt, and (3) an unclean floor with heavy dirt. Verify that the dirt detection module classifies all ten images of the clean floor as “clean” and all twenty images of the unclean floor as “dirty”. If this is not met, we will need to further tune the thresholds of the dirt detection algorithm.
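
The pass criterion above, and rates like those reported in the results, can be computed from per-image labels and predictions. This sketch assumes “dirty” is the positive class and that FPV and FNV denote the false-positive rate (clean images classified dirty) and false-negative rate (dirty images classified clean); the `score` helper and the predictions are hypothetical.

```python
def score(labels: list[str], predictions: list[str]) -> dict[str, float]:
    """labels and predictions are 'clean' or 'dirty'; 'dirty' is the positive class."""
    pairs = list(zip(labels, predictions))
    on_clean = [pred for truth, pred in pairs if truth == "clean"]
    on_dirty = [pred for truth, pred in pairs if truth == "dirty"]
    false_pos = sum(pred == "dirty" for pred in on_clean)
    false_neg = sum(pred == "clean" for pred in on_dirty)
    correct = sum(truth == pred for truth, pred in pairs)
    return {
        "FPV": false_pos / len(on_clean),
        "FNV": false_neg / len(on_dirty),
        "ACC": correct / len(pairs),
    }


# Hypothetical run following the protocol: 10 clean images, 20 dirty images.
labels = ["clean"] * 10 + ["dirty"] * 20
predictions = ["clean"] * 10 + ["dirty"] * 18 + ["clean"] * 2
metrics = score(labels, predictions)

# The protocol requires every image to be classified correctly.
passed = metrics["FPV"] == 0.0 and metrics["FNV"] == 0.0
print(metrics, "pass:", passed)
```

In this hypothetical run the two missed dirty images would fail the test and call for threshold tuning.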

Results: After running our script on two different algorithms, we achieved the following results. Based on these values, we have chosen the second script.

| Metric | First script | Second script (chosen) |
|---|---|---|
| FPV | 81.82% | 0.00% |
| FNV | 0.00% | 9.52% |
| ACC | 71.88% | 93.75% |

- Camera Bounding Box Test

Set up the vacuum system on a table in TechSpark and place small red rods to mark the borders of the camera’s field of view. Accurately determining these borders lets us refine the cropping and granularity of our image capture.
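
Once the rod positions are known in pixel coordinates, the crop reduces to a bounding box plus slicing. A minimal sketch, assuming the rods are located by hand or by a separate detector and that a frame is a list of pixel rows; `CropBox`, `box_from_markers`, and all values are hypothetical, not our measured parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CropBox:
    x0: int  # left edge (inclusive), pixels
    y0: int  # top edge (inclusive)
    x1: int  # right edge (exclusive)
    y1: int  # bottom edge (exclusive)


def box_from_markers(markers: list[tuple[int, int]], width: int, height: int) -> CropBox:
    """Smallest box containing every marker pixel, clamped to the frame."""
    xs = [x for x, _ in markers]
    ys = [y for _, y in markers]
    return CropBox(
        x0=max(0, min(xs)),
        y0=max(0, min(ys)),
        x1=min(width, max(xs) + 1),
        y1=min(height, max(ys) + 1),
    )


def crop(frame: list[list[int]], box: CropBox) -> list[list[int]]:
    # For a NumPy image array the equivalent is frame[box.y0:box.y1, box.x0:box.x1].
    return [row[box.x0:box.x1] for row in frame[box.y0:box.y1]]


# Hypothetical 6x8 grayscale frame and rod (marker) pixel positions.
frame = [[10 * r + c for c in range(8)] for r in range(6)]
markers = [(2, 1), (5, 1), (2, 4), (5, 4)]
box = box_from_markers(markers, width=8, height=6)
patch = crop(frame, box)
print(box, patch)
```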

Results: We have now determined the correct parameters to use when cropping the camera field of view in our dirt detection algorithm.

- Jetson Mounting Tests

Note that these tests were very prototype-focused. We cut cardboard pieces with a knife to identify shape and stiffness requirements. We then cut the pieces out of both acrylic and plywood and tested that the components had enough give to still function modularly. We tested mounting our components with tape, hot glue, and epoxy. The tape was flimsy and too vulnerable to jostling to hold our main mount, so we opted for hot glue and/or epoxy for fixed components. Initial testing with the first mount iterations showed that the wheels jostled the mount, so we fabricated standoffs to account for this.

Results: The camera configuration’s cropping and resolution were initially too narrow. We made design changes and updated the camera configuration to capture a bounding box sized to match the width of the vacuum.

- Camera Mounting

We tested various camera angle configurations against the amount of dirt captured to identify the optimal mounting orientation.

Results: We used this test to select our final mounting orientation for the Jetson camera.

- Battery Life Test

We start the Jetson from a full charge and run the entire end-to-end system for five minutes. After the five minutes are up, we check the Jetson’s remaining charge. A charge depletion of less than 2.08% indicates a successful result.

Results: Over a span of thirty minutes, the power bank battery was depleted by only about 10% (across four independent trials). This satisfies our battery life requirement.
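
The 2.08% threshold is a per-five-minute drain rate: 100% / 2.08% ≈ 48 five-minute intervals, or about four hours from a full charge. The sketch below normalizes the thirty-minute measurement to the same five-minute basis and extrapolates total runtime, assuming depletion is roughly linear; the helper names are hypothetical.

```python
THRESHOLD_PCT_PER_5_MIN = 2.08  # success criterion from the test plan


def drain_per_5_min(depleted_pct: float, duration_min: float) -> float:
    """Normalize a measured drain to percent per five minutes (assumes linear drain)."""
    return depleted_pct / duration_min * 5.0


def estimated_runtime_h(depleted_pct: float, duration_min: float) -> float:
    """Hours to drain a full charge at the measured rate (linear extrapolation)."""
    return 100.0 / depleted_pct * duration_min / 60.0


# Reported measurement: about 10% depleted over thirty minutes.
rate = drain_per_5_min(10.0, 30.0)
hours = estimated_runtime_h(10.0, 30.0)
required_h = 100.0 / THRESHOLD_PCT_PER_5_MIN * 5.0 / 60.0
print(f"{rate:.2f}% per 5 min (limit {THRESHOLD_PCT_PER_5_MIN}%), "
      f"about {hours:.1f} h vs. {required_h:.1f} h implied by the threshold")
```

At roughly 1.67% per five minutes, the measured drain sits comfortably under the 2.08% limit.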