This week I mainly worked on the core feature: drawing on the floor plane, using the position of our phone in the environment as a reference point. SceneKit allows projecting elements from the 2D coordinate frame of the ‘view’ (in this case, the screen) into a 3D world plane using the ‘unprojectOntoPlane’ method. My first approach was to take the camera coordinates, convert them into 2D screen coordinates, and then perform the projection. However, there seemed to be a disconnect between the viewport and the camera’s coordinates, so I pivoted to using the center of the screen as the base point.

To make sure I only project onto the desired floor plane, I updated my tap gesture to set a projectedPlane variable and a selectedPlane flag. The projection logic is only enabled once a plane has been selected, and the projection renderer can then access the plane object. I then perform a hit test, which sends a ray from that 2D point into the 3D world and returns the AR objects it passes through. To make planes uniquely identifiable and comparable, I added a uuid field to the plane class, which I check to ensure I’m only drawing on the correct plane. The hit test result includes the world coordinates at which the ray intersected the plane, which I then pass into a function to draw.
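To make the hit test step concrete, here is a minimal sketch of how the center-of-screen hit test with a uuid check could look. It is not our actual code: PlaneNode and drawPoint are hypothetical names, and it assumes the plane's geometry is attached directly to the node that carries the uuid.

```swift
import SceneKit

// Hypothetical stand-in for the plane class described above:
// an SCNNode that carries a unique identifier.
final class PlaneNode: SCNNode {
    let uuid = UUID()
}

/// Returns the world-space point where a ray through the center of the view
/// hits `selectedPlane`, or nil if no plane is selected or the ray misses it.
func drawPoint(in sceneView: SCNView, on selectedPlane: PlaneNode?) -> SCNVector3? {
    // Only project once a plane has been chosen (e.g. by the tap gesture).
    guard let plane = selectedPlane else { return nil }

    // Use the center of the view as the 2D base point.
    let center = CGPoint(x: sceneView.bounds.midX, y: sceneView.bounds.midY)

    // Ask for every node along the ray, not just the closest one, so that
    // shapes already drawn in front of the plane do not hide it.
    let results = sceneView.hitTest(
        center,
        options: [.searchMode: SCNHitTestSearchMode.all.rawValue]
    )

    // Keep only the hit that belongs to the selected plane.
    for result in results {
        if let hit = result.node as? PlaneNode, hit.uuid == plane.uuid {
            return result.worldCoordinates
        }
    }
    return nil
}
```

If the plane geometry lives on a child node instead, the uuid check would need to walk up to the parent before comparing.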

Video of Drawing on plane with circles

Video of Drawing on plane with connected cylindrical segments

Functionally this worked, but there was a notable performance drop. I tried introducing a time interval to reduce the frequency of the hit tests, and drawing spheres, which are more shader-optimized than cylinders, but there is definitely more work to be done.
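The time-interval idea can be sketched as a small gate that lets a hit test through at most once per interval. This is an illustration only: Throttle and shouldFire are hypothetical names, and the interval value is a guess that would need tuning.

```swift
import Foundation

/// Gate that lets an action through at most once per `minInterval` seconds.
struct Throttle {
    let minInterval: TimeInterval
    private var lastFire: TimeInterval? = nil

    init(minInterval: TimeInterval) {
        self.minInterval = minInterval
    }

    /// Returns true and records `now` if at least `minInterval` seconds
    /// have passed since the last accepted call; otherwise returns false.
    mutating func shouldFire(at now: TimeInterval) -> Bool {
        if let last = lastFire, now - last < minInterval {
            return false
        }
        lastFire = now
        return true
    }
}
```

In a render callback that receives a timestamp, the idea would be to call shouldFire(at:) with that timestamp and skip the hit test and drawing whenever it returns false.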

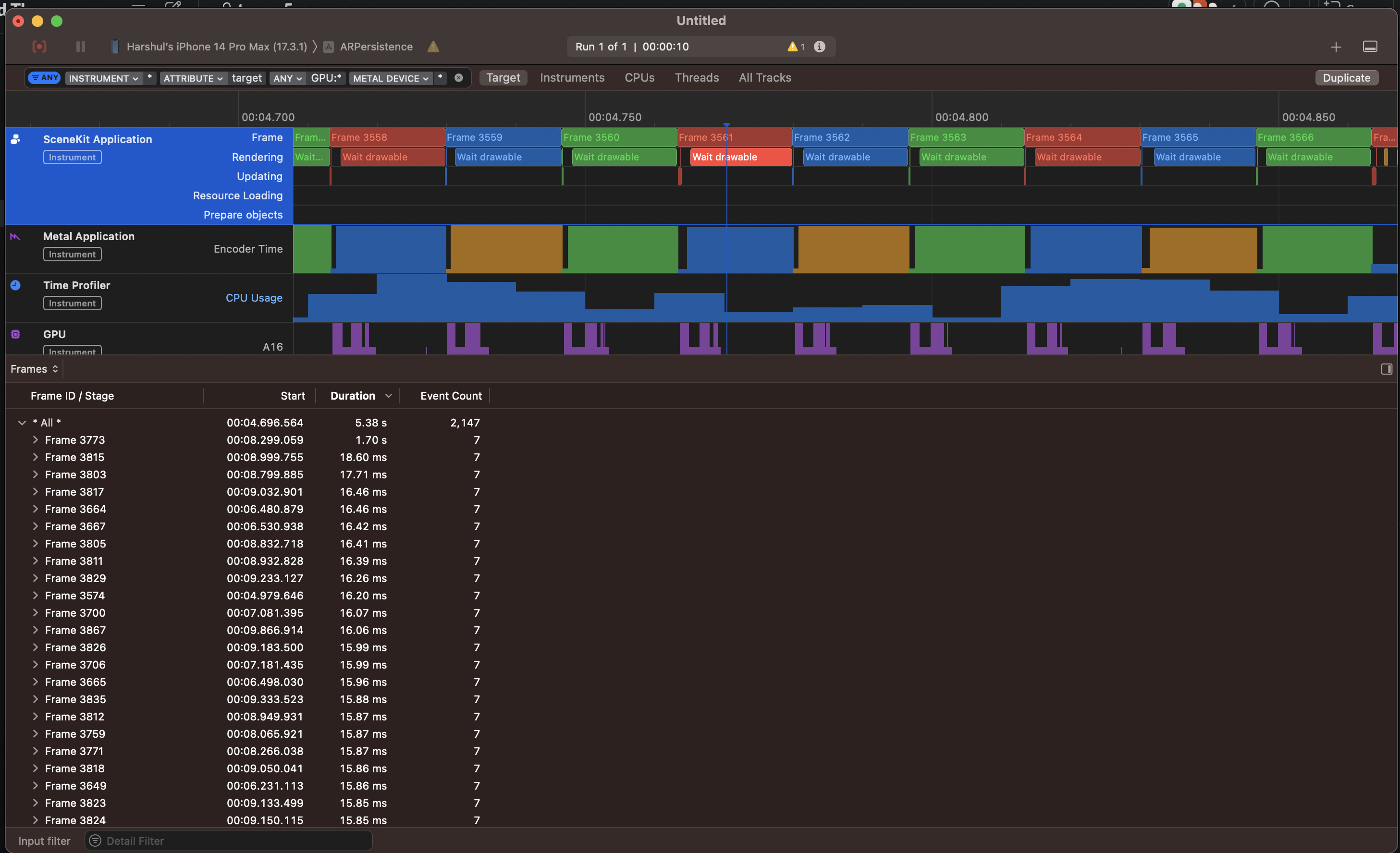

I then profiled the app. For some reason the debug symbols did not export, but the profile still showed a delay in ‘wait for drawable’.

Researching this, I found that repeatedly creating and adding unique shapes is not as performant as stitching them together. Additionally, performing physics or math calculations in the renderer (non-GPU ones, at least) can cause this kind of performance impact. In discussing these findings with Nathalie, we identified two candidate approaches that we could work on in parallel to cover our bases on the performance bottleneck. The first approach consists of two key changes. First, I’m going to try to move the hit test outside of the renderer. Second, Nathalie pointed out that the child nodes in our initial plane detection did not have this impact on performance, so I plan on performing a coordinate transform and drawing the shapes as child nodes to see if that reduces the load on the renderer. I will also experiment with SceneKit’s flattened clone to coalesce these shapes.
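As a rough sketch of the child-node idea, the snippet below converts a world-space hit point into a container node's local space, adds a sphere there, and then merges the container with a flattened clone. The function names, the separate container node for strokes, and the sphere radius are all assumptions for illustration, and I have not yet measured whether this helps.

```swift
import SceneKit

/// Adds a small sphere at `worldPosition` as a child of `container`,
/// converting the position into the container's local space first.
func addStroke(at worldPosition: SCNVector3,
               to container: SCNNode,
               radius: CGFloat = 0.005) -> SCNNode {
    let sphere = SCNNode(geometry: SCNSphere(radius: radius))
    // Passing nil as the source node means "convert from world space".
    sphere.position = container.convertPosition(worldPosition, from: nil)
    container.addChildNode(sphere)
    return sphere
}

/// Replaces a container of stroke nodes with a single flattened copy,
/// so the renderer has one node to draw instead of many.
func coalesce(_ strokes: SCNNode) -> SCNNode {
    let merged = strokes.flattenedClone()
    // No-op if the container has not been attached to a parent yet.
    strokes.parent?.replaceChildNode(strokes, with: merged)
    return merged
}
```

Keeping the strokes in their own container, as a child of the plane node, means flattening merges only the drawn shapes and leaves the plane itself untouched.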

The second approach is to draw a single parametric line. When looking up native parametric line implementations in Xcode, I could only find Bezier curves in the UI library. We did find a package, SceneLine, that implements parametric lines in SceneKit. Nathalie is going to install and experiment with it to see if we get a performance speedup by having one node that we update instead of a new node for every shape.

Next steps involve addressing this performance gap in time for our demo and syncing with Nathalie on our optimization findings. After the demo, our next highest priority is integrating Nathalie’s freeze frame feature as well as the Bluetooth messages, since Professor Kim pointed out that integration will take more time than we anticipate. Eventually the line will need to be invisible, with planes placed along its coordinates and oriented correctly. That should become possible once we can draw in a performant way.