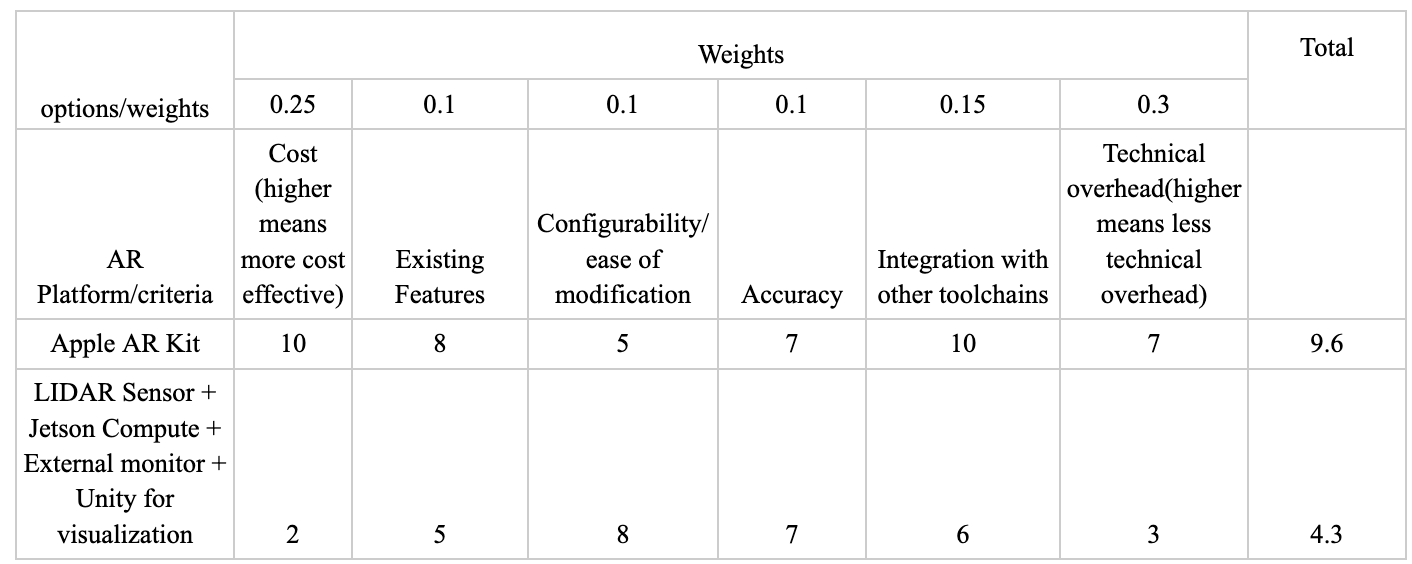

I spent the first part of this week brainstorming and researching the ethical implications of our project at scale. The ethics assignment gave me an opportunity to think about the broader societal implications of the technology we are building, who the hypothetical users could be, and potential safety concerns. The Winner article and the Ad Design paper led me to think about the politics of technologies and who is responsible for the secondary implications that arise. Capstone is a small-scale version of real-world industry projects, so I weighed the ethical implications of developing emerging technologies like autonomous driving, where algorithmic design decisions have serious consequences. In autonomous driving, this can mean deciding what and whom to sacrifice (e.g., the driver or the child walking across the street). Personally, I think developers need to take more responsibility for the technical choices we make, and this has led me to think about the potential misuse of this capstone project and the decisions I would be making for it. It has also led me to consider things I hadn't previously thought of, like the environmental impact of the materials we are using. We are choosing to build a mount and 3D print a customized part, and I realized that we never really talked about what we are going to make it out of. I want to make these parts out of recycled biodegradable plastic, because if this were produced at scale, we as developers would be responsible for reducing harmful secondary effects.

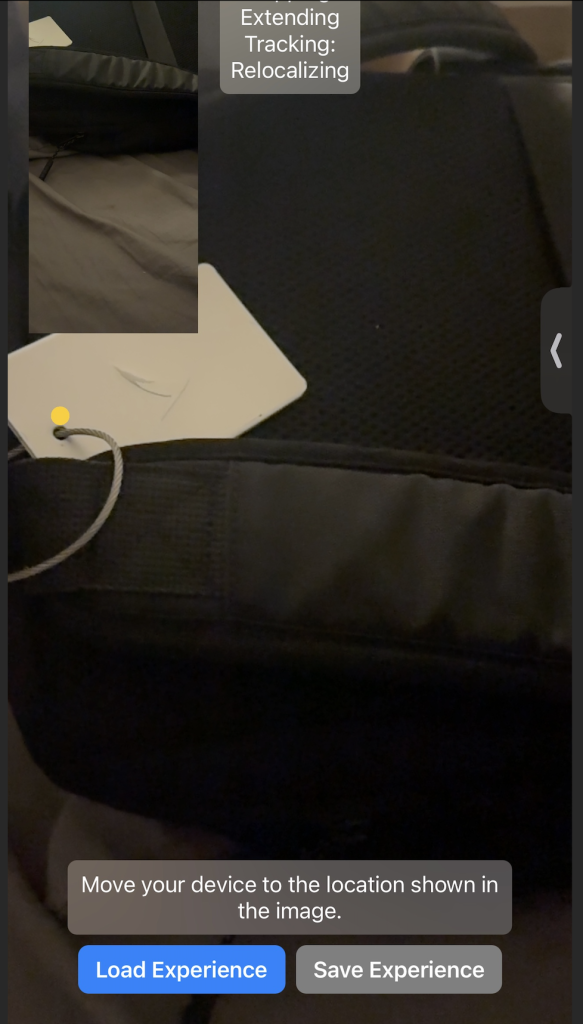

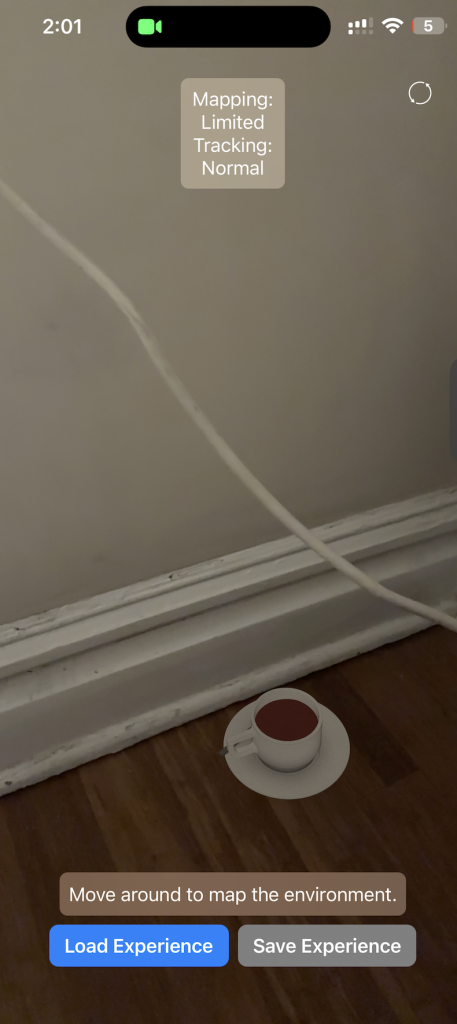

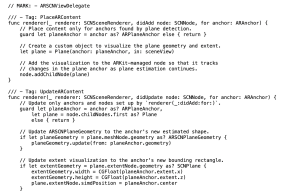

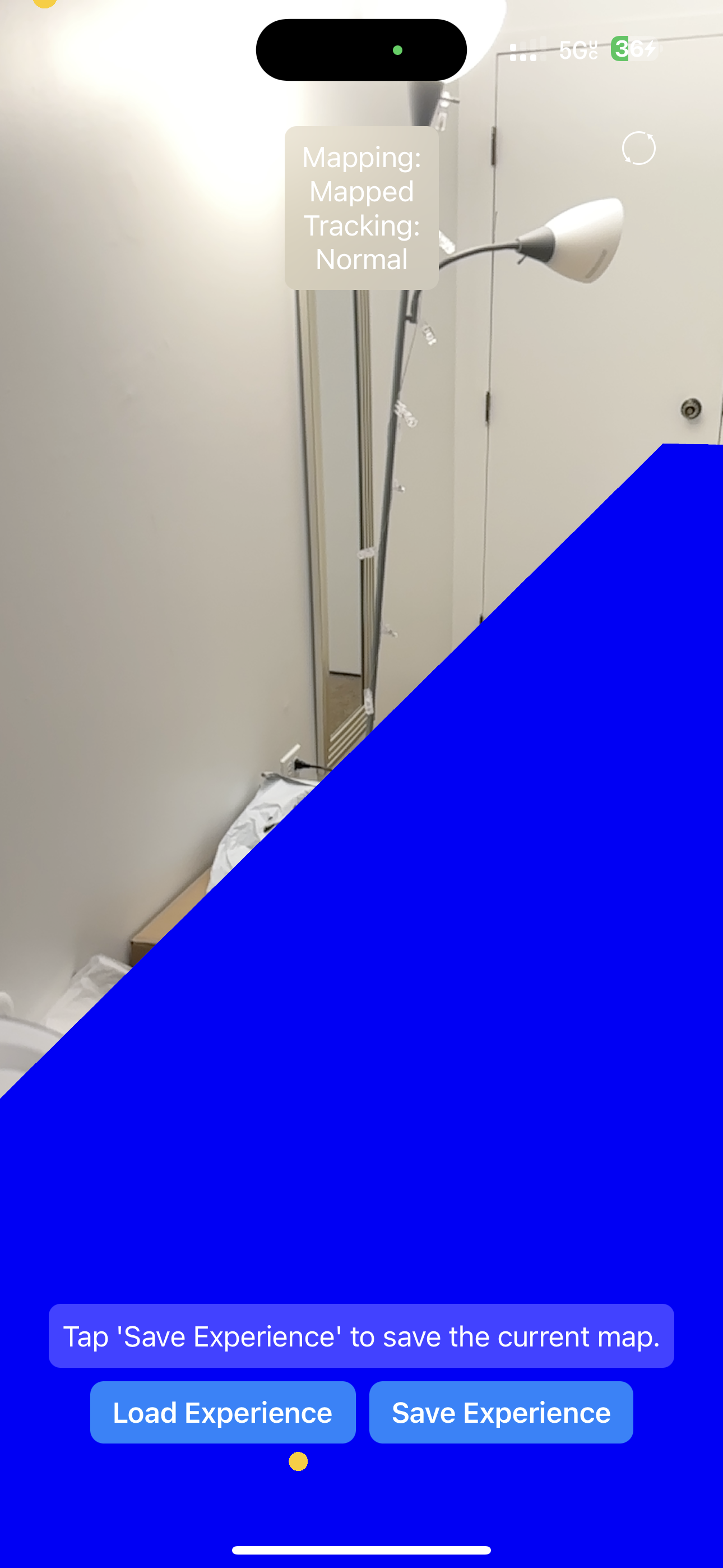

I've also been working on the augmented reality floor overlay in Swift and Xcode, researching how the ARKit plane detection demo actually works behind the scenes. This research supports our next step, which Harshul and I are currently working on: projecting an overlay/texture onto the actual floor with edge detection and memory. The augmented reality plane detection algorithm does two things: (1) it builds a point-cloud mesh of the environment by creating a map, and (2) it establishes anchor points to position the device relative to the environment it is in. Memory is handled through loop closing in SLAM, which tries to match key points in the current frame against previously seen frames. If enough key points match (above an established threshold), the frame is treated as a match of a previously seen environment. Apple specifically uses visual-inertial odometry, which essentially maps a real-world point to a point on the camera sensor by combining camera image analysis with readings from the device's motion sensors. Sensor readings are very frequent (1000/s), which allows the interpreted position to be updated regularly.
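To make the loop-closing idea concrete for myself, here is a rough sketch of the threshold test described above. It is not ARKit's actual implementation, which isn't public. The descriptor representation (a plain array of floats), the function names, and the `maxDistance` and `minMatchRatio` parameters are all assumptions made for illustration.

```swift
import Foundation

/// A key point descriptor, modeled as a plain float vector (an assumption).
typealias Descriptor = [Float]

/// Squared Euclidean distance between two descriptors of equal length.
func squaredDistance(_ a: Descriptor, _ b: Descriptor) -> Float {
    precondition(a.count == b.count, "descriptors must have equal length")
    var sum: Float = 0
    for i in 0..<a.count {
        let diff = a[i] - b[i]
        sum += diff * diff
    }
    return sum
}

/// Counts the key points in the current frame that have at least one
/// stored key point within `maxDistance` (hypothetical parameter).
func matchCount(current: [Descriptor],
                stored: [Descriptor],
                maxDistance: Float) -> Int {
    let limit = maxDistance * maxDistance
    var count = 0
    for candidate in current {
        if stored.contains(where: { squaredDistance(candidate, $0) <= limit }) {
            count += 1
        }
    }
    return count
}

/// Treats the frame as a revisit when the fraction of matched key points
/// reaches `minMatchRatio` (the "established threshold", value assumed).
func isRevisit(current: [Descriptor],
               stored: [Descriptor],
               maxDistance: Float,
               minMatchRatio: Float) -> Bool {
    guard !current.isEmpty else { return false }
    let matched = matchCount(current: current, stored: stored, maxDistance: maxDistance)
    return Float(matched) / Float(current.count) >= minMatchRatio
}

// Example: two of the three key points in the new frame are close to stored ones.
let storedKeyPoints: [Descriptor] = [[0, 0], [1, 1], [5, 5]]
let frameKeyPoints: [Descriptor] = [[0.1, 0], [1, 1.1], [9, 9]]
let revisit = isRevisit(current: frameKeyPoints,
                        stored: storedKeyPoints,
                        maxDistance: 0.5,
                        minMatchRatio: 0.6)
```

A real SLAM system would use much richer descriptors and a faster lookup than this brute-force comparison, but the decision at the end has the same shape: count matches, compare against a threshold.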

Plane Detection: https://link.springer.com/chapter/10.1007/978-1-4842-6770-7_9 – This reading was helpful in looking at code snippets for the plane detection controllers in Swift. It details SceneView Delegate and ARPlaneAnchor, the latter of which is useful for our purposes.

Plane Detection with ARKit: https://arvrjourney.com/plane-detection-in-arkit-d1f3389f7410

Fundamentals about ARCore from Google: https://developers.google.com/ar/develop/fundamentals

Apple Developer Understanding World Tracking: https://developer.apple.com/documentation/arkit/arkit_in_ios/configuration_objects/understanding_world_tracking

Designing for AR, Creating Immersive Overlays: https://medium.com/@JakubWojciechowskiPL/designing-for-augmented-reality-ar-creating-immersive-digital-overlays-0ef4ae9182c2

Visualizing and Interacting with a Reconstructed Scene: https://developer.apple.com/documentation/arkit/arkit_in_ios/content_anchors/visualizing_and_interacting_with_a_reconstructed_scene

Placing Objects and Handling 3D Interaction: https://developer.apple.com/documentation/arkit/arkit_in_ios/environmental_analysis/placing_objects_and_handling_3d_interaction

Anchoring the AR content, updating AR content with plane geometry information (ARKit Apple Developer)
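Pulling these references together, below is a minimal sketch of horizontal plane detection with an overlay that follows the detected plane geometry. It assumes a SceneKit-based `ARSCNView`; the class name `FloorOverlayViewController` and the translucent blue material are placeholders, not our final design. It needs to run on a physical device with ARKit support.

```swift
import ARKit
import SceneKit
import UIKit

// Hypothetical view controller name, used only for this sketch.
final class FloorOverlayViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking with horizontal plane detection only.
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal]
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds an anchor; attach an overlay to plane anchors.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor,
              let device = sceneView.device,
              let geometry = ARSCNPlaneGeometry(device: device) else { return }
        geometry.update(from: planeAnchor.geometry)
        // Placeholder material; a texture image could be assigned here instead.
        geometry.firstMaterial?.diffuse.contents = UIColor.systemBlue.withAlphaComponent(0.4)
        node.addChildNode(SCNNode(geometry: geometry))
    }

    // Called as ARKit refines the plane; keep the overlay in sync with its shape.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor,
              let geometry = node.childNodes.first?.geometry as? ARSCNPlaneGeometry else { return }
        geometry.update(from: planeAnchor.geometry)
    }
}
```

Note that horizontal plane detection also picks up tables and other flat surfaces, so a floor-only overlay would need an extra filter. One option, on devices that support it, may be checking `ARPlaneAnchor`'s `classification` for `.floor`.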

Progress and Next Steps

Harshul and I are working on turning all this theory into code for our specific use case. It's different from the demos because we only need to map the floor. I'm in the process of figuring out how to project a texture onto the floor, using my iPhone as the demo device and the floors around my house as the environment, even though this does not perfectly mimic our scope. These projections will include memory, and next steps include making sure that we can track the path of a specific object on the screen. Erin is working on thresholding for dirt detection, and then we will work together to figure out how to map the detected dirt areas onto the map. We are on schedule according to our Gantt chart, and we have built in some slack time because blockers during these steps would seriously impact the rest of the project. We are setting up regular syncs and updates so that each section being worked on in parallel keeps making progress.
