This week, I worked primarily on configuring our Jetson hardware so that it can run the software we have been developing. Per our design, the Jetson runs a Python script on a continuous video feed from the camera attached to the device. The script’s input is that video feed, and its output is a timestamp along with a single boolean indicating whether dirt has been detected in the image. These inputs and outputs have changed since last week: originally I had planned to output a list of coordinates where dirt was detected, but on further thought, mapping coordinates from the Jetson camera’s frame, let alone the specific pixels highlighted by the dirt detection script, may be too difficult a task. Instead, we have opted to send a single boolean and to narrow the window of the image that the dirt detection script examines. This decision was made for two reasons: 1) mapping specific pixels from the Jetson to the AR application in Swift may not be feasible with the limited resources we have, and 2) there is a range in which the active illumination works best and the camera is able to collect the cleanest images. I have decided to focus on that region of the camera’s input when processing images through the dirt detection script.
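
To make the new input/output contract concrete, here is a minimal sketch of what a single frame's processing could look like. The region-of-interest fractions, the brightness-threshold detector, and the JSON field names are all placeholders made up for illustration; the real dirt detection script and the actual region where the active illumination works best would replace them.

```python
import json
import time

# Hypothetical region of interest, as fractions of the frame:
# (top, bottom, left, right). The real bounds depend on where the
# active illumination works best.
ROI = (0.25, 0.75, 0.25, 0.75)


def crop_to_roi(frame, roi=ROI):
    """Return the part of `frame` (a list of pixel rows) inside `roi`."""
    height, width = len(frame), len(frame[0])
    top, bottom = int(roi[0] * height), int(roi[1] * height)
    left, right = int(roi[2] * width), int(roi[3] * width)
    return [row[left:right] for row in frame[top:bottom]]


def detect_dirt(region, threshold=200):
    """Placeholder detector: flags any pixel brighter than `threshold`.

    This stands in for the real dirt detection script.
    """
    return any(pixel > threshold for row in region for pixel in row)


def process_frame(frame, now=None):
    """Produce the script's output: a timestamp plus a single boolean."""
    timestamp = time.time() if now is None else now
    result = {
        "timestamp": timestamp,
        "dirt_detected": detect_dirt(crop_to_roi(frame)),
    }
    return json.dumps(result)
```

With this shape, anything outside the chosen window is ignored entirely, so a bright pixel in the corner of the frame does not trigger a detection.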

Getting the Jetson camera fully set up was not as seamless as it had originally seemed. I had no trouble with the camera until recently, when I started seeing errors in the Jetson’s terminal claiming that there was no camera connected. In addition, the device failed to show up when I listed the camera sources, and if I tried to run any command or script that read from the camera, the output reported that the image’s width and height were both zero. This confused me, as the issue had not presented itself in any earlier testing I had done. After some digging, I found out that this problem typically arises when the Jetson is booted up without the camera inserted. In other words, for the CSI camera to be recognized as a camera input, it must be connected before the Jetson is powered on. If the Jetson is unable to detect the camera, a restart may be necessary.
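
A quick sanity check along these lines can catch the problem before the dirt detection script starts. The sketch below assumes the CSI camera shows up as a device node matching `/dev/video*`, which I have not verified for every Jetson setup; the function names are made up for illustration.

```python
import glob


def list_video_devices(pattern="/dev/video*"):
    """Return the video device nodes that currently exist.

    Assumption: the camera registers as a node matching `pattern`.
    """
    return sorted(glob.glob(pattern))


def frame_is_valid(width, height):
    """A zero-sized frame is the symptom seen when the camera is missing."""
    return width > 0 and height > 0


def camera_ready(width, height, pattern="/dev/video*"):
    """True only if a device node exists and the frame has a real size."""
    return bool(list_video_devices(pattern)) and frame_is_valid(width, height)
```

If `camera_ready` returns `False`, the likely fix is the one described above: make sure the camera is connected, then restart the Jetson.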

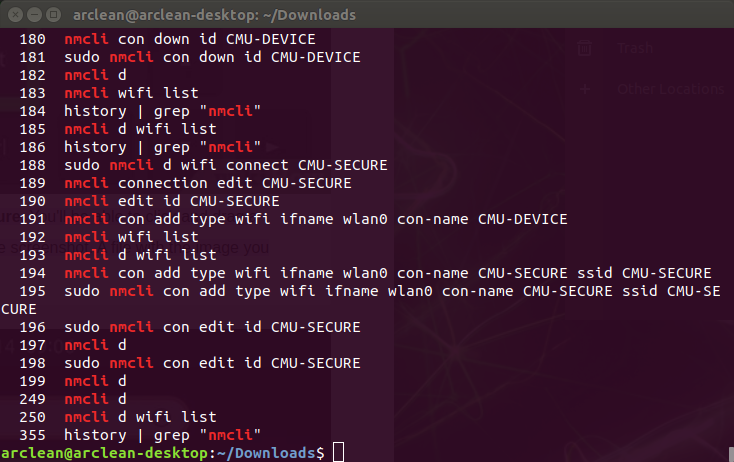

Another roadblock I ran into this week was developing on the Jetson without an external monitor. Until now I have not been developing directly on the Jetson, since that was not strictly necessary to get the dirt detection algorithms working. However, I am currently trying to get the data serialization to work, which requires extensive interaction with the Jetson. Since I have been moving around, carrying a monitor with me is simply impractical. As such, I have been working with the Jetson connected to my laptop, rather than to an external monitor with a USB mouse and keyboard. I used the `sudo screen` command to see the Jetson’s terminal, which is technically enough to get our project working, but I encountered many setbacks. For one, I was unable to view image outputs via the command line. When I was on campus, the process of getting WiFi set up on the Jetson was also incredibly long and annoying, since I only had access to the command line. I ended up using `nmcli` to connect to CMU-SECURE, and only then was I able to fetch the necessary files from GitHub. Since getting back home, I have been able to get a lot more done, since I have access to the GUI.

I am currently working on getting the Jetson to connect to an iPhone over Bluetooth. To start, getting Bluetooth set up on the Jetson was honestly a bit of a pain. We are using a Kinivo BT-400 dongle, which is compatible with Linux. However, the Jetson could not locate the device when I first plugged it in, and there were continued issues with Bluetooth setup. Eventually, I found out that the problem was with the drivers, and I had to completely wipe and restore the hardware configuration files on the Jetson. The Bluetooth dongle seems to have started working after the driver update and a restart. I have also found a command (`bluetooth-sendto --device=[MAC_ADDRESS] file_path`) which can be used to send files from the Jetson to another device. I have already written a bash script which runs this command, but sadly, it may not be usable: Apple seems to have placed certain security measures on their devices, and I am not sure that I will find a way to circumvent those within the remaining time we have in the semester (if at all). An alternative which Apple does allow is BLE (Bluetooth Low Energy), the technology used by CoreBluetooth, a framework available in Swift. The next steps for me are to create a dummy app which uses the CoreBluetooth framework and show that I am able to receive data from the Jetson. Note that this communication does not have to be two-way: the Jetson does not need to receive any data from the iPhone, and the iPhone simply needs to be able to read the serialized data from the Python script. If I am unable to get the Bluetooth connection established, in the worst case I am planning to have the Jetson continuously push data to either some website or even a GitHub repository, which can then be read by the AR application. Obviously, doing this would incur higher delays and is not scalable, but this is a last resort.
Regardless, the Bluetooth connection process is something I am still working on, and this is my priority for the foreseeable future.
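
Since the payload is only a timestamp and a boolean, it can be packed into a handful of bytes, which suits BLE well. The sketch below shows one possible encoding on the Python side; the wire format (a little-endian 64-bit float followed by one status byte) is an assumption for illustration, not something we have settled on, and the function names are hypothetical.

```python
import struct
import time

# Hypothetical wire format: little-endian float64 timestamp + one status byte.
PAYLOAD_FORMAT = "<dB"
PAYLOAD_SIZE = struct.calcsize(PAYLOAD_FORMAT)  # 9 bytes, no padding


def encode_reading(dirt_detected, timestamp=None):
    """Pack one reading into bytes that the Jetson could publish."""
    ts = time.time() if timestamp is None else timestamp
    return struct.pack(PAYLOAD_FORMAT, ts, 1 if dirt_detected else 0)


def decode_reading(payload):
    """Inverse of encode_reading, useful for testing on the Jetson side."""
    ts, flag = struct.unpack(PAYLOAD_FORMAT, payload)
    return ts, bool(flag)
```

At 9 bytes, a reading fits comfortably within the 20 bytes of value data available under the default BLE ATT MTU of 23, so each update could go out as a single characteristic value that the iPhone only needs to read.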