This week I iterated on and extended the mapping implementation that I started last week. In our meeting, we discussed the communication channels and how to integrate the components that we had been building in parallel. We talked through the types of information that we want to send from the Jetson to the iPhone, and determined that the only information we need is a UNIX timestamp and a binary classification of whether the spot at that time is considered dirty. For example, a message sent from the Jetson via Bluetooth would look like {“time”: UNIX timestamp, “dirty”: 1}.

On the receiving end, the iPhone needs to map each UNIX timestamp to the camera coordinates at that time. The Jetson has no sense of orientation, while our AR code in Xcode is able to perform that mapping, so connecting the data through a timestamp made the most sense to our group. Specifically, I worked on the Swift side (the receiving end), where I grabbed the UNIX timestamps and mapped them to the coordinates in the plane where the camera was positioned at that time. I used a struct called SCNVector3 to hold the XYZ coordinates of the camera position.

I then created a queue with limited capacity (it currently holds 5 entries, but that can easily be changed in the future). The queue holds dictionaries of the form {timestamp: SCNVector3 coordinates}. When the queue reaches capacity, it dequeues the oldest entry in FIFO order to make room for new timestamps. Keeping a short history like this is important because it accounts for the latency between the Jetson camera capturing a frame and the result arriving over Bluetooth.
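To make the message format described above concrete, here is a minimal sketch of how the receiving side could decode it. It assumes the payload arrives as UTF-8 JSON, which we have not finalized; the names `DirtReading` and `decodeReading` are hypothetical and only the field names "time" and "dirty" come from our data model.

```swift
import Foundation

// Hypothetical type; the field names follow the example message
// {"time": UNIX timestamp, "dirty": 1}.
struct DirtReading: Decodable {
    let time: TimeInterval   // UNIX timestamp in seconds
    let dirty: Int           // 1 = dirty, 0 = clean

    var isDirty: Bool { dirty == 1 }
}

// Assumption: the Jetson sends UTF-8 encoded JSON.
// Returns nil if the payload cannot be parsed.
func decodeReading(_ payload: Data) -> DirtReading? {
    try? JSONDecoder().decode(DirtReading.self, from: payload)
}

let sample = Data(#"{"time": 1700000000, "dirty": 1}"#.utf8)
if let reading = decodeReading(sample) {
    print(reading.time, reading.isDirty)
}
```

Returning an optional keeps a malformed message from crashing the app; the caller can simply skip it.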

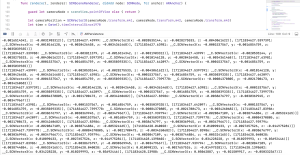

I’ve included a screenshot of the code that initializes the camera position SCNVector3, along with the output from printing the timeQueue over several UNIX timestamps. You can see that the queue dequeues its first (oldest) element when it is full, so the UNIX timestamps in the queue are always in ascending order.
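The queue behavior can be sketched roughly as follows. This is not the exact code from the screenshot: `Position` is a stand-in for SCNVector3 so the example runs without SceneKit, the names `TimedPosition`, `TimeQueue`, and `closestPosition` are hypothetical, and looking up the entry with the closest timestamp is an assumption about how an incoming Jetson timestamp would be matched.

```swift
import Foundation

// Stand-in for SCNVector3 (assumption, so the sketch needs no SceneKit).
struct Position: Equatable {
    var x: Float, y: Float, z: Float
}

// One queue entry: a UNIX timestamp paired with the camera position.
struct TimedPosition {
    let timestamp: TimeInterval
    let position: Position
}

struct TimeQueue {
    private(set) var entries: [TimedPosition] = []
    let capacity: Int

    init(capacity: Int = 5) {
        precondition(capacity > 0, "capacity must be positive")
        self.capacity = capacity
    }

    // Append the newest entry; drop the oldest one first if the queue is full (FIFO).
    mutating func enqueue(timestamp: TimeInterval, position: Position) {
        if entries.count == capacity {
            entries.removeFirst()
        }
        entries.append(TimedPosition(timestamp: timestamp, position: position))
    }

    // Return the stored position whose timestamp is closest to the query,
    // or nil if the queue is empty.
    func closestPosition(to timestamp: TimeInterval) -> Position? {
        entries.min(by: {
            abs($0.timestamp - timestamp) < abs($1.timestamp - timestamp)
        })?.position
    }
}

var queue = TimeQueue(capacity: 5)
for i in 0..<7 {
    queue.enqueue(timestamp: 1_700_000_000 + Double(i),
                  position: Position(x: Float(i), y: 0, z: 0))
}
print(queue.entries.map { $0.timestamp })
```

Because entries are only ever appended at the back and removed from the front, the timestamps stay in ascending order as long as they are enqueued in the order they occur.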

In addition, I worked on the initial floor mapping. Our mapping strategy happens in two steps: first the floor mapping, then annotating the floor based on the area covered. For the initial floor mapping, I created a “Freeze Map” button, which freezes the dynamic floor mapping so that it becomes a static floor map. I wrote the accompanying functions for freezing the map and added this logic to the renderer functions, which meant figuring out how to connect the frontend with the backend in Swift. To do this, I worked for the first time with the Main screen, and learned how to assign properties, names, and functionality to buttons on a screen. This is the first time I’ve ever worked with the user interface of an iOS app, so there was definitely a learning curve in figuring out how to make that happen in Xcode. I had anticipated that this task would take only a day, but it ended up taking an additional day.
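The freeze logic boils down to a flag that the renderer checks before applying updates. Here is a simplified sketch of that idea in plain Swift; all names (`FloorMap`, `PlaneExtent`, `freeze`, `update`) are hypothetical, and in the actual app the update call would come from the ARKit renderer callbacks rather than being invoked directly.

```swift
// Hypothetical, simplified model of the detected floor plane's size.
struct PlaneExtent: Equatable {
    var width: Float
    var length: Float
}

final class FloorMap {
    private(set) var isFrozen = false
    private(set) var extent = PlaneExtent(width: 0, length: 0)

    // Would be called from the "Freeze Map" button's action handler.
    func freeze() {
        isFrozen = true
    }

    // Would be called whenever plane detection reports a new extent.
    // Once the map is frozen, later updates are ignored.
    func update(with newExtent: PlaneExtent) {
        guard !isFrozen else { return }
        extent = newExtent
    }
}

let map = FloorMap()
map.update(with: PlaneExtent(width: 2, length: 3))
map.freeze()
map.update(with: PlaneExtent(width: 5, length: 5))  // ignored after freezing
print(map.extent)
```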

In the photo below, you can see the traced line that is drawn on the plane. This is a test of SCNLine and of tracking the camera position, and is currently a work in progress. The “Freeze Map” button is also present (although some of its characters are cut off for some reason). The button freezes the yellow plane that is pictured.

Overall, we are making good progress towards end-to-end integration and communication between our subsystems. I’ve established the data model that I need to receive from Erin’s work on the Bluetooth connection and the Jetson camera, and created the mapping needed to translate that data into coordinates. We are slightly behind on establishing the Bluetooth connection because we are blocked by Apple’s security (i.e., in the current state, we are not able to establish the actual Bluetooth connection from an iPhone to the Jetson). We are working on circumventing this, but have alternative approaches in place. For example, we could do the communication over the web (HTTP requests), or by reading and writing files.

I also spent a good amount of time with Harshul working on how to annotate and draw lines within planes to mark the areas that have been covered by our vacuum. The current problem is that the drawing happens too slowly, bottlenecked by the latency of the ARKit functions that we are using. My personal next steps are to try drawing lines with an SCNLine module to see whether that gives a performance speedup, and to work in parallel with Harshul to see which approach is best. This is one of the major technical components left in our system, and it is what I will be working on in the coming week. We are preparing the connections between subsystems for our demo, and definitely want that to be the focus of the next few days!