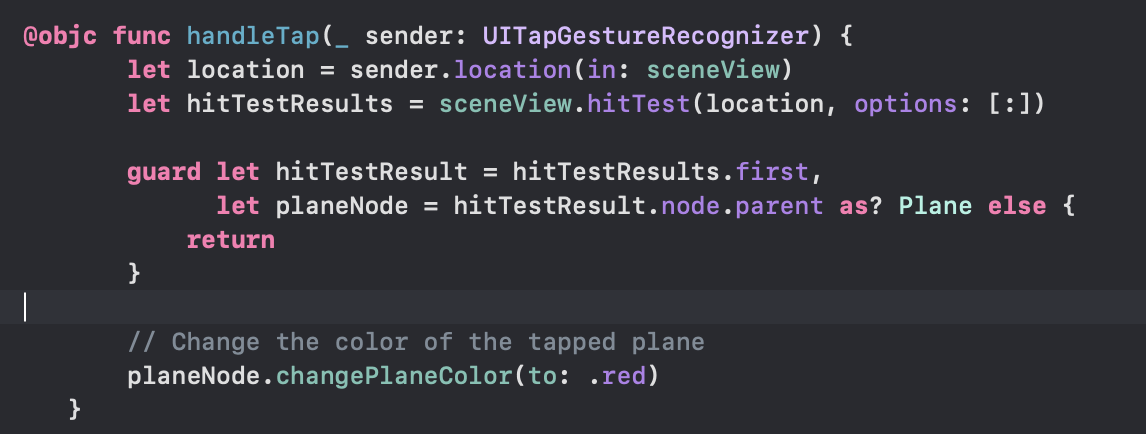

This week I worked on more of the AR app’s core functionality. Nathalie and I worked together to identify the functionality we need and the relevant API features, then parallelized some of our prototyping by pursuing candidate approaches individually and reconvening. I worked on recoloring a tapped plane. This was achieved by modifying the plane class and creating a tap gesture callback that modifies the appropriate plane. It works because nodes are placed in the world in a way similar to the DOM on a webpage, with parent and child nodes, and ARKit provides a relevant API for accessing and traversing these SceneNodes. Since we can insert custom objects, we also have the ability to update them.

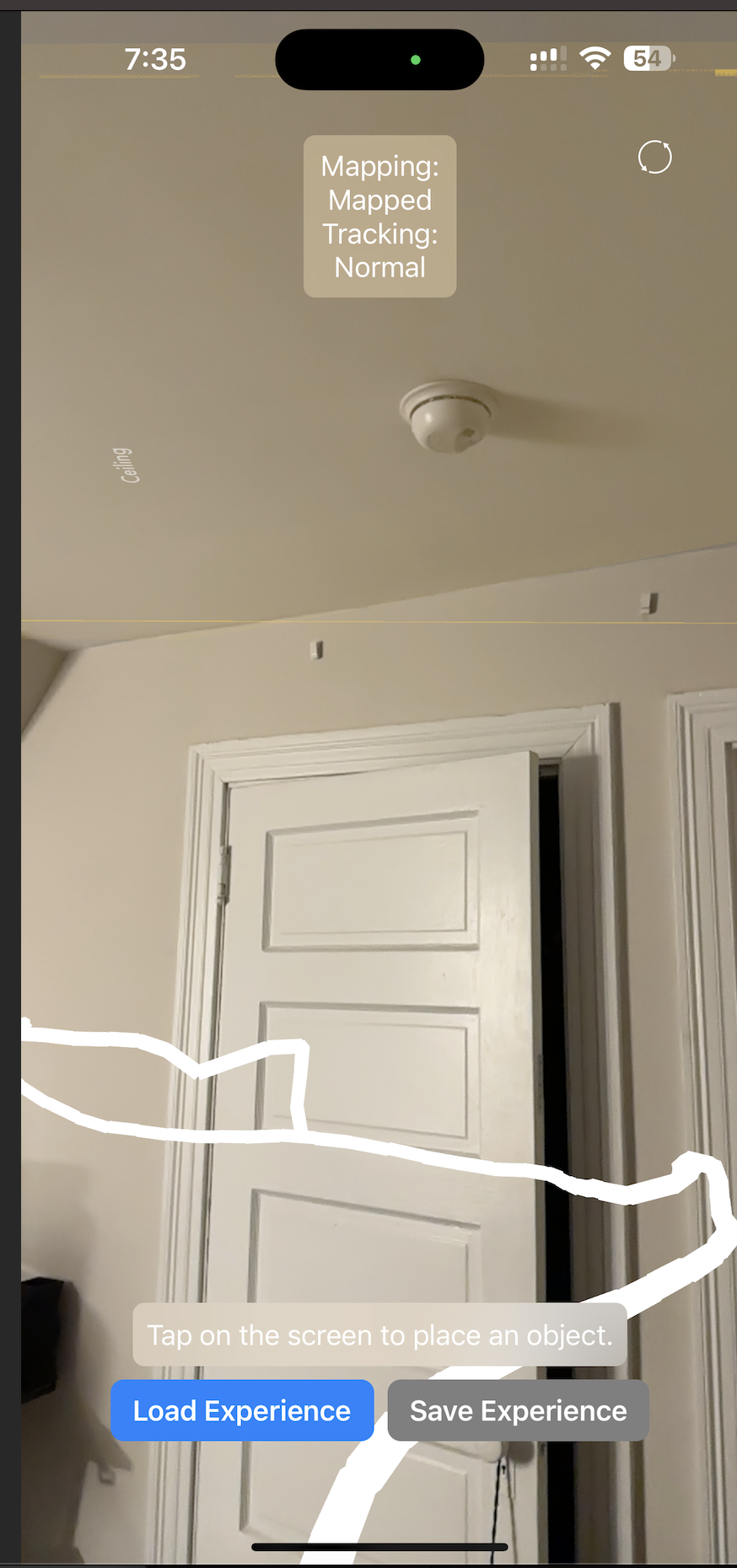

This image shows the feature in action: the user can interact with the world through the intuitive action of tapping the screen.
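The tap-to-recolor flow can be sketched roughly as follows. This is a minimal illustration and not our actual code: `PlaneNode`, `enclosingPlane(of:)`, and `TapRecolorHandler` are hypothetical names, and the plane is sized from plain width and height values rather than from a plane anchor. It takes an `SCNView`, so an `ARSCNView` (which is a subclass) can be passed in.

```swift
import SceneKit
import UIKit

/// Hypothetical custom node that visualizes one detected plane.
final class PlaneNode: SCNNode {
    init(width: CGFloat, height: CGFloat) {
        super.init()
        let plane = SCNPlane(width: width, height: height)
        plane.firstMaterial?.diffuse.contents = UIColor.white
        geometry = plane
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) is not supported in this sketch")
    }

    /// Changes the color of the plane's material in place.
    func recolor(_ color: UIColor) {
        geometry?.firstMaterial?.diffuse.contents = color
    }
}

/// Walks up the parent chain from a node, much like climbing from a DOM
/// element to its ancestors, and returns the first PlaneNode found.
func enclosingPlane(of node: SCNNode) -> PlaneNode? {
    var current: SCNNode? = node
    while let candidate = current {
        if let plane = candidate as? PlaneNode { return plane }
        current = candidate.parent
    }
    return nil
}

/// Hypothetical helper that owns the tap callback.
/// The gesture recognizer does not retain its target, so the caller
/// must keep this object alive (for example as a view controller property).
final class TapRecolorHandler: NSObject {
    private weak var sceneView: SCNView?

    init(sceneView: SCNView) {
        self.sceneView = sceneView
        super.init()
        let tap = UITapGestureRecognizer(target: self,
                                         action: #selector(handleTap(_:)))
        sceneView.addGestureRecognizer(tap)
    }

    @objc private func handleTap(_ gesture: UITapGestureRecognizer) {
        guard let view = sceneView else { return }
        let point = gesture.location(in: view)
        // SceneKit hit test: returns the rendered node(s) under the touch.
        guard let hit = view.hitTest(point, options: nil).first,
              let plane = enclosingPlane(of: hit.node) else { return }
        plane.recolor(.systemGreen)
    }
}
```

The parent walk is there so that a tap still resolves to the right plane if the hit lands on a child node attached to it.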

The next feature that I mocked up was the ability to track the position of our phone in the world. The image below shows a proof of concept: the white trail represents the camera’s position in the world map, and it updates as we move the camera. More work needs to be done to optimize the number of nodes added, with some attempt at shortcutting to combine segments. We also need to project these 3D coordinates onto the floor plane, widen the footprint of the drawn trail to approximate the size of the vacuum, and compute a transform that translates the origin of the drawn trail to the position of the actual vacuum mount. Fiducial markers might be a way of providing a ground truth for the vacuum mount; detecting fiducials or 2D reference images is a candidate option to pursue next week.
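One possible way to handle the floor projection and segment combining is sketched below. It is only an assumption about how this could be done, not something we have implemented: it assumes a horizontal floor in a gravity-aligned world (y pointing up), so projecting means dropping the height, and it uses a simple greedy pass that drops a sample when it lies within a tolerance of the straight line between the last kept point and the next sample. `TrailPoint`, `projectToFloor`, and `simplify` are hypothetical names.

```swift
/// Hypothetical 2D point on the floor plane (x and z in meters).
struct TrailPoint: Equatable {
    var x: Float
    var z: Float
}

/// Projects a 3D camera position onto a horizontal floor by dropping
/// the height component. Assumes the y axis points up.
func projectToFloor(x: Float, y: Float, z: Float) -> TrailPoint {
    TrailPoint(x: x, z: z)
}

/// Perpendicular distance from `p` to the line through `a` and `b`.
/// Falls back to the distance from `p` to `a` when `a` and `b` coincide.
func distance(from p: TrailPoint,
              toLineThrough a: TrailPoint,
              and b: TrailPoint) -> Float {
    let dx = b.x - a.x
    let dz = b.z - a.z
    let length = (dx * dx + dz * dz).squareRoot()
    if length == 0 {
        let px = p.x - a.x
        let pz = p.z - a.z
        return (px * px + pz * pz).squareRoot()
    }
    return abs(dx * (p.z - a.z) - dz * (p.x - a.x)) / length
}

/// Greedy simplification: keeps the first and last samples, and keeps an
/// interior sample only if it deviates from the line between the last
/// kept point and the following sample by more than `tolerance`.
func simplify(_ points: [TrailPoint], tolerance: Float) -> [TrailPoint] {
    guard points.count > 2 else { return points }
    var kept = [points[0]]
    var lastKept = points[0]
    for i in 1..<(points.count - 1) {
        let candidate = points[i]
        let next = points[i + 1]
        if distance(from: candidate, toLineThrough: lastKept, and: next) > tolerance {
            kept.append(candidate)
            lastKept = candidate
        }
    }
    kept.append(points[points.count - 1])
    return kept
}
```

With this approach a straight run of samples collapses to its two endpoints, so one long segment can be drawn in place of many short ones. In the app, the input positions would presumably come from the translation column of the camera transform on each frame.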

In terms of scheduling, things are generally on track. I expect that we will have the core features of plane detection, tracking, and texture mapping all implemented for our interim demo, with work remaining to calibrate things to comply with our V&V plans and to improve overall accuracy and system integration.