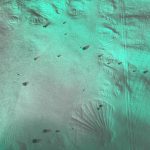

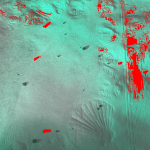

This week, I continued trying to improve our existing dirt detection model. I also tried to start designing the mounting units for the Jetson camera and computer, but I realized I could only draw basic sketches of what I imagined the components to look like, as I have no experience with CAD or 3D modeling. I have voiced my concerns about this to my group, and we plan to sync on the topic.

Regarding the dirt detection, I had a long discussion with a friend who specializes in machine learning about the tradeoffs of my current approach. The old script, which produced the results shown in last week’s status report, relies heavily on Canny edge detection to classify pixels as either clean or dirty. The alternative my friend suggested was to use color instead, which I hadn’t realized our use-case constraints make possible. Since our use case confines the flooring to be white and patternless, I can assume that anything “white” is clean, provided that the camera is able to capture dirt that is visible to the human eye from a five-foot distance. Moreover, we are using active illumination. In theory, since our LED is green, I can threshold all values between certain shades of green as “clean”, and treat any darker colors, or colors with grayer tones, as dirt. This is because (in theory) the dirt that we encounter will have a height component. When the light from the LED shines on a particle, the particle casts a shadow behind it, which the camera picks up. With this approach, I would only need to look for shadows, rather than for the actual dirt in the image frame.

Unfortunately, my first few attempts at this approach did not produce satisfactory results. If I can get it working, though, it should be a lot less computationally expensive than the script I am currently running for dirt detection, which relies heavily on OpenCV, a CPU-intensive package.
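To make the color-threshold idea concrete, here is a minimal sketch of the per-pixel rule. It is not the script I am running: the hue band, saturation floor, and brightness floor are hypothetical placeholder values that would need tuning against real frames, and the function names are made up for illustration. It uses only Python's standard `colorsys` module, so it is meant to show the logic rather than to be fast.

```python
import colorsys

# Hypothetical thresholds (assumptions, not measured values).
GREEN_HUE_RANGE = (0.22, 0.45)  # hue band on a 0..1 scale treated as "LED green"
MIN_SATURATION = 0.35           # below this, the pixel reads as gray
MIN_VALUE = 0.40                # below this, the pixel reads as shadow


def is_clean(pixel):
    """Return True if an (R, G, B) pixel with 0-255 channels looks like lit floor."""
    r, g, b = (channel / 255.0 for channel in pixel)
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    in_green_band = GREEN_HUE_RANGE[0] <= hue <= GREEN_HUE_RANGE[1]
    return in_green_band and saturation >= MIN_SATURATION and value >= MIN_VALUE


def dirt_mask(frame):
    """Map a frame (rows of RGB tuples) to rows of booleans, True meaning dirt."""
    return [[not is_clean(pixel) for pixel in row] for row in frame]


def dirt_fraction(mask):
    """Fraction of pixels flagged as dirt, or 0.0 for an empty mask."""
    total = sum(len(row) for row in mask)
    dirty = sum(sum(row) for row in mask)
    return dirty / total if total else 0.0


if __name__ == "__main__":
    # Tiny synthetic frame: lit green floor, a dark shadow, and a gray speck.
    frame = [
        [(60, 220, 80), (20, 40, 25)],
        [(120, 125, 120), (60, 220, 80)],
    ]
    mask = dirt_mask(frame)
    print(mask, dirt_fraction(mask))
```

Anything that is too dark (shadow) or too gray falls outside the "clean" band and gets flagged, which matches the idea of looking for shadows rather than for the dirt itself. A real version would apply the same rule to whole frames at once instead of looping pixel by pixel.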

I also intend to get the AR development environment fully set up on my end soon. Nathalie and Harshul have been busy and have not had time to sync with me on the setup, although I have been brought up to speed on the capabilities and restrictions of the current working model.

My next step is to figure out the serialization of data from the Jetson to the iPhone. While the dirt detection script is still imperfect, this is a non-blocking issue. I am currently in possession of most of our hardware, and I intend to get data serialization over Bluetooth done within the next week. This will also allow me to start benchmarking how long it takes for data to get from the Jetson to the iPhone, which is one of the components of delay we are worried about with regard to our use-case requirements. We have shuffled the tasks around slightly, so I will not be integrating the dirt detection right now; I will simply be working on data serialization. The underlying idea is that even if I am serializing garbage data, that is fine; we simply need to be able to gauge how well the Bluetooth data transmission performs. If we need a more efficient method, I can look into removing image metadata, which would reduce the size of the packets during the data transfer.
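As a rough sketch of what the serialization benchmark could look like, the snippet below frames a payload of garbage bytes and times a round trip. Everything here is an assumption for illustration: the header layout, the `DIRT` magic bytes, and the function names are hypothetical, and the `send` and `receive` callables stand in for whatever Bluetooth transport we end up using. Timing an echo on the sending side avoids having to synchronize clocks between the Jetson and the iPhone.

```python
import os
import struct
import time
from collections import deque

# Hypothetical wire format: 4-byte magic, uint32 sequence number,
# uint32 payload length, all in network byte order.
HEADER = struct.Struct("!4sII")
MAGIC = b"DIRT"


def pack_message(seq, payload):
    """Prefix the payload with a fixed-size header."""
    return HEADER.pack(MAGIC, seq, len(payload)) + payload


def unpack_message(data):
    """Split a framed message back into (sequence number, payload)."""
    magic, seq, length = HEADER.unpack_from(data)
    if magic != MAGIC:
        raise ValueError("unexpected magic bytes")
    payload = data[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return seq, payload


def measure_round_trip(send, receive, payload_size, seq=0):
    """Send random bytes, wait for the echo, and return elapsed seconds."""
    message = pack_message(seq, os.urandom(payload_size))
    start = time.perf_counter()
    send(message)
    echoed = receive()
    elapsed = time.perf_counter() - start
    echoed_seq, echoed_payload = unpack_message(echoed)
    if echoed_seq != seq or len(echoed_payload) != payload_size:
        raise ValueError("echo does not match what was sent")
    return elapsed


if __name__ == "__main__":
    # In-memory loopback standing in for the real transport.
    link = deque()
    for size in (256, 4096, 65536):
        seconds = measure_round_trip(link.append, link.popleft, size)
        print(f"{size} bytes: {seconds * 1e6:.1f} microseconds")
```

Running the same measurement at several payload sizes should show how much the transfer time depends on packet size, which is the number that would tell us whether stripping image metadata is worth the effort.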