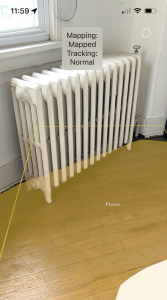

This week I worked in Swift and Xcode to build the floor-mapping functionality (mapping the floor, and only the floor), and deployed and tested it on our phones. I worked with Harshul on the augmented reality component; our base implementation was inspired by the AR Perception and AR Reality Kit code. The functionality creates plane overlays on the floor and updates each plane by adding child nodes that together draw the floor texture. Besides dynamically adding nodes to the plane surface object, it also classifies the surface as a floor. The classification logic can label surfaces as tables, walls, ceilings, etc., which helps ensure that the AR overlay accurately represents the floor surface and minimizes confusion caused by other elements in the picture, such as walls and furniture.
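
As a rough illustration of the floor-only overlay described above (a minimal sketch, not the project's actual code), the following assumes an ARKit session rendered through an `ARSCNView`. The names `makeFloorConfiguration`, `FloorOverlayDelegate`, and `floorOverlay` are hypothetical; the ARKit and SceneKit calls are standard framework API.

```swift
import ARKit
import SceneKit

/// Hypothetical helper: world tracking with horizontal plane detection enabled.
func makeFloorConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal]
    return configuration
}

/// Hypothetical delegate: draws a mesh overlay only on planes classified as floor.
final class FloorOverlayDelegate: NSObject, ARSCNViewDelegate {
    private let overlayName = "floorOverlay"

    // ARKit added a node for a newly detected anchor.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        refreshOverlay(on: node, for: anchor, using: renderer)
    }

    // ARKit refined an existing anchor (for example, the plane grew or was reclassified).
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        refreshOverlay(on: node, for: anchor, using: renderer)
    }

    /// True only when the device supports classification and the plane is labeled floor.
    private func isFloor(_ plane: ARPlaneAnchor) -> Bool {
        guard ARPlaneAnchor.isClassificationSupported else { return false }
        if case .floor = plane.classification { return true }
        return false
    }

    /// Creates the overlay mesh on first sight of a floor plane, or updates it in place.
    private func refreshOverlay(on node: SCNNode, for anchor: ARAnchor,
                                using renderer: SCNSceneRenderer) {
        guard let plane = anchor as? ARPlaneAnchor, isFloor(plane) else { return }

        // Existing overlay: reshape the mesh to the plane's latest outline.
        if let mesh = node.childNode(withName: overlayName, recursively: false)?
            .geometry as? ARSCNPlaneGeometry {
            mesh.update(from: plane.geometry)
            return
        }

        // First time this plane counts as floor: build a semi-transparent mesh.
        guard let device = renderer.device,
              let mesh = ARSCNPlaneGeometry(device: device) else { return }
        mesh.update(from: plane.geometry)
        mesh.firstMaterial?.diffuse.contents = UIColor.systemBlue.withAlphaComponent(0.4)
        let overlay = SCNNode(geometry: mesh)
        overlay.name = overlayName
        node.addChildNode(overlay)
    }
}
```

In this sketch, tables, walls, and ceilings are simply skipped, so only surfaces ARKit labels as floor ever receive an overlay. On devices where plane classification is not supported, nothing is drawn.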

The technology has memory: it locates each frame within a World Tracking Configuration, checks whether it has already seen the surface in view, and re-renders the previously created map. This memory is important because it is what will allow us to mark already-covered surface area on the plane and remember what has been covered as the camera pans in and out of view. In other words, once a surface has been mapped, the code pulls what it already mapped rather than re-mapping the surface. As next steps, I plan to find a way to “freeze” the map (as in, stop it from continuously adding child nodes), then draw markings on the overlay to indicate what has been passed over, and have those markings persist as the surface goes in and out of the frame.
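
One possible way to approach the "freeze" step, sketched here under assumptions rather than taken from the project, is to re-run the session with plane detection turned off. Running an `ARSession` again without reset options keeps the current tracking state and existing anchors, so the already-mapped planes should remain while no new ones are added. The helper names `makeFrozenConfiguration` and `freezeMap` are hypothetical, and whether this fully stops the overlay from changing would need to be verified on a device.

```swift
import ARKit

/// Hypothetical helper: a world-tracking configuration with plane detection off.
func makeFrozenConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = []   // empty option set: detect no new planes
    return configuration
}

/// Hypothetical helper: stop adding planes but keep what has been mapped so far.
func freezeMap(in session: ARSession) {
    // No run options are passed, so tracking is not reset and
    // existing anchors are not removed.
    session.run(makeFrozenConfiguration())
}
```

A caller would invoke `freezeMap(in: sceneView.session)` once the user is satisfied with the mapped floor, where `sceneView` is assumed to be the app's `ARSCNView`.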

There is a distinction between the bounding boxes (which are purely rectangular) and the actual overlay, which dynamically molds to the actual dimensions and outline of the floor surface. We are relying on this overlay for coverage, rather than the rectangular boxes, because it captures the finer granularity needed for the vacuum-able surface. I tested the initial mapping on different areas and noticed some potential issues with how tightly the mapped region bounds the actual surface. I’m in the process of testing the conditions under which the mapping is more accurate and seeing how I can tweak the code to make things more precise, if possible. I found that the mapping tends to over-map rather than under-map, which is good because it fits our design requirement of cleaning the maximum surface possible. Our group agreed that we would rather map the floor surface area as larger than it actually is than as smaller, because the objective of our whole project is to show spots that have been covered. We decided that it would be acceptable for a user to notice that a certain surface area (like an object) cannot be vacuumed and still be satisfied with the coverage depicted. Hence, the mapping does not remove objects that lie in the way, but it is able to mold to non-rectangular planes.
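
To make the difference between the rectangular bounding box and the molded overlay concrete, here is a small self-contained sketch in plain Swift. It compares the area of a polygon outline (computed with the shoelace formula) against the area of its axis-aligned bounding box. The `FloorPoint` type and the L-shaped room are made up for illustration; in ARKit the outline would presumably come from a plane's boundary vertices.

```swift
/// A 2D point on the floor plane, in meters (hypothetical type for this sketch).
struct FloorPoint {
    var x: Double
    var z: Double
}

/// Area of a simple (non-self-intersecting) polygon via the shoelace formula.
func polygonArea(_ boundary: [FloorPoint]) -> Double {
    guard boundary.count >= 3 else { return 0 }
    var twiceArea = 0.0
    for i in boundary.indices {
        let a = boundary[i]
        let b = boundary[(i + 1) % boundary.count]   // wrap around to close the outline
        twiceArea += a.x * b.z - b.x * a.z
    }
    return abs(twiceArea) / 2
}

/// Area of the axis-aligned rectangle that encloses the outline.
func boundingBoxArea(_ boundary: [FloorPoint]) -> Double {
    let xs = boundary.map { $0.x }
    let zs = boundary.map { $0.z }
    guard let minX = xs.min(), let maxX = xs.max(),
          let minZ = zs.min(), let maxZ = zs.max() else { return 0 }
    return (maxX - minX) * (maxZ - minZ)
}

// Made-up example: a 4 m x 3 m room with a 2 m x 1 m corner cut out (L shape).
let lShapedFloor = [
    FloorPoint(x: 0, z: 0), FloorPoint(x: 4, z: 0), FloorPoint(x: 4, z: 2),
    FloorPoint(x: 2, z: 2), FloorPoint(x: 2, z: 3), FloorPoint(x: 0, z: 3),
]

let outlineArea = polygonArea(lShapedFloor)   // 10 square meters
let boxArea = boundingBoxArea(lShapedFloor)   // 12 square meters
print("Bounding box over-maps by \(boxArea - outlineArea) square meters")
```

For this L-shaped outline the bounding box reports 2 square meters of floor that does not exist, which is the kind of coarse over-mapping the molded overlay avoids.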

With reference to the schedule, I continue to be on track with my tasks. I plan to work with Harshul on improving the augmented reality component, specifically erasing/marking covered areas. I want to find ways to save an experience (i.e., freeze the frame mapping) and then add/erase components on the frozen overlay. To do this, I will need to get the dimensions of the vacuum, see what area the vacuum actually covers, and work out how best to capture those markings in the overlay with augmented reality technologies.
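
One way the coverage markings could eventually be tracked, offered only as a sketch under assumptions, is to divide the frozen floor plane into a grid of small cells and mark the cells that fall under the vacuum's footprint at each tracked position. The `CoverageGrid` type, the circular footprint, the 0.25 m cell size, and the 0.5 m vacuum width below are all placeholders; the real dimensions still have to be measured.

```swift
/// Hypothetical coverage tracker: a grid of square cells laid over the floor plane.
struct CoverageGrid {
    struct Cell: Hashable {
        let col: Int
        let row: Int
    }

    /// Side length of one cell, in meters.
    let cellSize: Double
    private(set) var covered = Set<Cell>()

    init(cellSize: Double) {
        precondition(cellSize > 0, "cellSize must be positive")
        self.cellSize = cellSize
    }

    /// Marks every cell whose center lies within half the vacuum width of (x, z).
    /// The footprint is approximated as a circle, which is an assumption.
    mutating func mark(x: Double, z: Double, vacuumWidth: Double) {
        precondition(vacuumWidth >= 0, "vacuumWidth must not be negative")
        let radius = vacuumWidth / 2
        let minCol = Int(((x - radius) / cellSize).rounded(.down))
        let maxCol = Int(((x + radius) / cellSize).rounded(.down))
        let minRow = Int(((z - radius) / cellSize).rounded(.down))
        let maxRow = Int(((z + radius) / cellSize).rounded(.down))
        for col in minCol...maxCol {
            for row in minRow...maxRow {
                let centerX = (Double(col) + 0.5) * cellSize
                let centerZ = (Double(row) + 0.5) * cellSize
                let dx = centerX - x
                let dz = centerZ - z
                if dx * dx + dz * dz <= radius * radius {
                    covered.insert(Cell(col: col, row: row))
                }
            }
        }
    }

    /// True if the cell containing (x, z) has been marked.
    func isCovered(x: Double, z: Double) -> Bool {
        let cell = Cell(col: Int((x / cellSize).rounded(.down)),
                        row: Int((z / cellSize).rounded(.down)))
        return covered.contains(cell)
    }

    /// Total marked area in square meters.
    var coveredArea: Double {
        Double(covered.count) * cellSize * cellSize
    }
}

// Placeholder values: 0.25 m cells and a 0.5 m wide vacuum.
var grid = CoverageGrid(cellSize: 0.25)
grid.mark(x: 0.125, z: 0.125, vacuumWidth: 0.5)
print("Covered so far: \(grid.coveredArea) square meters")
```

Because the grid lives in the plane's own coordinates rather than in screen space, marked cells would stay put when the floor leaves and re-enters the camera frame, provided the underlying map is kept. Smaller cells give finer markings at the cost of more cells to store and draw.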