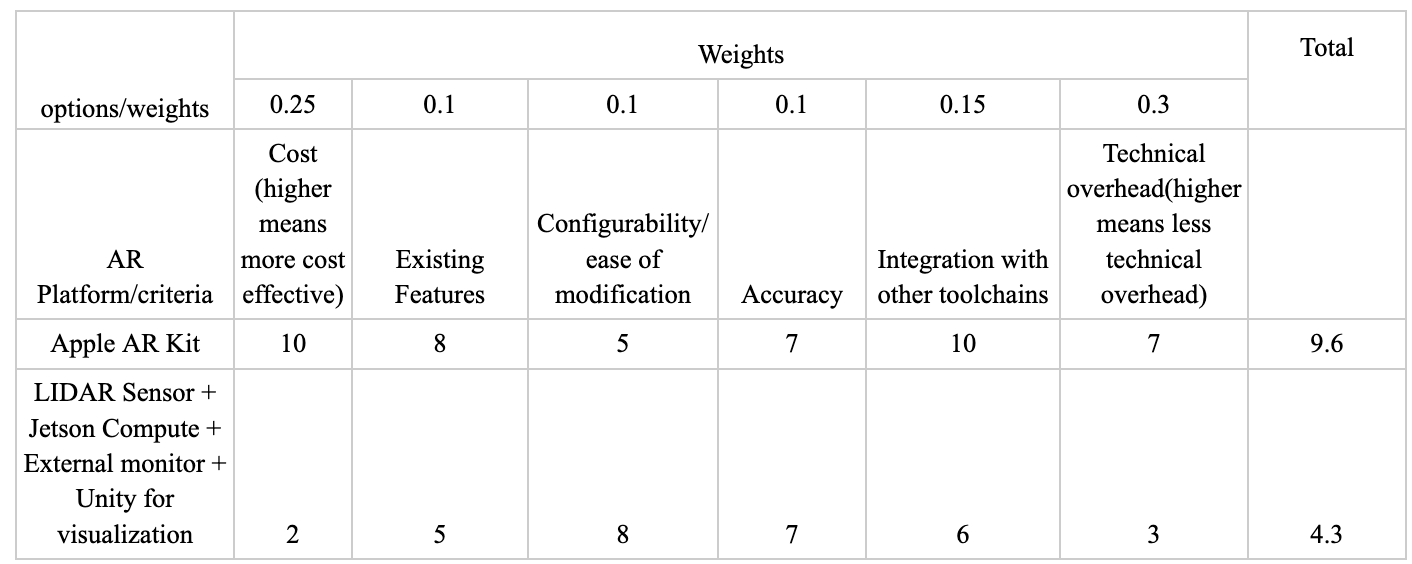

This week I spent most of my time on the design report and on the ARKit applications on the iPhone. In the report I focused primarily on the introduction, the system architecture, the design trade studies, and the implementation-details sections on object placement and the software implementation. The architecture work involved refining the architecture from the design presentation. I clearly identified subsystems and components so that progress and tasks are easier to quantify, and I clearly defined subsystem boundaries and functionality for system integration.

We had already discussed tradeoffs in our component selection, but the trade studies now quantify the testing and research we had done. Each study is a comparison matrix with weighted metrics that quantitatively demonstrates the rationale behind our component selection. We also performed a tradeoff analysis on whether to purchase or custom fabricate certain components. Below is an example of one of the trade studies, which uses a 1-10 Likert scale with weights that sum to 1.0.

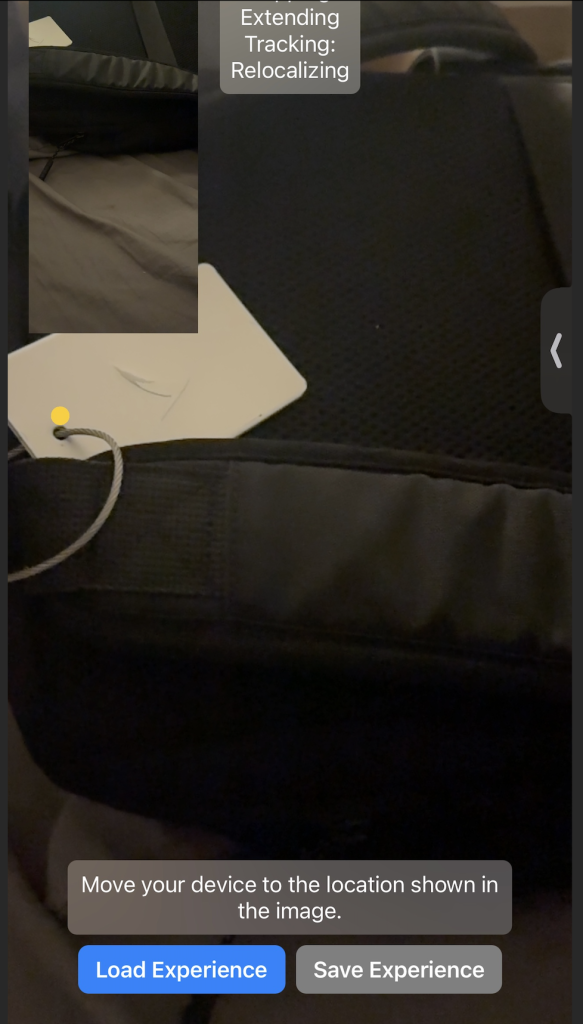

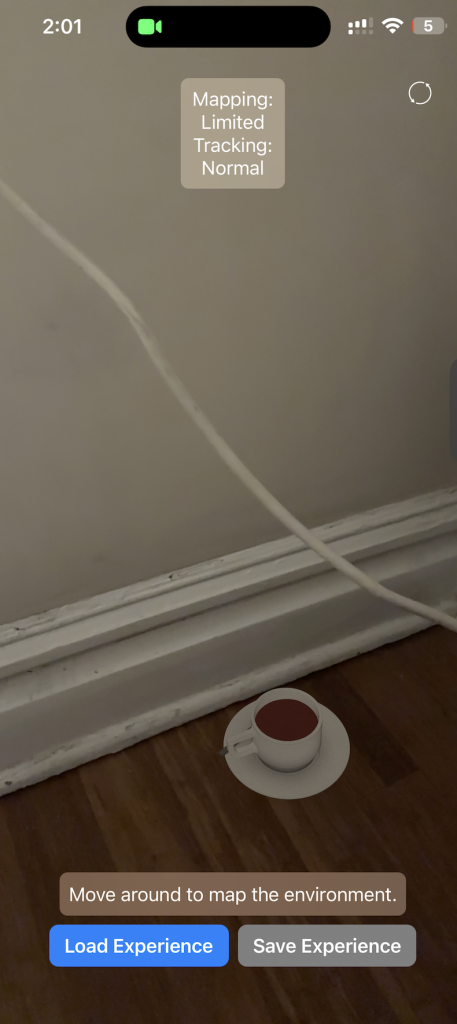
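The weighted scoring behind these trade studies can be sketched in plain Swift. This is a minimal illustration, assuming each candidate gets one 1-10 Likert score per criterion and the criterion weights sum to 1.0. The `Candidate` type, the `weightedTotals` function, and all criteria, weights, and scores below are hypothetical placeholders, not the values from our report.

```swift
/// A candidate component and its 1-10 Likert score for each criterion,
/// listed in the same order as the weights.
struct Candidate {
    let name: String
    let scores: [Double]
}

/// Weighted total for each candidate: the sum of weight_i * score_i.
/// Because the weights sum to 1.0, each total stays on the 1-10 scale.
func weightedTotals(weights: [Double], candidates: [Candidate]) -> [(name: String, total: Double)] {
    precondition(abs(weights.reduce(0, +) - 1.0) < 1e-9, "weights must sum to 1.0")
    return candidates.map { candidate -> (name: String, total: Double) in
        precondition(candidate.scores.count == weights.count, "need one score per criterion")
        precondition(candidate.scores.allSatisfy { $0 >= 1 && $0 <= 10 }, "scores use a 1-10 scale")
        let total = zip(weights, candidate.scores).map { $0 * $1 }.reduce(0, +)
        return (name: candidate.name, total: total)
    }
}

// Hypothetical criteria (accuracy, cost, integration effort) and scores,
// for illustration only; these are not the values from the report.
let weights = [0.5, 0.3, 0.2]
let candidates = [
    Candidate(name: "Option A", scores: [8, 5, 7]),
    Candidate(name: "Option B", scores: [6, 9, 8]),
]

// Rank candidates from highest to lowest weighted total.
let ranked = weightedTotals(weights: weights, candidates: candidates)
    .sorted { $0.total > $1.total }
for row in ranked {
    print(row.name, row.total)
}
```

With these placeholder numbers, Option B scores about 7.3 against Option A's 6.9: its advantages on cost and integration effort outweigh its lower accuracy score. Keeping the weights normalized to 1.0 is what lets the totals be read directly on the same 1-10 scale as the individual scores.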

With respect to implementation details, I ran an ARKit world tracking session as a baseline to test whether we can save existing map information and reload it, so that we do not have to localize from scratch every time. This feature would let us map out the space once up front to improve overall accuracy. The second feature this app tested was placing an object anchored to a specific point in the world. This tests both the user's ability to place an input and the ARKit API's ability to anchor an object to a specific coordinate in the world map, then store and reload that anchor dynamically without re-localizing from scratch. From here we plan to integrate this with the plane detection feature outlined in the next subsection, and to test mapping a full texture onto a plane in the map instead of just placing an object into the world. This is shown in the images below.
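The save/reload and anchor-placement flow described above can be sketched with ARKit's world-map persistence APIs (iOS 12+). This is a minimal sketch, not the code from our test app: the `WorldMapStore` class, the `placeAnchor` helper, the file location, and the anchor name `"placedObject"` are hypothetical. It uses `ARSession.getCurrentWorldMap`, `NSKeyedArchiver`/`NSKeyedUnarchiver` for serialization, and `ARWorldTrackingConfiguration.initialWorldMap` to relocalize against a saved map.

```swift
import ARKit

/// Hypothetical helper: persists an ARWorldMap to disk and restores it so a
/// new session can relocalize against a previously scanned space.
final class WorldMapStore {
    let fileURL: URL

    init(fileURL: URL) {
        self.fileURL = fileURL
    }

    /// Captures the session's current world map (which includes the session's
    /// anchors) and writes it to `fileURL`.
    func save(from session: ARSession, completion: @escaping (Error?) -> Void) {
        session.getCurrentWorldMap { worldMap, error in
            guard let map = worldMap else {
                completion(error)
                return
            }
            do {
                let data = try NSKeyedArchiver.archivedData(withRootObject: map,
                                                            requiringSecureCoding: true)
                try data.write(to: self.fileURL, options: .atomic)
                completion(nil)
            } catch {
                completion(error)
            }
        }
    }

    /// Loads a previously saved map and restarts tracking with it, so ARKit
    /// relocalizes against the stored features instead of mapping from scratch.
    func restore(into session: ARSession) throws {
        let data = try Data(contentsOf: fileURL)
        guard let map = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                               from: data) else {
            throw CocoaError(.fileReadCorruptFile)
        }
        let config = ARWorldTrackingConfiguration()
        config.planeDetection = [.horizontal]
        config.initialWorldMap = map
        session.run(config, options: [.resetTracking, .removeExistingAnchors])
    }
}

/// Places a named anchor at a world-space transform (for example, from a
/// raycast hit). Anchors added to the session are saved with the next world map.
func placeAnchor(in session: ARSession, at transform: simd_float4x4) -> ARAnchor {
    let anchor = ARAnchor(name: "placedObject", transform: transform)
    session.add(anchor: anchor)
    return anchor
}
```

In practice it is worth checking that the current `ARFrame`'s `worldMappingStatus` is `.mapped` (or at least `.extending`) before saving, since a map captured while mapping is still `.limited` may relocalize poorly.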

This was a key proof of concept for our ability to map and localize within the environment, and combining this approach with Nathalie’s work on plane detection is a key next step. This mapping work also let me help define the test metric for mapping coverage. Drawing on research and my existing knowledge of the API, I outlined how to use planeExtent to extract the dimensions of a plane and how to compute point-to-point distances in AR. Together these form the mapping coverage test, which checks that the dimensional error and drift error on the planes are within the bounds specified by our requirements.
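The coverage test outlined above can be sketched in plain Swift so the pass/fail logic runs without a device. On device, the measured width and height would come from `ARPlaneAnchor.planeExtent.width` and `.height` (iOS 16+), and point positions from the translation column of an anchor's `transform` (`transform.columns.3`); here they are passed in as plain values. The `CoverageTolerance` type, the tolerance numbers, and the definition of drift as the displacement of one fixed reference point between two observations are assumptions for illustration, not our actual requirement bounds.

```swift
/// Hypothetical tolerances; the real bounds come from the project requirements.
struct CoverageTolerance {
    let maxDimensionalError: Float   // relative error, e.g. 0.05 = 5%
    let maxDrift: Float              // meters
}

/// Euclidean distance between two points in AR world space (meters).
/// On device, `simd_distance` from the simd module computes the same value.
func distance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d * d).sum().squareRoot()
}

/// Relative error of a measured dimension against a tape-measured reference.
func relativeError(measured: Float, reference: Float) -> Float {
    abs(measured - reference) / reference
}

/// Passes if the plane's width and height match the reference within tolerance
/// and a fixed reference point has not drifted between two observations.
func planePassesCoverage(measuredWidth: Float, measuredHeight: Float,
                         referenceWidth: Float, referenceHeight: Float,
                         pointBefore: SIMD3<Float>, pointAfter: SIMD3<Float>,
                         tolerance: CoverageTolerance) -> Bool {
    let widthOK = relativeError(measured: measuredWidth, reference: referenceWidth)
        <= tolerance.maxDimensionalError
    let heightOK = relativeError(measured: measuredHeight, reference: referenceHeight)
        <= tolerance.maxDimensionalError
    let driftOK = distance(pointBefore, pointAfter) <= tolerance.maxDrift
    return widthOK && heightOK && driftOK
}

// Example with made-up numbers: a 3.0 m x 1.5 m floor region measured as
// 2.95 m x 1.52 m, with a reference point that moved 1 cm between observations.
let tol = CoverageTolerance(maxDimensionalError: 0.05, maxDrift: 0.02)
let ok = planePassesCoverage(measuredWidth: 2.95, measuredHeight: 1.52,
                             referenceWidth: 3.0, referenceHeight: 1.5,
                             pointBefore: SIMD3<Float>(0, 0, 0),
                             pointAfter: SIMD3<Float>(0.01, 0, 0),
                             tolerance: tol)
print("coverage check passed:", ok)
```

Using relative error for dimensions keeps one tolerance meaningful across planes of different sizes, while drift is checked in absolute meters because a fixed offset matters equally wherever the texture is projected.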

With respect to the timeline, things are on track. Certain component orders have been moved around on the Gantt chart, but AR development is going well. The key next step is to take our proof-of-concept applications and integrate them into a unified app that can localize, select a floor plane, and then mesh a projected texture onto that plane.