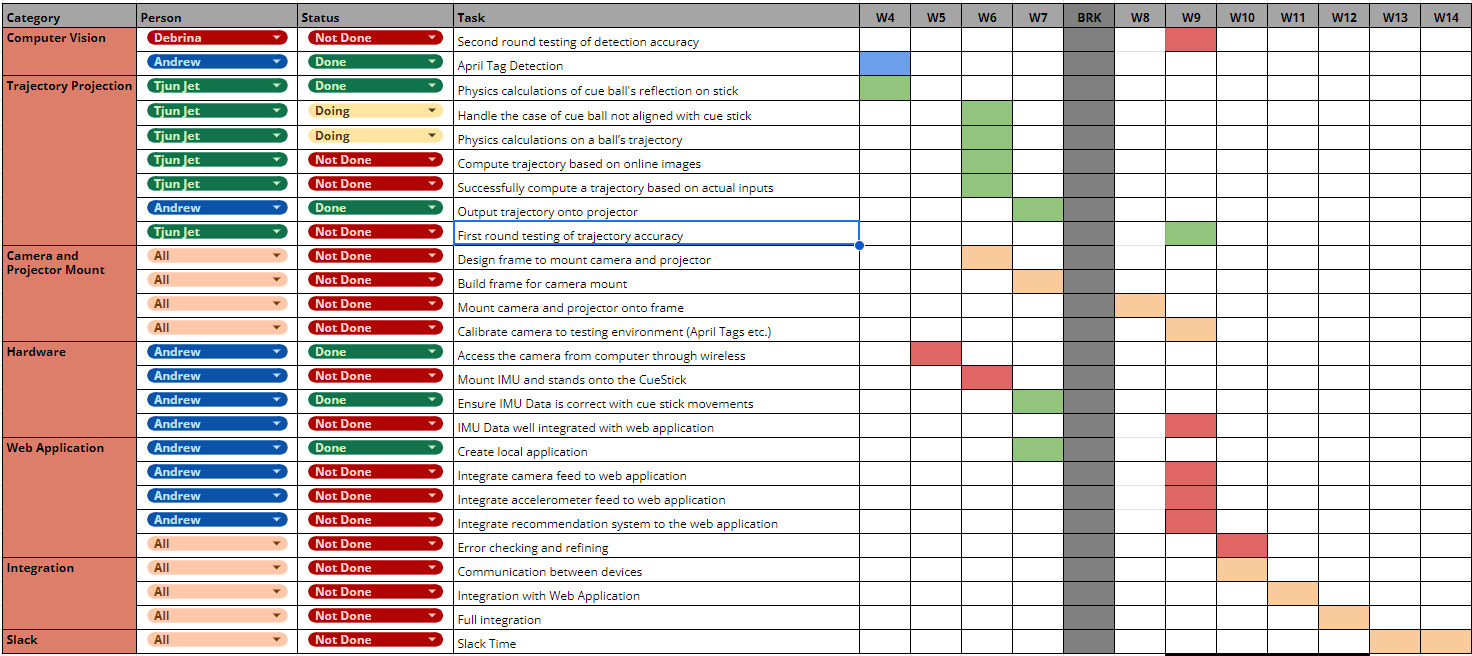

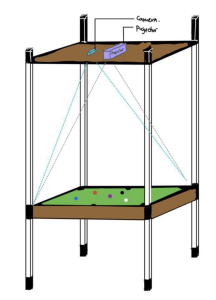

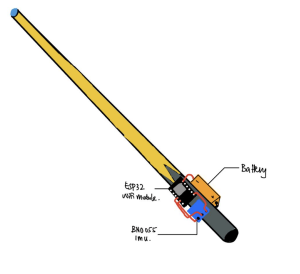

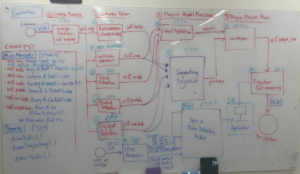

This week we stayed on schedule. We worked on the web application and refined the cue stick system. The web application has a frontend (React) and a backend (Flask/SQLite), although we are considering phasing out the backend if Wi-Fi is fast enough to send data directly from the ESP32 to the frontend. The Arduino Nano was a convenient way to test the software over a wired connection, but we have now phased it out and use only the ESP32 module, which acts as a server and exposes API endpoints that return the IMU's gyroscope and accelerometer data.

We also calibrated our projector, tested the camera, and adjusted the height of various components. The projector needed to be high enough to display over the entire pool table, and the camera needed to be high enough for the table to fit almost exactly within its field of view (FOV) in each frame. After testing, we decided that the projector and camera needed to be much higher relative to the table, so we lowered the pool table instead. Our structure made this easy: the metal shelf frame has notches, so we moved the pool table down to lower notches to increase the vertical distance between the table and the camera/projector.

In addition, we spent a good chunk of this week writing the Design Review report. This was a significant effort, as the report spanned 11 pages, and we split the writing evenly. Debrina wrote the introduction, use case requirements, and design requirements; Andrew wrote the abstract, design trade studies, testing & verification, and part of the risk mitigation; Tjun Jet wrote the rest. This was also a good checkpoint, since it forced us to document in detail all the work we have finished so far and to assess our next steps.
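To make the height requirement concrete, here is a minimal Python sketch of the mounting geometry. It assumes an ideal downward-facing pinhole camera centered over the table with no lens distortion, and a projector described only by its throw ratio (throw distance divided by image width). The function names and all numbers are hypothetical illustrations, not measurements from our setup.

```python
import math


def camera_min_height(table_width, table_length, hfov_deg, vfov_deg):
    """Minimum height (same units as the table) for a downward-facing pinhole
    camera centered over the table to see the whole playing surface.

    Assumes the table's long side lies along the camera's horizontal axis
    and ignores lens distortion (hypothetical simplifications).
    """
    if not (0 < hfov_deg < 180 and 0 < vfov_deg < 180):
        raise ValueError("FOV angles must be between 0 and 180 degrees")
    # At distance h, a pinhole camera covers 2 * h * tan(fov / 2) along each
    # axis, so an extent fits once h >= extent / (2 * tan(fov / 2)).
    h_for_length = table_length / (2 * math.tan(math.radians(hfov_deg) / 2))
    h_for_width = table_width / (2 * math.tan(math.radians(vfov_deg) / 2))
    # Both axes must fit, so the larger height is the binding constraint.
    return max(h_for_length, h_for_width)


def projector_throw_distance(image_width, throw_ratio):
    """Distance at which a projector with the given throw ratio
    (distance / image width) produces an image `image_width` wide."""
    return throw_ratio * image_width
```

For example, with a hypothetical 1.0 m × 0.5 m playing surface and a camera with a 60° horizontal and 45° vertical FOV, `camera_min_height(0.5, 1.0, 60, 45)` gives roughly 0.87 m, with the table's long side as the binding constraint. In practice a small margin would be added, and projectors with lens offset will not follow the simple throw-ratio relation exactly.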

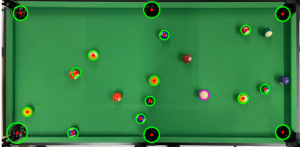

A risk that could jeopardize the project is the detection of the cue stick. Detecting the stick without any machine learning has proven difficult, and contour and color detection alone do not give consistent results. Adding more processing layers and more sophisticated heuristics may improve detection. However, if we still cannot detect the cue stick with high accuracy, we will switch to a more reliable solution such as AprilTags. The infrastructure for AprilTag detection is already built into our system, so in the worst case we can attach AprilTags to the cue stick so that the camera detects it easily. This is our main contingency plan for this problem.
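As an illustration of the kind of heuristic layer mentioned above, the sketch below scores a candidate blob by how elongated it is, using principal component analysis (the eigenvalues of the 2×2 covariance matrix of its pixel coordinates). It assumes the candidate pixels come from an earlier color or contour step; the function names and the `min_elongation` and `min_points` thresholds are hypothetical values that would need tuning, and this is not necessarily the method our pipeline uses. OpenCV's `cv2.minAreaRect` or `cv2.fitLine` could provide similar orientation information.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def principal_axis(points: Sequence[Point]) -> Tuple[float, float]:
    """Return (angle_radians, elongation) for a set of 2D pixel coordinates.

    angle is the major-axis orientation measured from the x-axis; elongation
    is the ratio of standard deviations along the major and minor axes.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (sxx + syy) / 2
    det = sxx * syy - sxy * sxy
    disc = math.sqrt(max(half_trace * half_trace - det, 0.0))
    lam_max = half_trace + disc
    lam_min = half_trace - disc
    # Orientation of the eigenvector that belongs to lam_max.
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    if lam_min <= 1e-12:
        # All points (numerically) on one line: treat as infinitely elongated.
        return angle, math.inf
    return angle, math.sqrt(lam_max / lam_min)


def looks_like_cue_stick(points: Sequence[Point],
                         min_elongation: float = 8.0,
                         min_points: int = 20) -> bool:
    """Hypothetical heuristic: accept a blob if it is big enough and long/thin enough."""
    if len(points) < min_points:
        return False
    _, elongation = principal_axis(points)
    return elongation >= min_elongation


if __name__ == "__main__":
    # Synthetic 100 x 5 pixel strip (long and thin) vs. a 10 x 10 square blob.
    strip = [(x, y) for x in range(100) for y in range(5)]
    square = [(x, y) for x in range(10) for y in range(10)]
    print(looks_like_cue_stick(strip))   # True
    print(looks_like_cue_stick(square))  # False
```

A single elongation score like this would be one layer among several; it rejects round blobs such as balls but would still need color and position checks to reject other long, thin objects like the table rails.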

Part A (written by Andrew):

The production solution we are designing will meet the need for an intuitive pool training system. In terms of global factors, pool is played by people all over the world, with origins dating back to the 1300s. As such, we need a system that is culture- and language-agnostic: it should work as well for someone in the United States as for someone in France or Italy. This requires our product to rely on as little country-specific information and context as possible, which is something we must think about carefully. Since we live in the United States, we must think critically about our implicit biases. For instance, we designed our product to rely heavily on visual feedback instead of text. The trajectory prediction in particular is independent of country, culture, and language, so it will meet worldwide contexts and factors. Additionally, we kept the system as simple as possible, requiring minimal effort from the user. This helps it accommodate the different variations of pool and also aids players who are less technologically savvy; a more complicated or convoluted primary feedback method would have been a barrier for them.

Part B (written by Debrina):

The production solution we are designing meets the need for a pool training system with an intuitive user interface. Our consideration of cultural factors is similar to that of global factors. Our training system makes no assumptions about which languages our users speak, nor about their level of language proficiency. The feedback provided by our pool training system is purely visual, which makes its interface easy to understand for users of all cultures and backgrounds. Our product also has the potential to increase the accessibility and popularity of billiards in cultures where the game is less widespread. Currently, billiards is more popular in some countries and cultures than others (the United States, the United Kingdom, and the Philippines, to name a few), and our intuitive user interface could help promote the game elsewhere. The versatility of our product gives people of all cultures an opportunity to learn to play billiards.

Part C (written by Tjun Jet):

CueTips considers various environmental factors in our choice of materials, our power consumption, and the modularity and reusability of our product. When selecting the material for the frame that holds our pool table, we considered not only its biodegradability but also its lifespan. We bought a frame consisting of wooden boards to hold the pool table and a metal frame as the stand. We initially considered building our own frame from plywood planks, but decided against it when we realized that prototyping and rebuilding the frame repeatedly could waste a lot of material. Furthermore, our frame is modular and reusable, meaning it is easy to take apart and rebuild. For instance, a consumer moving the pool table to a new location can easily take it apart and reassemble it elsewhere without incurring large transportation costs. To ensure appropriate lighting for camera detection, we also lined the frame with NeoPixel LEDs. LEDs such as NeoPixels are generally more energy-efficient than traditional incandescent lighting, and when used efficiently they minimize unnecessary power consumption. Thus, by carefully selecting biodegradable materials, choosing energy-efficient lighting, and making our entire product easily transportable, modular, and reusable, CueTips aims to provide a pleasant yet environmentally friendly pool learning experience.