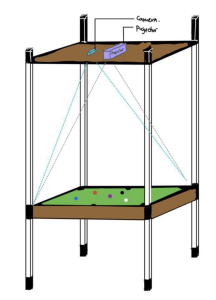

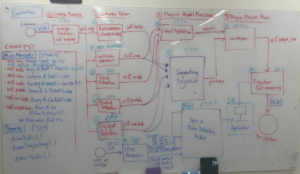

In the past week, I worked on designing test suites to measure ball prediction accuracy and shot calculation accuracy. I also helped build the mount for the camera and projector on the shelf. Finally, I worked on the slides for our final presentation.

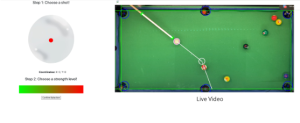

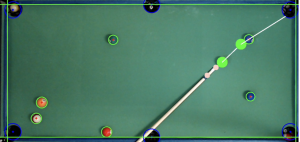

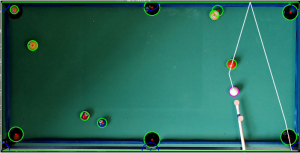

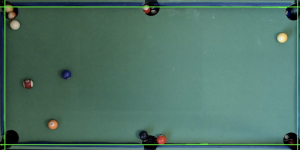

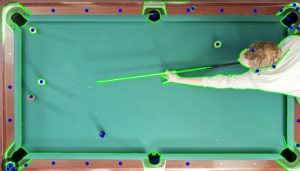

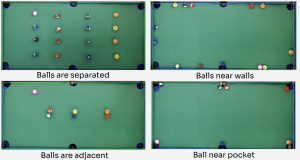

For testing and verification, we needed to measure two things: the accuracy of our computer vision ball prediction and the accuracy of our shot calculations. I helped design test suites for both.

To check whether our ball prediction was accurate, we designed a test suite covering four layouts: isolated balls, balls near the pockets, balls adjacent to each other, and balls near the walls. We then projected the predicted balls onto the table and measured the average distance, across all balls, between each actual ball and its predicted position. Our use case requirement was that this distance be under 0.2 inches, and we achieved an average of less than 0.05 inches. Here are pictures of our test suite:

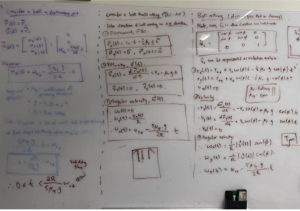

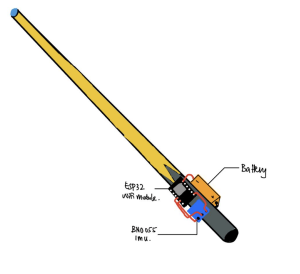

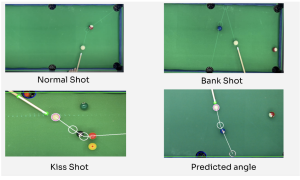
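The distance metric described above can be sketched as follows. This is a minimal illustration, not our actual test harness. It assumes each ball's predicted and actual centers have already been measured in inches on the table surface and paired up by ball. It also assumes the 0.2 inch requirement applies to the average error. The names `position_errors` and `evaluate` are hypothetical.

```python
import math
from statistics import mean

# Use case requirement from the report, in inches.
# Assumption: the threshold is applied to the average error.
REQUIREMENT_IN = 0.2


def position_errors(predicted, actual):
    """Return the Euclidean distance between each predicted and actual ball center.

    Assumes predicted[i] and actual[i] are (x, y) coordinates, in inches,
    for the same ball.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must contain the same number of balls")
    return [math.dist(p, a) for p, a in zip(predicted, actual)]


def evaluate(predicted, actual, requirement=REQUIREMENT_IN):
    """Return (average error, worst error, whether the average meets the requirement)."""
    errors = position_errors(predicted, actual)
    avg = mean(errors)
    return avg, max(errors), avg < requirement
```

Each layout (isolated balls, balls near pockets, adjacent balls, balls near walls) would be evaluated separately. Reporting the worst-case error alongside the average helps show whether a single layout, such as balls near the walls, is skewing the results.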

Similarly, for shot calculation accuracy, we performed two different tests. The first test involved taking 20 shots of three different types (normal shots, bank shots, and kiss shots) and measuring the accuracy of our calculations for each type. Here is what our test suites looked like:

To make these tests possible, we spent a good portion of the week mounting and aligning the camera and projector on the shelf we bought. This setup lets the user see the predicted trajectory on the pool table. I also spent a significant amount of time working on the presentation slides and preparing for my final presentation.
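The per-type accuracy from the shot calculation tests could be tallied as in the following minimal sketch. It assumes each trial is recorded as a `(shot_type, success)` pair. How "success" is judged, for example whether the ball followed the calculated path into the intended pocket, is an assumption and not something this report specifies. The function name `accuracy_by_type` is hypothetical.

```python
from collections import defaultdict


def accuracy_by_type(trials):
    """Compute the fraction of successful shots for each shot type.

    trials: iterable of (shot_type, success) pairs, e.g. ("bank", True).
    Assumption: `success` is a boolean judged by whoever runs the test.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for shot_type, success in trials:
        totals[shot_type] += 1
        if success:
            hits[shot_type] += 1
    # Only types that actually appear in the trials are reported.
    return {shot_type: hits[shot_type] / totals[shot_type] for shot_type in totals}
```

Grouping results by shot type makes a weak category, such as bank shots, stand out. It does not disappear into a single overall accuracy number.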

This week, I also continued working on the web application that helps users choose spin and velocity. It provides recommendations based on whether users actually achieved the spin and velocity they selected.

I am currently on schedule to finish my tasks. From now until demo day, I plan to continue running the test suites described above. Because the accuracy of our bank shots was relatively low, I want to improve it and make sure we get good results before demo day. I also hope to finish the web application and, if time allows, work on spin and velocity calculations to show on demo day.