These past two weeks, I did a lot of collaborative group work integrating all the parts required for a full implementation of the rover. For instance, I helped solder the third pump for our suction arm, as seen here. I also contributed to the group’s investigation of multiprocessing in Python.

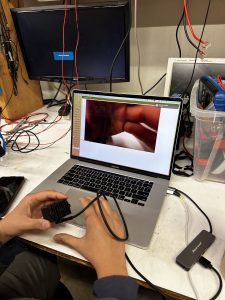

In addition, I helped set up a testing suite for analyzing the coordinates and bounding box dimensions in our object detection pipeline and for working out what each of these variables refers to. The testing suite can be seen below. I took detection.y, ymin, ymax, and other similar coordinates and compared them against physical measurements made with a ruler to determine how the camera is calibrated.

I also investigated abandoning the neural network altogether and instead using OpenCV thresholding, contouring, and rectangle-creating functions on the depth color map to extract any object that is close enough. However, this idea fell short: as we get closer to an object, the floor also falls within our desired z-distance threshold, so the heatmap shows the floor as being just as close as the object. If I were to manually create a bounding box, it would also include the floor, and there would be no way to differentiate the two. In addition, as the video frame shows, I saw some blips on the heatmap that registered as close but were not part of the object. These small blips could cause issues if I were to threshold the frame, since they would not be greyed out.
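The floor problem can be reproduced on a synthetic depth frame. The sketch below is only an illustration under stated assumptions: the depth values are made up rather than taken from our camera, the 400 mm threshold and the helper names `near_mask` and `bounding_box` are hypothetical, and plain NumPy stands in for the OpenCV thresholding and bounding-rectangle calls to keep the example self-contained.

```python
import numpy as np

def near_mask(depth_mm, max_mm):
    """Boolean mask of pixels with a valid (non-zero) depth closer than max_mm."""
    return (depth_mm > 0) & (depth_mm < max_mm)

def bounding_box(mask):
    """Smallest box (xmin, ymin, xmax, ymax) covering every True pixel, or None."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

# Synthetic 100x100 depth frame in millimetres (made-up values, not camera data).
depth = np.full((100, 100), 2000, dtype=np.uint16)  # far background
depth[30:60, 40:60] = 300                            # "object" about 30 cm away

# Floor: the bottom 30 rows get closer to the camera toward the bottom edge.
floor_profile = np.linspace(800, 250, 30).astype(np.uint16)
depth[70:100, :] = floor_profile[:, None]

THRESHOLD_MM = 400  # hypothetical z-distance threshold

# Ignoring the floor rows, the box wraps the object tightly.
box_object_only = bounding_box(near_mask(depth[:70], THRESHOLD_MM))

# On the full frame, the nearest floor rows pass the same threshold,
# so the box stretches across the full width and down to the bottom edge.
box_with_floor = bounding_box(near_mask(depth, THRESHOLD_MM))

print("object only:", box_object_only)
print("with floor: ", box_with_floor)
```

A depth threshold alone cannot separate the two regions because both satisfy the same condition; any fix would need extra information, such as the camera's height and tilt, which is beyond what this sketch attempts.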

My progress is flip-flopping between on schedule and a little bit behind, mainly because integration tests surface problems that did not appear in unit tests, such as lower accuracy from the object detection pipeline. Since we budgeted time for this, I consider my progress to be on schedule.

The main deliverable I hope to complete in the next week is tuning the object detection pipeline so that when we perform validation tests, we meet the accuracy threshold described in our use case requirements. Additionally, I hope to work with Hayden to successfully transmit the camera feed back to the monitor.

Throughout the semester, acquiring new knowledge was a major part of my work because I had to learn and get used to the depthai module, its features, and its programming paradigm. To use the module successfully, I needed to learn quickly, which I did by sifting through the wide variety of examples created by Luxonis, the company behind depthai. I used their depthai-examples repository, which had example programs for every feature of the camera, from spatial detection to object tracking and depth. Seeing example programs allowed me to get acclimated to the module quickly. In addition, when searching the internet for help with my error messages, I found forum posts and discussions that helped my debugging process. This was especially useful because the camera is so new and changes frequently between versions and models.

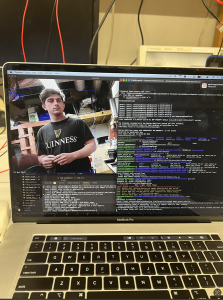

We can see a bounding box around Hayden, demonstrating that the pipeline is able to make detections. A closer glimpse into the code can be seen here:

(I commanded the arm to go 20 cm away)
