This week brought a landslide of new developments: many bad, a few very good.

Sunday night, we discovered that our belt drive would not work with the motors we bought for our project. As such, we switched to casters on the front for smoother driving. Given the nature of casters, rotation is now inconsistent. Unfortunately, that means we'll need to use the camera as feedback for movement and drive using only the DC motors, which have no encoders. I designed the holders for the casters, and we mounted them Wednesday night.

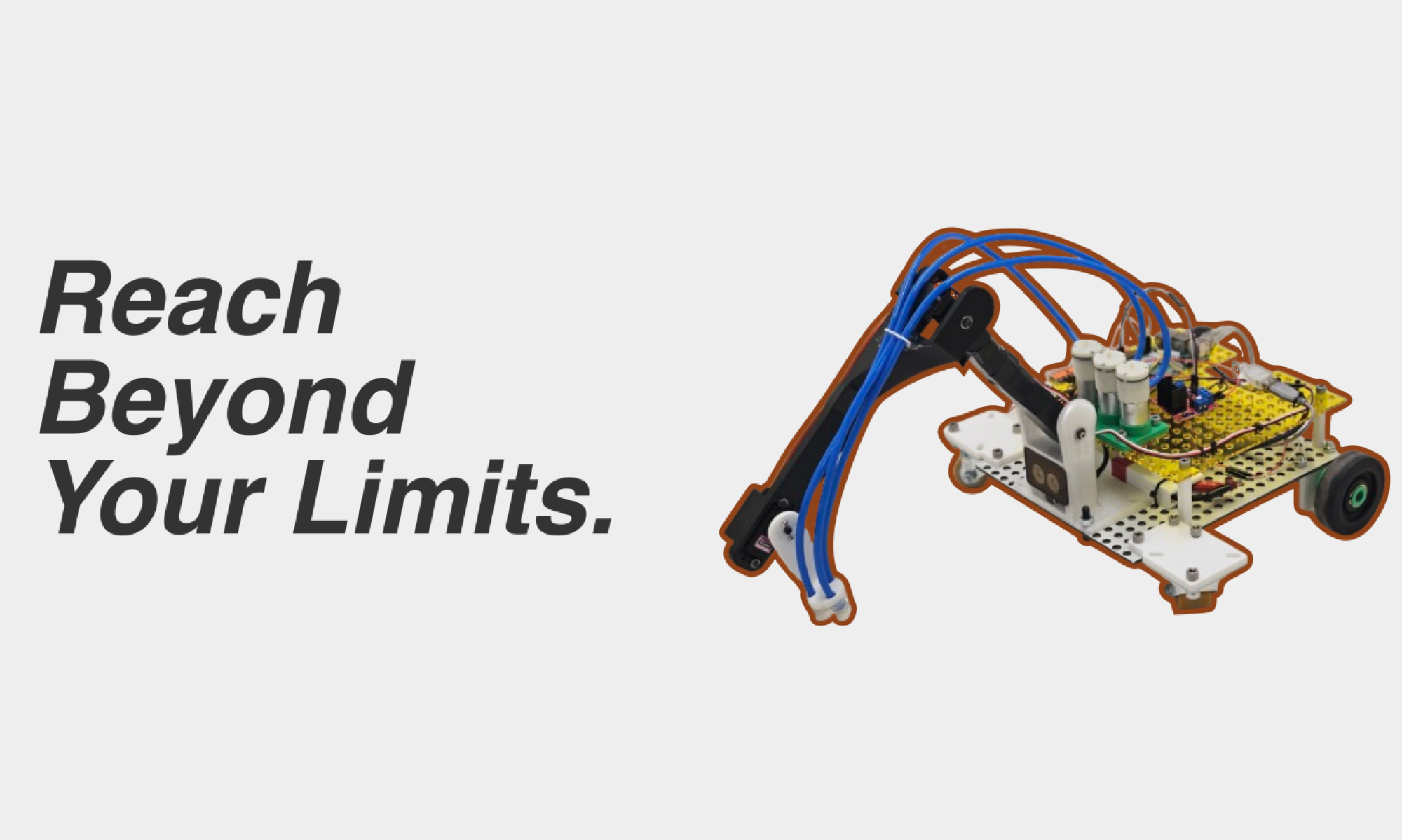

Wednesday morning brought more bad news: we discovered that, after manufacturing, one of our servos had broken, likely from mishandling. That meant I needed to rebuild part of the arm. Around the same time, the heat-set inserts in our arm began to fall apart, and the arm itself started to come apart. After verifying late Wednesday night that our rover could drive more smoothly, I confirmed once more on Thursday that one of our servos was broken, and debated a redesign. Unfortunately for my sleep schedule, I saw quite a lot of improvements that could be made to the initial design, and began redesigning the entire robot front end. By early Friday morning, the new and improved HomeRover was designed:

A couple of things you'll notice. This arm has far fewer moving parts, and with Roboclub's fast Bambu printers, print times for each part were much shorter than I expected. The camera is now at the x-center of the Rover. This is very important: when we align with the object of interest, we no longer need more complex math, and we can simplify our kinematics even further. The arm is also much more structurally sound, with double-supported bearings on the more critical joints allowing for smooth movement. Now I hear you asking: Varun, is this going to add another two weeks of manufacturing time to HomeRover?

Nope! In less than 24 hours, fast printers and pre-established tolerances allowed me to fabricate the entire new and improved HomeRover arm. Today, I sourced the rings that hold the wheels in place (so they won't fall off so much). I positioned the servos so that they would not break at rest, and improved the servo at the end of the arm so that it can manipulate the final iPad more efficiently. In the end, we have a much better arm that will be more consistent. Touching on verification: I need to make sure that our arm moves consistently. While tuning our kinematics with Nathan, I need to make sure that the arm will not behave differently on demo day than it does during tuning, so I will be testing the entire subsystem soon.

Great, so now we have a robot arm that is theoretically consistent, but we really need to get it to move consistently. I'm working on that too! Now that the redesign has greatly simplified our problem, I was able to get my hands on a script that does the kinematics calculation for us.

With some very simple trigonometry, we have the AB and BC angles (shown above) that lead to the distances we need. Checking against our initial range: we wanted a reach of about 10-30 cm, and we have 11-36 cm (according to these calculations).
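For readers curious what that trigonometry might look like, here is a minimal sketch of two-link planar inverse kinematics. It is not the script mentioned above. The function names, the assumption that the arm is two rigid links moving in the Y-Z plane, and the 18 cm link lengths are all illustrative placeholders, not measured HomeRover values.

```python
import math

# Hypothetical link lengths in centimetres (placeholders, not measured values).
LINK_AB_CM = 18.0
LINK_BC_CM = 18.0


def two_link_ik(y, z, l1=LINK_AB_CM, l2=LINK_BC_CM):
    """Return (shoulder, elbow) angles in radians that place the arm tip at (y, z).

    Returns None when the target is outside the reachable region.
    The elbow angle is measured relative to the first link.
    """
    dist_sq = y * y + z * z
    # Law of cosines gives the cosine of the elbow angle.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0:
        return None  # too far away or too close for these link lengths
    elbow = math.acos(cos_elbow)
    # Angle to the target minus the offset introduced by the bent second link.
    shoulder = math.atan2(z, y) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1=LINK_AB_CM, l2=LINK_BC_CM):
    """Return the (y, z) tip position for the given joint angles."""
    y = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    z = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return y, z
```

Feeding the result of `two_link_ik(20.0, 0.0)` back into `forward_kinematics` should return a point very close to (20, 0), which is a cheap sanity check before commanding real servos. Mapping these angles onto actual servo positions would still need offsets and direction signs specific to the hardware.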

All of this is great, but I still need to get the kinematics actually implemented. That's a tomorrow issue 🙂

We are no longer nearly as far behind as I thought we would be, especially because the refabrication allows for that aforementioned simplification. By next week, I want to essentially have the HomeRover barebones functionality done, and I think it's possible. By tomorrow, my goal is to be able to manually send the Raspberry Pi on the Rover a Y and Z coordinate, and have the arm traverse correctly to that point and initiate the "pumps" (by printing out "I AM PUMPING" repeatedly into the terminal).
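As a rough picture of tomorrow's goal, the manual test could be structured something like the sketch below. Everything here is a hypothetical stand-in: `parse_target`, `move_arm`, and `handle_command` are made-up names, `move_arm` only reports the target instead of driving servos, and treating the 11-36 cm range as a limit on Y alone is an assumption.

```python
# Reach limits taken from the calculated 11-36 cm range; applying them
# to the Y coordinate only is an assumption made for this sketch.
MIN_REACH_CM = 11.0
MAX_REACH_CM = 36.0


def parse_target(text):
    """Parse a hand-typed 'Y Z' string (centimetres) into two floats."""
    parts = text.split()
    if len(parts) != 2:
        raise ValueError("expected two numbers: Y Z")
    return float(parts[0]), float(parts[1])


def move_arm(y_cm, z_cm):
    """Placeholder for the real servo command; it only describes the move."""
    return f"moving arm to y={y_cm:.1f} cm, z={z_cm:.1f} cm"


def handle_command(text, pump_count=3):
    """Return the lines the test would print for one typed coordinate."""
    y_cm, z_cm = parse_target(text)
    if not MIN_REACH_CM <= y_cm <= MAX_REACH_CM:
        return [
            f"target y={y_cm:.1f} cm is outside "
            f"{MIN_REACH_CM:.0f}-{MAX_REACH_CM:.0f} cm"
        ]
    lines = [move_arm(y_cm, z_cm)]
    # Stand-in for the pump mechanism: repeat the message a few times.
    lines += ["I AM PUMPING"] * pump_count
    return lines
```

On the Pi, something like `print("\n".join(handle_command(input("Y Z> "))))` in a loop would be enough to try coordinates by hand before the real servo and pump code replaces the placeholders.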

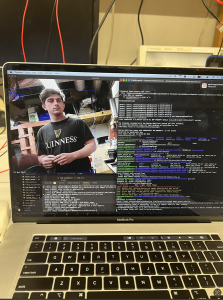

We can see a bounding box around Hayden, demonstrating that the pipeline is able to make detections. A closer glimpse into the code can be seen here:

(I commanded the arm to go 20 cm away)
