For the past two weeks, our team's main focus has been integration: connecting all of our separate subsystems. When connecting the object detection/depth map code to the code that instructs the motors to turn and move in a specific direction, we had difficulty parallelizing the two processes using either Python's multiprocessing or socket modules. The two control loops blocked each other, which kept us from making progress in either program. As described in Varun's status report, the final program is instead a single overarching program that acts as a state machine.

After fully assembling the rover and running integration tests that cover the full pipeline of navigation -> detection -> pickup, we found that the most significant risk lies on the detection side. Earlier in the week, we ran into an issue with the bounding box coordinates produced by the object detection pipeline: the y-coordinate it output did not appear to be the top of the object, but rather the center relative to some part of the camera, which caused our suction to undershoot because of inaccurate kinematics. After investigating the various dimensions and y-values in the code and comparing them to our hand measurements, we found that the detection.y value did reflect the top of the object, but its magnitude was the distance from the bottom of the camera. To manage this risk and improve our kinematics in the process, we plan to fine-tune a fixed offset applied to all y values so that we hit the top of the object, and to experiment with an additional variable offset based on the bounding box dimensions. We have started doing this and plan to run many more trials next week.

Another risk is the accuracy of the object detection, which falls below the standards defined in our design and use case requirements. A potential cause is that MobileNet-SSD has a small label database, so it may not cover all of the objects we want to pick up. Since we only need detection, not identification, a potential mitigation is to lower the confidence threshold of the detection pipeline, which we hope will improve detection accuracy.
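As a rough illustration of the two mitigations described above, here is a minimal Python sketch. It is not our actual pipeline code: the `Detection` fields, the function names, and every numeric value are hypothetical placeholders, and the real offsets still have to come from our trials.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """Hypothetical container for one detection; field names are assumptions."""
    label: str
    confidence: float   # detector confidence in [0, 1]
    y: float            # reported y of the top of the object
    box_height: float   # bounding box height, same units as y


def filter_detections(detections: List[Detection],
                      min_confidence: float) -> List[Detection]:
    """Keep every detection above the threshold, ignoring its label.

    We only need to know that an object is there, not what it is,
    so the label is never checked.
    """
    return [d for d in detections if d.confidence >= min_confidence]


def corrected_y(detection: Detection,
                hard_offset: float,
                height_gain: float) -> float:
    """Apply a fixed offset plus a variable offset scaled by box height.

    Both hard_offset and height_gain are tuning parameters to be found
    experimentally; the linear form is only one possible choice.
    """
    return detection.y + hard_offset + height_gain * detection.box_height


# Example with made-up numbers.
detections = [
    Detection(label="bottle", confidence=0.75, y=100.0, box_height=40.0),
    Detection(label="unknown", confidence=0.25, y=80.0, box_height=20.0),
    Detection(label="cup", confidence=0.125, y=60.0, box_height=10.0),
]
kept = filter_detections(detections, min_confidence=0.25)
targets = [corrected_y(d, hard_offset=12.0, height_gain=0.5) for d in kept]
```

Lowering `min_confidence` keeps more candidate objects at the cost of more false positives, so the threshold would need to be tuned alongside the offsets.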

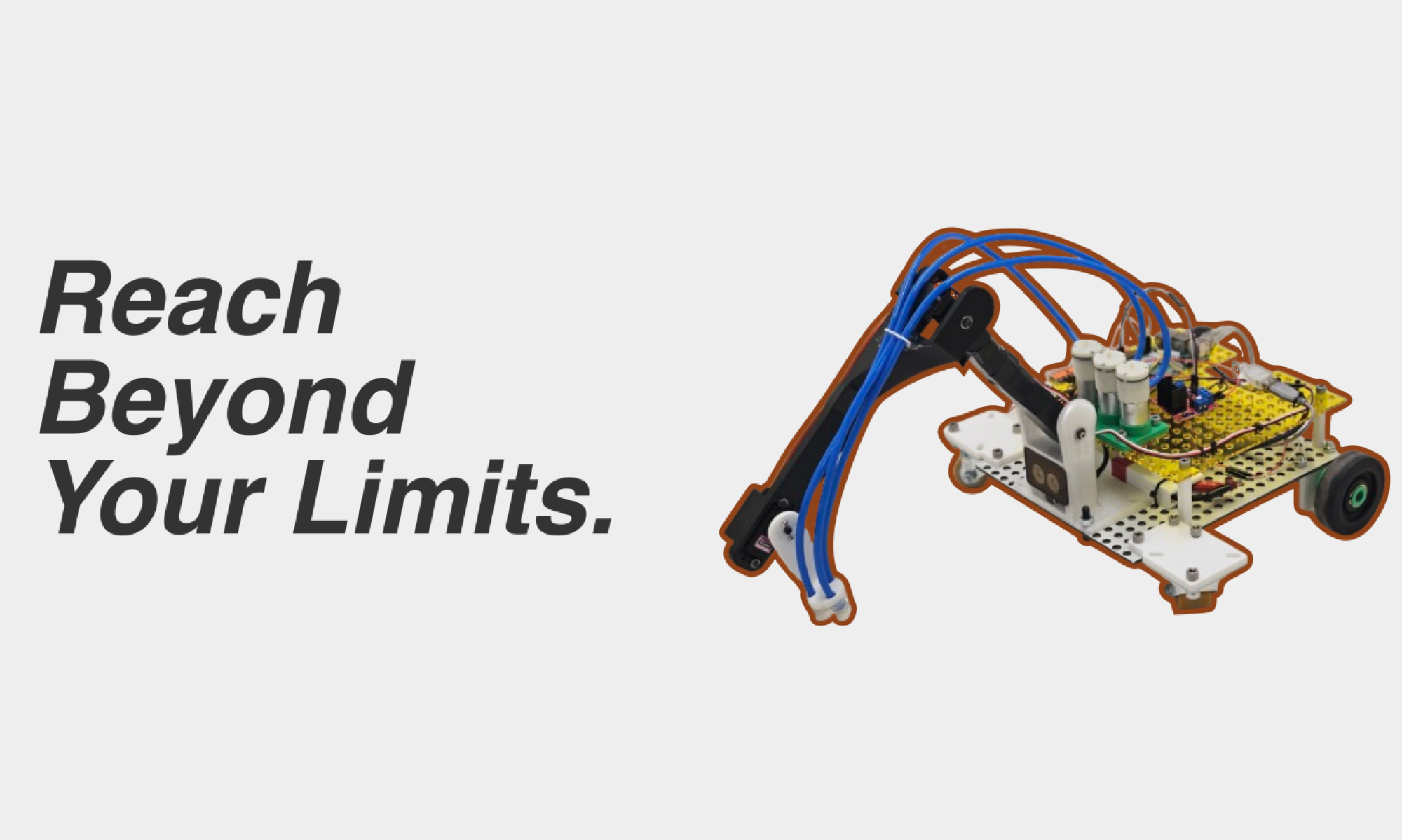

One change we made to the design of the system was adding a second layer to our rover to house our electronics, due to space constraints. The change does not incur major costs, since the material and machinery were both provided by Roboclub. This is a quick, lasting fix, with no further costs needing mitigation going forward.

As stated in our individual status reports, we are fairly on track, with no major changes to the schedule needed.

A full run-through of our system can be found here, courtesy of Varun: https://drive.google.com/file/d/1vRQWD-5tSb0Tbd89OrpUtsAEdGx-FeZF/view?usp=sharing