This week I spent a few hours on the final presentation and poster. Besides that, with the conclusion of user testing on the front end, I have only been making small changes here and there. I have been helping Alex and Simon where they need it as we are still bringing our subsystems together. As for the schedule, we are running into flex time and I think we should be able to finish on time.

Alex’s Status Report 04/27

I’ve made spare parts for everything we can, in preparation for the final demo this coming week. This week I mainly worked on integrating my subsystems with the Pongpal robot. Specifically, I’ve been working on firing from the Raspberry Pi and running integration tests to make sure the accuracy matches what we expect from our previous tests. The system is now performing slightly better than it did last week. It turns out that my testing last week was adding a little stochasticity to the pressure system, due to the wires bouncing when I connected them, as I discussed during our final presentation.

I am on schedule.

I look forward to finishing Pongpal next week!

Team Status Report for 4/27/24

As we approach the final demo next week, the only remaining risk is parts failing. To mitigate this, we’ve made spare parts that can be swapped in on the fly. For example, we have multiple motors for the pressure system and replacements for every part of its tubing. The aiming system also has a backup motor and motor drivers in case they fail.

We don’t have any major design changes this week.

We don’t have any schedule changes this week.

We’ve performed many unit tests and overall system tests recently. We ran individual unit tests on the vertical and radial aiming systems. The pressure system underwent reliability testing by shooting at the same setting multiple times. The vision system underwent accuracy testing by comparing the detected ball landing spot and cup location against their true locations. We performed user testing on our frontend. For the final integration testing, we confirmed that the same setting yields a consistent landing location.

We were able to use the values from testing for calibration. For example, we measured the voltage reading from the pressure sensor during testing to find the appropriate range for the game. We found that 2 V to 3 V is the sweet spot, and decided that this is the range users can set.
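
As a minimal sketch of how such a calibrated range could be enforced in software, the snippet below maps a user-facing power setting onto the 2 V to 3 V window. The function name and the 0 to 100 power scale are hypothetical and only for illustration; they are not taken from our code.

```python
# Calibrated pressure-sensor voltage window found during testing.
MIN_VOLTS = 2.0
MAX_VOLTS = 3.0


def power_to_target_volts(power_percent: float) -> float:
    """Map a hypothetical 0-100 user power setting onto the calibrated window."""
    # Clamp first so out-of-range requests never leave the tested range.
    clamped = max(0.0, min(100.0, power_percent))
    return MIN_VOLTS + (MAX_VOLTS - MIN_VOLTS) * clamped / 100.0
```

Clamping before scaling means a bad request from the frontend can never ask for a pressure outside the range we actually tested.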

We haven’t made any significant design changes while testing, as the results passed our expected criteria.

Seung Yun’s Status Report for 4/27/24

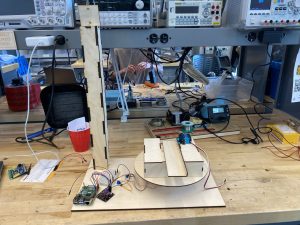

This week I’ve spent most of my time making minor adjustments to the aiming system to get it ready for the final demo, and working on the final presentation, poster, and paper. I’ve also printed the camera pole for the LIDAR camera. Now that we’re integrating the pressure system with the aiming system, the weight of the pressure system puts a lot of stress on the aiming system, so I had to make some adjustments, such as slowing down the servo motor movement and speeding up the stepper motor movement, so that the whole thing moves more smoothly. The image in this report shows the fully assembled robot.

I’ve also worked with Alex and Mike to control the pressure system from software and to ensure the frontend calls the right endpoint on the Pi.

I have finished all my tasks and am on schedule.

Next week I will be ensuring everything works as expected and working more on the final deliverables.

Mike’s Status Report for 4/20/24

This week I spent 4 hours in class working on the project with my team. The UI is getting very close to where we want it to be, so I have been helping out Simon and Alex with their respective systems wherever they need help. Besides that, I have been focusing on testing the UI by asking both pong players and non-pong players to use our UI and fill out a form. Overall the reviews of the UI are good, and I think we have met, or almost met, our goal of a 95% satisfaction rate. As for the Gantt chart, I am on track and helping my teammates stay on track.

As for new things I learned, I had never sent requests with React before. To figure out how to get the front end to communicate with the backend, I watched guides on how to make these connections between different frameworks. After watching them, I implemented a function that can send all our requests to our backend. It took a lot of trial and error and Google searches, which were another way I learned how to send requests. Besides that, there were some parts of React I was rusty with, like hooks. I used online guides to jog my memory on how to use React hooks and implemented them in my part of the project.

Alex’s Status Report for 4/20/24

For the past 2 weeks I prototyped and finished building the launching subsystem. It was very rewarding to finally shoot a ping pong ball out of the cannon (even though at the beginning it was going at a very high speed). I don’t see many possible issues with our final bit of integration. At one point I was worried about how difficult it would be to move the pipe around, but after talking with Simon I am confident it won’t be an issue.

No major design changes this week. It is, however, worth noting that we ended up only needing a thicker tube to fire the ping pong ball, so the problem we had firing it was indeed due to the resistance of the tube.

I also finished the cup detection. I take several 3D coordinate captures with the LIDAR, project them onto the plane perpendicular to gravity, and then convolve that image with a circle to pick out the cup locations. Visually this works very well, but I am having trouble extracting the precise cup location and will be finishing that up later tonight.
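
To make the idea concrete, here is a simplified, standard-library-only sketch of the projection and circle-matching steps. It is not the code running on the robot. It assumes the cup rim shows up as a ring of points, uses a ring-shaped kernel, and simply takes the highest-scoring cell as the centre. All function names, the 5 mm cell size, and the gravity vector input are assumptions made for illustration.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def plane_basis(gravity):
    """Return two unit vectors spanning the plane perpendicular to gravity."""
    norm = math.sqrt(dot(gravity, gravity))
    g = tuple(c / norm for c in gravity)
    # Pick any helper axis that is not parallel to gravity.
    helper = (1.0, 0.0, 0.0) if abs(g[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = cross(g, helper)
    u_norm = math.sqrt(dot(u, u))
    u = tuple(c / u_norm for c in u)
    v = cross(g, u)  # Already unit length because g and u are orthonormal.
    return u, v


def project(points, gravity):
    """Drop the component along gravity, leaving 2D plane coordinates."""
    u, v = plane_basis(gravity)
    return [(dot(p, u), dot(p, v)) for p in points]


def ring_offsets(radius_cells):
    """Grid offsets that lie on a circle outline of the given radius."""
    span = int(math.ceil(radius_cells)) + 1
    return [(di, dj)
            for di in range(-span, span + 1)
            for dj in range(-span, span + 1)
            if abs(math.hypot(di, dj) - radius_cells) <= 0.5]


def find_cup_centre(points, gravity, cup_radius, cell=0.005):
    """Return the plane coordinates of the best circle match (metres)."""
    # Rasterize the projected points into a set of occupied grid cells.
    occupied = {(round(x / cell), round(y / cell))
                for x, y in project(points, gravity)}
    # Every occupied cell votes for all centres one radius away from it,
    # which is equivalent to correlating the image with a ring kernel.
    votes = {}
    for i, j in occupied:
        for di, dj in ring_offsets(cup_radius / cell):
            key = (i - di, j - dj)
            votes[key] = votes.get(key, 0) + 1
    (ci, cj), _ = max(votes.items(), key=lambda kv: kv[1])
    return ci * cell, cj * cell
```

The returned centre is expressed in the plane basis from `plane_basis`, not in the LIDAR's own axes, and it is only as precise as the cell size. Handling several cups would need peak finding over the vote map rather than a single maximum.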

I’ve worked a bit on collecting data for ball detection and cup detection and am going to continue doing that next week. Next week I also plan on collecting data for the launching subsystem.

I had to learn a lot of stuff about piping and air pressure for this capstone project and I found a lot of useful websites explaining how to do projects that are similar to ours. But ultimately, I found a blog post explaining pressure and flow in terms of voltage and current and that proved to be very intuitive for me. I also learned a lot through trial and error of different computer vision algorithms for cup and ball detection. Something else I learned was how to apply my mathematical skills to write code that necessitates it (the ball dynamics and cup projection were especially enjoyable for me to finish).

No schedule changes this week and we will be focused on testing and integration for the rest of the semester.

Team Status Report for 4/20/24

We have lots of spare parts for the physical systems, which mitigates most of the risk related to hardware failure. As we’re more or less done with the individual subsystems, problems could still arise as we integrate them. For example, the difference in computational power between the Pi and the computer we’ve been testing the vision system on could lead to issues. We don’t anticipate this being a problem, but in the worst case we have a more powerful Pi (a Pi 5 that we didn’t end up using) to do this processing. Another potential issue is motor shaking interfering with the computer vision, but we can avoid this in software by simply not running any of the computer vision subsystem while the motor is running.
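
One way this kind of software interlock could look is sketched below. The `MotionGate` class and its method names are hypothetical, and the sketch assumes a single motor controller reporting start and stop; several independent motors would need a counter instead of a single flag.

```python
import threading


class MotionGate:
    """Tracks whether the motor is moving so vision can wait for stillness."""

    def __init__(self):
        self._still = threading.Event()
        self._still.set()  # Nothing is moving at start-up.

    def motor_started(self):
        # Called by the motor control code just before a move begins.
        self._still.clear()

    def motor_stopped(self):
        # Called once the move has finished.
        self._still.set()

    def wait_until_still(self, timeout=None):
        """Block until the motor is idle; return False if the timeout expires."""
        return self._still.wait(timeout)
```

The vision loop would call `wait_until_still()` before each capture and skip the frame if it returns `False`.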

We have no major design changes this week.

We have no schedule changes this week. We will be focused on testing and integration for the rest of the semester.

Seung Yun’s Status Report for 4/20/24

For the past two weeks, I’ve focused on cleaning up the aiming system and preparing for the overall integration. I’ve refactored the code to remove the unnecessary IPC between the networking module and the motor control modules.

As the pressure system by Alex is working properly, I’ve redesigned the robot for full integration. Given that the pressure system’s pipe is rather thick and will need some spacing to bend properly, I’ve moved the balancing bridge on the lazy susan plate to the front and elevated the whole thing. I’ve also printed out a pole for the camera so it will be elevated by 50cm from the surface, and the whole base for the robot. The new physical robot looks like below:

I’ve also performed testing on the aiming system. I’ve set the angle of the vertical aiming and radial aiming system to various degrees, then took a picture of it to analyze the angle using an online tool like below. I’m still compiling the results of this to be included in the presentation next week.

I am on schedule according to our Gantt chart.

Next week, I will write software on the Pi to control the pressure system and put everything together, work with Alex to run the vision algorithm on the Pi, and work with Mike to connect the frontend to the Pi properly. We’re in integration mode, so the lines between our responsibilities are rather blurry.

Throughout this project, I had to get out of my comfort zone and work with different tools. The biggest is probably CAD and physical design. I only took a CAD class last semester and was new to laser cutting. I had to read up on lots of reference designs to design our robot. I also asked for advice from Techspark and IDeate staff to make sure our design is feasible.

I’ve also had to learn how to work with microcontrollers, such as writing modules for motor controls and networking. I’ve followed lots of online tutorials to get the initial setup, then relied on documentation of specific libraries I was using to tune the code to our purposes.

Alex’s Status Report for 4/6/24

I used the demo this past week as a forcing function for my subsystems. I tested out the accuracy of the ball detection (admittedly this was right before the presentation) and found that it was worse than I expected because I was unable to capture as many frames as I had wanted. Later that day I refactored the code to take this into account. I also finally tested the motors we have and we found that we were lacking in power to launch the ping pong ball (more on this later). Finally, I got the cup detection working, but later this week I found that the code I wrote was not invariant to LIDAR rotation and have been debugging that for the last couple of days.

Next week I plan on ordering another round of parts for our pressure launching mechanism. The parts I am planning to order are larger tubes so that more air will be released when fired and also a larger motor. I also plan on finishing up the cup detection by making it invariant to the rotation of the LIDAR.

For verification, see our group post for more details. In essence, we will test our ball detection by facing the camera up, throwing ping pong balls over it, and measuring where they land by recording the ground with a camera and marking it. Then we will compare where the LIDAR thinks they land with where they actually land and find the difference. To verify the cup detection we will do something similar: place a cup, measure its distance from the LIDAR, and compare that to the LIDAR reading. Finally, for the launching system we will test precision by launching multiple times (remember that if we have issues with accuracy, we can just change the offset so that it is accurate as well).
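
The split between precision and accuracy in the launching test can be sketched as follows, assuming landing spots are recorded as (x, y) pairs in a shared unit. The function name is hypothetical. The mean offset is the systematic error that an aiming offset can cancel, while the spread around the mean landing point is the precision that an offset cannot fix.

```python
import math


def offset_and_spread(landings, target):
    """Return (mean offset from target, RMS spread about the mean landing point)."""
    n = len(landings)
    mean_x = sum(x for x, _ in landings) / n
    mean_y = sum(y for _, y in landings) / n
    # Systematic error: how far the average shot lands from the target.
    offset = (mean_x - target[0], mean_y - target[1])
    # Random error: how tightly the shots cluster around their own average.
    spread = math.sqrt(
        sum((x - mean_x) ** 2 + (y - mean_y) ** 2 for x, y in landings) / n
    )
    return offset, spread
```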

Team Status Report for 4/6

The pressure system remains our biggest risk for now. We’ve noticed the pressure is not leaving the tube as quickly as we hoped and are making adjustments, but the new design might still encounter this issue, so we are exploring mechanical shooting mechanisms to mitigate this risk. The hardware’s stability is also an issue. Since we experienced a hardware failure on the microcontroller this week, having spare parts on hand is going to be important.

We have no major design changes this week.

We’ve updated our Gantt chart this week to match the progress we’ve made. The cup detection and the pressure system have been moved back a little bit, and some of the workload has been redistributed. The updated Gantt chart is attached below:

As we approach the last stretch, we have multiple verification and validation plans in place. For verification, as discussed in individual reports as well, the aiming subsystem will perform a series of movements and take physical measurements to verify it works.

For validation, once all systems are built, we will test the system by launching the ball at the same setting 10 times onto grid paper and noting where it lands. As mentioned previously in our design presentation, if we can draw a 2cm * 5cm bounding box around all the landing spots, we will have successfully validated our project.
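
A minimal sketch of this pass/fail check is below. It assumes an axis-aligned box with landing spots given as (x, y) in centimetres, and it assumes x is the 2 cm side and y is the 5 cm side, which the criterion above does not specify. The function name is hypothetical.

```python
def fits_in_box(landings_cm, width_cm=2.0, length_cm=5.0):
    """Check whether all landing spots fit inside a width x length box."""
    xs = [x for x, _ in landings_cm]
    ys = [y for _, y in landings_cm]
    # The tightest axis-aligned box has sides equal to the coordinate ranges.
    return (max(xs) - min(xs)) <= width_cm and (max(ys) - min(ys)) <= length_cm
```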

For the computer vision ball detection validation, we will measure accuracy by positioning the LIDAR sensor facing up. We will then throw a ball over the LIDAR sensor and record where it lands on the ground. We will compare the actual landing location to the location the LIDAR predicted and measure the magnitude of the difference between the two points. We currently plan on doing 50 trials.
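
The error metric described above can be sketched like this, assuming predicted and actual landing spots are recorded as matching lists of (x, y) pairs in the same unit. The function names and the choice of mean and maximum as summary statistics are assumptions for illustration.

```python
import math


def landing_errors(predicted, actual):
    """Euclidean distance between each predicted and actual landing spot."""
    return [math.dist(p, a) for p, a in zip(predicted, actual)]


def summarize(errors):
    """Collapse per-trial errors into a mean and a worst case."""
    return {"mean": sum(errors) / len(errors), "max": max(errors)}
```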

For the computer vision cup validation, we plan on setting up the cups, moving them around, and measuring the distance between where the cups are predicted to be and where they actually are, similar to the method used for ball detection. The main difference is that we need to map out in 3D space where the cups are relative to the LIDAR, so it will be more of a pain to set up, but once our measuring setup is in place and validated, we will be able to collect data quickly.