Team Status 4/29

- List all unit tests and overall system test carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.

Signal Processing: ~30 samples tested, primarily checking pitch accuracy and onset accuracy.

ML Model:

Web backend:

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The biggest risk to the project is still the quality of the generated music. Although the music generated by the pretrained model is good, it’s hard to predict how fine-tuning will affect it. To mitigate this risk, we’re researching techniques commonly used when fine-tuning models (such as freezing certain weights) to prevent divergence.

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The overall design of the system remains the same.

- Provide an updated schedule if changes have occurred.

No changes

Eli Status 4/29

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I completed pitch detection, note onset detection and MIDI score generation. I am completely done with my parts of the project except for testing and integration.

Final procedure:

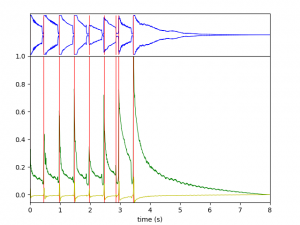

1. Get the amplitude envelope to detect whether a note is being played:

2. Run (rough) pitch detection and onset detection:

3. Put it all together:

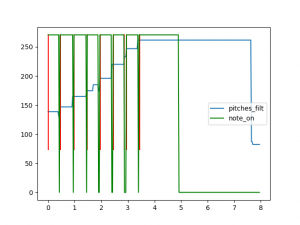

This graph is a little messy, but it shows the filtered pitches (with the spikes from step 2 removed) in blue, the note onsets in red, and whether a note is being played in green.
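For illustration, the three steps above might look roughly like the NumPy sketch below. It assumes frame-wise RMS energy as the amplitude envelope, a fixed fraction of the peak envelope as the note-on threshold, and a sliding median filter as the spike remover; the function names, frame/hop sizes and thresholds are hypothetical and not taken from Eli's code. It also assumes the pitch track and the envelope share the same frame grid.

```python
import numpy as np

def amplitude_envelope(x: np.ndarray, frame: int = 1024, hop: int = 512) -> np.ndarray:
    """RMS energy per frame, used as a simple amplitude envelope."""
    n_frames = 1 + max(0, (len(x) - frame) // hop)
    return np.array([np.sqrt(np.mean(x[i * hop:i * hop + frame] ** 2))
                     for i in range(n_frames)])

def note_active(env: np.ndarray, rel_threshold: float = 0.1) -> np.ndarray:
    """True where the envelope exceeds a fraction of its peak (a note is sounding)."""
    return env > rel_threshold * env.max()

def median_filter(pitches: np.ndarray, k: int = 5) -> np.ndarray:
    """Sliding median over an odd window k; removes isolated pitch spikes."""
    pad = k // 2
    padded = np.pad(pitches, pad, mode="edge")
    return np.array([np.median(padded[i:i + k]) for i in range(len(pitches))])

def clean_pitch_track(pitches, env, rel_threshold: float = 0.1, k: int = 5) -> np.ndarray:
    """Median-filter the raw pitch track and zero it wherever no note is active."""
    filtered = median_filter(np.asarray(pitches, dtype=float), k)
    return np.where(note_active(np.asarray(env, dtype=float), rel_threshold), filtered, 0.0)
```

A median filter is a natural choice for single-frame spikes because one outlier in a window of five cannot move the median, while a genuine note change that lasts several frames passes through.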

Testing still needs to be done; it is held up because we want to test with our group’s recording procedure. The testing itself should be quick.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am only behind on testing, and that will take an hour at most once the other parts of the project are integrated.

What deliverables do you hope to complete in the next week?

Finish integration/testing.

Vedant’s status report 4/29/2023

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week I was helping Sachit get the web application working. We decided to work on it together since I have prior experience with Django and this would probably make integration easier.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress is on track to be done by the demo.

What deliverables do you hope to complete in the next week?

In the next week, I hope to integrate my generative music model with the web app interface.

Sachit Status 4/29

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week I started working with Vedant on the Django backend and began integrating the ML inference and signal processing.

I also tried different communication methods for transmitting raw audio from the ESP32 to the backend, namely UDP instead of TCP, to improve audio quality. After concluding that TCP was the way to go, I also tried different audio recording parameters (sample width and sampling rate) to get the best-quality recording.
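A minimal sketch of the receiving side, using only Python's standard library. It assumes the ESP32 opens one TCP connection per recording, streams raw little-endian PCM, and closes the socket when it is done; the port number, the 16 kHz default and the function names are hypothetical. Sample width and sampling rate are exposed as parameters because those are the settings that were being tuned.

```python
import socket
import wave

def recv_all(sock: socket.socket, chunk_size: int = 4096) -> bytes:
    """Read from a connected stream socket until the sender closes it."""
    chunks = []
    while True:
        data = sock.recv(chunk_size)
        if not data:  # an empty read means the peer closed the connection
            break
        chunks.append(data)
    return b"".join(chunks)

def save_pcm_as_wav(pcm: bytes, path: str, sample_rate: int = 16000,
                    sample_width: int = 2, channels: int = 1) -> None:
    """Wrap raw PCM bytes in a WAV header; sample_width is in bytes (2 = 16-bit)."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(sample_width)
        wf.setframerate(sample_rate)
        wf.writeframes(pcm)

def receive_one_recording(port: int = 5005, path: str = "recording.wav", **wav_params) -> int:
    """Accept a single TCP connection, save its payload as a WAV file, return the byte count."""
    with socket.create_server(("0.0.0.0", port)) as server:
        conn, _addr = server.accept()
        with conn:
            pcm = recv_all(conn)
    save_pcm_as_wav(pcm, path, **wav_params)
    return len(pcm)
```

Calling `receive_one_recording(sample_rate=16000, sample_width=2)` blocks until one client connects and disconnects. TCP delivers bytes reliably and in order, so no samples are silently dropped or reordered, which is a plausible reason it gave better audio quality than UDP here.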

On the frontend, I kept improving the design and added a piano visualizer that displays the accompaniment music.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress is on track to be done by the demo.

What deliverables do you hope to complete in the next week?

In the next week, I want to finish integrating the Django backend with the frontend.

Sachit’s Status 4/23

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I have completed the frontend and started working on the backend. Attached is a video of the web dashboard being used in a normal user workflow:

https://drive.google.com/file/d/1e96onx2QcJHEMMVPJVD5GcPxBSgLwNwe/view?usp=sharing

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule.

What deliverables do you hope to complete in the next week?

Finish the backend in Flask and integrate it with the frontend. Also complete the whole system workflow by integrating ML inference into the backend. Perform stress tests and user tests to improve the dashboard.

Eli Status 4/23

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Implemented memoization for the Yin autocorrelation method. This speeds up computation significantly and allows me to use continuous lag windows, which fixed the accuracy problem.

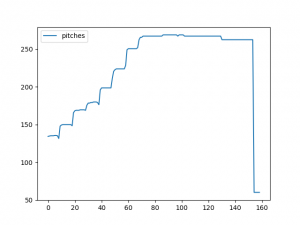

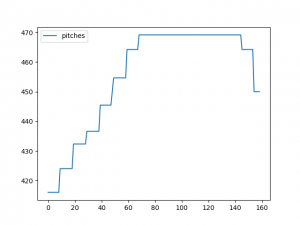

This is the current output of the autocorrelation on a C major scale (frequency on the left axis, time on the bottom). It is now fully accurate.
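The memoization itself is not shown in the report. As a hedged sketch of the same idea, the NumPy function below implements the YIN steps from the paper for a single frame (difference function, cumulative mean normalized difference, absolute threshold, parabolic interpolation) and computes the energy terms of the difference function from one cumulative sum, so work is reused across lags instead of recomputed. That caching strategy is a swapped-in choice, not necessarily Eli's; the function name, the 60 to 1200 Hz search range and the 0.1 threshold are assumptions.

```python
import numpy as np

def yin_pitch(frame: np.ndarray, sr: int, fmin: float = 60.0,
              fmax: float = 1200.0, threshold: float = 0.1) -> float:
    """Estimate the fundamental frequency (Hz) of one frame using the YIN steps.
    Returns 0.0 when no lag passes the absolute threshold (e.g. silence)."""
    x = np.asarray(frame, dtype=float)
    tau_min = int(sr / fmax)                 # smallest lag searched (highest pitch)
    tau_max = int(sr / fmin)                 # largest lag searched (lowest pitch)
    w = len(x) - tau_max                     # integration window W
    if w <= 0 or tau_min < 1:
        raise ValueError("frame too short for fmin, or fmax too high for sr")

    # Difference function d(tau) = sum_{j<W} (x_j - x_{j+tau})^2, expanded as
    # energy(0) + energy(tau) - 2 r(tau). The energies come from a single
    # cumulative sum, so each lag reuses earlier work instead of re-summing.
    cum_sq = np.concatenate(([0.0], np.cumsum(x ** 2)))
    taus = np.arange(tau_max + 1)
    energy = cum_sq[taus + w] - cum_sq[taus]
    r = np.correlate(x, x[:w], mode="valid")   # r[tau] for tau = 0..tau_max
    d = energy[0] + energy - 2.0 * r

    # Cumulative mean normalized difference d'(tau); d'(0) = 1 by definition.
    cmnd = np.ones_like(d)
    running = np.cumsum(d[1:])
    ok = running > 0
    cmnd[1:][ok] = d[1:][ok] * taus[1:][ok] / running[ok]

    # Absolute threshold: take the first dip below it, then walk to that dip's
    # local minimum. Preferring the first dip avoids jumping to a deeper dip
    # at a multiple of the true period.
    below = np.flatnonzero(cmnd[tau_min:tau_max] < threshold)
    if below.size == 0:
        return 0.0
    tau = tau_min + int(below[0])
    while tau + 1 < tau_max and cmnd[tau + 1] < cmnd[tau]:
        tau += 1

    # Parabolic interpolation through the three points around tau.
    a, b, c = cmnd[tau - 1], cmnd[tau], cmnd[tau + 1]
    denom = a - 2.0 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return sr / (tau + shift)
```

Calling it on successive frames (for example 2048 samples with a hop of 512) would produce a pitch track like the one plotted above; the frame must be longer than `sr / fmin` samples so that every lag has a full integration window.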

I also implemented MIDI score generation, which is fast and flexible. Generated sample MIDI scores and tested them with Vedant’s model; it seems to be working fine.
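The report doesn't say how the MIDI scores are written (a library may well be used). As a dependency-free sketch, the function below encodes a list of `(midi_note, start_s, end_s)` tuples, a hypothetical input format, into a format-0 Standard MIDI File with one track, a tempo event, and note-on/note-off pairs on the first MIDI channel. Tempo, resolution and velocity defaults are assumptions.

```python
import struct

def vlq(value: int) -> bytes:
    """Encode a non-negative int as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # continuation bit on all but the last byte
        value >>= 7
    return bytes(reversed(out))

def notes_to_midi(notes, ticks_per_beat: int = 480, bpm: float = 120.0,
                  velocity: int = 96) -> bytes:
    """Build a format-0 Standard MIDI File from (midi_note, start_s, end_s) tuples."""
    ticks_per_s = ticks_per_beat * bpm / 60.0
    events = []  # (tick, order, status, note, velocity); order 0 puts note-offs first
    for note, start, end in notes:
        events.append((round(start * ticks_per_s), 1, 0x90, note, velocity))
        events.append((round(end * ticks_per_s), 0, 0x80, note, 0))
    events.sort()

    # Tempo meta event (FF 51 03): microseconds per quarter note.
    track = b"\x00\xff\x51\x03" + int(round(60_000_000 / bpm)).to_bytes(3, "big")
    last_tick = 0
    for tick, _order, status, note, vel in events:
        track += vlq(tick - last_tick) + bytes([status, note, vel])  # delta time + event
        last_tick = tick
    track += b"\x00\xff\x2f\x00"  # end-of-track meta event

    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track
```

Writing the returned bytes to a `.mid` file (for example with `pathlib.Path("primer.mid").write_bytes(...)`, a hypothetical file name) gives a file standard MIDI tools can open. Sorting note-offs before note-ons at the same tick keeps a repeated note from being cut off by its own previous note-off.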

Performed initial accuracy testing on a few downloaded clean-tone guitar samples: >95% correct identification. The main source of inaccuracy is the tuning of the window sizes.

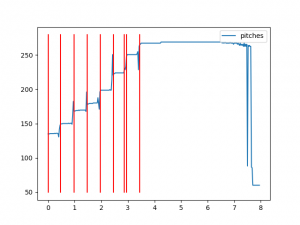
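The report does not say exactly how ">95% correct identification" was counted. One plausible metric, sketched below as an assumption, is to round each detected frequency to the nearest equal-tempered note (A4 = 440 Hz = MIDI note 69) and compare it with a hand-labeled list of expected notes; the function names and labels are hypothetical.

```python
import math

def hz_to_midi(freq: float) -> int:
    """Nearest equal-tempered MIDI note number for a frequency (A4 = 440 Hz = 69)."""
    return round(69 + 12 * math.log2(freq / 440.0))

def note_accuracy(detected_hz, expected_midi) -> float:
    """Fraction of detected pitches whose nearest note matches the expected label."""
    if len(detected_hz) != len(expected_midi):
        raise ValueError("need exactly one label per detected pitch")
    hits = sum(hz_to_midi(f) == m for f, m in zip(detected_hz, expected_midi))
    return hits / len(expected_midi)
```

Rounding to the nearest semitone tolerates small tuning offsets (up to about 50 cents) while still counting octave or harmonic errors as misses.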

Worked on onset/offset detection. Found a few methods; likely going to stick with aubio’s built-in onset detection. There is some inaccuracy, but it seems good enough for primer generation.
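aubio's onset detector is used here as a black box, and aubio offers several onset functions (spectral flux among them). The NumPy sketch below is only a stand-in that illustrates one common approach, half-wave-rectified spectral flux with simple peak picking; it is not aubio's implementation, and the frame size, hop, relative threshold and minimum gap are assumptions.

```python
import numpy as np

def spectral_flux_onsets(x: np.ndarray, sr: int, frame: int = 1024, hop: int = 512,
                         delta: float = 0.1, min_gap_s: float = 0.05) -> list:
    """Return onset times (s, frame centers) where the positive spectral flux
    has a local peak above delta times its maximum."""
    window = np.hanning(frame)
    n_frames = 1 + (len(x) - frame) // hop
    mags = np.array([np.abs(np.fft.rfft(window * x[i * hop:i * hop + frame]))
                     for i in range(n_frames)])
    # Sum of per-bin magnitude increases between consecutive frames; decreases
    # (note decays and offsets) are clipped to zero.
    flux = np.concatenate(([0.0], np.sum(np.maximum(mags[1:] - mags[:-1], 0.0), axis=1)))
    if flux.max() == 0:
        return []
    thresh = delta * flux.max()
    onsets, last = [], -np.inf
    for i in range(1, n_frames - 1):
        is_peak = flux[i] >= flux[i - 1] and flux[i] > flux[i + 1]
        t = (i * hop + frame / 2) / sr
        if is_peak and flux[i] > thresh and t - last >= min_gap_s:
            onsets.append(t)
            last = t
    return onsets
```

Because the flux only rewards rising energy, a plucked note's attack produces a strong peak while its decay produces almost none, which is why functions of this kind suit guitar input; timing resolution is limited to roughly one hop (here 64 ms at 8 kHz).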

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

What deliverables do you hope to complete in the next week?

Integrate and more fully test pitch detection/onset detection.

Team status report 04/23/2023

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The biggest risk to the project is still the quality of the generated music. Although the music generated by the pretrained model is good, it’s hard to predict how fine-tuning will affect it. To mitigate this risk, we’re researching techniques commonly used when fine-tuning models (such as freezing certain weights) to prevent divergence.

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The overall design of the system remains the same.

- Provide an updated schedule if changes have occurred.

No changes

Vedant status report 04/23/2023

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week a lot of time and effort went into pivoting the implementation of the generative music model. After training a transformer model from scratch, I quickly realized that, given our time and resources, I wouldn’t be able to get the results I need that way. I therefore decided to use a pretrained model as a starting point and fine-tune it for the different genres presented in our use case requirements. I chose the Pop Music Transformer (https://github.com/YatingMusic/remi) as the starting point and will fine-tune it with files from the Lakh MIDI dataset segmented by genre.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I feel that my progress is on track: using a pretrained model means far less training time, which makes up for this pivot.

What deliverables do you hope to complete in the next week?

Fine-tune the model on the datasets for the different genres.

Eli Status 4/8

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Implemented the Yin autocorrelation method from the following paper:

http://audition.ens.fr/adc/pdf/2002_JASA_YIN.pdf

This autocorrelation method is extremely slow. I changed the windowing parameters of the autocorrelation and changed the frequency range over which it operates. The speed is now acceptable (about 70% of the input length), but the accuracy needs work: the autocorrelation is finding the second most powerful harmonic peak rather than the main fundamental peak.

This is the current output of the autocorrelation on a C major scale (frequency on the left axis, time on the bottom). The autocorrelation finds relative pitches very well: it sees whole step, whole step, half step, whole, whole, whole, half, exactly as a major scale should be. It just identifies the wrong fundamental.
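The major-scale check described above can be made concrete by converting consecutive detected frequencies into semitone intervals with `12 * log2(f2 / f1)` and comparing them with the whole/half-step pattern. The function and constant names below are hypothetical. If the detector consistently locks onto the same harmonic, every frequency is scaled by one constant factor, so the intervals stay correct even though the absolute pitch is wrong, which would be consistent with the behavior reported here.

```python
import math

# Whole, whole, half, whole, whole, whole, half (in semitones).
MAJOR_SCALE_STEPS = [2, 2, 1, 2, 2, 2, 1]

def semitone_steps(freqs):
    """Rounded interval in semitones between each pair of consecutive pitches."""
    return [round(12 * math.log2(b / a)) for a, b in zip(freqs, freqs[1:])]
```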

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

What deliverables do you hope to complete in the next week?

Continue tuning pitch detection. Add onset detection for note timing and create a MIDI score from the input.