Summary

We made substantial progress on our individual subsystems. Leading up to the interim demo, we want to finalize three integration points: the signal processing class with the lighting engine, the UI subsystem with the feature query subsystem, and the UI subsystem with the lighting engine. Currently, the feature query subsystem is connected to the signal processing subsystem. We narrowed the subset of features used in the signal processing subsystem and have been modifying how the subsystem interacts with the lights. We also tested several lighting features (strobe, fade, blackout, colour cycle, and colour), and we have a working UI.

Risk

The primary risk is still the latency of the signal processing, and how quickly we can generate metrics for the lighting calls. We are using threads so that song recognition and feature extraction from Spotify can run in tandem. A second risk is the delay between a song being input and the moment the user expects a light change. A third risk is determining how to map the outputs of the signal processor and genre classifier to lighting calls: we have to balance the number of deterministic and non-deterministic calls we make from the lighting engine.
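
As a rough illustration of the threading idea above, a minimal sketch might look like the following. The function names, the simulated delays, and the returned values are all placeholders chosen for illustration; they are assumptions and do not reflect the actual recognition or Spotify query code.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def recognize_song(clip_name: str) -> str:
    """Hypothetical stand-in for the song recognition step."""
    time.sleep(0.05)  # simulate recognition latency
    return f"song-for-{clip_name}"


def extract_features(clip_name: str) -> dict:
    """Hypothetical stand-in for the Spotify feature query."""
    time.sleep(0.05)  # simulate network latency
    return {"tempo": 120.0, "energy": 0.8}  # made-up values


def run_in_tandem(clip_name: str) -> tuple:
    """Run both steps on separate threads and wait for both results."""
    # Submitting both tasks lets their waiting time overlap
    # instead of running back to back.
    with ThreadPoolExecutor(max_workers=2) as pool:
        song_future = pool.submit(recognize_song, clip_name)
        features_future = pool.submit(extract_features, clip_name)
        return song_future.result(), features_future.result()
```

Because both steps mostly wait on I/O in this sketch, overlapping them should bring the total wait closer to the slower of the two than to their sum.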

Changes

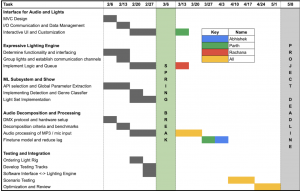

We are on track with the project and have met the internal deadlines we set. Hence, there are no significant changes to our overall schedule. We are meeting about integration tomorrow to work out how to write a Show class that bridges the different subsystems. For the interim demo, we also plan to test 3-4 samples that contain a few song inflection points and vary in genre, and to test user overrides.
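
One possible shape for such a Show class is sketched below, purely as a starting point for discussion. Everything except the class name and the effect names is an assumption: the `analyze` and `send_light_call` callables, the `energy` metric, and the 0.7 threshold are hypothetical and stand in for whatever the subsystems actually expose.

```python
from typing import Callable, Dict, Optional


class Show:
    """Hypothetical bridge between signal processing, lighting, and UI overrides."""

    def __init__(
        self,
        analyze: Callable[[object], Dict[str, float]],
        send_light_call: Callable[[str], None],
    ) -> None:
        self.analyze = analyze                  # signal processing entry point (assumed)
        self.send_light_call = send_light_call  # lighting engine entry point (assumed)
        self.override: Optional[str] = None     # effect forced from the UI, if any

    def set_override(self, effect: str) -> None:
        self.override = effect

    def clear_override(self) -> None:
        self.override = None

    def step(self, audio_frame: object) -> str:
        """Pick one effect for this frame and forward it to the lights."""
        if self.override is not None:
            # A user override takes priority over the analysis result.
            effect = self.override
        else:
            metrics = self.analyze(audio_frame)
            # Illustrative rule only: the 0.7 threshold is made up.
            effect = "strobe" if metrics.get("energy", 0.0) > 0.7 else "fade"
        self.send_light_call(effect)
        return effect
```

Keeping the override check inside `step` means the UI only needs to set or clear one field, which may make the user override tests planned for the demo easier to write.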

Updated Schedule