Personal Accomplishments

https://drive.google.com/drive/u/0/folders/16YJbrFB_6vTO6P4SGzJ2yftce_aSI6WH

A large part of this week went into the proposal presentation slides. Since I was the one presenting, many details needed to be fleshed out, and the presentation had to be understandable to an audience without much background on the numerous subsystems.

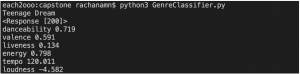

The genre classifier can now output the danceability, valence, liveness, energy, tempo, and loudness attributes. I used Shazam’s song detection API to identify the track, then used the detected track title to query Spotify and retrieve these audio features.
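A rough sketch of the Spotify half of this pipeline: build a track search from the title Shazam returns, take the top hit, and keep only the six attributes listed above. It assumes the Spotify Web API's `/v1/search` and `/v1/audio-features/{id}` endpoints and a bearer token obtained separately. The Shazam detection step is not shown, and all function names are hypothetical. Spotify has restricted audio-features access for newly registered apps, so whether this endpoint is usable may depend on how the app was set up.

```python
import json
import urllib.request
from typing import Optional
from urllib.parse import urlencode

# Assumed endpoints: Spotify Web API track search and audio features.
SEARCH_URL = "https://api.spotify.com/v1/search"
AUDIO_FEATURES_URL = "https://api.spotify.com/v1/audio-features/{track_id}"

# The six attributes reported by the classifier.
FEATURE_KEYS = ("danceability", "valence", "liveness", "energy", "tempo", "loudness")


def build_search_url(title: str, artist: Optional[str] = None, limit: int = 1) -> str:
    """Build a Spotify track-search URL from the title Shazam detected."""
    query = f"track:{title}"
    if artist:
        query += f" artist:{artist}"
    params = {"q": query, "type": "track", "limit": limit}
    return f"{SEARCH_URL}?{urlencode(params)}"


def fetch_json(url: str, token: str) -> dict:
    """GET a Spotify endpoint with a bearer token (token retrieval not shown)."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def first_track_id(search_response: dict) -> Optional[str]:
    """Return the ID of the top search hit, or None if nothing matched."""
    items = search_response.get("tracks", {}).get("items", [])
    return items[0]["id"] if items else None


def select_features(audio_features: dict) -> dict:
    """Keep only the attributes the classifier reports."""
    return {key: audio_features[key] for key in FEATURE_KEYS}
```

Usage: fetch `build_search_url(title)` with `fetch_json`, pass the result to `first_track_id`, then fetch `AUDIO_FEATURES_URL.format(track_id=...)` and pass that JSON to `select_features`. Keeping the URL building and response parsing separate from the network call makes those pieces easy to test offline.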

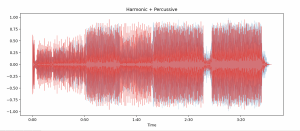

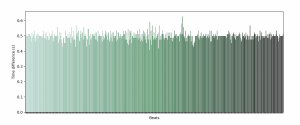

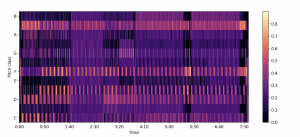

I found song features that can be grouped into timbral, pitch, and rhythmic features, along with a way to construct data frames with these features embedded in them. We want to split the audio into chunks and iteratively compute features for each chunk. I explored beat extraction and harmonic/percussive separation for a selected song, but have not yet chunked the audio according to the best features I can extract from a given section, or worked out how to make this faster.
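A minimal sketch of the chunk-and-extract loop, written in plain Python so it runs without audio libraries. It assumes a mono signal already loaded as a list of floats and fixed-length, non-overlapping chunks. RMS energy and zero-crossing rate are hypothetical stand-ins for the actual timbral, pitch, and rhythmic features, and `chunk_seconds` is the tunable chunk size.

```python
import math
from typing import Dict, Iterator, List, Sequence, Tuple


def chunk_signal(samples: Sequence[float], sample_rate: int,
                 chunk_seconds: float) -> Iterator[Tuple[int, Sequence[float]]]:
    """Yield (start_sample, chunk) pairs of consecutive, non-overlapping chunks.

    The last chunk may be shorter than chunk_seconds.
    """
    chunk_len = max(1, round(chunk_seconds * sample_rate))
    for start in range(0, len(samples), chunk_len):
        yield start, samples[start:start + chunk_len]


def rms(chunk: Sequence[float]) -> float:
    """Root-mean-square amplitude, a simple loudness/energy measure."""
    return math.sqrt(sum(x * x for x in chunk) / len(chunk))


def zero_crossing_rate(chunk: Sequence[float]) -> float:
    """Fraction of adjacent sample pairs that change sign (a rough timbral cue)."""
    if len(chunk) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(chunk, chunk[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(chunk) - 1)


def chunk_features(samples: Sequence[float], sample_rate: int,
                   chunk_seconds: float) -> List[Dict[str, float]]:
    """Compute one feature row per chunk, ready to become a data frame."""
    rows = []
    for start, chunk in chunk_signal(samples, sample_rate, chunk_seconds):
        rows.append({
            "start_s": start / sample_rate,
            "duration_s": len(chunk) / sample_rate,
            "rms": rms(chunk),
            "zcr": zero_crossing_rate(chunk),
        })
    return rows
```

If pandas is in use, `pandas.DataFrame(chunk_features(...))` gives one row per chunk. In practice a library such as librosa provides richer per-frame features, beat tracking (`librosa.beat.beat_track`), and harmonic/percussive separation (`librosa.effects.hpss`), any of which could be computed per chunk in the same loop.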

On Track?

Yes, I am on track. The optimal chunk size may take some time to determine because of the tradeoffs involved, and it also requires interaction with the UI to iterate over all of the audio. I also want to help figure out how these features can be combined to make appropriate artistic lighting choices.

Goals for Next Week

Finalize signal processing over chunks, and find an appropriate chunk size for parsing through the song. We also want the signal generator to work for multiple songs within the same audio, and hope that Shazam’s song detection will work for this. I anticipate that most of next week will go into the design report.