Tests

I have been running my signal processing parameters module on a song that can be found on both Spotify and Shazam to check whether those API calls are robust. I have also run it on 15-second pitch-modulated files and on amplitude-modulated files of the same length. I can now specify the resolution of the output values, i.e. whether I want 5-second averages, 3-second averages, or the default 1-second averages. All of these are currently working. Next, I want to run tests on voiced and unvoiced components, and also run beat detection on an unplugged file to make sure it is not sensing the percussive elements.
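As a rough illustration of the resolution option, here is a minimal sketch that collapses per-second values into N-second averages. The function name `average_by_resolution` and the sample data are hypothetical and are not taken from the actual module.

```python
def average_by_resolution(values, resolution):
    """Average consecutive groups of `resolution` per-second values.

    Hypothetical helper: `values` holds one number per second, and
    `resolution` is the window size in seconds (1, 3, 5, ...).
    A shorter final window is averaged over the values it contains.
    """
    if resolution < 1:
        raise ValueError("resolution must be at least 1 second")
    averaged = []
    for start in range(0, len(values), resolution):
        window = values[start:start + resolution]
        averaged.append(sum(window) / len(window))
    return averaged


# Made-up 15-second file with one value per second.
per_second = [float(i) for i in range(15)]
three_second = average_by_resolution(per_second, 3)
five_second = average_by_resolution(per_second, 5)
```

With a resolution of 1 the input comes back unchanged, which matches the default 1-second behaviour described above.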

Personal Accomplishments

A lot of this week, from Sunday to Monday afternoon, went into working on our interim demo. We added a few interesting weight values to make the output look brighter or more saturated on the light setup. We also tried to extract our amplitude and frequency values in a simpler, less convoluted way. We realized that combining features (global parameters and signal parameters) so that both contribute to a single lighting feature can be challenging, because we need to benchmark the result against a few test files to come up with suitable weights.
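One possible way to think about combining the two kinds of features is a simple weighted blend. The sketch below assumes both inputs are already normalised to the range 0 to 1. The name `blend_brightness` and the default weight of 0.7 are placeholders for illustration, not the weights we settled on.

```python
def blend_brightness(signal_value, global_value, signal_weight=0.7):
    """Blend a signal parameter and a global parameter into one brightness.

    Assumes both inputs are normalised to [0, 1]. `signal_weight` is a
    hypothetical tuning knob; the global parameter gets the remainder.
    """
    if not 0.0 <= signal_weight <= 1.0:
        raise ValueError("signal_weight must be between 0 and 1")
    blended = signal_weight * signal_value + (1.0 - signal_weight) * global_value
    # Clamp in case an input falls slightly outside the expected range.
    return min(1.0, max(0.0, blended))


# Equal weighting of a mid-level signal value and a maximal global value.
brightness = blend_brightness(0.5, 1.0, signal_weight=0.5)
```

Benchmarking against test files would then amount to trying different `signal_weight` values and comparing how the lights look.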

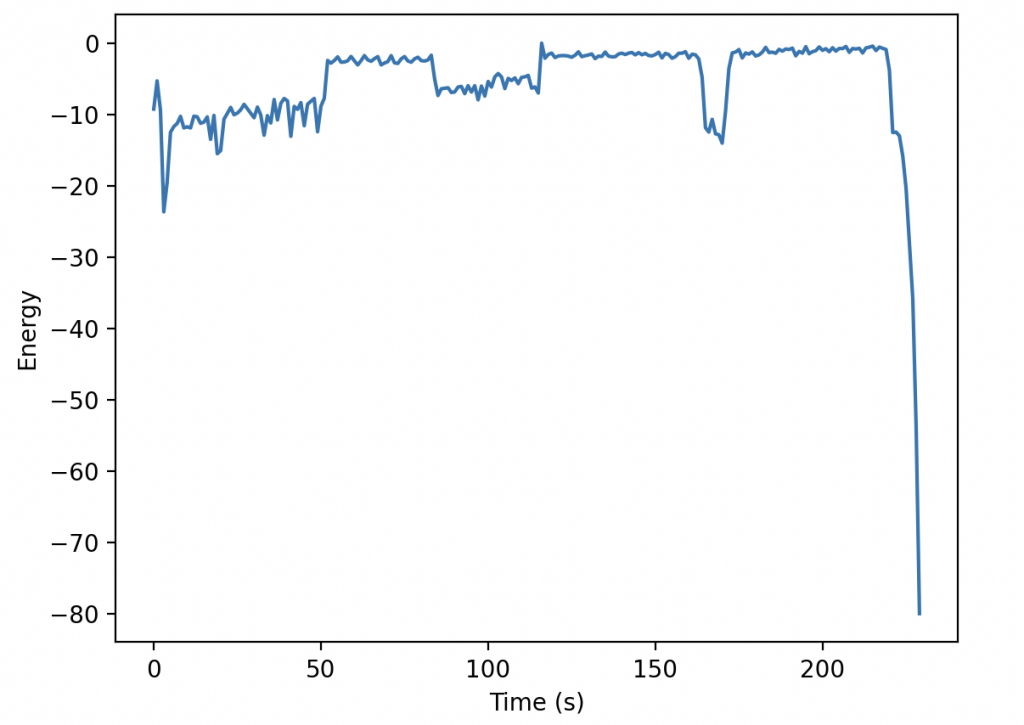

I also worked on incorporating beat detection along with energy division values to find the duration for which we want to call each effect, so that the lighting mirrors the beats as closely as possible. I was able to generate an energy plot across time using a few librosa functions. This broke the audio file into a few windows and extracted the RMS value from each to determine the average energy per frame. This is a little more specific than the amplitude plots I was able to generate.
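To show what the per-frame energy computation does, here is a dependency-free sketch of RMS over fixed windows. librosa provides `librosa.feature.rms` for this; the function `frame_rms`, the frame sizes, and the sample values below are made up for illustration and are not the module's code.

```python
import math


def frame_rms(samples, frame_length, hop_length):
    """Return the RMS energy of each full frame of `samples`.

    Frames start every `hop_length` samples and are `frame_length` long.
    A trailing partial frame is dropped (no padding is applied).
    """
    if frame_length < 1 or hop_length < 1:
        raise ValueError("frame_length and hop_length must be positive")
    energies = []
    for start in range(0, len(samples) - frame_length + 1, hop_length):
        frame = samples[start:start + frame_length]
        mean_square = sum(x * x for x in frame) / frame_length
        energies.append(math.sqrt(mean_square))
    return energies


# Made-up signal: a quiet stretch followed by a loud stretch.
quiet = [0.1, -0.1] * 4
loud = [0.5, -0.5] * 4
energies = frame_rms(quiet + loud, frame_length=4, hop_length=4)
```

The jump in `energies` between the quiet and loud halves is the kind of change the energy plot makes visible over time.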

On Track?

We are on track, and I think it's just a couple more hours of hard work to get to our desired state of using it on songs. For the interim demo, we used amplitude-modulated and pitch-modulated files to show how the brightness and saturation values changed across those two features independently.

Compared to the previous week, we made a lot of progress on the lighting logic, which is challenging to work on in general. Generating colours has made it a lot easier to visualize the other effects we are trying to incorporate, such as flashing on each beat or the frequency with which we change colours.

Goals for Next Week

Goals for next week are mostly about wrapping up the lighting logic. We have a few high-priority and low-priority goals that we want to finish for the project itself; after that, we want to run a set of tests and look at how users of the application respond to it. We want to decide how frequently to cycle colours at fast tempos, and then work out parameter selection and function selection, i.e. which functions drive which lights. We also want to integrate Spotify, and to change the way we operate the lights so that they either mirror each other or run in unison.