Personal Accomplishments

https://colab.research.google.com/drive/15RFAHvDop2-Yh4cbhbS3MnSKOgIHlB2V#scrollTo=1YY6_6ebt0I8

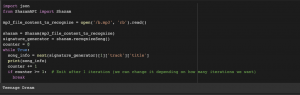

A simple test of the Shazam API that extracts the song title from an uploaded song. It uses a signature generator object, and different features can be parsed and extracted from the resulting Shazam object. In this case, it correctly identified the song Teenage Dream in one iteration, in 10 seconds.

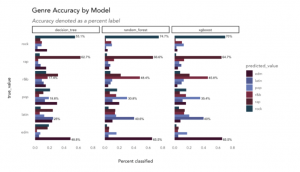

A major part of my work was to figure out what genre detection and feature extraction from Spotify would consist of. Very early in the week I realized that an ML model for genre detection is not accurate enough to rely on. An incorrectly extracted genre would be the first level of uncertainty. Deriving other features such as danceability, valence, loudness, and liveness from that genre would then compound the uncertainty and make the model even less predictable.

Preliminary look at the genre detection ML part:

The accuracies are not above 70%, which makes it difficult for us to rely on these models. So it made sense to simplify the design based on what the project needs in order to work. Based on some testing of the Shazam API and my earlier work with the Spotify API, the new workflow I think will work is to extract the song title using the ShazamAPI, and then use that song to poll the musical parameters (6 features) from the Spotify API. (The ShazamAPI exposes more attributes as well, some of which I will explore a little later.) These parameters will later be fed into the other engines. This extraction method would have to be changed a little for a microphone input.

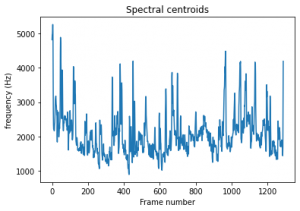

From this file: https://colab.research.google.com/drive/1hctVbgbCxK8SNuVWfW2e4kA7DtC2hZNC

I was able to get frequency bins. These represent strong harmonics, which would be useful for classifying moods and adjusting mood lighting, since the tonal content of the harmonics is informative. I am also able to get average amplitudes, which would help with the timing of the lights and with how much flash we want. The spectral centroid indicates the center of mass of the spectrum. It is known to have a robust connection with the brightness of the sound, which covaries well with valence.
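To make these three quantities concrete, here is a minimal NumPy sketch of how they could be computed from one frame of audio. It is not the code from the notebook above: the function name `spectral_features`, the Hann window, and the choice of mean absolute amplitude are all assumptions made for illustration.

```python
import numpy as np

def spectral_features(signal, sample_rate, n_peaks=5):
    """Return strongest frequency bins, average amplitude, and spectral centroid.

    Hypothetical helper for illustration; not taken from the project notebook.
    """
    signal = np.asarray(signal, dtype=float)

    # Window the frame to reduce spectral leakage, then take the magnitude spectrum.
    window = np.hanning(len(signal))
    magnitude = np.abs(np.fft.rfft(signal * window))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)

    # Frequency bins with the largest magnitude (candidate strong harmonics).
    strongest = np.argsort(magnitude)[::-1][:n_peaks]

    # Spectral centroid: magnitude-weighted mean frequency ("center of mass").
    total = magnitude.sum()
    centroid = float((freqs * magnitude).sum() / total) if total > 0 else 0.0

    return {
        "peak_frequencies_hz": freqs[strongest].tolist(),
        # Assumption: "average amplitude" is taken as the mean absolute sample value.
        "average_amplitude": float(np.mean(np.abs(signal))),
        "spectral_centroid_hz": centroid,
    }

# Usage: one second of a 440 Hz sine sampled at 8 kHz.
sr = 8000
t = np.arange(sr) / sr
features = spectral_features(np.sin(2 * np.pi * 440 * t), sr)
```

For a pure tone, the strongest bin and the centroid both sit at the tone's frequency. For real music the centroid moves up as more energy appears in the high frequencies, which is the "brightness" link mentioned above.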

On Track?

I am on track for genre classification. I might have to put in a little more work to understand how different signal processing features will correlate with the lighting setup. I am not yet sure how the FFT we are planning to do will relate to the features of the lighting. I am also presenting for the Design Presentations this time, so I might not be able to put a lot of effort into the signal processing part until after Wednesday.

Goals for Next Week

I want to be able to upload a file, run the Shazam API, feed the result into the Spotify API, get the JSON elements, and display them as musical parameters, so that I can pass them to the UI side of things. After testing with a simple file, the next goal is to run this for multiple files and different intervals to test how powerful and quick the Shazam API is at song detection.
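The planned flow could be sketched roughly as follows. This is only an outline under stated assumptions: `recognize` and `fetch_features` are hypothetical stand-ins for the Shazam and Spotify calls (they are not functions from either library), and the `WANTED` list holds only the four parameters named earlier in this report, since the full set of six is not fixed here.

```python
import time
from typing import Callable, Dict, List, Optional, Sequence

# Parameters named earlier in this report; the final set is still an open choice.
WANTED = ("danceability", "valence", "loudness", "liveness")

def extract_parameters(
    audio_path: str,
    recognize: Callable[[str], Optional[str]],         # hypothetical Shazam wrapper
    fetch_features: Callable[[str], Dict[str, float]],  # hypothetical Spotify wrapper
    wanted: Sequence[str] = WANTED,
) -> dict:
    """Identify one file, look up its features, and time the whole step."""
    start = time.perf_counter()
    title = recognize(audio_path)

    parameters: Dict[str, float] = {}
    if title is not None:
        features = fetch_features(title)
        # Keep only the parameters the UI side needs.
        parameters = {name: features[name] for name in wanted if name in features}

    return {
        "file": audio_path,
        "title": title,
        "parameters": parameters,
        "seconds": time.perf_counter() - start,
    }

def run_batch(paths: Sequence[str], recognize, fetch_features) -> List[dict]:
    """Run the same extraction over several files to compare speed and hit rate."""
    return [extract_parameters(p, recognize, fetch_features) for p in paths]
```

Passing the two API wrappers in as arguments keeps the timing and filtering logic testable with stub functions before the real Shazam and Spotify calls are wired in.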