Oscar’s status report for 4/29

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

I was trying to attach the chips to the gloves last week. I tried zip ties, but they seemed too thick to go through the gloves. I also tried sewing the chips on, but the pins on the back of the chips sometimes poked through the gloves and made them uncomfortable to wear. I think I will hot glue the chips to 3M Velcro strips and then stick the strips onto the gloves. That way, I can rearrange the components easily and take out the batteries for charging.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am behind schedule. I planned to build one glove this week, but sewing turned out to be harder than expected, and I was out of town last weekend, so I did not finish it. I will spend most of tomorrow in the lab with Yuqi to finish building the gloves.

- What deliverables do you hope to complete in the next week?

By the end of Thursday, I hope to build both gloves.

By the end of Friday, I hope to merge my code base with Karen’s and clean up dead code.

By the end of Sunday, I hope to finish all integration testing and get our system ready for the final demo.

Oscar’s status report for 4/22

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

I was trying to integrate the radio chips with my synthesizer last week. However, I could not get them to work. I have checked that neither the radio chips nor my Arduino Nano is faulty. Also, since the chips worked when Yuqi tested them with an Arduino Uno and bypass capacitors, I think the problem lies with the Arduino Nano’s current limit. The radio chip might need to draw current directly from the batteries instead of from the Nano.

Update (Sunday, 4/23)

After some debugging, the radio chips are working, and they can be powered directly by the Nanos. Here’s a demo video. Although the Nanos are powered by USB cables in the video, we’ve also verified that they can be powered by two 3.7 V LiPo batteries in series. Since the radio chips now work when connected to the Nanos with shorter wires, I think the long wires and the breadboard I used previously introduced too much noise.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am behind schedule. I had planned to debug the radio circuits and integrate them with my synthesizer this week. To catch up, I will meet with Yuqi tomorrow to fix the radio circuits.

- What deliverables do you hope to complete in the next week?

I hope to debug and integrate the radio circuits and build one glove next week.

Oscar’s status report for 4/8

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

I rewrote the synthesis section of my synthesizer. Previously, it used additive synthesis (adding sine waves of different frequencies). Now it uses wavetable synthesis (sampling an arbitrary periodic wavetable). I also added dual-channel support to my synthesizer. With wavetable synthesis, I only need two look-ups in the wavetable and one linear interpolation to generate each sample in the audio buffer. Previously, I had to sum the outputs of multiple sine functions just to generate one sample. Here’s a demo video.
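The two-look-up idea can be shown with a minimal Python sketch of a wavetable oscillator. This is not the synthesizer’s actual code. The names `make_wavetable` and `WavetableOscillator`, the 2048-entry table, and the 44.1 kHz sample rate are assumptions. For each output sample, the oscillator reads the two table entries around a fractional read position and interpolates linearly between them. It also keeps the read position between calls, so consecutive buffers join smoothly.

```python
import numpy as np

SAMPLE_RATE = 44100   # assumed output sample rate in Hz
TABLE_SIZE = 2048     # assumed wavetable length

def make_wavetable(waveform, size=TABLE_SIZE):
    """Sample one period of a periodic function over phases in [0, 2*pi)."""
    phases = np.arange(size) * (2 * np.pi / size)
    return np.asarray(waveform(phases), dtype=np.float64)

class WavetableOscillator:
    def __init__(self, table, sample_rate=SAMPLE_RATE):
        self.table = table
        self.sample_rate = sample_rate
        self.index = 0.0  # fractional read position, carried over between buffers

    def fill(self, frequency, n_samples):
        """Return n_samples of the waveform played at `frequency` Hz."""
        size = len(self.table)
        step = frequency * size / self.sample_rate  # table entries advanced per sample
        positions = (self.index + step * np.arange(n_samples)) % size
        lower = positions.astype(np.int64)           # first look-up
        upper = (lower + 1) % size                   # second look-up, wrapping at the end
        frac = positions - lower
        out = (1.0 - frac) * self.table[lower] + frac * self.table[upper]
        self.index = (self.index + step * n_samples) % size
        return out
```

Any function over one period can become a table. For example, `make_wavetable(lambda p: p / np.pi - 1.0)` gives a sawtooth. The cost per sample stays the same however many harmonics the waveform contains, which is why this approach is faster than additive synthesis.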

In short, compared to additive synthesis, wavetable synthesis is much faster and can mimic an arbitrary instrument more easily.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am a little behind schedule. This week, Karen and I decided to use color tracking instead of hand tracking to reduce the lag of our system, so we are behind on our integration schedule. However, tomorrow I will write a preliminary color-tracking program and integrate it with my synthesizer in preparation for the actual integration later.

- What deliverables do you hope to complete in the next week?

I am still hoping to integrate the radio transmission part into our system.

- Now that you are entering the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify that your contribution to the project meets the engineering design requirements or the use case requirements?

We ran a basic latency test after we merged our synthesizer and hand-tracking program. Because the lag was unbearable, we decided to switch to color tracking and wavetable synthesis. Next week, I will test the latency of the new synthesizer. Using a performance analysis tool, I found that the old synthesizer takes about 8 ms to fill the audio buffer and write it to the speaker. I will make sure that the new synthesizer has a latency under 3 ms. That leaves the color-tracking system about 7 ms (10 ms - 3 ms) of our per-frame budget to process each video frame.
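A rough way to check the 3 ms target without a full profiler is to time the buffer-filling step directly with `time.perf_counter`. This sketch is a hypothetical helper, not the performance analysis tool mentioned above. It only times the callable it is given, so writing to the speaker is excluded unless the callable includes it. The 10 ms per-frame budget is inferred from the arithmetic in the paragraph above.

```python
import time
import numpy as np

def time_buffer_fill(fill_buffer, n_trials=200):
    """Call fill_buffer() n_trials times; return (median_ms, worst_ms)."""
    durations_ms = []
    for _ in range(n_trials):
        start = time.perf_counter()
        fill_buffer()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return float(np.median(durations_ms)), max(durations_ms)

def check_budget(synth_ms, frame_budget_ms=10.0, synth_target_ms=3.0):
    """Return the time (ms) left per frame for color tracking; raise if over target."""
    if synth_ms > synth_target_ms:
        raise ValueError(f"synthesizer takes {synth_ms:.2f} ms; target is {synth_target_ms} ms")
    return frame_budget_ms - synth_ms
```

For an oscillator object `osc`, `time_buffer_fill(lambda: osc.fill(440.0, 512))` would report the median and worst-case fill time. The worst case matters more than the median, because a single slow buffer is enough to cause an audible dropout.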

Oscar’s status report for 4/1

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

I merged most of my repo with Karen’s. Right now, our program uses the index finger’s horizontal position to determine the pitch and its vertical position to determine the volume. I haven’t yet integrated the code I wrote for communicating with the gyroscope chip because of a performance issue: right now, tracking and playing notes simultaneously is a little laggy. Here’s a demo video.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am a little behind schedule this week. I was expecting to merge all of our code bases together. I will put in extra time tomorrow (Apr 2) to reduce the lag and integrate the serial communication part into our code base.

- What deliverables do you hope to complete in the next week?

Next week, I hope to integrate the radio transmission part into our system.

Oscar’s status report for 3/25

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

This week I added the pitch bend function to my synthesizer. Now, the roll angle of the gyroscope chip can bend the pitch up or down by 2 semitones. Here’s a demo video. I spent quite some time lowering the latency of serial communication. I had to design a data packet that sends the volume (pitch angle) and pitch bend (roll angle) information in one go. Otherwise, the overhead of calling serial write and serial read twice creates pauses between writes to the audio buffer and breaks up the audio.
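One way to send both angles “in one go” is a fixed-size binary packet, so the Python side needs only a single `read()` per update. The packet layout in this sketch is an assumption for illustration, not the project’s actual format. It uses a `0xAA` start byte followed by pitch and roll as little-endian 32-bit floats, for 9 bytes in total. `encode_packet` shows what the Arduino side would need to send. `read_angles` works with any object that has a `read(n)` method, such as a pySerial `Serial` port.

```python
import struct

HEADER = 0xAA                                   # hypothetical start-of-packet byte
PACKET_FORMAT = "<Bff"                          # header + pitch + roll (little-endian float32)
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)    # 9 bytes, no padding with "<"

def encode_packet(pitch_deg, roll_deg):
    """Pack both angles into one packet (what the Arduino side would send)."""
    return struct.pack(PACKET_FORMAT, HEADER, pitch_deg, roll_deg)

def decode_packet(packet):
    """Return (pitch, roll), or None if the start byte is wrong."""
    header, pitch, roll = struct.unpack(PACKET_FORMAT, packet)
    if header != HEADER:
        return None
    return pitch, roll

def read_angles(port):
    """Read one whole packet with a single read() call."""
    packet = port.read(PACKET_SIZE)
    if len(packet) != PACKET_SIZE:
        return None  # timeout or partial packet
    return decode_packet(packet)
```

This sketch does not handle dropped bytes: if one is lost, the stream falls out of alignment. A fuller version would scan for the start byte and resynchronize before decoding.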

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am on schedule. However, the integration task next week will be more challenging.

- What deliverables do you hope to complete in the next week?

Next week, I will be working with Karen to integrate all the software components together as we prepare for the interim demo. If we have some extra time, I will research ways to create oscillators based on audio samples of real instruments instead of mathematical functions.

Oscar’s status report for 3/18

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

This week I integrated the gyroscope chip into my synthesizer. Specifically, the volume is now controlled by the pitch angle of the gyroscope chip instead of the vertical position of the cursor. I spent quite some time researching Arduino libraries for the gyro IC. I tried Adafruit_MPU6050 and MPU6050_light, but neither provided the required angular accuracy. In the end, I used Tiny_MPU6050, which contains a calibration function that makes the angle readings much more reliable. After that, I fed the readings from the Arduino into my synthesizer using the Python serial library (pySerial), normalized them, and used them as my volume parameter. Here is a demo video.
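The normalization step can be sketched as a clamped linear map from angle to volume. The reports do not specify the details below, so all of them are assumptions for illustration:

- the ±45° range,
- the one-reading-per-line text format,
- the port name and baud rate.

`serial.Serial` and `readline()` come from pySerial.

```python
def angle_to_volume(pitch_deg, min_deg=-45.0, max_deg=45.0):
    """Map a pitch angle (degrees) linearly to a volume in [0, 1], clamping outside the range."""
    t = (pitch_deg - min_deg) / (max_deg - min_deg)
    return min(1.0, max(0.0, t))

def parse_line(line):
    """Parse one line such as b'12.5\\r\\n' sent by the Arduino; None if malformed or empty."""
    try:
        return float(line.decode("ascii").strip())
    except (UnicodeDecodeError, ValueError):
        return None

def stream_volumes(port_name="/dev/ttyUSB0", baudrate=115200):
    """Yield volumes from an Arduino printing one angle per line (port and baud rate assumed)."""
    import serial  # pySerial; imported here so the helpers above work without it installed
    with serial.Serial(port_name, baudrate, timeout=0.1) as port:
        while True:
            angle = parse_line(port.readline())  # returns b"" on timeout -> None
            if angle is not None:
                yield angle_to_volume(angle)
```

Clamping matters because the chip can report angles beyond the chosen range. Without it, the volume could go negative or exceed full scale.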

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am on schedule. However, because of the ethics discussion next week, I might spend some extra hours in the lab next weekend.

- What deliverables do you hope to complete in the next week?

After mapping the pitch angle to the volume, I will mainly focus on implementing real-time pitch bend next week and controlling it with the roll angle of the gyroscope IC.
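A pitch bend of ±2 semitones (the range mentioned in the 3/25 report) follows the equal-temperament relation `f_bent = f * 2**(s / 12)`, where `s` is the bend in semitones. This sketch assumes the roll angle maps linearly onto the bend and reaches the full ±2 semitones at a hypothetical ±45° of roll. The function names are illustrative.

```python
def roll_to_semitones(roll_deg, max_roll_deg=45.0, bend_range=2.0):
    """Map roll angle linearly to a bend in semitones, clamped to +/- bend_range."""
    bend = roll_deg / max_roll_deg * bend_range
    return max(-bend_range, min(bend_range, bend))

def bent_frequency(base_hz, semitones):
    """Equal temperament: each semitone multiplies the frequency by 2**(1/12)."""
    return base_hz * 2.0 ** (semitones / 12.0)
```

Working in semitones rather than hertz keeps the bend musically even across the keyboard. A 2-semitone bend from C4 and a 2-semitone bend from C5 both sound like a whole step.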

Oscar’s status report for 3/11

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

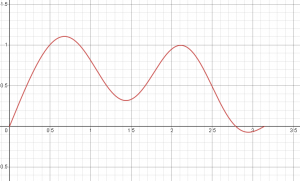

This week I added a gliding mechanism to fix the audio snap problem. Now, when the user switches to a new frequency, the synthesizer plays a sequence of in-between frequencies instead of jumping directly to the target frequency. I also rewrote the oscillator class to support customized timbre: I can now shape the sound by changing the mathematical expression of the oscillator’s waveform. In this demo video, the first oscillator uses a sine wave with a glide duration of 0.05 s. The second also uses a sine wave, with a glide duration of 0.1 s. The third one’s waveform looks like the picture above and has a glide duration of 0.15 s.
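The glide can be sketched as a continuous linear frequency ramp. This is a variant of the “sequence of in-between frequencies” described above, not necessarily how the synthesizer implements it. The frequency ramps from the old note to the new one over the glide duration. Phase is accumulated sample by sample, so the waveform never jumps. The function name, the sine waveform, and the 44.1 kHz rate are assumptions.

```python
import numpy as np

SAMPLE_RATE = 44100  # assumed output sample rate in Hz

def glide(start_hz, target_hz, glide_s, total_s, sample_rate=SAMPLE_RATE, phase=0.0):
    """Sine tone that ramps linearly from start_hz to target_hz over glide_s seconds,
    then holds target_hz. Returns (samples, phase to start the next buffer with)."""
    n = max(1, int(round(total_s * sample_rate)))
    t = np.arange(n) / sample_rate
    ramp = start_hz + (target_hz - start_hz) * t / max(glide_s, 1e-12)
    freq = np.where(t < glide_s, ramp, target_hz)    # instantaneous frequency per sample
    increments = 2 * np.pi * freq / sample_rate      # phase advance per sample
    # Accumulate phase so the waveform stays continuous while the frequency changes.
    phases = phase + np.concatenate(([0.0], np.cumsum(increments[:-1])))
    next_phase = float((phase + increments.sum()) % (2 * np.pi))
    return np.sin(phases), next_phase
```

For example, `glide(261.63, 329.63, 0.05, 0.5)` slides from C4 to E4 in 0.05 s. Returning the final phase lets the next buffer start exactly where this one ended.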

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I am on schedule. However, I do foresee a heavier workload next week as I will be adding hardware (Arduino + gyroscope chip) to software (my synthesizer).

- What deliverables do you hope to complete in the next week?

Since our gyroscope chip has arrived, I will be testing its functionality next week. If there is no problem with the chip, I will incorporate its outputs into my synthesizer. I hope to use its tilt angle to control the volume of my synthesizer by the end of next week.

Oscar’s status report for 2/25

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

This week I continued to improve the oscillator program I wrote last week to support pitch and volume control and timbre selection. Moving the mouse left and right makes the program play one of the eight notes from C4 to C5. The volume is controlled by the vertical position of the mouse. Also, the program produces a different sound when the oscillator is initialized with a different function. Here is a demo video of the functionalities above.
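The cursor-to-note mapping can be sketched by dividing the screen width into eight equal zones. The reports do not say which eight notes are used. This sketch assumes the C major scale from C4 to C5 (MIDI notes 60 to 72) and converts MIDI numbers to frequency with A4 = 440 Hz. The function names are hypothetical.

```python
C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71, 72]  # assumed: C4 D4 E4 F4 G4 A4 B4 C5

def midi_to_hz(note):
    """Equal-temperament frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)

def mouse_to_note(x, y, width, height):
    """Map cursor position to (frequency_hz, volume in [0, 1])."""
    zone = int(x / width * len(C_MAJOR_MIDI))          # which of the eight zones
    zone = max(0, min(zone, len(C_MAJOR_MIDI) - 1))    # clamp to a valid index
    volume = 1.0 - min(max(y / height, 0.0), 1.0)      # screen y grows downward
    return midi_to_hz(C_MAJOR_MIDI[zone]), volume
```

Screen coordinates usually grow downward, so the sketch inverts the volume to make the top of the screen the loudest position.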

I also spent quite a lot of time making my system compatible with both Windows and macOS. Since I do not own a MacBook, Karen helped me make sure that my program is indeed macOS compatible. Previously, I had some issues using the simpleaudio library on Windows. This was resolved by using the pyaudio library instead. However, switching libraries meant I had to rewrite the core of my synthesizer from scratch.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I think my progress is on schedule. However, using existing synthesizer software is always a backup plan. This week I looked into open-source synthesizers a little and decided that Surge is currently the best available option.

- What deliverables do you hope to complete in the next week?

I plan to continue upgrading the linear volume control into a vibrato. I will also try to add a gliding mechanism to my synthesizer so that it smooths out note transitions.

Oscar’s status report for 2/18

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

This week I continued to improve the oscillator program I wrote last week so that it supports real-time playing controlled by the mouse. While the program runs, it plays a C4 indefinitely if the cursor is on the left side of the screen. If the cursor is on the right, the program plays an E4 indefinitely. Here is a short demonstration video of it working.

I spent quite a lot of time fixing the “snaps” when looping clips of a frequency. At first I thought the problem could be fixed by trimming the generated frequency array so that the difference between its first and last elements was relatively small. However, the snap only got a little better. I then realized I had to reduce the software overhead (the actual gap in time) of repeating a frequency array, so I looked for a Python library that supports looping with the lowest overhead. I tried packages like pyaudio, simpleaudio, and pymusiclooper. However, most of these libraries loop by replaying a WAV file in an infinite loop, so the gap between repeats was still noticeable. In the end, I used a library called sounddevice that implements looping with callbacks. Since there is virtually no gap between repeats, the looping is now unnoticeable.
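Callback-based looping avoids gaps because the audio library requests the next block of samples just before it needs them, so the program only has to copy the next slice of the clip. This sketch is an illustration, not the project’s code. `LoopCallback` keeps a read position into a precomputed clip and wraps around at the end. Its `__call__` uses the `(outdata, frames, time, status)` signature that `sounddevice.OutputStream` expects for a callback. The helper `make_clip` rounds the clip length to (almost) a whole number of cycles, so the clip’s end lines up with its start. The amplitude, cycle count, and sample rate are assumptions.

```python
import numpy as np

SAMPLE_RATE = 44100  # assumed output sample rate in Hz

def make_clip(frequency, cycles=200, sample_rate=SAMPLE_RATE):
    """Sine clip spanning about `cycles` whole periods, so its end meets its start."""
    n = int(round(cycles * sample_rate / frequency))
    t = np.arange(n) / sample_rate
    return 0.2 * np.sin(2 * np.pi * frequency * t)

class LoopCallback:
    """Feeds a clip to the audio device block by block, wrapping around seamlessly."""

    def __init__(self, clip):
        self.clip = np.asarray(clip, dtype=np.float32)
        self.pos = 0  # read position, kept between callbacks

    def __call__(self, outdata, frames, time, status):
        idx = (self.pos + np.arange(frames)) % len(self.clip)  # wrap past the end
        outdata[:] = self.clip[idx][:, np.newaxis]             # same signal on every channel
        self.pos = (self.pos + frames) % len(self.clip)

def play(clip, seconds=2.0):
    """Loop the clip for `seconds` on the default output device."""
    import sounddevice as sd  # imported here so LoopCallback works without an audio device
    with sd.OutputStream(samplerate=SAMPLE_RATE, channels=1, dtype="float32",
                         callback=LoopCallback(clip)):
        sd.sleep(int(seconds * 1000))
```

For example, `play(make_clip(261.63))` would loop a C4 for two seconds. The callback runs on the audio thread, so it should only copy samples and not do slow work such as file I/O.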

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

I think my progress is on schedule. However, using existing synthesizer software is always a backup plan. In that case, I would only need to figure out how to change its parameters through my own program instead of its GUI.

- What deliverables do you hope to complete in the next week?

I plan to add two more features to my oscillator program: real-time cursor-based volume control and real-time vibrato. I plan to implement the vibrato using an envelope that performs amplitude modulation on the generated frequency array.
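The planned envelope can be sketched as multiplying the frequency array by a slow cosine. Strictly speaking, periodic amplitude modulation is usually called tremolo, while vibrato modulates pitch, so the sketch uses the name `tremolo`. The 5 Hz default rate, the depth parameter, and the function name are assumptions.

```python
import numpy as np

def tremolo(signal, rate_hz=5.0, depth=0.5, sample_rate=44100):
    """Amplitude-modulate `signal` with a cosine envelope.
    The gain swings between 1 and (1 - depth); depth=0 leaves the signal unchanged."""
    t = np.arange(len(signal)) / sample_rate
    envelope = 1.0 - depth * 0.5 * (1.0 - np.cos(2 * np.pi * rate_hz * t))
    return signal * envelope
```

Starting the envelope at full gain (the cosine is 1 at t = 0) means the effect fades in from the unmodified note rather than starting with a sudden dip.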

- Particular ECE courses that I found useful:

Signals and Systems (18-290), as it helps me understand how different musical effects work mathematically. In the end, a musical effect is just a modification of a discrete-time signal. Introduction to Computer Systems (18-213), for the programming skills I gained from that class. They help me better understand a library’s source code and therefore choose the best library for the job.

Oscar’s status report for 2/11

Besides preparing for the presentation on Wednesday, I was researching potential software/code for our synthesizer. Specifically, I was looking for the following functionalities:

- Supports playing sounds of different frequencies and volumes.

- Can apply at least one adjustable musical effect.

- Supports at least two different tone colors/instrument sounds.

I tried building a DIY synthesizer using Python libraries like pyo and synthesizer, and C++ libraries like STK. After wrestling with import errors and dependency issues, I decided to build a synthesizer based on this post. It has a step-by-step tutorial that teaches you how to build an oscillator, a modulator, and a controller in Python. I modified the code provided in the post and wrote a simple Python program that can generate numpy arrays representing sounds of arbitrary frequencies. Currently, the program plays a “C4-E4-G4” sequence on repeat.
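For reference, a “C4-E4-G4” sequence can be generated as numpy arrays in a few lines. This is an independent sketch, not the code from the tutorial post. Note frequencies come from equal temperament with A4 = 440 Hz. The 0.5 s note length, the 44.1 kHz sample rate, and the helper names are assumptions.

```python
import numpy as np

SAMPLE_RATE = 44100  # assumed output sample rate in Hz

def note_hz(name):
    """Frequency of a natural note name like 'C4', using A4 = 440 Hz."""
    semitones_from_a = {"C": -9, "D": -7, "E": -5, "F": -4, "G": -2, "A": 0, "B": 2}[name[0]]
    octave = int(name[1:])
    return 440.0 * 2.0 ** ((semitones_from_a + 12 * (octave - 4)) / 12.0)

def tone(freq, seconds, sample_rate=SAMPLE_RATE):
    """Sine wave of the given frequency as a numpy array."""
    t = np.arange(int(seconds * sample_rate)) / sample_rate
    return np.sin(2 * np.pi * freq * t)

# Three half-second notes back to back.
sequence = np.concatenate([tone(note_hz(n), 0.5) for n in ("C4", "E4", "G4")])
```

Each note starts at phase zero but generally ends at a nonzero value. Joining or looping these arrays therefore creates the kind of discontinuity the next paragraph calls a “snap”.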

My progress is on schedule, and I am planning to upgrade the synthesizer program so that it responds to real-time inputs (for example, mouse movement). I also need to fix the audio “snaps” problem: when looping a frequency array, the difference between the first and last elements causes the synthesizer to play a snap sound. I need to find a way to smooth out the snaps and make looping unnoticeable.