This week, I focused on preparing for the interim demo and verifying test results for different scents. For the interim demo, my contributions to the initial code base were the sensor readings; adding the LCD display was a good way to verify that live sensor values are displayed while the robot scans and randomly explores.

For data routing, I tested several communication methods, each of which had shortcomings. I tested communication from the Arduino to the ESP8266 Wi-Fi module using MQTT via the cloud (which lacked hardware drivers), and from the Arduino through the ESP8266 Wi-Fi module to a hosted web server, which would not work well with our high data frequency and high-speed control loop. Switching to the NodeMCU as an alternative, I also extensively explored I2C and serial communication. These had their own pros and cons, but neither provided the quick updates we need for the sensor data to be classified correctly. The unit tests helped me recognize which methods would be effective for meeting our project's use case and design requirements. ScentBot's latency requirements can only be met if we either move the sensor readings over to the NodeMCU module or host everything locally on the Arduino. Even with the first option, communicating a classification result back to the robot would add delay, slowing ScentBot's detection of the scent.

Testing with smoke and paint thinner, we found that simple thresholding and slope calculation methods will not work to differentiate between scents: the values from all of our sensors go up regardless of which of the three initial choices for our use case requirement (alcohol, paint thinner, and smoke) is placed in front of them.
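As a minimal sketch of the two methods mentioned above, the Python snippet below computes a threshold check and a least-squares slope over a short window of readings. The function names, the threshold value, and the two traces are hypothetical and made up for illustration; they are not our measured data or our Arduino code.

```python
from typing import Sequence


def exceeds_threshold(readings: Sequence[float], threshold: float) -> bool:
    """Return True if any reading in the window is above the threshold."""
    return max(readings) > threshold


def slope(readings: Sequence[float], dt: float = 1.0) -> float:
    """Least-squares slope of readings sampled every dt seconds."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two samples to compute a slope")
    times = [i * dt for i in range(n)]
    mean_t = sum(times) / n
    mean_r = sum(readings) / n
    num = sum((t - mean_t) * (r - mean_r) for t, r in zip(times, readings))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den


# Made-up illustrative traces (NOT measured data): both rise in a similar way.
alcohol_trace = [100, 140, 180, 220, 260]
thinner_trace = [100, 145, 185, 225, 265]

for name, trace in [("alcohol", alcohol_trace), ("paint thinner", thinner_trace)]:
    print(name, exceeds_threshold(trace, 200), round(slope(trace), 1))
```

When two scents produce traces this similar, both checks fire for both of them, so neither feature alone can tell the scents apart.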

This week, I generated initial datasets for alcohol and paint thinner and fed them through a naive binary classification CNN task on GCP’s Neuton Tiny ML platform. Since the Arduino Mega gives us more memory, we can now explore placing a model locally on the Arduino. The binary classification model shows promising results on the initial training data. I will complete all dataset generation by this weekend and then move on to analyzing a CNN on these different scents. The unit testing involved will be running predictions on a test dataset once we export the model, and exploring how the robot's scanning and detection mode logic must change from what we have currently.
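A rough sketch of how the test-dataset check could be scored is shown below in Python. It assumes the exported model's predictions have already been collected into a list; the `evaluate` helper and the label strings are hypothetical names for illustration, not part of the Neuton export.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def evaluate(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[float, Counter]:
    """Return (accuracy, confusion counts keyed by (true, predicted))."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot evaluate an empty test set")
    confusion = Counter(zip(y_true, y_pred))
    correct = sum(count for (t, p), count in confusion.items() if t == p)
    return correct / len(y_true), confusion


# Placeholder labels for illustration only (NOT real results).
true_labels = ["alcohol", "alcohol", "paint_thinner", "paint_thinner"]
predicted = ["alcohol", "paint_thinner", "paint_thinner", "paint_thinner"]

accuracy, confusion = evaluate(true_labels, predicted)
print(f"accuracy: {accuracy:.2f}")
for (t, p), count in sorted(confusion.items()):
    print(f"true={t} predicted={p}: {count}")
```

Keeping the per-pair counts alongside the accuracy shows which scent is being mistaken for which, which matters more than a single score when deciding how the detection logic should change.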

We also discovered interesting aspects of our robot's power drain, caused by the Arduino, LCD display, and fan all drawing from the 5V battery. As part of our final report, I would like to test the robot's battery life, since I think it is important to evaluate this from the user’s perspective. For now, we simply replace the batteries once they fall below the required threshold.

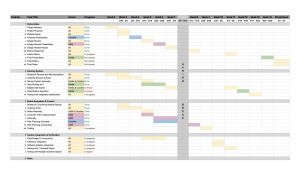

According to our updated Gantt chart, my work is on schedule: I will complete data generation this week and develop the classification model in the upcoming week. I also want to start preparing for the final presentation and thinking about the skills I must display to properly showcase the work my teammates and I have done.