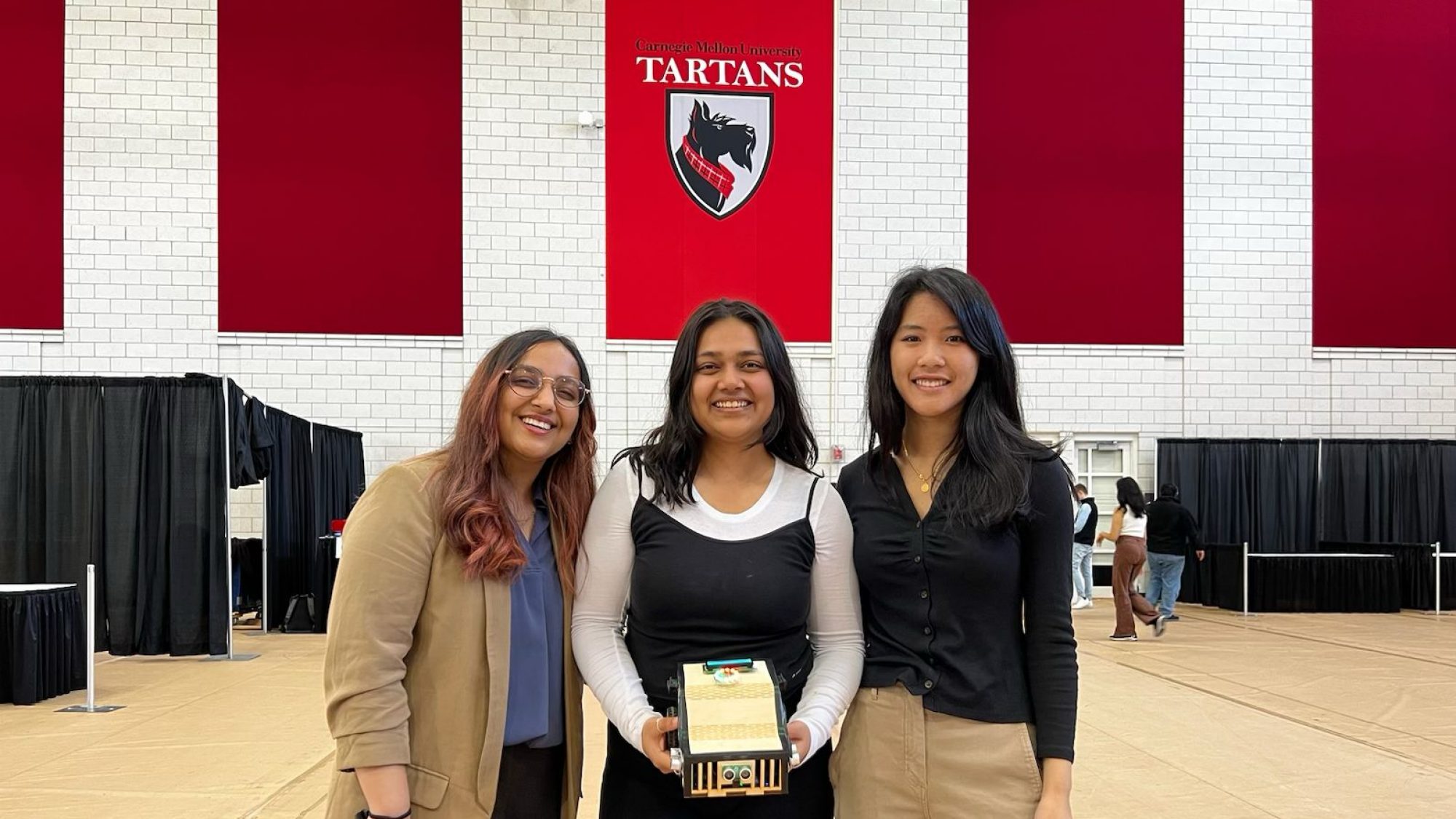

This week we started work on several aspects of our robot, including the CAD design and the Wavefront Segmentation algorithm for our path planning. We also ordered and received all the parts for our robot, and focused on how the different parts will integrate. Additionally, we researched how to connect the Arduino and Wi-Fi module to Azure, which we have chosen as the cloud platform for our ScentBot. Towards the end of the week, we compiled this information into our Design Review slides.

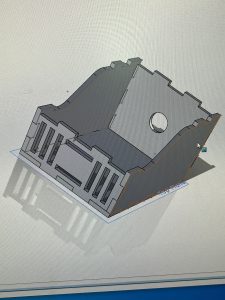

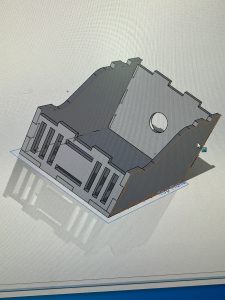

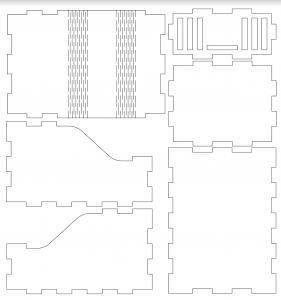

We have attached a photo of our completed CAD design.

With the parts we have ordered, we anticipate a few challenges, which we also discussed with our advisors. The main ones are achieving good motor control and getting the robot to follow a straight path, since we are assembling the robot from custom-built parts. We are also considering an alternate path planning approach because our project depends heavily on sensor sensitivity.

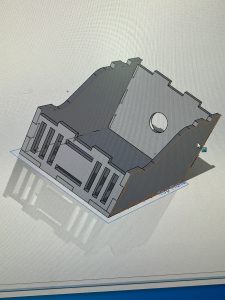

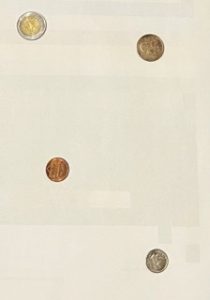

If the sensors are sensitive enough to detect an object from farther than the 0.5 m radial distance, we will change our test setup to use a single scented object. This will be placed in a scent diffuser/spray to create a radial distribution of scent for our robot to follow. The robot will “randomly” explore the map until it detects a scent, and will then move in the direction of increasing probability. The robot will receive a travel distance and angle to follow, and will reorient itself to a different angular orientation after covering this set distance. An image of our alternate testing approach is shown.
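To make the explore-then-follow idea concrete, here is a minimal sketch of how one movement command could be chosen. It is only an illustration under our own assumptions: the function name `next_move`, the detection threshold, the step length, and the turn range are all hypothetical, and the sensor reading is treated as a single number that grows as the robot gets closer to the scent source.

```python
import random


def next_move(prev_reading, reading, heading_deg,
              detect_threshold=0.1, step_m=0.1, rng=random):
    """Return (travel distance in m, heading in degrees) for the next leg.

    All default values are hypothetical placeholders, not measured settings.
    """
    if reading < detect_threshold:
        # No scent detected yet: explore with a random heading.
        return step_m, rng.uniform(0.0, 360.0) % 360.0
    if reading >= prev_reading:
        # Scent is not getting weaker: keep the current heading.
        return step_m, heading_deg
    # Scent got weaker: reorient to a clearly different heading.
    return step_m, (heading_deg + rng.uniform(90.0, 270.0)) % 360.0


if __name__ == "__main__":
    rng = random.Random(0)
    print(next_move(0.0, 0.02, 45.0, rng=rng))  # exploring
    print(next_move(0.2, 0.5, 45.0, rng=rng))   # following
    print(next_move(0.5, 0.3, 45.0, rng=rng))   # reorienting
```

Returning a distance and an angle mirrors the command format described above, so the same pair could be sent to the robot after each leg.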

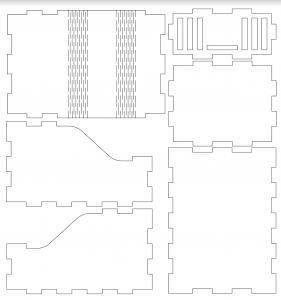

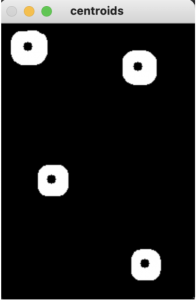

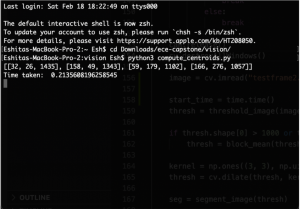

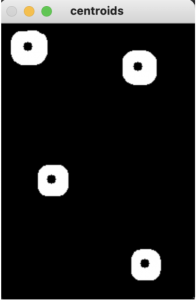

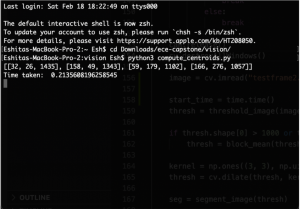

The Wavefront Segmentation algorithm processes a letter-size sheet of paper in 0.22 s on average, and can detect objects present on a white background. It thresholds the image for faster computation, then calculates and prints the centroid location of each object. One challenge we immediately faced was making sure shadows do not overlap in the image captured by the overhead camera.
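The threshold-then-label steps can be sketched as follows. This is not our exact implementation, just a small pure-Python illustration: it assumes a grayscale image given as a list of rows, objects that are darker than the white background, a hypothetical threshold of 128, and 4-connected wavefront (breadth-first) expansion from each unlabeled object pixel.

```python
from collections import deque


def wavefront_segment(gray, threshold=128):
    """Label dark objects on a light background; return (labels, centroids).

    gray: list of rows of pixel intensities (0-255).
    threshold: hypothetical cutoff; pixels below it count as object pixels.
    """
    rows, cols = len(gray), len(gray[0])
    # Thresholding turns the image into a binary object mask.
    mask = [[pixel < threshold for pixel in row] for row in gray]
    labels = [[0] * cols for _ in range(rows)]
    centroids = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or labels[r0][c0]:
                continue
            # Start a new wavefront from this unlabeled object pixel.
            label = len(centroids) + 1
            labels[r0][c0] = label
            queue = deque([(r0, c0)])
            sum_r = sum_c = count = 0
            while queue:
                r, c = queue.popleft()
                sum_r += r
                sum_c += c
                count += 1
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not labels[nr][nc]):
                        labels[nr][nc] = label
                        queue.append((nr, nc))
            # Centroid is the mean (row, col) of the object's pixels.
            centroids.append((sum_r / count, sum_c / count))
    return labels, centroids


if __name__ == "__main__":
    image = [[255] * 10 for _ in range(10)]
    for r in range(1, 3):
        for c in range(1, 3):
            image[r][c] = 0
    _, found = wavefront_segment(image)
    print(found)
```

Because every dark connected region becomes its own object, two objects whose shadows touch would be merged into one label, which is why overlapping shadows are a problem for this approach.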

To arrive at a robot design that fits our use-case requirements, we drew on research into differential-drive robots, PID control, wavefront segmentation, Runge-Kutta localization, A* graph search, visibility graphs, state machines on the Arduino, fluid dynamics and distributions, chemical compositions, and gas sensor types.