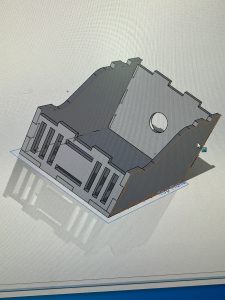

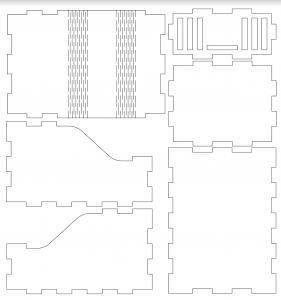

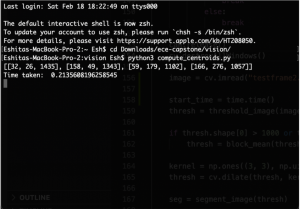

This week I focused on finalizing the robot CAD. I worked with Caroline to develop an effective layout for the parts on the robot and modeled the base plate in SolidWorks. I also researched controller strategies such as pure pursuit and PID control for robot motion, and finalized our algorithm choices: down-sampling and wavefront segmentation for vision, A* with visibility graphs for global path planning, and Runge-Kutta and PID control for motion. Courses that cover these topics include 18-370 (Fundamentals of Controls), 16-311 (Intro to Robotics), 16-385 (Computer Vision), and 18-794 (Pattern Recognition Theory).

I also started looking into alternatives to multi-threading on the Arduino, since we discovered that the robot will need to sense and move at the same time to use its run time efficiently. So far, I have found approaches that replace delay() with millis()-based timing and use OOP to build a state machine on the Arduino. Additionally, I worked on the slides for the design review and prepared to present next week. We plan to laser-cut the robot chassis tomorrow (Sunday 02/19) and assemble the robot as the parts come in. Next week I plan to start testing and calibrating the motors so that the robot can move along a fixed path. This will involve implementing the PID controller and odometry as well. Since we will be testing the alternative approach of random motion before path planning, I’ve decided to prioritize odometry over path planning for the moment.
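
To make the millis() idea concrete, here is a minimal sketch of how non-blocking timing could look. It is an illustration under assumptions, not our final firmware: the names `PeriodicTask` and `Robot`, the 50 ms and 20 ms periods, and the counters standing in for real sensor and motor code are all hypothetical. The current time is passed in as a parameter so the logic can be tried off the board; on the Arduino, `loop()` would pass in `millis()`.

```cpp
#include <stdint.h>

// Hypothetical helper: reports when a fixed period has elapsed, without blocking.
// Unsigned subtraction keeps the comparison correct when the millis() counter wraps.
class PeriodicTask {
public:
    explicit PeriodicTask(uint32_t periodMs) : periodMs_(periodMs), lastRunMs_(0) {}

    // Returns true (and restarts the period) once periodMs_ has elapsed since the last run.
    bool due(uint32_t nowMs) {
        if (static_cast<uint32_t>(nowMs - lastRunMs_) >= periodMs_) {
            lastRunMs_ = nowMs;
            return true;
        }
        return false;
    }

private:
    uint32_t periodMs_;
    uint32_t lastRunMs_;
};

// Hypothetical robot object: sensing and driving are interleaved from one update call.
struct Robot {
    PeriodicTask senseTask{50};  // assumed sensing period (ms)
    PeriodicTask driveTask{20};  // assumed motor-update period (ms)
    unsigned senseCount = 0;     // placeholder for reading sensors
    unsigned driveCount = 0;     // placeholder for updating motors

    void update(uint32_t nowMs) {
        if (senseTask.due(nowMs)) { ++senseCount; }
        if (driveTask.due(nowMs)) { ++driveCount; }
    }
};
```

On the board, the sketch's `loop()` would simply call `robot.update(millis());` on every pass, with no `delay()` calls anywhere, so neither task can stall the other. A state machine could be layered on top by having `update()` switch on the robot's current state before deciding which tasks to run.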